Using Operators to manage applications

Clearly, working with Operators involves more than simply reconciling a cluster state. The Operator Framework is an encompassing platform that lets Kubernetes developers and users solve their own unique problems, which is part of what makes Kubernetes so flexible.

A cluster administrator's first step into the Operator Framework is usually one of two tools. If no existing Operator addresses their needs, they start with the Operator SDK to develop their own. If one does exist, they start with OperatorHub.

Summarizing the Operator Framework

When developing an Operator from scratch, there are three development methods to choose from: Go, Ansible, or Helm. However, relying on Ansible or Helm alone can limit an Operator's capabilities. Helm-based Operators, in particular, are restricted to the most basic levels of functionality.

If the developer wishes to share their Operator, they will need to package it into the standard manifest bundle for OperatorHub. Following a review, their Operator will be available publicly for other users to download and install in their own clusters.

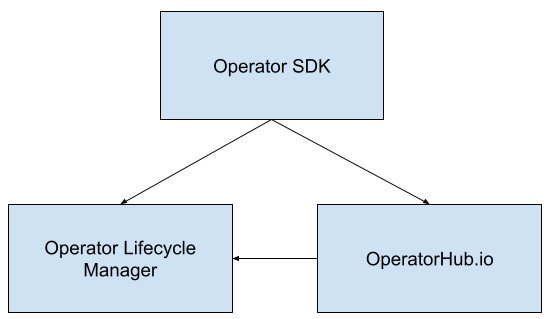

OLM then makes it easy for users to launch Operators in a cluster. These Operators can be sourced from OperatorHub or written from scratch. Either way, OLM makes Operator installation, upgrades, and management much easier. It also provides several stability benefits when working with many Operators. You can see the relationship between the three services in the following diagram:

Figure 1.3 – The relationship between the Operator SDK, OperatorHub, and OLM

Each of these pillars provides a distinct function that aids in developing Operators, and together they form the foundation of the Operator Framework. Using these pillars is the key factor that distinguishes an Operator from a normal Kubernetes controller. In short, while every Operator is essentially a controller, not every controller is an Operator.

Applying Operator capabilities

The first example in this chapter examined a simple application with three Pods and a Persistent Volume, running without Operator management. That application relied on optimistic assumptions about uptime, and on a future-proof design, to keep running continuously. In real-world deployments, these assumptions unfortunately rarely hold: designs evolve and change, and unforeseeable failures bring applications down. So how could an Operator help this app persist in an unpredictable world?

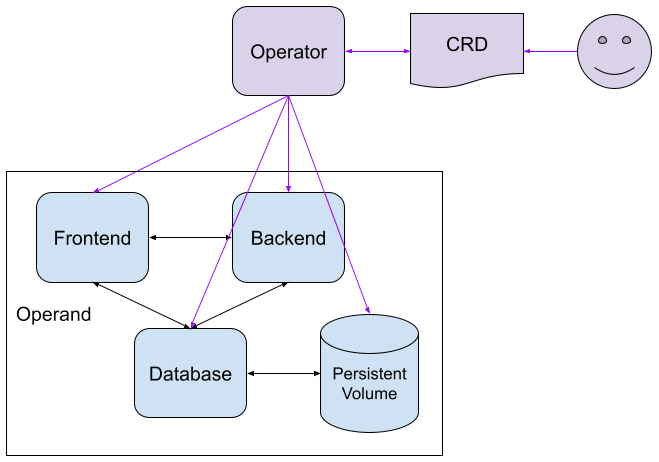

By defining a single declarative configuration, this Operator could control various settings of the application deployment in one spot. This is the reason Operators are built on CRDs. These custom objects allow developers and users to easily interact with their Operators just as if they were native Kubernetes objects. So, the first step in writing an Operator to manage our simple web application would be to define a basic code structure with a CRD that has all the settings we think we'll need. Once we have done this, the new diagram of our application will look like this:

Figure 1.4 – In the new app layout, the cluster administrator only interacts with the Operator; the Operator then manages the workload

Figure 1.4 shows how the details of the Operand deployment have been abstracted away, so they no longer require manual administrator control. Another advantage of CRDs is that more settings can be added in later versions of the Operator as our app grows. A few settings we might start with include the following:

- Database access information

- Application behavior settings

- Log level

While writing our Operator code, we'll also want to write logic for concerns such as metrics, error handling, and reporting. The Operator can also begin to communicate bidirectionally with the Operand. Not only can it install and update the Operand, but it can also receive information back from the Operand about its status and report that status in turn.