Technical requirements

For this chapter, we will rely on Google Colaboratory because it is accessible and inexpensive. Whisper’s small model requires at least 12 GB of GPU memory, so we need to secure a suitable GPU for our Colab session. Unfortunately, getting a good GPU on the free version of Google Colab, which provides a Tesla T4 with 16 GB, is becoming much harder. With Google Colab Pro, however, we should have no trouble being allocated a V100 or P100 GPU.

To request a GPU, open Google Colab’s main menu, click Runtime | Change runtime type, and then change Hardware accelerator from None to GPU.

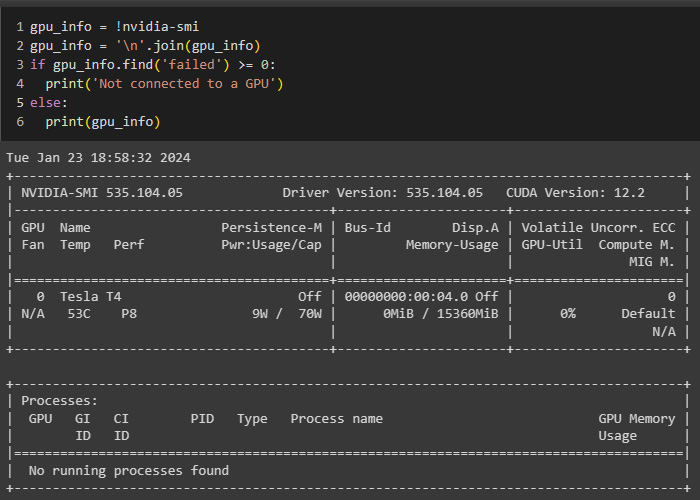
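Once a GPU runtime is enabled, the 12 GB figure mentioned earlier can also be checked programmatically. The following is a minimal sketch, not part of the original notebook: it assumes `nvidia-smi` is on the path (as it normally is on a Colab GPU runtime) and uses its `--query-gpu` CSV output. The helper names `parse_gpu_memory`, `query_gpus`, and `has_enough_memory` are hypothetical and exist only for illustration.

```python
import shutil
import subprocess

# Assumption: the 12 GB requirement quoted in this chapter, expressed in MiB,
# which is the unit nvidia-smi reports with the "nounits" option.
REQUIRED_MIB = 12 * 1024


def parse_gpu_memory(csv_text):
    """Parse 'name, memory.total' CSV rows into (name, total_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so GPU names containing commas stay intact.
        name, total = line.rsplit(",", 1)
        try:
            gpus.append((name.strip(), int(total.strip())))
        except ValueError:
            # Skip rows whose memory field is not a plain integer.
            continue
    return gpus


def query_gpus():
    """Return the visible GPUs, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_memory(result.stdout)


def has_enough_memory(gpus, required_mib=REQUIRED_MIB):
    """True if at least one GPU reports required_mib or more of total memory."""
    return any(total >= required_mib for _, total in gpus)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("Not connected to a GPU")
    for name, total in gpus:
        verdict = "OK" if total >= REQUIRED_MIB else "below the 12 GB requirement"
        print(f"{name}: {total} MiB ({verdict})")
```

Keeping the parsing separate from the `nvidia-smi` call means the check can be exercised on a sample string even on a machine without a GPU.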

We can verify that we’ve been assigned a GPU and view its specifications by running the following code:

```python
gpu_info = !nvidia-smi
gpu_info = '\n'.join(gpu_info)
if gpu_info.find('failed') >= 0:
    print('Not connected to a GPU')
else:
    print(gpu_info)
```

Here’s the output: