In this first recipe, we're going to create an app that lets the user either pick an image from the camera roll or take a new one with the camera. This gets our iOS app ready for us to incorporate Core ML to detect objects in our photos.

Getting ready

For this recipe, you'll need the latest version of Xcode available from the Mac App Store.

How to do it...

With Xcode open, let's get started:

- Create a new project in Xcode. Go to File | New | Project | iOS App.

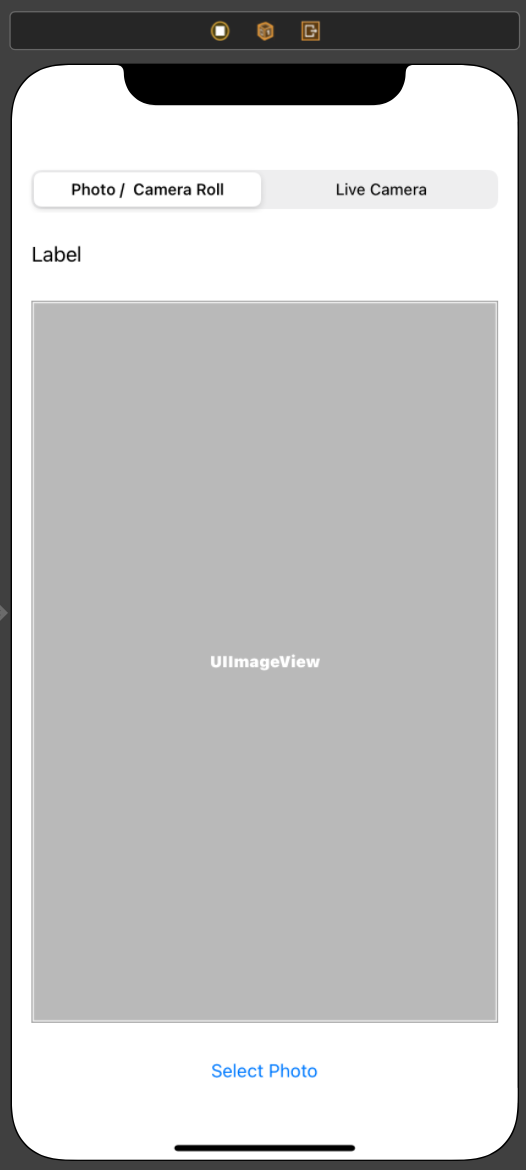

- In Main.storyboard, add the following:

  - Add a UISegmentedControl with two segments (Photo / Camera Roll and Live Camera).

  - Next, add a UILabel just underneath.

  - Add a UIImageView beneath that.

  - Finally, add a UIButton.

- Space these out using Auto Layout constraints, with the UIImageView as the most prominent element:

Figure 11.1 – Camera/photo app
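If you'd rather see the layout expressed in code, the storyboard steps above can be approximated programmatically. The following is only a sketch under stated assumptions: the class name `LayoutSketchViewController`, the property names, the button title, and the spacing constants are all hypothetical and are not taken from the recipe, which builds the same arrangement in Main.storyboard. It assumes iOS 13 or later.

```swift
import UIKit

// Hypothetical view controller that mirrors the storyboard layout in code.
// All names and constants here are illustrative assumptions.
final class LayoutSketchViewController: UIViewController {

    let sourceControl = UISegmentedControl(items: ["Photo / Camera Roll", "Live Camera"])
    let resultLabel = UILabel()
    let imageView = UIImageView()
    let actionButton = UIButton(type: .system)

    override func viewDidLoad() {
        super.viewDidLoad()
        view.backgroundColor = .systemBackground

        sourceControl.selectedSegmentIndex = 0
        resultLabel.textAlignment = .center
        imageView.contentMode = .scaleAspectFit
        actionButton.setTitle("Select Photo", for: .normal) // assumed title

        // Let the image view stretch or shrink before the other views do,
        // so it takes up whatever vertical space is left over.
        imageView.setContentHuggingPriority(.fittingSizeLevel, for: .vertical)
        imageView.setContentCompressionResistancePriority(.defaultLow, for: .vertical)

        for subview in [sourceControl, resultLabel, imageView, actionButton] as [UIView] {
            subview.translatesAutoresizingMaskIntoConstraints = false
            view.addSubview(subview)
        }

        let guide = view.safeAreaLayoutGuide
        NSLayoutConstraint.activate([
            // Segmented control pinned to the top of the safe area.
            sourceControl.topAnchor.constraint(equalTo: guide.topAnchor, constant: 16),
            sourceControl.leadingAnchor.constraint(equalTo: guide.leadingAnchor, constant: 16),
            sourceControl.trailingAnchor.constraint(equalTo: guide.trailingAnchor, constant: -16),

            // Label just underneath the segmented control.
            resultLabel.topAnchor.constraint(equalTo: sourceControl.bottomAnchor, constant: 12),
            resultLabel.leadingAnchor.constraint(equalTo: sourceControl.leadingAnchor),
            resultLabel.trailingAnchor.constraint(equalTo: sourceControl.trailingAnchor),

            // Image view fills the space between the label and the button.
            imageView.topAnchor.constraint(equalTo: resultLabel.bottomAnchor, constant: 12),
            imageView.leadingAnchor.constraint(equalTo: sourceControl.leadingAnchor),
            imageView.trailingAnchor.constraint(equalTo: sourceControl.trailingAnchor),

            // Button anchored to the bottom of the safe area.
            actionButton.topAnchor.constraint(equalTo: imageView.bottomAnchor, constant: 12),
            actionButton.centerXAnchor.constraint(equalTo: guide.centerXAnchor),
            actionButton.bottomAnchor.constraint(equalTo: guide.bottomAnchor, constant: -16)
        ])
    }
}
```

Lowering the image view's vertical hugging and compression-resistance priorities is one way to make it the prominent element: the segmented control, label, and button keep their intrinsic heights, and the image view absorbs the remaining space. In Interface Builder, the same effect comes from the equivalent constraints and priority settings.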

- Once we have this in place, let's hook these up to our ViewController.swift file:

@IBOutlet weak var imageView...