Going cross platform

The main idea is to make cross-platform development work in a What You See (on a PC) is What You Get (on a device) fashion: most of the application logic can be developed in a familiar desktop environment such as Windows, and built for Android with the NDK whenever necessary.

Getting ready

To achieve this, we have to implement an abstraction layer on top of the NDK, POSIX, and the Windows API. Such an abstraction should provide at least the following:

- Ability to render buffer contents on the screen: Our framework should provide functions to copy the contents of an off-screen framebuffer (a 2D array of pixels) to the screen (on Windows, "the screen" means the application window).

- Event handling: The framework must be able to process multi-touch input, virtual and physical key presses (some Android devices, such as the Toshiba AC 100, the Ouya console, and other gaming devices, have physical buttons), timer events, and completions of asynchronous operations.

- Filesystem, networking, and audio playback: The abstraction layers for these subsystems require a substantial amount of work, so their implementations are presented in Chapter 3, Networking, Chapter 4, Organizing a Virtual Filesystem, and Chapter 5, Cross-platform Audio Streaming.
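To make the event-handling requirement concrete, here is a minimal sketch in plain C of what the platform-independent side of such an abstraction might look like. The `Event` structure, the `EventType` enumeration, the `GameCallbacks` table, and `DispatchEvent()` are hypothetical names invented for this illustration. Only the `On...()` callback names match the ones used later in this recipe, and their `void` return types are an assumption. In this design, a platform layer (WinAPI on the desktop, JNI on Android) translates native events into `Event` values and hands them to the dispatcher.

```c
/* Hypothetical platform-neutral event kinds */
typedef enum { EVT_KEY_DOWN, EVT_KEY_UP, EVT_MOUSE_DOWN, EVT_MOUSE_MOVE, EVT_MOUSE_UP } EventType;

typedef struct {
    EventType type;
    int key;   /* key code, for keyboard events */
    int x, y;  /* pointer coordinates, for mouse/touch events */
} Event;

/* Table of portable game callbacks (assumed void return types) */
typedef struct {
    void (*OnKeyDown)(int key);
    void (*OnKeyUp)(int key);
    void (*OnMouseDown)(int x, int y);
    void (*OnMouseMove)(int x, int y);
    void (*OnMouseUp)(int x, int y);
} GameCallbacks;

/* Forwards one event to the matching callback.
   Returns 1 if a callback was invoked, 0 if none was registered. */
int DispatchEvent(const GameCallbacks* cb, const Event* e)
{
    switch (e->type) {
    case EVT_KEY_DOWN:
        if (cb->OnKeyDown) { cb->OnKeyDown(e->key); return 1; }
        break;
    case EVT_KEY_UP:
        if (cb->OnKeyUp) { cb->OnKeyUp(e->key); return 1; }
        break;
    case EVT_MOUSE_DOWN:
        if (cb->OnMouseDown) { cb->OnMouseDown(e->x, e->y); return 1; }
        break;
    case EVT_MOUSE_MOVE:
        if (cb->OnMouseMove) { cb->OnMouseMove(e->x, e->y); return 1; }
        break;
    case EVT_MOUSE_UP:
        if (cb->OnMouseUp) { cb->OnMouseUp(e->x, e->y); return 1; }
        break;
    }
    return 0;
}

/* Example game-side state and callbacks, for illustration only */
static int g_LastKey = -1, g_LastX = -1, g_LastY = -1;
static void DemoKeyUp(int key)          { g_LastKey = key; }
static void DemoMouseMove(int x, int y) { g_LastX = x; g_LastY = y; }
```

The function-pointer table is a choice made for this sketch so that a game (or a test) can plug in its own handlers. The recipe itself calls plain global functions such as `OnKeyUp()` directly.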

How to do it...

- Let us proceed to write a minimal application for the Windows environment, since we already have an application for Android (for example, App1). A minimalistic Windows GUI application is one that creates a single window and starts the event loop (see the following example in Win_Min1/main.c):

    #include <windows.h>
    #include <string.h>   /* memset() */

    LRESULT CALLBACK MyFunc( HWND h, UINT msg, WPARAM w, LPARAM p )
    {
        if ( msg == WM_DESTROY ) { PostQuitMessage( 0 ); }
        return DefWindowProc( h, msg, w, p );
    }

    char WinName[] = "MyWin";

- The entry point differs from the Android one. However, its purpose remains the same: to initialize surface rendering and invoke callbacks:

    int main()
    {
        OnStart();

        /* Register the window class; WinName is the global declared above */
        WNDCLASS wcl;
        memset( &wcl, 0, sizeof( WNDCLASS ) );
        wcl.lpszClassName = WinName;
        wcl.lpfnWndProc = MyFunc;
        wcl.hCursor = LoadCursor( NULL, IDC_ARROW );
        if ( !RegisterClass( &wcl ) ) { return 0; }

        RECT Rect;
        Rect.left = 0;
        Rect.top = 0;

- The size of the window client area is predefined by the ImageWidth and ImageHeight constants. However, the WinAPI function CreateWindowA() accepts not the size of the client area, but the size of the whole window, which includes the caption, borders, and other decorations. We need to adjust the window rectangle so that the client area gets the desired size:

        Rect.right = ImageWidth;
        Rect.bottom = ImageHeight;
        DWORD dwStyle = WS_OVERLAPPEDWINDOW;
        /* Grow Rect so that its client area is ImageWidth x ImageHeight */
        AdjustWindowRect( &Rect, dwStyle, FALSE );
        int WinWidth = Rect.right - Rect.left;
        int WinHeight = Rect.bottom - Rect.top;
        HWND hWnd = CreateWindowA( WinName, "App3", dwStyle, 100, 100, WinWidth, WinHeight, 0, NULL, NULL, NULL );
        ShowWindow( hWnd, SW_SHOW );
        HDC dc = GetDC( hWnd );

- Create the off-screen device context and the bitmap that holds our off-screen framebuffer:

        hMemDC = CreateCompatibleDC( dc );
        hTmpBmp = CreateCompatibleBitmap( dc, ImageWidth, ImageHeight );
        /* Zeroing the header also leaves biCompression == BI_RGB (0) */
        memset( &BmpInfo.bmiHeader, 0, sizeof( BITMAPINFOHEADER ) );
        BmpInfo.bmiHeader.biSize = sizeof( BITMAPINFOHEADER );
        BmpInfo.bmiHeader.biWidth = ImageWidth;
        BmpInfo.bmiHeader.biHeight = ImageHeight;
        BmpInfo.bmiHeader.biPlanes = 1;
        BmpInfo.bmiHeader.biBitCount = 32;
        BmpInfo.bmiHeader.biSizeImage = ImageWidth * ImageHeight * 4;
        UpdateWindow( hWnd );

- After the application's window is created, we have to run a typical message loop:

        MSG msg;
        /* GetMessage() returns 0 on WM_QUIT and -1 on error */
        while ( GetMessage( &msg, NULL, 0, 0 ) > 0 )
        {
            TranslateMessage( &msg );
            DispatchMessage( &msg );
        }
        …
    }

- This program handles only the window destruction event and does not render anything. It can be compiled with a single command:

>gcc -o main.exe main.c -lgdi32
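One detail of the bitmap header set up above is easy to miss. For a DIB, a positive biHeight denotes a bottom-up bitmap whose first row in memory is the bottom row of the image, and a negative biHeight denotes a top-down bitmap. Because the header uses biHeight = ImageHeight, row 0 of the framebuffer ends up at the bottom of the window. The sketch below shows one way to address a pixel by top-left screen coordinates in either layout. `PixelOffset()` is a hypothetical helper, not part of the recipe.

```c
#include <stddef.h>

/* Byte offset of pixel (x, y) in a tightly packed 32-bit framebuffer,
   where y is counted from the TOP of the image (screen convention).
   bottomUp != 0 models a DIB with a positive biHeight: the first row
   in memory is displayed at the bottom of the window.
   32-bit rows need no padding, so the stride is simply width * 4. */
size_t PixelOffset(int x, int y, int width, int height, int bottomUp)
{
    int row = bottomUp ? (height - 1 - y) : y;
    return ((size_t)row * (size_t)width + (size_t)x) * 4;
}
```

If biHeight were set to -ImageHeight instead, the DIB would be top-down and `bottomUp` would be 0.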

How it works…

To render a framebuffer on the screen, we need a so-called memory device context with an associated bitmap, plus a WM_PAINT handler in the window function that copies this bitmap to the window.

To handle keyboard and mouse events, we extend the window function of the previous program with a switch statement that handles WM_KEYUP, WM_KEYDOWN, WM_LBUTTONDOWN, WM_MOUSEMOVE, and WM_LBUTTONUP. The actual event handling is performed in the externally provided routines OnKeyUp(), OnKeyDown(), OnMouseDown(), OnMouseMove(), and OnMouseUp(), which contain our game logic.
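The mouse messages pack the cursor position into LPARAM. The x coordinate is in the low-order word and the y coordinate is in the high-order word, both relative to the client area. Because the window captures the mouse with SetCapture() while the button is held, the pointer can leave the client area and the coordinates can become negative. They must therefore be read as signed 16-bit values, which is what the GET_X_LPARAM and GET_Y_LPARAM macros from <windowsx.h> do. The portable sketch below reproduces that decoding on a plain 32-bit integer so that it can be tested on any platform. `MouseX()` and `MouseY()` are hypothetical names. LPARAM is pointer-sized on 64-bit Windows, and only its low 32 bits are modeled here.

```c
#include <stdint.h>

/* Interprets the low 16 bits of v as a signed (two's complement) value:
   0x0064 -> 100, 0xFFFE -> -2. Plain arithmetic is used, so no
   implementation-defined narrowing conversion is involved. */
static int SignExtend16(uint32_t v)
{
    int low = (int)(v & 0xFFFFu);
    return (low >= 0x8000) ? low - 0x10000 : low;
}

/* x coordinate (low-order word) of a mouse message's LPARAM */
int MouseX(uint32_t lParam) { return SignExtend16(lParam); }

/* y coordinate (high-order word) of a mouse message's LPARAM */
int MouseY(uint32_t lParam) { return SignExtend16(lParam >> 16); }
```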

The following is the complete source code of the program (parts similar to the previous example are omitted). The functions OnMouseMove(), OnMouseDown(), and OnMouseUp() accept two integer arguments that hold the current coordinates of the mouse pointer. The functions OnKeyUp() and OnKeyDown() accept a single argument, the code of the pressed (or released) key:

#include <windows.h>

HDC hMemDC;
HBITMAP hTmpBmp;
BITMAPINFO BmpInfo;

The following global variable holds our RGBA framebuffer:

unsigned char* g_FrameBuffer;
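The listing does not show where g_FrameBuffer is allocated or how its pixels are packed. The sketch below assumes a heap allocation of ImageWidth*ImageHeight*4 bytes (the same size as biSizeImage) and shows one way to pack a color. `AllocFrameBuffer()` and `PackPixel()` are hypothetical helpers. For a 32-bit BI_RGB DIB, GDI takes blue from the least significant byte of each 32-bit pixel, followed by green and red, and the high byte is not used for display. As a result, a pixel packed as 0xAARRGGBB is what SetDIBits() expects, even though the buffer is called "RGBA" here.

```c
#include <stdint.h>
#include <stdlib.h>

/* Allocates a zero-filled framebuffer of width * height pixels,
   4 bytes per pixel. Release it with free(). */
unsigned char* AllocFrameBuffer(int width, int height)
{
    return (unsigned char*)calloc((size_t)width * (size_t)height, 4);
}

/* Packs a color as the 32-bit value 0xAARRGGBB: blue in the lowest byte,
   then green and red, matching the 32-bit BI_RGB DIB layout. */
uint32_t PackPixel(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
    return ((uint32_t)a << 24) | ((uint32_t)r << 16) |
           ((uint32_t)g << 8)  |  (uint32_t)b;
}
```

The DrawFrame() routine below builds its gray pixels in exactly this layout, with the alpha byte set to 0xFF.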

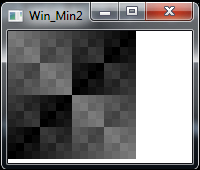

All OS-independent frame rendering is done in the DrawFrame() callback. Here, it draws a simple XOR pattern (http://lodev.org/cgtutor/xortexture.html) into the framebuffer:

void DrawFrame()
{
    int x, y;
    for ( y = 0 ; y < ImageHeight ; y++ )
    {
        for ( x = 0 ; x < ImageWidth ; x++ )
        {
            int Ofs = y * ImageWidth + x;
            /* XOR of the coordinates gives the gray level */
            unsigned int c = ( x ^ y ) & 0xFF;
            /* Same value in all three color channels, opaque alpha */
            unsigned int RGB = ( c << 16 ) | ( c << 8 ) | ( c << 0 ) | 0xFF000000;
            ( ( unsigned int* )g_FrameBuffer )[ Ofs ] = RGB;
        }
    }
}

The following code shows the WinAPI window function:

LRESULT CALLBACK MyFunc( HWND h, UINT msg, WPARAM w, LPARAM p )
{
    PAINTSTRUCT ps;
    HDC dc;
    /* Mouse coordinates are packed into LPARAM as signed 16-bit values */
    int x = (short)LOWORD( p );
    int y = (short)HIWORD( p );

    switch ( msg )
    {
    case WM_DESTROY:
        PostQuitMessage( 0 );
        break;
    case WM_KEYUP:
        OnKeyUp( w );
        break;
    case WM_KEYDOWN:
        OnKeyDown( w );
        break;
    case WM_LBUTTONDOWN:
        SetCapture( h );
        OnMouseDown( x, y );
        break;
    case WM_MOUSEMOVE:
        OnMouseMove( x, y );
        break;
    case WM_LBUTTONUP:
        OnMouseUp( x, y );
        ReleaseCapture();
        break;
    case WM_PAINT:
        dc = BeginPaint( h, &ps );
        DrawFrame();
        /* Transfer g_FrameBuffer to the bitmap */
        SetDIBits( hMemDC, hTmpBmp, 0, ImageHeight, g_FrameBuffer, &BmpInfo, DIB_RGB_COLORS );
        SelectObject( hMemDC, hTmpBmp );
        /* Copy the bitmap to the window surface */
        BitBlt( dc, 0, 0, ImageWidth, ImageHeight, hMemDC, 0, 0, SRCCOPY );
        EndPaint( h, &ps );
        break;
    }
    return DefWindowProc( h, msg, w, p );
}

Since our project contains a makefile, the compilation can be done with a single command:

>make all

Running this program should produce the result as shown in the following screenshot, which shows the Win_Min2 example running on Windows:

There's more…

The main difference between the Android and Windows implementations of the main loop can be summarized as follows. On Windows, we are in control of the main loop. We literally declare a loop that pulls messages from the system, handles input, updates the game state, and renders the frame (marked green in the following figure). Each stage invokes an appropriate callback from our portable game (denoted with blue color in the following figure). In contrast, Android works entirely differently. The main loop is moved out of the native code and lives inside the Java Activity and GLSurfaceView classes. It invokes the JNI callbacks that we implement in our wrapper native library (shown in red). The native wrapper, in turn, invokes our portable game callbacks. Let's summarize it in the following way:

The rest of the book is built around this architecture, and the game functionality will be implemented inside these portable On...() callbacks.
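The Windows side of this architecture can be summarized in portable form. The loop pulls pending input, then updates the game state, then renders a frame, calling into the game at each stage. The sketch below is not the book's implementation. The `DesktopLoop` structure, `RunDesktopLoop()`, and all callback names are hypothetical, and function pointers are used only so that a scripted stand-in can replace WinAPI. On Android, no such loop would exist in native code. The Java side would drive equivalent callbacks through JNI instead.

```c
#include <stddef.h>

/* Hooks used by a desktop-style main loop. The platform side supplies
   PollKey/ShouldQuit; the portable game supplies the On... callbacks. */
typedef struct {
    int  (*PollKey)(int* key);   /* returns 1 and a key while input is pending */
    int  (*ShouldQuit)(void);    /* returns 1 once the window has been closed */
    void (*OnKeyDown)(int key);  /* game: handle input */
    void (*OnUpdate)(void);      /* game: advance the game state */
    void (*OnRender)(void);      /* game: draw into the framebuffer */
} DesktopLoop;

/* Runs up to maxFrames iterations; returns the number of frames rendered. */
int RunDesktopLoop(const DesktopLoop* loop, int maxFrames)
{
    int frames = 0;
    int key;
    while (frames < maxFrames && !loop->ShouldQuit()) {
        while (loop->PollKey(&key))  /* 1. pull pending input from the "system" */
            loop->OnKeyDown(key);
        loop->OnUpdate();            /* 2. update the game state */
        loop->OnRender();            /* 3. render the frame */
        frames++;
    }
    return frames;
}

/* Scripted stand-ins for the platform and the game, used only to exercise the loop */
static const int s_Keys[] = { 65, 66 };
static size_t s_NextKey = 0;
static int s_KeySum = 0, s_Updates = 0, s_Renders = 0;

static int DemoPollKey(int* key)
{
    if (s_NextKey < sizeof s_Keys / sizeof s_Keys[0]) { *key = s_Keys[s_NextKey++]; return 1; }
    return 0;
}
static int  DemoShouldQuit(void) { return s_Updates >= 3; }  /* "close" after 3 updates */
static void DemoKeyDown(int key) { s_KeySum += key; }
static void DemoUpdate(void)     { s_Updates++; }
static void DemoRender(void)     { s_Renders++; }
```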

One more important note: on Windows, animation can be driven by timer events, using the SetTimer() call and a WM_TIMER message handler. We get to that in Chapter 2, Porting Common Libraries, when we discuss rigid body physics simulations. However, it is much better to organize a fixed time-step main loop, which is explained later in the book.
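The book's fixed time-step loop is covered later. As a generic illustration only, not the book's implementation, a common variant accumulates the real elapsed time and advances the simulation in constant increments. `FixedStepCount()` is a hypothetical helper that shows this bookkeeping with integer milliseconds. It also caps the number of steps per frame so that a slow frame cannot trigger an ever-growing backlog.

```c
/* Adds the elapsed frame time to the accumulator and returns how many
   fixed-size update steps to run now (stepMs must be positive).
   If the cap maxSteps is reached and whole steps are still pending,
   they are discarded and only the sub-step remainder is kept. */
int FixedStepCount(int* accumulatorMs, int frameMs, int stepMs, int maxSteps)
{
    int steps = 0;
    *accumulatorMs += frameMs;
    while (*accumulatorMs >= stepMs && steps < maxSteps) {
        *accumulatorMs -= stepMs;
        steps++;
    }
    if (*accumulatorMs >= stepMs)      /* still behind: drop the backlog */
        *accumulatorMs %= stepMs;
    return steps;
}
```

In each frame, the platform loop would measure the elapsed time, call `FixedStepCount()`, invoke the game's update callback that many times, and then render once.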

See also

- Chapter 6, Unifying OpenGL ES 3 and OpenGL 3

- The recipe Implementing the main loop in Chapter 8, Writing a Match-3 Game