Chapter 11. Build Your Own Supercomputer with Raspberry Pi

In the previous chapter, we learned how to get the Raspberry Pi to communicate with the Internet. We learned how to set up the Twitter API and how to send out tweets using it. We also learned how to save data in a database and retrieve it later. Finally, we combined the two concepts with the weather station we built in Chapter 9, Grove Sensors and the Raspberry Pi, to create a tweeting weather station that also saves the temperature and humidity data in a database.

In all the chapters prior to this one, we explored the Raspberry Pi functionalities. But this chapter will be different in the sense that we will learn how to combine two or more Raspberry Pi devices to create a more powerful computer. Indeed, if you combine enough Raspberry Pi devices, you can make your own supercomputer! Sounds interesting? We will cover the following in this chapter:

- Using a network switch to create a static network for Pi devices

- Installing and setting up MPICH2 and MPI4PY and running on a single node

- Using the static network of multiple Pi devices to create an MPI (short for Message Passing Interface) cluster

- Performance benchmarking of the cluster

- Running GalaxSee (N Body simulation)

Introducing a Pi-based supercomputer

The basic requirements for the building of a supercomputer are as follows:

- Multiple processors

- A mechanism to interconnect them

We already have multiple processors in the form of multiple Raspberry Pi devices. We connect them via a networking hub, which is connected to each Pi via an Ethernet cable. For our purposes, a networking hub is simply a device with several Ethernet ports, such as a switch or a router. There are dedicated networking hubs that can support many connections, but for the purposes of this chapter, we can use a normal Wi-Fi router that offers four LAN ports in addition to wireless connections, because we will not build a cluster of more than four Pi devices. You are welcome to add more devices to this cluster in order to increase your computing power. So, the hardware requirements for this chapter are as follows:

- Three Raspberry Pi devices

- Three Raspbian-installed SD cards

- A networking hub

- Three Ethernet cables

- Power supply for every device

Installing and configuring MPICH2 and MPI4PY

Before we can begin installing the libraries to a network of multiple Pi devices, we need to configure our Raspbian installation to make things a bit easier.

Boot up a Raspberry Pi and in the terminal, enter the following command:

sudo raspi-config

It is assumed that you have followed all the setup steps that are preferred on first starting up the Raspberry Pi. These are as follows:

- Expand the filesystem.

- Overclock the system (this is optional). We will be using a Pi overclocked to 800MHz as shown below:

- Now, enter the advanced menu by selecting Advanced Options. We need to configure the following:

- Set the hostname to Pi1.

- Enable SSH.

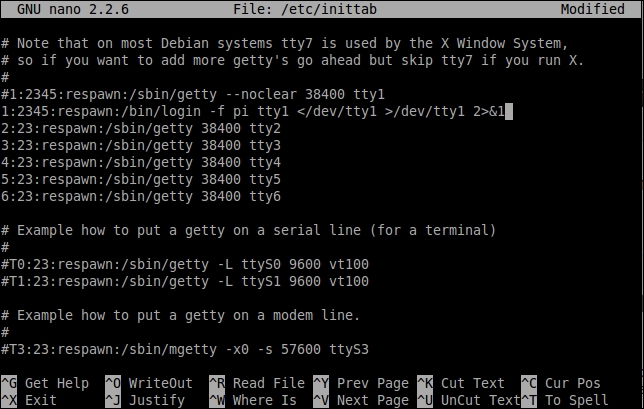

We need to enable auto login so that we do not need to manually log in to every Pi once we fire it up. Auto login is enabled by default in the latest versions of the Raspbian operating system, so the following procedure is only needed on older versions. To enable auto login, exit the configuration menu, but don't reboot yet. Then, open the inittab file with the following command:

sudo nano /etc/inittab

Comment out the following line:

1:2345:respawn:/sbin/getty --noclear 38400 tty1

This is done so that it looks like this:

#1:2345:respawn:/sbin/getty --noclear 38400 tty1

Then, add the following line:

1:2345:respawn:/bin/login -f pi tty1 </dev/tty1 >/dev/tty1 2>&1

Save the file and reboot the Pi with sudo reboot. When it boots up, you should be auto-logged in.

We are now ready to proceed and begin installing the software that is required to run a cluster of computers.

Installing the MPICH library

MPICH is a freely available, easily portable, and widely used implementation of the MPI standard, which is a message passing protocol for distributed memory applications used in parallel computing. Work on the first version of MPICH started around 1992, and the library is currently in its third major version. MPICH is one of the most popular implementations of the MPI standard because it is used as a foundation for a vast majority of MPI implementations, including ones by IBM, Cray, Microsoft, and so on.

Note

To know more about the MPICH library, visit http://www.mpich.org/.

At the time of writing, the latest version of MPICH is 3.2, and the installation instructions presented here are for that version. However, they should work on all the sub-versions of MPICH3 and most of the later versions of MPICH2.

To begin the installation, ensure that the Raspberry Pi has a valid Internet connection. Run the following commands in order to get a working installation of MPICH:

- Update your Raspbian distribution:

sudo apt-get update

- Create a directory to store the MPICH files:

mkdir ~/mpich

cd ~/mpich

- Get the MPICH tar file:

wget http://www.mpich.org/static/downloads/3.2/mpich-3.2.tar.gz

- Extract the tar archive:

tar xfz mpich-3.2.tar.gz

- Create the directories that will hold the MPICH installation and the build:

sudo mkdir /home/rpimpi/

sudo mkdir /home/rpimpi/mpi-install

mkdir /home/pi/mpi-build

cd /home/pi/mpi-build

- Install gfortran, because Fortran is a dependency for MPI:

sudo apt-get install gfortran

Now, since we created a build folder for the MPICH installation earlier, we need to configure the installation script and point it to the folder where we want the interface to be installed. We do this by running the configure script and setting the build prefix to the installation directory:

sudo /home/pi/mpich/mpich-3.2/configure --prefix=/home/rpimpi/mpi-install

Finally, we carry out the actual installation.

sudo make

sudo make install

Note

Keep in mind that this build might take about an hour to complete. Make sure that the Raspberry Pi stays on for the entire period of the build.

Edit the bashrc file so that the path to the MPICH installation is loaded to the PATH variable every time we open a new terminal window. To do that, open the file in the terminal with the following command:

nano ~/.bashrc

Then, add the following line at the end:

PATH=$PATH:/home/rpimpi/mpi-install/bin

Save and exit the bashrc file by pressing Ctrl + X, then Y to confirm the changes, and then Return. We will now test the installation. For that, reboot the Pi:

sudo reboot

Then, run the following command:

mpiexec -n 1 hostname

If the preceding command returns Pi1, or whatever you set your hostname to be, then our MPICH installation is successful and we can proceed to the next step.

We will now look at a short C program that uses MPICH. This is given as follows:

#include "mpi.h"

#include <stdio.h>

int main(int argc, char *argv[]) {

int rank, size;

/* Start the MPI runtime; MPI_Init takes pointers to argc and argv. */

MPI_Init(&argc, &argv);

/* Rank of this process within MPI_COMM_WORLD. */

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

/* Total number of processes in MPI_COMM_WORLD. */

MPI_Comm_size(MPI_COMM_WORLD, &size);

printf("I have %d rank and %d size \n", rank, size);

/* Shut the MPI runtime down cleanly. */

MPI_Finalize();

return 0;

}

This program can be started on a single node or on several nodes at once. Each MPI process that runs it discovers the total number of processes in the job and its own rank among them, and prints both.

The MPI_Init() function initializes the MPI process on our computer, using the information about the hosts in the network that it is given at launch. The MPI_Comm_rank() function and the MPI_Comm_size() function get the rank of the current process and the total number of processes, and save them in the rank and size variables, respectively. The MPI_Finalize() function is nothing but an exit routine that ends the MPI process cleanly.

On its own, MPICH can be used from the C and Fortran languages, which might be a hindrance for a lot of users. But fret not! We can use MPICH in Python by installing the Python API called MPI4PY.

Installing MPI4PY

The conventional way of installing the mpi4py module from a package manager such as apt-get or aptitude does not work because it tries to install the Open MPI library along with it, which conflicts with the existing installation of MPICH.

So, we have to install it manually. We do this by first cloning the repository from BitBucket:

git clone https://bitbucket.org/mpi4py/mpi4py

Make sure that all the dependencies for the library are met. These are as follows:

- Python 2.6 or higher

- A functional MPICH installation

- Cython

The first two dependencies are already met. We can install Cython with the following command:

sudo apt-get install cython

The following commands will install mpi4py with the setup.py installation script:

cd mpi4py

python setup.py build

python setup.py install

Add the path to the installation of mpi4py to PYTHONPATH so that the Python environment knows where the installation files are located. This ensures that we are easily able to import mpi4py into our Python applications:

export PYTHONPATH=/home/pi/mpi4py

Finally, run a test script to test the installation:

python demo/helloworld.py
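For comparison with the C program shown earlier, the same rank and size query might look like this in Python. This is a minimal sketch rather than the contents of the demo script: the function name rank_and_size is hypothetical, and the fallback to a single process when mpi4py cannot be imported is an assumption added so that the file can also be tried on a machine without MPI.

```python
# Sketch only: a Python counterpart of the earlier C program.
try:
    from mpi4py import MPI
except ImportError:
    # Assumption: fall back to a one-process view when mpi4py is unavailable.
    MPI = None


def rank_and_size():
    """Return (rank, size) for this process (hypothetical helper)."""
    if MPI is None:
        return 0, 1
    comm = MPI.COMM_WORLD
    return comm.Get_rank(), comm.Get_size()


if __name__ == "__main__":
    rank, size = rank_and_size()
    print("I have %d rank and %d size" % (rank, size))
```

Saved under a name of your choice, the file can be started with mpiexec in the same way as the hostname test.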

Now that we have completely set up the system to use MPICH, we need to do this for every Pi we wish to use. We could run all of the earlier commands on freshly installed SD cards, but the better option is to copy the card we prepared earlier onto blank SD cards. This saves us the hassle of setting up each system from scratch. But remember to change the hostname to match our standard scheme (such as Pi01 and Pi02) after the freshly copied SD card has been booted in a new Raspberry Pi. For Windows, there is an easy-to-use tool called Win32 Disk Imager, which we can use to clone the card into an .img file and then copy it to another blank SD card. If you are using OS X or Linux, then the following procedure should be followed:

- Copy the OS from an existing card:

sudo dd bs=4M if=/dev/disk2 of=~/Desktop/raspi.img

Note

Replace disk2 with the device name of your own SD card.

- Remove the card and insert a new card.

- Unmount the card.

- Format the SD card.

- Copy the OS to the SD card:

sudo dd bs=4M if=~/Desktop/raspi.img of=/dev/disk2

Note

To know more about the mpi4py library and to have a look at the various demos, you can visit the official Git repository on BitBucket at https://bitbucket.org/mpi4py/mpi4py.

Setting up the Raspberry Pi cluster

Now that we have a running Raspbian distribution on all the Raspberry Pi devices that we wish to use, we will connect them to create a cluster. We need a networking hub that has at least as many LAN ports as there are nodes in the cluster. For the purposes of this chapter, we will use three Raspberry Pis, so we need a hub that has at least three LAN ports.

If you have a Wi-Fi router, then it should already have some ports at the back, as shown in the following image:

We also need three networking cables with Ethernet connectors, where one end goes into the Raspberry Pi and the other goes into the networking hub. They will look like the ones shown in the following image:

Connect one end of the Ethernet cable to the Raspberry Pi and the other end to the router for all the devices. Once we complete this networking setup, we can move on to connecting the Raspberry Pi devices with software.

Setting up SSH access from the host to the client

In the setup described here, one master node will control the other slave nodes. The master is called the host and the slaves are called clients. To access the client from the host, we use something called SSH. It is used to get the terminal access to the client from the host, and the command used is as follows:

ssh pi@192.168.1.5

Here, the preceding IP address can be replaced with the IP address of our Raspberry Pi. The IP address of a Pi can be found by simply opening up a new terminal and entering the following command:

ifconfig

This will give you the IP address associated with your connected network and the interface, which is eth0 in our case. A small problem with using SSH this way is that every time we try to log in to a client, we need to enter a password. To remove this restriction, we need to authorize the master to log in to the client. We do that by generating an RSA key pair on the master and then transferring the public key to the client. Each key pair is unique, and hence, whenever a client receives an SSH request from a master whose public key it has authorized, it will not ask for the password. To do that, we must first generate a public/private key pair on the master, which is done using a command in the following format:

ssh-keygen -t rsa -C "your_email@youremail.com"

It will look something like this:

To transfer this key to the slave Raspberry Pis, we need to know each of their IP addresses. This is easily done by logging in to each Pi and entering ifconfig.

Next, we need to copy our keys to the slave Raspberry Pi. This is done by the following command, replacing the given IP address with the IP address of your own Raspberry Pi:

cat ~/.ssh/id_rsa.pub | ssh pi@192.168.1.5 "mkdir .ssh;cat >> .ssh/authorized_keys"

Now that we have copied our keys to the slave Raspberry Pi, we should be able to log in without a password with the standard SSH command:

ssh pi@192.168.1.5
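To make clearer what the copy command does on the client, here is a minimal Python sketch of the same effect: it creates the .ssh directory if needed and appends a public key to authorized_keys, skipping keys that are already present. The function name authorize_key and the sample key text are hypothetical, and tightening the file permissions is an assumption based on common SSH server defaults rather than something the command above does.

```python
import os


def authorize_key(public_key, ssh_dir):
    """Append public_key to ssh_dir/authorized_keys unless it is already listed.

    Returns True if the key was added, False if it was already present.
    """
    if not os.path.isdir(ssh_dir):
        os.makedirs(ssh_dir, 0o700)  # private directory for SSH files
    path = os.path.join(ssh_dir, "authorized_keys")
    key = public_key.strip()

    existing = []
    if os.path.exists(path):
        with open(path) as handle:
            existing = [line.strip() for line in handle]
    if key in existing:
        return False

    with open(path, "a") as handle:
        handle.write(key + "\n")
    os.chmod(path, 0o600)  # assumption: restrict access to the owner
    return True
```

Unlike the one-line shell command, this version can be run repeatedly without adding the same key twice.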

Running code in parallel

We have now successfully completed the prerequisites for the running of code on multiple machines. We will now learn how to run our code on the Raspberry Pi cluster using the MPICH library. We will first run a demo included with the mpi4py module, and then we will run an N-Body simulation on our cluster. Sounds fun? Let's get into it.

First, we need to tell the MPICH library the IP address of each of the Pis in our network. This is done by simply creating a new file called machinefile in the home folder, which contains a list of the IP addresses of all the Raspberry Pis connected to our network. Execute the following command to create the file:

nano machinefile

Then, add the IP address of each Raspberry Pi in a new line so that the final result looks like this:

Note that we have added three IP addresses because two Raspberry Pis are slaves and one is a master. So, the MPICH library knows that it needs to run the code on these three devices. Also, note that MPICH does not differentiate between devices. It just needs a valid installation of the library on each of them to run properly. This means that we can add different versions of Raspberry Pi to our cluster or even other computers such as a BeagleBone, provided that they have a valid installation of MPICH.
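If the list of nodes changes often, the file can also be produced by a few lines of Python. The following is a minimal sketch that assumes the simplest layout, one address per line; the function name render_machinefile and the addresses in the usage example are hypothetical, so substitute the addresses reported by ifconfig on your own devices.

```python
import sys


def render_machinefile(addresses):
    """Return machinefile text with one address per line.

    Blank entries and repeated addresses are dropped; order is preserved.
    """
    unique = []
    for address in addresses:
        address = address.strip()
        if address and address not in unique:
            unique.append(address)
    return "".join(address + "\n" for address in unique)


if __name__ == "__main__":
    # Hypothetical addresses for a master and two slaves.
    nodes = ["192.168.1.5", "192.168.1.6", "192.168.1.7"]
    sys.stdout.write(render_machinefile(nodes))
```

Redirecting the output of the script into ~/machinefile gives the same result as typing the addresses into nano.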

To test our setup, we navigate to the mpi4py/demo directory with the following command:

cd ~/mpi4py/demo

Then, we run the following command:

mpiexec -f ~/machinefile -n 3 python helloworld.py

It will give an output similar to this:

Performance benchmarking of the cluster

Since we have successfully created a cluster of Raspberry Pis, we now need to test their performance. One way of doing that is to measure the latency between different nodes. To this end, Ohio State University has created some benchmarking tests that are included with the mpi4py library. We will run a few of these to measure the performance of our cluster. Simply put, latency measures the amount of time a packet takes to reach its destination and get a response. The lower the latency, the better a cluster will perform. The tests are given in the demo folder of the mpi4py directory. These run in this fashion: the osu_bcast benchmark measures the latency of the MPI_Bcast collective operation across N processes. It measures the minimum, maximum, and average latency for various message lengths and over a large number of iterations.

To run the osu_allgather.py test, we execute the following command:

mpiexec -f ~/machinefile -n 3 python osu_allgather.py

As an interesting experiment, try to gather the latency variation with different sizes of data. It should give you a unique insight into the functioning of the network.
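To see how minimum, maximum, and average figures of this kind are derived from raw timings, consider the following minimal sketch. It is not the OSU benchmark code: the helper names time_calls and summarize are hypothetical, and the operation being timed is a local function call rather than an MPI operation, so it only illustrates the bookkeeping.

```python
import time

# Use a high-resolution timer where available (assumption: older Pythons lack it).
_clock = getattr(time, "perf_counter", time.time)


def time_calls(operation, iterations=100):
    """Run operation() repeatedly and return the duration of each call in seconds."""
    samples = []
    for _ in range(iterations):
        start = _clock()
        operation()
        samples.append(_clock() - start)
    return samples


def summarize(samples):
    """Return (minimum, maximum, average) of a non-empty list of timings."""
    return min(samples), max(samples), sum(samples) / float(len(samples))


if __name__ == "__main__":
    payload = b"x" * 1024  # stand-in for a message of a given size
    timings = time_calls(lambda: payload.upper(), iterations=1000)
    print("min %.9f max %.9f avg %.9f" % summarize(timings))
```

Repeating the measurement with different payload sizes gives a table of size against latency, which is the shape of output the benchmark scripts produce.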

Introducing N-Body simulations

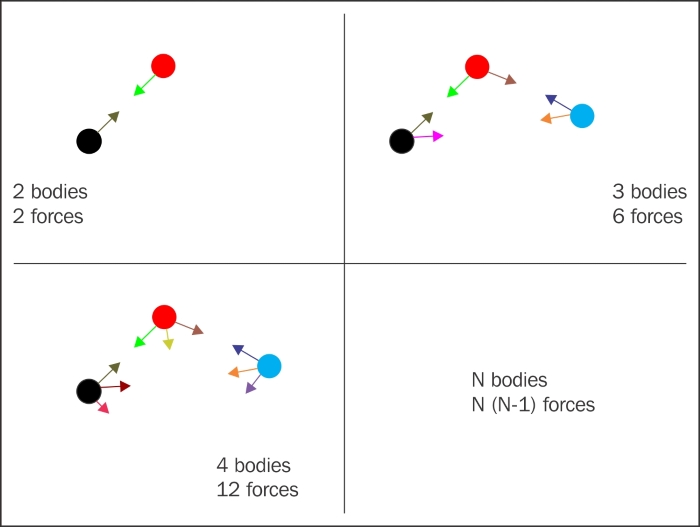

An N-Body simulation is a simulation of a dynamic system of particles that are under the influence of physical forces, such as gravity or magnetism. It is mostly used in astrophysics to study processes involving nonlinear structure formation, such as the formation of galaxies, planets, and suns. It is also used to study the evolution of the large-scale structure of the universe, and to estimate the dynamics of few-body systems such as the earth, moon, and sun.

Now, N-Body simulations require a lot of computational resources. For example, a 10-body system has 10*9 = 90 forces that need to be computed at each step of the simulation. As we increase the number of particles, the number of forces grows quadratically: an N-body system has N*(N-1) of them. So a 100-body system requires 9,900 forces to be calculated at each step. That is why N-Body simulations are generally run on powerful computers. However, we are going to use our Raspberry Pi cluster to accomplish this task because it has roughly three times the computational power of a single Raspberry Pi. For this task, we will use an open source library called GalaxSee.
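The two counts quoted above both come from the same expression: every body feels one force from each of the other bodies, which gives N*(N-1) forces for N bodies. A minimal sketch that reproduces the numbers (the function name pairwise_forces is hypothetical):

```python
def pairwise_forces(bodies):
    """Number of forces in a system where every body acts on every other body."""
    return bodies * (bodies - 1)


if __name__ == "__main__":
    for count in (10, 100, 1000):
        print("%d bodies: %d forces" % (count, pairwise_forces(count)))
```

Multiplying the number of bodies by ten multiplies the number of forces by roughly a hundred, which is why the workload grows so quickly.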

GalaxSee models a galaxy as discrete bodies interacting through gravity. The acceleration of an object is given by the sum of the forces acting on that object, divided by its mass. If you know the acceleration of a body, you can calculate the change in velocity, and if you know the velocity, you can calculate the change in the position. Consequently, you will have the position of each object at every point of time. The more objects you have, the more forces you will need to calculate, and every object must know about every other object. To reduce the computational load on a single processor, the GalaxSee code implements a simple form of parallelism. The most computationally heavy part is calculating the forces on a single object, and hence, each client performs that calculation given the object's information and the information about each object that exerts force on it. The master then collects the computed forces from the clients.
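The chain from forces to acceleration to velocity to position can be sketched in a few lines of Python. This is an illustration under stated assumptions and not the GalaxSee source: it works in two dimensions, uses units in which the gravitational constant is 1, adds a small softening term to avoid division by zero, and advances time with a simple Euler-style step. All names are hypothetical.

```python
import math

G = 1.0  # assumption: units chosen so that the gravitational constant is 1


def accelerations(positions, masses, softening=1e-3):
    """Acceleration of each body: summed gravitational pull of all other bodies."""
    result = [[0.0, 0.0] for _ in positions]
    for i, (xi, yi) in enumerate(positions):
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            dist_sq = dx * dx + dy * dy + softening ** 2
            # Force on i divided by the mass of i leaves G * m_j / r^2,
            # directed along (dx, dy) / r.
            scale = G * masses[j] / (dist_sq * math.sqrt(dist_sq))
            result[i][0] += scale * dx
            result[i][1] += scale * dy
    return result


def step(positions, velocities, masses, dt):
    """Advance the system by dt: acceleration updates velocity, velocity updates position."""
    acc = accelerations(positions, masses)
    new_velocities = [[v[0] + a[0] * dt, v[1] + a[1] * dt]
                      for v, a in zip(velocities, acc)]
    new_positions = [[p[0] + v[0] * dt, p[1] + v[1] * dt]
                     for p, v in zip(positions, new_velocities)]
    return new_positions, new_velocities
```

Calling step repeatedly yields the position of every body at each point in time; the doubly nested loop in accelerations is the part that a parallel implementation spreads across nodes.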

In this way, we can run an N-Body simulation with code running in parallel. Next, we will see how to install and get GalaxSee running.
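One simple way to divide such work is to give each node a contiguous block of bodies whose forces it computes. The sketch below shows that bookkeeping only; it is an assumption about how work could be split, not a description of how GalaxSee actually assigns bodies, and the function name partition is hypothetical.

```python
def partition(total_bodies, workers):
    """Split body indices 0..total_bodies-1 into near-equal (start, stop) ranges.

    Each range is half-open: it covers indices start up to, but not including, stop.
    """
    base, extra = divmod(total_bodies, workers)
    ranges = []
    start = 0
    for worker in range(workers):
        # The first `extra` workers take one additional body each.
        count = base + (1 if worker < extra else 0)
        ranges.append((start, start + count))
        start += count
    return ranges


if __name__ == "__main__":
    for rank, (start, stop) in enumerate(partition(200, 3)):
        print("node %d computes forces for bodies %d to %d" % (rank, start, stop - 1))
```

With 200 bodies and three nodes, no node receives more than 67 bodies, so each one evaluates about a third of the forces.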

Note

To learn more about the GalaxSee library, visit https://www.shodor.org/master/galaxsee/.

Installing and running GalaxSee

Now that we have a basic idea of what an N-Body simulation is and how different bodies interact with each other, we will proceed to the installation of the GalaxSee library.

First, we need to download the archive of the source code. We can do this with the wget command:

wget http://www.shodor.org/refdesk/Resources/Tutorials/MPIExamples/Gal.tgz

Next, we extract the .tgz archive, assuming it was downloaded to the home folder. If the path is different, we need to enter the correct path:

tar -xvzf ~/Gal.tgz

Navigate to the folder:

cd Gal

We need to edit the Makefile to successfully build GalaxSee on our Raspberry Pi. To do that, open the file in the terminal:

nano Makefile

Then, change the line:

cc = mpicc

to:

cc = mpic++

This is done so that the final result looks like this:

To build the program, simply run this:

make

Now if you enter ls inside the Gal folder, you will see a newly created executable file named GalaxSee. Run the file with the following command:

./GalaxSee

This will execute the program and will open a new window that looks like the following:

Here, the white dots are the bodies that are interacting with each other with the force of gravity. There are a few options that you can configure in GalaxSee to change the number of bodies or their interaction. For example, run the following command:

./GalaxSee 200 400 10000

Here, the first argument to the program, 200, gives the number of bodies to be simulated. The second argument gives the speed of the simulation. The higher the number, the higher the speed. The third argument gives the amount of time to run the simulation in milliseconds. So, in the preceding command, we will simulate 200 bodies for 10 seconds. Obviously, we can experiment with different variables and see how these variables affect the simulation.

The preceding example was running on a single Raspberry Pi. But as promised, we will now see how to run it on a cluster. It may come as a surprise that the program can run on a cluster of Raspberry Pi devices with a single command, and one that we've seen before. The following command does the trick:

mpiexec -f ~/machinefile -n 3 ./GalaxSee

In the preceding command, mpiexec initiates the MPI process on our host computer. Here, we give it three pieces of information that it needs to execute the GalaxSee program. These are as follows:

- The path to the file containing the IP addresses for each device in the network

- The number of devices to be used

- The path to the executable file

The -f option specifies the machinefile. The -n option specifies the number of devices to be used, and finally, we give the GalaxSee executable file to it.
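When the same launch has to be repeated with different settings, the command line can be assembled in Python. This is a minimal sketch: the function name build_mpiexec_command is hypothetical, and it only builds the argument list from the -f and -n options described above without running anything.

```python
import os


def build_mpiexec_command(machinefile, processes, program, program_args=()):
    """Return the mpiexec argument list for the given machinefile, count, and program."""
    command = [
        "mpiexec",
        "-f", os.path.expanduser(machinefile),  # file listing the node addresses
        "-n", str(processes),                   # number of processes to start
        program,
    ]
    command.extend(str(arg) for arg in program_args)
    return command


if __name__ == "__main__":
    print(" ".join(build_mpiexec_command("~/machinefile", 3, "./GalaxSee")))
```

The resulting list can be passed to subprocess.call from the Gal folder to start the run.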

Try it for yourself! If you increase the number of bodies in the simulation on a single Raspberry Pi, it will probably run slower. But if you try the same on a cluster, it should run faster because we now have a lot more computational resources at our disposal.

Summary

This chapter was unique in the sense that we learned how to execute software not on a single Raspberry Pi but on multiple ones. We learned the advantages of using parallel computation and learned how to build our own network of clustered Raspberry Pi devices. We also learned how to install the libraries required for parallel computations so that we can run our own software in the cluster. We learned how to configure the Raspberry Pis in our network to communicate with each other and make the communication hassle-free. Once we set up a system, we tested it to measure the response in the form of latency.

Finally, we learned about the concept of N-Body simulation and configured an open source program to simulate it. We also saw how increasing the number of bodies in the simulation could affect the speed of the program's execution.

In the next chapter, we will expand upon this knowledge about clusters and learn about advanced networking concepts, such as DNS and DHCP. We will learn how to add functionalities such as a domain name service and auto detection of nodes and how to initiate a remote shutdown of the cluster.