Mission Briefing

In this project, we will learn how to connect a Microsoft Kinect to a computer and use its depth imaging and user tracking from Processing. In the first task, we will quickly walk through the installation of the OpenNI framework, which the Processing library SimpleOpenNI depends on. We will then learn how to use the depth image from the Kinect's infrared camera and the player tracking function in Processing.

Then, we will use the so-called skeleton tracker to locate not only the user in front of the camera, but also the positions of the user's head, neck, and elbows. These 3D coordinates will allow us to control a stick figure.

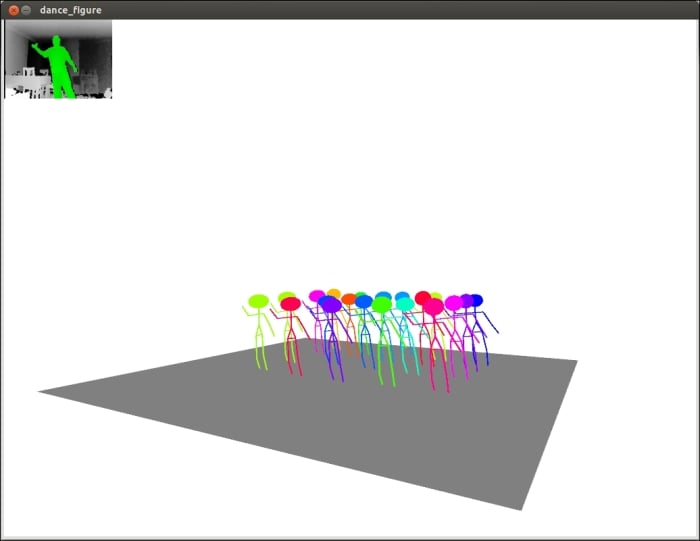

In the final task of this mission, we will add a group of dancers that are also controlled by the 3D coordinates of the players' limbs.

You can see a screenshot of the final sketch here:

Why Is It Awesome?

Kinect opens up many new possibilities for interacting with a computer. It enables the player to control a computer by simply moving...