Object recognition from the camera

This change involves a couple of steps. First, we’ll introduce a component that can capture video from the browser. We’ll create a CameraDetect.vue component in the ./components folder: https://github.com/PacktPublishing/Building-Real-world-Web-Applications-with-Vue.js-3/blob/main/09.tensorflow/.notes/9.13-CameraDetect.vue.

The code in the CameraDetect.vue component uses composables from the @vueuse package to interact with the browser’s MediaDevices API (enumerateDevices and getUserMedia). We’re using useDevicesList to list the available cameras (lines 33–40) and populate a <v-select /> component (lines 4–14). This allows the user to switch between available cameras.

For security reasons, the user needs to activate a camera manually, and again after switching between cameras. The button in the component toggles the camera stream (lines 44–46). We use watchEffect to pipe the stream into the video reference (lines 48–50), which the <video /> element in the template (line 20) uses to display the camera feed to the user.

Our stream is the replacement for the file upload of our prototype. We already have our store prepared to detect objects, so now, we’ll connect the stream to the detect function.

Detecting and recognizing objects on a stream

One of the changes from our prototype is the way we provide images to the object recognition method. Using a stream means that we need to process input continuously, as fast as the browser can handle it.

Recognizing objects

Our detect method from objectStore needs to be able to determine if the recognized objects are the objects we are looking for. We’ll add some capabilities to the function in the object.ts file:

    // ...abbreviated
    const detect = async (img: any, className?: string) => {
      try {
        detected.value = []
        const result = await cocoSsdModel.detect(img)
        // With a className: keep only confident matches of that class.
        // Without one: keep every recognized object.
        const filter = className
          ? (item: DetectedObject) => item.score >= config.DETECTION_ACCURACY_THRESHOLD && item.class === className
          : () => true
        // Sort the remaining objects by score, highest first
        detected.value = result
          .filter(filter)
          .sort((a: DetectedObject, b: DetectedObject) => b.score - a.score)
      } catch (e) {
        // handle error if model is not loaded
      }
    };
    // ...abbreviated

Here, we’re adding an optional parameter called className. If it’s provided, we define a filter function. The filter is applied to the collection of recognized objects. If no className is provided, that filter function just defaults to returning true, which means it doesn’t filter out any objects. We only do this to provide backward compatibility for the <ImageDetect /> component.
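To see the filter in isolation, here is a minimal sketch that runs outside the store. The selectMatches function name, the trimmed-down DetectedObject interface, the threshold value of 0.7, and the sample data are all assumptions made for illustration; in the app, the threshold comes from config.DETECTION_ACCURACY_THRESHOLD and the objects come from the model.

```typescript
// Trimmed-down shape of a recognized object (assumption: only the fields we filter on).
interface DetectedObject {
  class: string;
  score: number;
}

// Hypothetical value; the app reads this from config.DETECTION_ACCURACY_THRESHOLD.
const DETECTION_ACCURACY_THRESHOLD = 0.7;

// Hypothetical helper mirroring the filter-and-sort step of detect.
function selectMatches(result: DetectedObject[], className?: string): DetectedObject[] {
  const filter = className
    ? (item: DetectedObject) =>
        item.score >= DETECTION_ACCURACY_THRESHOLD && item.class === className
    : () => true;
  // filter() returns a new array, so sorting does not reorder `result` itself
  return result.filter(filter).sort((a, b) => b.score - a.score);
}

// Made-up sample data
const sample: DetectedObject[] = [
  { class: "cup", score: 0.55 },
  { class: "person", score: 0.91 },
  { class: "cup", score: 0.82 },
];

// Only the cup that meets the threshold remains
const cups = selectMatches(sample, "cup");

// Without a className, nothing is filtered out; the list is only sorted
const everything = selectMatches(sample);
```

Note that the threshold only applies when a className is passed, which is why the low-scoring cup still appears in the unfiltered result.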

Note

When working on existing code bases, you have to keep these sorts of compatibility issues in mind while developing. In our case, backward compatibility was needed for a prototype function, so it’s not vital for our app. I’m highlighting this because, in large-scale applications with low test coverage, you may run into these solutions.

With our changes to the object.ts file, we can pass the stream to objectStore.

Detecting objects from the stream

We’ll begin by passing the video stream’s contents to our updated detect function from objectStore. We’ll also include gameStore so that we can pass the current category as the className property. Let’s add these lines to the CameraDetect.vue file to get ourselves set up:

    import { ref, watchEffect, watch } from "vue";
    // ...abbreviated
    import { storeToRefs } from "pinia";
    import { useObjectStore } from "@/store/object";
    import { useGameStore } from "@/store/game";

    const objectStore = useObjectStore();
    const { detected } = storeToRefs(objectStore);
    const { detect } = objectStore;

    const gameStore = useGameStore();
    const { currentCategory } = storeToRefs(gameStore);
    // ...abbreviated

Don’t forget about the watch hook that we import from Vue; we’ll need it to monitor camera activity! Next, we’ll add a function called detectObject to our scripts:

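    const detectObject = async (): Promise<void> => {
      // Skip detection while the parent has disabled it
      if (!props.disabled) {
        await detect(video.value, currentCategory.value);
      }
      // Queue the next run for the browser's next animation frame
      window.requestAnimationFrame(detectObject);
    };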

What’s happening here? We’ve created a function that keeps rescheduling itself: each run calls the detect method with the video and currentCategory values and then queues the next run. To throttle the calls, we’re using window.requestAnimationFrame (https://developer.mozilla.org/en-US/docs/Web/API/window/requestAnimationFrame). Normally, this API is used for animations: the browser invokes the callback before its next repaint, typically in step with the display’s refresh rate. Because we await detect before queuing the next frame, a new detection never starts before the previous one has finished. This is perfect for our use case as well!
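The scheduling pattern can be sketched on its own, without a camera or a browser. In this sketch, createLoop, the Schedule type, and the isDisabled callback are hypothetical names introduced for illustration; the scheduler is passed in as a parameter so that the same loop can run with window.requestAnimationFrame in the browser or with a stand-in elsewhere.

```typescript
// Anything that can queue a callback for later (assumption: modelled on requestAnimationFrame).
type Schedule = (callback: () => unknown) => void;

// Hypothetical helper: builds a function that runs `task` and then queues itself again.
function createLoop(
  task: () => Promise<void>,
  isDisabled: () => boolean,
  schedule: Schedule,
): () => Promise<void> {
  const tick = async (): Promise<void> => {
    if (!isDisabled()) {
      // Wait for the task to finish so that runs never overlap
      await task();
    }
    // Queue the next run, whether or not the task ran this time
    schedule(tick);
  };
  return tick;
}

// In the browser, the loop from the component could be wired up roughly like this:
//   const loop = createLoop(
//     () => detect(video.value, currentCategory.value),
//     () => props.disabled,
//     (callback) => window.requestAnimationFrame(callback),
//   );
```

Keeping the scheduler as a parameter is a design choice for this sketch only; the component in this chapter calls window.requestAnimationFrame directly.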

We can trigger the initial call as soon as the video is enabled. The watch hook we’ve imported can monitor the enabled variable and call the detectObject function once the video has been enabled:

    watch(enabled, () => {
      if (enabled.value && video.value) {
        // Start the detection loop once the first video frame is available
        video.value.addEventListener("loadeddata", detectObject);
      }
    });

Finally, once we’ve found a match, we need to signal this to our application. We’ll define an event called found and emit it once the detected property has been populated with items:

    const emit = defineEmits(["found"]);

    watch(detected, () => {
      if (detected.value?.length > 0) {
        // The list is sorted by score, so the first item is the best match
        emit("found", detected.value[0]);
      }
    });

We’re returning the top match from the collection of detected items to the parent component.

Note

You can make testing easier by temporarily modifying the objects property in objectStore so that it holds a couple of values of objects you have on hand, such as person. Later, you can restore the list to its previous state.

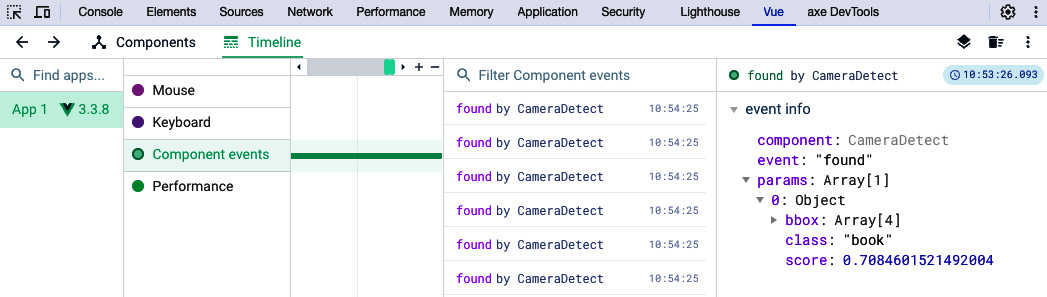

Using Vue’s DevTools, you can test the app again. If you open the DevTools and navigate to the Timeline and Component events panels, once the camera has made a positive match, you will see continuous events being emitted (well, once for every animation frame):

Figure 9.5 – Positive matches being emitted by the <CameraDetect /> component

We can now connect the emitted event to the Find state. So, let’s move over to the ./views/Find.vue file so that we can pick up on the found event and pull it into our little game!

Connecting detection

If we open the Find.vue file, we can now add the event handler on the component to the template. We’ll also provide a disabled property to control the camera by changing the component line to the following:

<CameraDetect @found="found" :disabled="detectionDisabled" />

In the script block, we have to make some changes to both pick up on the found event and provide the value for the detectionDisabled property. Let’s look at the new component code: https://github.com/PacktPublishing/Building-Real-world-Web-Applications-with-Vue.js-3/blob/main/09.tensorflow/.notes/9.14-Find.vue.

We’ve added the detectionDisabled reactive variable (line 51) and are passing it down to the <CameraDetect /> component. Because <CameraDetect /> only runs detection while its disabled property is false, setting detectionDisabled to true pauses detection. In the existing skip function, we’re setting the value of detectionDisabled to true (line 68). We’re also adding the found function (lines 78–86), where we set detectionDisabled to true as well, process a new score by calculating the certainty of the recognized object (lines 81–83), and update gameStore (line 84). Similar to the skip function, we call the newRound function to progress the game.

Once the newRound function has been called, we set the detectionDisabled variable back to false to resume detection.

This would be another good time to test the app. In this case, upon detection, you will rapidly progress through the rounds toward the end. If recognition seems unreliable, you can lower DETECTION_ACCURACY_THRESHOLD in the ./config.ts file.
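To get a feel for what lowering the threshold does, the following sketch counts how many frames would be accepted at two different settings. The scores and both threshold values are made up for illustration, and countAccepted is a hypothetical helper, not part of the app.

```typescript
// Made-up confidence scores for the same object across five frames.
const frameScores: number[] = [0.48, 0.62, 0.71, 0.55, 0.83];

// Hypothetical helper: counts the frames whose score meets the threshold.
function countAccepted(scores: number[], threshold: number): number {
  return scores.filter((score) => score >= threshold).length;
}

// A stricter threshold accepts fewer frames than a more lenient one
const strict = countAccepted(frameScores, 0.7);
const lenient = countAccepted(frameScores, 0.5);
```

With these sample values, the stricter setting accepts two frames and the more lenient one accepts four. A lower threshold makes matches easier to trigger, but it also lets through more low-confidence guesses.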