Conversational user interfaces are almost as old as modern computers themselves. ENIAC, the first programmable general-purpose electronic computer, was unveiled in 1946. In 1950, Alan Turing, a British mathematician and computer scientist, proposed measuring machine intelligence with a conversational test that came to be known as the Turing test. In the test, a machine competes with a human as a dialogue partner to a set of human judges. The judges interact with each of the two participants (the human and the machine) through a text-based interface, not unlike modern messaging applications. Over chat, the judges try to identify which of the two participants is the machine. By a commonly cited criterion, if at least 30% of the judges cannot tell the two participants apart, the machine is considered to have passed the test. This was one of the earliest proposals linking conversational interfaces to the intelligence of the machines that have such capabilities. However, attempts to build such interfaces were not very successful for several decades afterward.

Since the 1980s, for about 35 years, graphical user interfaces (GUIs) have dominated the way we interact with machines. With recent developments in AI and growing constraints such as the shrinking size of gadgets (from laptops to mobile phones), shrinking on-screen real estate (smart watches), and the need for interfaces to become invisible (smart homes and robots), conversational user interfaces are once again becoming a reality. For instance, the most natural way to interact with mobile robots and gadgets distributed throughout a smart home is arguably by voice. The system should, therefore, be able to understand users' requests and responses in natural human language. Such capabilities can reduce the effort people spend learning and understanding today's complex interfaces.

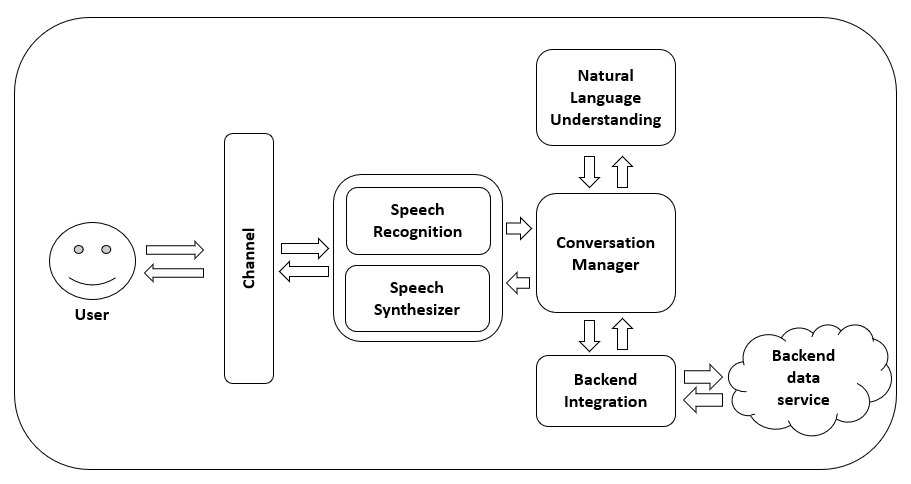

Conversational user interfaces are known by several names: natural language interfaces, spoken dialogue systems, chatbots, intelligent virtual agents, virtual assistants, and so on. These systems differ mainly in their backend integrations (for example, databases and task/control modules), their modalities (for example, text, voice, and visual avatars), and the channels they are deployed on. What they have in common is the ability to interact with users conversationally, using natural language.