Generally, server applications respond to client requests. Request and reply is the main method of interaction between clients and servers in the well-known client-server architectural style. In effect, clients pull information from servers. The communication is also synchronous: both clients and servers have to be online for a task to be initiated and completed. Moreover, while a server is processing a request, the requesting client has to wait for the response. That means the client is blocked and cannot do other work until the server replies.

The world is increasingly becoming event-driven. That is, applications have to be sensitive and responsive, acting proactively, pre-emptively, and precisely. Whenever an event happens, applications have to receive the event information and take the necessary action immediately. The request and reply notion gives way to the fire-and-forget tenet: a producer emits an event and does not wait for a response. The communication becomes asynchronous, so the participating applications do not need to be online all the time.
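The contrast between the two interaction styles can be sketched in a few lines of Python. This is a minimal, in-process illustration rather than a real client and server: `process_order`, `emit`, `drain`, and the event fields are hypothetical names chosen for the example, and a standard-library `queue.Queue` stands in for the channel between producer and consumer.

```python
import queue


def process_order(order_id: str) -> str:
    """Stand-in for work done by a server (hypothetical)."""
    return f"processed {order_id}"


# Request and reply: the caller is blocked until the result comes back.
reply = process_order("order-1")

# Fire and forget: the producer puts an event on a channel and moves on.
channel = queue.Queue()


def emit(event: dict) -> None:
    channel.put(event)  # returns immediately; no reply is expected


emit({"type": "order.placed", "order_id": "order-2"})
emit({"type": "order.placed", "order_id": "order-3"})


def drain() -> list:
    """Consumer side: may run later, once the consumer is online."""
    handled = []
    while not channel.empty():
        event = channel.get()
        handled.append(process_order(event["order_id"]))
    return handled
```

The producer never sees the outcome of `drain()`; the two events simply wait on the channel until a consumer picks them up, which is why producer and consumer do not have to be available at the same time.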

An event is a noteworthy thing that happens inside or outside of a business. An event may signify a problem, an opportunity, a deviation, a state change, or a threshold breach. Every event occurrence has an event header and an event body. The event header contains elements describing the occurrence, such as the specification ID, event type, name, creator, timestamp, and so on. The event body concisely yet unambiguously describes what happened. The event body has to carry all the relevant information so that any interested party can use it to take the necessary action in time. If the event is not fully described, the interested party has to go back to the source system to extract the missing information.
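One possible way to model the header and body split is shown below. This is only a sketch: the class names, the default `spec_id`, the generated `event_id`, and the order fields in the body are assumptions made for illustration, not a standard event format.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class EventHeader:
    event_type: str
    name: str
    creator: str
    spec_id: str = "1.0"  # hypothetical specification ID
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


@dataclass
class Event:
    header: EventHeader
    body: dict  # everything a consumer needs to act without calling back


def to_json(event: Event) -> str:
    """Serialize header and body together for transport."""
    return json.dumps(asdict(event), sort_keys=True)


order_completed = Event(
    header=EventHeader(
        event_type="order.checkout_completed",
        name="Order checkout completed",
        creator="web-shop",
    ),
    body={"order_id": "A-1001", "customer_id": "C-42", "total": 59.90},
)
wire_format = to_json(order_completed)
```

Because the body already carries the order and customer identifiers and the total, a consumer such as a billing service could act on the event without querying the source system.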

Event-driven architecture (EDA) is typically based on an asynchronous, message-driven communication model that propagates information throughout an enterprise. It supports a more natural alignment with an organization's operational model by describing business activities as a series of events. EDA does not bind functionally disparate systems and teams into the same centralized management model, and it ultimately leads to highly decoupled systems. Adopting EDA therefore reduces many of the common issues introduced by dependencies between systems.

Events take various forms and are used in different areas. There are business events and technical events. Systems emit events as their status and condition change, and these events are captured and analyzed in order to understand the prevailing situation precisely. Submitting a web form or clicking a hyperlink generates an event to be captured. Incremental database synchronization, RFID readings, email messages, short message service (SMS) messages, instant messages, and so on are also events that should not be taken lightly.

Events can be coarse-grained or fine-grained. Typically, a coarse-grained event is composed of multiple fine-grained events and is abstracted into business concepts and activities. Examples include a new customer registering on the external website, an order completing the checkout process, a loan application being approved in underwriting, a market trade transaction being completed, and a fulfillment request being submitted to a supplier. Fine-grained events, such as infrastructure faults, application exceptions, system capacity changes, and change deployments, are still important, but their scope is local and limited.

Event processing engines and message-oriented middleware (MoM) solutions, such as message queues and brokers, collect and store event data and messages. Millions of events can be collected, parsed, and delivered over multiple topics through these MoM solutions. As event sources (producers) publish notifications, event receivers can choose to listen to or filter out specific events and decide in real time what to do next.
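The publish, subscribe, and filter behavior described above can be sketched with a small in-memory stand-in for a MoM broker. `TopicBroker`, its methods, and the `accept` filter parameter are hypothetical names for this illustration; a production broker would add persistence, delivery guarantees, and network transport.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Event = dict
Handler = Callable[[Event], None]
Filter = Callable[[Event], bool]


class TopicBroker:
    """In-memory stand-in for a topic-based message broker."""

    def __init__(self) -> None:
        self._subscriptions: Dict[str, List[Tuple[Handler, Filter]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler,
                  accept: Filter = lambda event: True) -> None:
        # Each receiver names a topic and, optionally, a filter predicate.
        self._subscriptions[topic].append((handler, accept))

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to matching subscribers; return how many got it."""
        delivered = 0
        for handler, accept in self._subscriptions.get(topic, []):
            if accept(event):
                handler(event)
                delivered += 1
        return delivered


broker = TopicBroker()
all_orders: List[Event] = []
large_orders: List[Event] = []
broker.subscribe("orders", all_orders.append)
broker.subscribe("orders", large_orders.append, accept=lambda e: e["total"] >= 100)

broker.publish("orders", {"order_id": "A-1", "total": 20})
broker.publish("orders", {"order_id": "A-2", "total": 250})
broker.publish("payments", {"payment_id": "P-1"})  # nobody listens on this topic
```

The producer only names a topic; it does not know which receivers exist or what they filter on, which is where the decoupling comes from.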

The EDA style is built on event notifications, which facilitate immediate information dissemination and reactive business process execution. In an EDA environment, information can be propagated to all the services and applications in real time. The EDA pattern thus enables highly reactive enterprise applications. With the growing popularity of the EDA pattern, real-time analytics is becoming the norm.

Anuradha Wickramarachchi writes in his blog that this is the most common distributed asynchronous architecture. It is capable of producing highly scalable systems. The architecture consists of single-purpose event processing components that listen to events and process them asynchronously. There are two main topologies in the event-driven architecture:

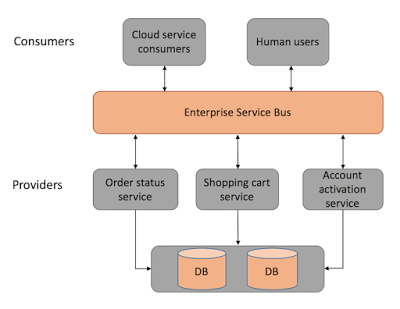

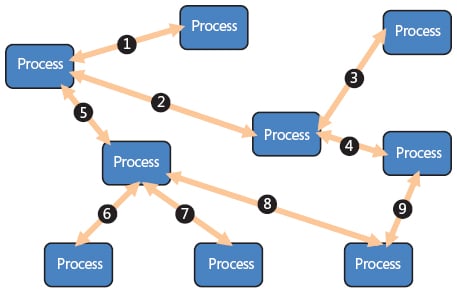

- Mediator topology: The mediator topology has a single event queue and a mediator that directs each event to the relevant event processors. Usually, events reach the event processors through an event channel that filters or pre-processes them. The event queue could be implemented as a simple message queue or as a message-passing interface over a large distributed system, which inherently involves complex messaging protocols. The following diagram demonstrates the architectural implementation of the mediator topology:

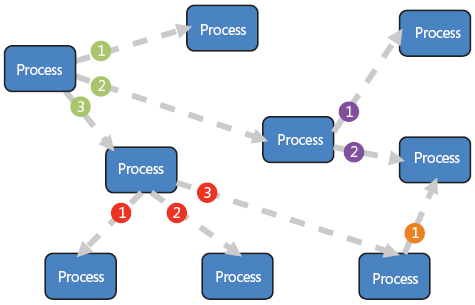

- Broker topology: This topology involves no event queue. Event processors are responsible for obtaining events, processing them, and publishing another event to indicate completion. As the name of the topology implies, event processors act as brokers that chain events. Once a processor has handled an event, it publishes another event so that the next processor can proceed.

As the diagram indicates, some event processors simply process an event and leave no trace, while others publish new events. The steps of certain tasks are chained in the manner of callbacks. That is, when one task ends, the callback is triggered, and all the tasks remain asynchronous in nature.
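The two topologies can be contrasted in a short sketch. Everything here is an assumption made for illustration: the `Mediator` and `EventBus` classes, the event type names, and the convention that a processor returns a follow-up event (or `None`) are hypothetical. The dispatch is also in-process and synchronous for brevity, whereas a real system would deliver events asynchronously through messaging infrastructure.

```python
from collections import deque


class Mediator:
    """Mediator topology: one event queue, one component that routes."""

    def __init__(self):
        self.queue = deque()
        self.routes = {}  # event type -> list of processors

    def register(self, event_type, processor):
        self.routes.setdefault(event_type, []).append(processor)

    def submit(self, event):
        self.queue.append(event)

    def run(self):
        # The mediator decides which processors see each queued event.
        while self.queue:
            event = self.queue.popleft()
            for processor in self.routes.get(event["type"], []):
                processor(event)


class EventBus:
    """Broker topology: no central queue; processors chain events themselves."""

    def __init__(self):
        self.handlers = {}
        self.published = []  # event types in the order they were published

    def on(self, event_type, processor):
        self.handlers.setdefault(event_type, []).append(processor)

    def publish(self, event):
        self.published.append(event["type"])
        for processor in self.handlers.get(event["type"], []):
            follow_up = processor(event)  # a processor may return a new event
            if follow_up is not None:
                self.publish(follow_up)


# Mediator: two processors are handed the same event by the mediator.
mediator = Mediator()
shipped, billed = [], []
mediator.register("order.placed", lambda e: shipped.append(e["order_id"]))
mediator.register("order.placed", lambda e: billed.append(e["order_id"]))
mediator.submit({"type": "order.placed", "order_id": "A-1"})
mediator.run()

# Broker: each processor publishes the event that triggers the next one.
bus = EventBus()
receipts = []
bus.on("order.placed", lambda e: {"type": "stock.reserved", "order_id": e["order_id"]})
bus.on("stock.reserved", lambda e: {"type": "payment.charged", "order_id": e["order_id"]})
bus.on("payment.charged", lambda e: receipts.append(e["order_id"]))  # publishes nothing
bus.publish({"type": "order.placed", "order_id": "A-2"})
```

In the mediator case the routing knowledge lives in one place, whereas in the broker case the flow emerges from the chain of published events, with the last processor ending the chain by publishing nothing further.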

A prominent example is programming a web page with JavaScript. This involves writing small modules that react to events such as mouse clicks or keystrokes. The browser itself orchestrates all of the inputs and makes sure that only the right code sees the right events. This is very different from the layered architecture, where data typically passes through all the layers.