In this recipe, we will implement cross-region replication across accounts.

Implementing S3 cross-region replication across accounts

Getting ready

We need a working AWS account with the following resources configured:

- A user with administrator permissions for S3 in the source bucket's account. I will call this user awssecadmin.

- One bucket in each of two accounts, in two different regions, with versioning enabled on both. I will be using the awsseccookbook bucket in the us-east-1 (N. Virginia) region in the source account and the awsseccookbookbackupmumbai bucket in the ap-south-1 (Mumbai) region in the destination account.
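If you prefer to prepare the two buckets from the command line, the following is a minimal sketch that only builds and prints the AWS CLI commands; it does not run them. The profile names source-admin and destination-admin are hypothetical and stand for CLI profiles configured for each account. Bucket names are globally unique, so you will need names of your own.

```python
import shlex


def bucket_setup_commands(bucket, region, profile):
    """Return AWS CLI argv lists that create a bucket and enable versioning."""
    create = ["aws", "s3api", "create-bucket", "--bucket", bucket,
              "--region", region, "--profile", profile]
    # us-east-1 is the default location and must not be sent as a LocationConstraint.
    if region != "us-east-1":
        create += ["--create-bucket-configuration",
                   f"LocationConstraint={region}"]
    versioning = ["aws", "s3api", "put-bucket-versioning", "--bucket", bucket,
                  "--versioning-configuration", "Status=Enabled",
                  "--profile", profile]
    return [create, versioning]


# Profile names are assumptions; substitute your own.
commands = (
    bucket_setup_commands("awsseccookbook", "us-east-1", "source-admin")
    + bucket_setup_commands("awsseccookbookbackupmumbai", "ap-south-1",
                            "destination-admin")
)

for argv in commands:
    print(shlex.join(argv))
```

Replication requires versioning on both the source and the destination bucket, which is why each bucket gets a put-bucket-versioning command.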

How to do it...

We can enable cross-region replication from the S3 console as follows:

- Go to the Management tab of your bucket and click on Replication.

- Click on Add rule to add a rule for replication. Select Entire bucket.

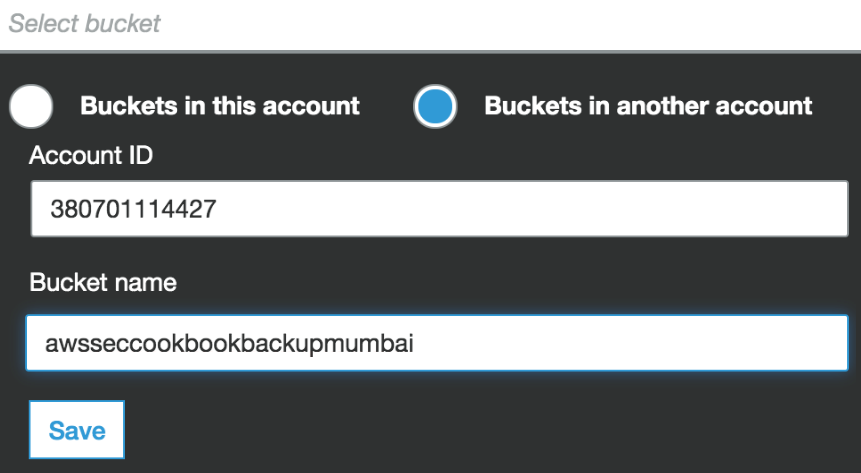

- In the next screen, select a destination bucket from another account, providing that account's account ID, and click Save:

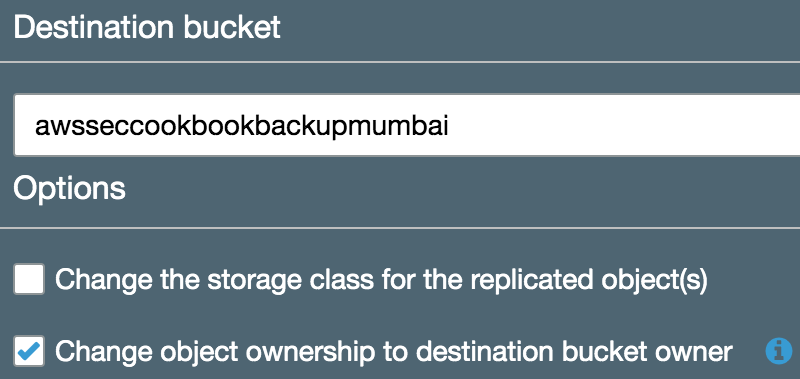

- Select the option to change object ownership to the destination bucket owner and click Next:

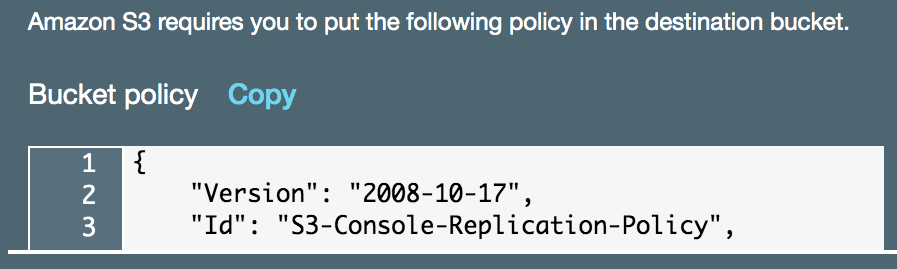

- In the Configure options screen, ask S3 to create the required IAM role for replication (as we did in previous recipes). Also, copy the bucket policy that S3 provides so that it can be applied to the destination bucket.

The bucket policy for the destination bucket should appear as follows:

    {
      "Version": "2008-10-17",
      "Id": "S3-Console-Replication-Policy",
      "Statement": [
        {
          "Sid": "S3ReplicationPolicyStmt1",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::135301570106:root"
          },
          "Action": [
            "s3:GetBucketVersioning",
            "s3:PutBucketVersioning",
            "s3:ReplicateObject",
            "s3:ReplicateDelete",
            "s3:ObjectOwnerOverrideToBucketOwner"
          ],
          "Resource": [
            "arn:aws:s3:::awsseccookbookbackupmumbai",
            "arn:aws:s3:::awsseccookbookbackupmumbai/*"
          ]
        }
      ]
    }
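If you want to produce the same policy for your own account ID and bucket name, a small helper such as the following can generate it. This is a sketch that mirrors the policy shown above; the function name is hypothetical.

```python
import json


def destination_bucket_policy(source_account_id, destination_bucket):
    """Build the bucket policy that lets the source account replicate into the bucket."""
    bucket_arn = f"arn:aws:s3:::{destination_bucket}"
    return {
        "Version": "2008-10-17",
        "Id": "S3-Console-Replication-Policy",
        "Statement": [
            {
                "Sid": "S3ReplicationPolicyStmt1",
                "Effect": "Allow",
                # The principal is the source account, not the destination account.
                "Principal": {"AWS": f"arn:aws:iam::{source_account_id}:root"},
                "Action": [
                    "s3:GetBucketVersioning",
                    "s3:PutBucketVersioning",
                    "s3:ReplicateObject",
                    "s3:ReplicateDelete",
                    "s3:ObjectOwnerOverrideToBucketOwner",
                ],
                # Bucket-level and object-level ARNs are both needed.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


policy = destination_bucket_policy("135301570106", "awsseccookbookbackupmumbai")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the bucket policy editor of the destination bucket.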

- Review the rule and click Save. The autogenerated role's permissions policy document and trust policy document are available with the code files as replication-permissions-policy-other-account.json and assume-role-policy.json, respectively.

- Log in to the account that owns the destination bucket and apply the bucket policy copied in step 5 to the destination bucket.

- Upload an object to the source bucket and verify that the object is replicated to the destination bucket. Also, verify that the destination account is the owner of the replicated file.

How it works...

We enabled cross-region replication across accounts. For the general workings of cross-region replication, refer to the Implementing S3 cross-region replication within the same account recipe. Replicating objects to another AWS account (cross-account replication) provides additional protection for data against situations such as someone gaining unauthorized access to the source account and deleting the data in the bucket along with its replicas.

We asked S3 to create the required role for replication. The autogenerated role has a permissions policy with s3:Get* and s3:ListBucket permissions on the source bucket, and s3:ReplicateObject, s3:ReplicateDelete, s3:ReplicateTags, and s3:GetObjectVersionTagging permissions on the destination bucket. Because we selected the option to change object ownership to the destination bucket owner, both the role's permissions policy and the destination bucket policy include the s3:ObjectOwnerOverrideToBucketOwner action, which is required for owner override. Without owner override, the replicas remain owned by the source account, and the destination bucket account won't be able to access the replicated files or their properties.
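To make the shape of such a permissions policy concrete, here is a sketch reconstructed from the description in this recipe. It is not a copy of replication-permissions-policy-other-account.json, and the exact statements generated by S3 may differ. The function name is hypothetical.

```python
import json


def replication_role_policy(source_bucket, destination_bucket):
    """Build a permissions policy for the replication role (illustrative only)."""
    src = f"arn:aws:s3:::{source_bucket}"
    dst = f"arn:aws:s3:::{destination_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read access on the source bucket and its objects.
                "Effect": "Allow",
                "Action": ["s3:Get*", "s3:ListBucket"],
                "Resource": [src, f"{src}/*"],
            },
            {
                # Write replicas into the destination bucket and hand over ownership.
                "Effect": "Allow",
                "Action": [
                    "s3:ReplicateObject",
                    "s3:ReplicateDelete",
                    "s3:ReplicateTags",
                    "s3:GetObjectVersionTagging",
                    "s3:ObjectOwnerOverrideToBucketOwner",
                ],
                "Resource": f"{dst}/*",
            },
        ],
    }


role_policy = replication_role_policy("awsseccookbook",
                                      "awsseccookbookbackupmumbai")
print(json.dumps(role_policy, indent=2))
```

Note that the owner override action appears on the destination objects only; the role never needs it on the source bucket.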

With cross-region replication across accounts, the destination bucket in the other account must also grant permissions to the source account using a bucket policy. The generated trust relationship document has s3.amazonaws.com as a trusted entity. The trust policy (assume role policy) allows the trusted entity to assume this role through the sts:AssumeRole action. Here, the trust relationship document allows the S3 service to assume this role, which lives in the source account, and replicate objects to the destination account on our behalf. For reference, the policy is provided with the code files as assume-role-policy.json.
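A trust policy of the kind described here is short. The following sketch builds one with s3.amazonaws.com as the trusted service; it illustrates the structure and is not a copy of the assume-role-policy.json file from the code files.

```python
import json


def s3_trust_policy():
    """Build a trust policy that lets the S3 service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The trusted entity is the S3 service, not a user or an account.
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


trust_policy = s3_trust_policy()
print(json.dumps(trust_policy, indent=2))

# If saved as assume-role-policy.json, the role could be created with
# (the role name below is a hypothetical example):
#   aws iam create-role --role-name s3-cross-account-replication-role \
#       --assume-role-policy-document file://assume-role-policy.json
```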

There's more...

The steps to implement cross-region replication across accounts from the CLI can be summarized as follows:

- Create a role that can be assumed by S3 and has a permissions policy with the s3:Get* and s3:ListBucket actions for the source bucket and objects, and the s3:ReplicateObject, s3:ReplicateDelete, s3:ReplicateTags, s3:GetObjectVersionTagging and s3:ObjectOwnerOverrideToBucketOwner actions for the destination bucket objects.

- Create (or update) the replication configuration for the source bucket using the aws s3api put-bucket-replication command by providing a replication configuration JSON. Use the AccessControlTranslation element to give ownership of the replicated objects to the destination bucket owner.

- Update the bucket policy of the destination bucket with the put-bucket-policy sub-command.

Complete CLI commands and policy JSON files are provided with the code files for reference.
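As an illustration of the second step, the following sketch builds a replication configuration that uses AccessControlTranslation. The destination account ID 111122223333, the role name, and the rule ID are placeholders chosen for this example; they are not taken from the code files.

```python
import json


def replication_configuration(role_arn, destination_bucket, destination_account_id):
    """Build a replication configuration with owner override (illustrative only)."""
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "replicate-entire-bucket",  # hypothetical rule name
                "Status": "Enabled",
                "Priority": 1,
                "Filter": {"Prefix": ""},  # an empty prefix matches every object
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {
                    "Bucket": f"arn:aws:s3:::{destination_bucket}",
                    # Account must accompany AccessControlTranslation.
                    "Account": destination_account_id,
                    "AccessControlTranslation": {"Owner": "Destination"},
                },
            }
        ],
    }


config = replication_configuration(
    # Role ARN in the source account; the role name is an assumption.
    "arn:aws:iam::135301570106:role/s3-cross-account-replication-role",
    "awsseccookbookbackupmumbai",
    "111122223333",  # placeholder destination account ID
)
print(json.dumps(config, indent=2))

# If saved as replication.json, it could be applied to the source bucket with:
#   aws s3api put-bucket-replication --bucket awsseccookbook \
#       --replication-configuration file://replication.json
```

The destination bucket policy from the third step would then be applied with aws s3api put-bucket-policy, passing the policy file through the --policy option while using credentials for the destination account.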

Now, let's quickly go through some more concepts and features related to securing data on S3:

- The S3 Object Lock property can be used to prevent an object from being deleted or overwritten. This is useful for a Write-Once-Read-Many (WORM) model.

- We can use the Requester Pays property so that the requester pays for requests and data transfers. While Requester Pays is enabled, anonymous access to this bucket is disabled.

- We can use tags with our buckets to track our costs against projects or other criteria.

- By enabling Server Access Logging for our buckets, S3 will log detailed records for requests that are made to a bucket.

- We can log object-level API activity using the CloudTrail data events feature. This can be enabled from the S3 bucket's properties by providing an existing CloudTrail trail from the same region.

- We can configure Events under bucket properties to receive notifications when an event occurs. Events can be filtered by a key prefix or suffix. Supported events include PUT, POST, COPY, multipart upload completion, all object create events, object lost in RRS (Reduced Redundancy Storage), permanently deleted, delete marker created, all object delete events, restore initiation, and restore completion.

- S3 Transfer Acceleration is a feature that enables the secure transfer of objects over long distances between a client and a bucket.

- We can configure our bucket to allow cross-origin requests by creating a CORS configuration that specifies rules to identify the origins we want to allow, the HTTP methods supported for each origin, and other operation-specific information.

- We can enable storage class analysis for our bucket, prefix, or tags. With this feature, S3 analyzes our access patterns and suggests an age at which to transition objects to Standard-IA.

- S3 supports two types of metrics: the daily storage metric (free and enabled by default) and request and data transfer metrics (paid and need to be opted in to). Metrics can be filtered by bucket, storage type, prefix, object, or tag.
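As one example of the features listed above, the event notification settings with a prefix and suffix filter can be expressed as a notification configuration document. The sketch below assumes an SNS topic as the target; the topic ARN, configuration ID, prefix, and suffix are hypothetical values.

```python
import json


def notification_configuration(topic_arn, prefix, suffix):
    """Build an S3 notification configuration filtered by key prefix and suffix."""
    return {
        "TopicConfigurations": [
            {
                "Id": "notify-on-object-events",  # hypothetical identifier
                "TopicArn": topic_arn,
                "Events": [
                    "s3:ObjectCreated:*",              # all object create events
                    "s3:ObjectRemoved:*",              # all object delete events
                    "s3:ReducedRedundancyLostObject",  # object lost in RRS
                ],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


notification = notification_configuration(
    "arn:aws:sns:us-east-1:135301570106:s3-events",  # hypothetical topic
    "reports/",
    ".csv",
)
print(json.dumps(notification, indent=2))

# If saved as notification.json, it could be applied with:
#   aws s3api put-bucket-notification-configuration --bucket awsseccookbook \
#       --notification-configuration file://notification.json
```

The SNS topic's access policy must also allow S3 to publish to it, which is outside the scope of this sketch.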

See also

- In this chapter, we learned about important security-related features that are present in S3. You may learn more about S3 from https://cloudmaterials.com/en/book/amazon-s3-and-overview-other-storage-services.