Looking at Terminology in Text Classification Tasks

In a classification problem, we have data and labels. In our case, the data is the collection of headlines (clickbait and non-clickbait), and the labels indicate whether each headline is "clickbait" or "not clickbait."

We also have the terms training and evaluation. During training, which is the first part of the project, we feed both the data and the labels into our machine learning algorithm, and it tries to derive a function that maps the data to the labels. During evaluation, we measure how well that function works. There are many metrics for this, but a common and simple one is accuracy: how often the model predicts the correct label without having access to it.
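Accuracy is simple enough to compute by hand. The sketch below uses made-up labels rather than real model output, and the helper name accuracy is our own. Later in the exercise, sklearn's accuracy_score does the same job.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of examples whose predicted label matches the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be the same length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical labels for five held-out headlines ("1" = clickbait, "0" = not).
true_labels = ["1", "0", "0", "1", "1"]
predicted_labels = ["1", "0", "1", "1", "1"]
print(accuracy(true_labels, predicted_labels))  # 0.8 (4 of 5 correct)
```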

We'll be using two supervised machine learning algorithms in our project:

- Support Vector Machine (SVM): SVMs project data into a higher-dimensional space and look for a decision boundary that separates the classes with the widest possible margin.

- Multi-layer perceptron (MLP): MLPs are in some ways similar to SVMs, but they are loosely inspired by the human brain and contain a network of "neurons" that can send signals to each other.

The latter is a form of neural network, the type of model that has become the poster child of machine learning and artificial intelligence.
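To make the two model families concrete, here is a minimal sketch that trains both on a tiny, made-up set of six headlines using sklearn's LinearSVC and MLPClassifier. The headlines, the labels, and the hidden-layer size of 16 are arbitrary choices for illustration, not settings from this chapter. Six examples are also far too few for either model to learn anything meaningful.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.neural_network import MLPClassifier
from sklearn.svm import LinearSVC

# Made-up training headlines ("1" = clickbait, "0" = normal).
headlines = [
    "You Won't Believe What This Dog Did Next",
    "17 Things Only 90s Kids Will Understand",
    "This One Simple Trick Will Change Your Life",
    "Parliament passes annual budget bill",
    "Central bank holds interest rates steady",
    "Storm causes flooding in coastal towns",
]
labels = ["1", "1", "1", "0", "0", "0"]

vectorizer = TfidfVectorizer()
vectors = vectorizer.fit_transform(headlines)

# Linear SVM: a single separating hyperplane in the TF-IDF space.
svm = LinearSVC().fit(vectors, labels)

# MLP: one hidden layer of 16 "neurons" feeding an output neuron.
mlp = MLPClassifier(hidden_layer_sizes=(16,), max_iter=2000, random_state=0)
mlp.fit(vectors, labels)

new_vectors = vectorizer.transform(["This Simple Trick Will Change Everything"])
print(svm.predict(new_vectors), mlp.predict(new_vectors))
```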

We'll also be using a specialized data structure called a sparse matrix. For matrices that contain many zeros, it is not efficient to store every zero. We can, therefore, use a specialized data structure that stores only the non-zero values, but that nonetheless behaves like a normal matrix in many scenarios. Sparse matrices can be many times smaller than dense or normal matrices.
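As a small illustration, the sketch below uses SciPy to build a compressed sparse row (CSR) matrix from a made-up, mostly zero array. CSR is the sparse format that appears later in this chapter. It keeps three arrays: the non-zero values (data), the column of each value (indices), and the position in data where each row starts (indptr).

```python
import numpy as np
from scipy.sparse import csr_matrix

# A mostly zero 3 x 6 matrix, like a tiny table of word weights.
dense = np.array([
    [0, 0, 3, 0, 0, 0],
    [1, 0, 0, 0, 0, 2],
    [0, 0, 0, 0, 0, 0],
], dtype=np.float64)

sparse = csr_matrix(dense)

print(sparse.nnz)      # 3 non-zero values are stored
print(sparse.data)     # [3. 1. 2.]
print(sparse.indices)  # column of each stored value: [2 0 5]
print(sparse.indptr)   # where each row starts in data: [0 1 3 3]

# It still behaves like a matrix, for example in a matrix-vector product.
print(sparse @ np.ones(6))  # [3. 3. 0.]
```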

In the next exercise, you'll load a dataset, vectorize it using TF-IDF, and train both an SVM and an MLP classifier using this dataset.

Exercise 1.01: Training a Machine Learning Model to Identify Clickbait Headlines

In this exercise, we'll build a simple clickbait classifier that will automatically classify headlines as "clickbait" or "normal." We won't have to write any rules to tell the algorithm how to do this, as it will learn from examples.

We'll use the Python sklearn library to show how to train a machine learning algorithm that can differentiate between the two classes of "clickbait" and "normal" headlines. Along the way, we'll compare different ways of storing data and show how choosing the correct data structures for storing data can have a large effect on the overall project's feasibility.

We will use a clickbait dataset that contains 10,000 headlines: 5,000 are examples of clickbait while the other 5,000 are normal headlines.

The dataset can be found in our GitHub repository at https://packt.live/2C72sBN. Download the clickbait-headlines.tsv file from there.

Before proceeding with the exercises, we need to set up a Python 3 environment with sklearn and Anaconda (for Jupyter Notebook) installed. Please follow the instructions in the Preface to install them.

Perform the following steps to complete the exercise:

- Create a directory, Chapter01, for all the exercises of this chapter. In the Chapter01 directory, create two directories named Datasets and Exercise01.01.

Note

If you are downloading the code bundle from https://packt.live/3fpBOmh, the Datasets folder is located outside the Chapter01 folder.

- Move the downloaded clickbait-headlines.tsv file to the Datasets directory.

- Open your Terminal (macOS or Linux) or Command Prompt (Windows), navigate to the Chapter01 directory, and type jupyter notebook. The Jupyter Notebook should look like the following screenshot:

Figure 1.6: The Chapter01 directory in Jupyter Notebook

- Create a new Jupyter Notebook. Define the path to the dataset file and check the file's size, as shown in the following code:

```python
import os

dataset_filename = "../Datasets/clickbait-headlines.tsv"
print("File: {} \nSize: {} MBs"\
      .format(dataset_filename, \
              round(os.path.getsize(\
                    dataset_filename)/1024/1024, 2)))
```

Note

Make sure you change the path of the TSV file based on where you have saved it on your system. The path above assumes the notebook is in Chapter01/Exercise01.01 and the dataset is in Chapter01/Datasets. With the code bundle layout, where the Datasets folder sits outside Chapter01, the path would be ../../Datasets/clickbait-headlines.tsv. The code snippet shown here uses a backslash (\) to split the logic across multiple lines. When the code is executed, Python will ignore the backslash and treat the code on the next line as a direct continuation of the current line.

You should get the following output:

```
File: ../Datasets/clickbait-headlines.tsv
Size: 0.55 MBs
```

We first import Python's os module, a standard library module for interacting with the operating system. We then store the path to the dataset file in the dataset_filename variable. Lastly, we print out the size of the file. The os.path.getsize() function returns the size in bytes, and dividing by 1,024 twice converts it to megabytes. We can see in the output that the file is less than 1 MB in size.

- Read the contents of the file from disk and split each line into data and label components, as shown in the following code:

```python
import csv

data = []
labels = []

with open(dataset_filename, encoding="utf8") as f:
    reader = csv.reader(f, delimiter="\t")
    for line in reader:
        try:
            # Read both fields before appending either, so a malformed
            # line can never leave data and labels out of step.
            headline, label = line[0], line[1]
        except IndexError as e:
            print(e)
            continue
        data.append(headline)
        labels.append(label)

print(data[:3])
print(labels[:3])
```

You should get the following output:

```
["Egypt's top envoy in Iraq confirmed killed", 'Carter: Race relations in Palestine are worse than apartheid', 'After Years Of Dutiful Service, The Shiba Who Ran A Tobacco Shop Retires']
['0', '0', '1']
```
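One caveat about reading the file this way: with default settings, csv.reader treats a double quote at the start of a field as the beginning of a quoted field. Such a field can swallow tabs and line breaks until the next quote appears. We have not checked whether clickbait-headlines.tsv contains headlines like this. The sketch below uses a hypothetical two-line TSV string to show the effect and how quoting=csv.QUOTE_NONE avoids it.

```python
import csv
import io

# Hypothetical TSV content: the first headline starts with an unmatched double quote.
tsv_text = '"Unclosed headline\t1\nNext headline\t0\n'

# Default dialect: the opening '"' starts a quoted field that runs on through
# the tab, the newline, and the whole next line.
default_rows = list(csv.reader(io.StringIO(tsv_text), delimiter="\t"))

# QUOTE_NONE treats '"' as an ordinary character.
plain_rows = list(csv.reader(io.StringIO(tsv_text), delimiter="\t",
                             quoting=csv.QUOTE_NONE))

print(len(default_rows))  # 1
print(plain_rows)  # [['"Unclosed headline', '1'], ['Next headline', '0']]
```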

We import Python's csv module, which is useful for processing our file because the file is in the tab-separated values (TSV) format. We then define two empty lists, data and labels. We open the file, create a CSV reader, and indicate which delimiter ("\t", a tab character) is used. Then, we loop through each line of the file, adding the first element to the data list and the second element to the labels list. If anything goes wrong, we print out an error message to indicate this. Finally, we print out the first three elements of each list. They match up, so the first element in data is linked to the first element in labels, and so on. From the output, we see that the first two headlines are labeled 0, or "not clickbait," while the third is labeled 1, indicating a clickbait headline.

- Create vectors from our text data using the sklearn library, while timing how long this takes, as shown in the following code:

```python
%%time
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
vectors = vectorizer.fit_transform(data)
print("The dimensions of our vectors:")
print(vectors.shape)
print("- - -")
```

You should get the following output:

```
The dimensions of our vectors:
(10000, 13169)
- - -
Wall time: 294 ms
```

Note

Some outputs for this exercise may vary from the ones you see here.
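According to sklearn's documentation, the default TfidfVectorizer lowercases the text and keeps tokens of two or more word characters. Each weight is the raw count of a term in a document multiplied by a smoothed inverse document frequency, idf(t) = ln((1 + n) / (1 + df(t))) + 1. Here n is the number of documents and df(t) is the number of documents that contain term t. Each row is then scaled to unit (L2) length. The pure-Python sketch below follows that recipe on three made-up documents, producing one column per vocabulary term. It is a simplification under those assumptions and ignores options such as stop words, n-grams, and min_df.

```python
import math
import re
from collections import Counter

# Default tokenizer per sklearn's docs: lowercase, then tokens of 2+ word characters.
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def tfidf(docs):
    """Return (vocabulary, rows): one L2-normalized TF-IDF row per document."""
    tokenized = [TOKEN_PATTERN.findall(doc.lower()) for doc in docs]
    vocabulary = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed inverse document frequency: rarer terms get larger weights.
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocabulary}

    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        row = [counts[t] * idf[t] for t in vocabulary]  # raw count x IDF
        norm = math.sqrt(sum(v * v for v in row)) or 1.0  # avoid dividing by 0
        rows.append([v / norm for v in row])
    return vocabulary, rows


docs = ["the cat sat", "the dog sat", "a dog barked"]  # "a" is too short to count
vocab, rows = tfidf(docs)
print(vocab)  # ['barked', 'cat', 'dog', 'sat', 'the']
for doc, row in zip(docs, rows):
    print(doc, [round(v, 3) for v in row])
```

In the exercise, the same idea applied to 10,000 headlines gives one column per distinct token, which is where the 13,169 columns come from.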

The %%time line at the top of the cell is a special Jupyter Notebook command (a cell magic) that reports the total time the cell took to run. Then we import the TfidfVectorizer class from the sklearn library. We initialize vectorizer and call its fit_transform() function. In a single step, this function learns the vocabulary (assigning each word an index), computes the word weights, and creates the resulting vectors from the text data. Finally, we print out the shape of the vectors and see that it is 10,000 rows (the number of headlines) by 13,169 columns (the number of unique words across all headlines). We can see from the timing output that it took around 300 ms to run this code.

- Check how much memory our vectors take up in their sparse format compared to a dense format, as shown in the following code:

```python
print("The data type of our vectors")
print(type(vectors))
print("- - -")
print("The size of our vectors (MB):")
print(vectors.data.nbytes/1024/1024)
print("- - -")
print("The size of our vectors in dense format (MB):")
print(vectors.todense().nbytes/1024/1024)
print("- - - ")
print("Number of non zero elements in our vectors")
print(vectors.nnz)
print("- - -")
```

You should get the following output:

```
The data type of our vectors
<class 'scipy.sparse.csr.csr_matrix'>
- - -
The size of our vectors (MB):
0.6759414672851562
- - -
The size of our vectors in dense format (MB):
1004.7149658203125
- - -
Number of non zero elements in our vectors
88597
- - -
```
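Note that vectors.data.nbytes counts only the array of non-zero values. A CSR matrix also stores indices (one column index per value) and indptr (one offset per row, plus one), so its true footprint is the sum of all three arrays. The sketch below checks this on a randomly generated sparse matrix that stands in for the TF-IDF vectors; its shape and density are arbitrary. It also reproduces the dense-size arithmetic for the exercise's 10,000 x 13,169 matrix of 8-byte floats, without allocating that matrix.

```python
import numpy as np
from scipy.sparse import random as sparse_random

# A random CSR matrix standing in for the TF-IDF vectors
# (the shape and density are arbitrary choices for illustration).
vectors = sparse_random(1000, 5000, density=0.001, format="csr",
                        dtype=np.float64)


def csr_nbytes(m):
    """Bytes used by all three arrays that make up a CSR matrix."""
    return m.data.nbytes + m.indices.nbytes + m.indptr.nbytes


# A dense matrix stores every element, zero or not.
dense_bytes = vectors.shape[0] * vectors.shape[1] * vectors.dtype.itemsize

print("non-zero elements:", vectors.nnz)
print("values only (MB):", vectors.data.nbytes / 1024 / 1024)
print("values + indices + indptr (MB):", csr_nbytes(vectors) / 1024 / 1024)
print("dense (MB):", dense_bytes / 1024 / 1024)

# The exercise's matrix: 10,000 x 13,169 elements at 8 bytes each.
dense_mb_exercise = 10_000 * 13_169 * 8 / 1024 / 1024
print(dense_mb_exercise)  # 1004.7149658203125
```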

We printed out the type of the vectors and saw that it is csr_matrix, a compressed sparse row matrix. This is the format TfidfVectorizer returns. The array holding its non-zero values takes up only 0.68 MB of memory; the column indices and row pointers that a CSR matrix also stores add somewhat more. Next, we call the todense() function, which converts the data structure to a standard dense matrix. We check the size again and find that it is roughly 1 GB. Finally, we output nnz (the number of non-zero elements) and see that only 88,597 values were actually stored. Because we have 10,000 rows and 13,169 columns, the dense matrix has 131,690,000 elements, each stored as an 8-byte float. That is why the dense matrix uses so much more memory.

- For machine learning, we need to split our data into a training portion and a test portion that we hold back to evaluate how good our model is, using the following code:

```python
from sklearn.model_selection import train_test_split

X_train, X_test, \
y_train, y_test = train_test_split(vectors, \
                                   labels, test_size=0.2)
print(X_train.shape)
print(X_test.shape)
```

You should get the following output:

```
(8000, 13169)
(2000, 13169)
```
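Conceptually, the split shuffles the row positions once and applies the same permutation to both the vectors and the labels, so each headline stays paired with its label. The sketch below is a simplified stand-in written for illustration on plain lists of made-up headlines. simple_train_test_split is a hypothetical helper, not sklearn's implementation, which adds options such as stratification.

```python
import random


def simple_train_test_split(items, labels, test_size=0.2, seed=None):
    """Hypothetical helper: shuffle items and labels together, then hold back a test fraction."""
    indices = list(range(len(items)))
    random.Random(seed).shuffle(indices)  # one permutation shared by both lists
    n_test = round(len(items) * test_size)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([items[i] for i in train_idx], [items[i] for i in test_idx],
            [labels[i] for i in train_idx], [labels[i] for i in test_idx])


# Made-up data: headline i is labeled "1" if i is odd, "0" otherwise.
items = [f"headline {i}" for i in range(10)]
labels = [str(i % 2) for i in range(10)]

X_train, X_test, y_train, y_test = simple_train_test_split(
    items, labels, test_size=0.2, seed=42)
print(len(X_train), len(X_test))  # 8 2
print(list(zip(X_test, y_test)))  # each test headline still carries its own label
```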

We imported the train_test_split function from sklearn and split our two arrays (vectors and labels) into four arrays (X_train, X_test, y_train, and y_test). The y prefix indicates labels and the X prefix indicates vectorized data. We use the test_size=0.2 argument to indicate that we want 20% of our data held back for testing. We then print out each shape to show that 80% (8,000) of the headlines are in the training set and 20% (2,000) are in the test set. Because all the headlines were vectorized together before the split, both sets still have 13,169 dimensions, one for each possible word.

- Initialize the LinearSVC classifier, train it, and generate predictions with the following code:

```python
%%time
from sklearn.svm import LinearSVC

svm_classifier = LinearSVC()
svm_classifier.fit(X_train, y_train)
predictions = svm_classifier.predict(X_test)
```

You should get the following output:

Wall time: 55 ms

Note

The preceding output will vary based on your system configuration.
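A trained LinearSVC is a single hyperplane. Its coef_ attribute holds one weight per feature (for the headline vectors, one per word in the vocabulary), and intercept_ holds the offset. For a two-class problem, sklearn's linear classifiers predict the second class in classes_ when the score X @ w + b is positive. The sketch below checks this on made-up 2-D points rather than on the headline data.

```python
import numpy as np
from sklearn.svm import LinearSVC

# Made-up 2-D points: class 1 sits up and to the right of class 0.
X = np.array([[0.0, 0.0], [0.5, 0.2], [0.2, 0.6],
              [2.0, 2.0], [2.5, 1.8], [1.8, 2.6]])
y = np.array([0, 0, 0, 1, 1, 1])

clf = LinearSVC().fit(X, y)

# The learned hyperplane: one weight per feature plus an intercept.
w, b = clf.coef_[0], clf.intercept_[0]
scores = X @ w + b

# Positive scores map to classes_[1], everything else to classes_[0].
manual = np.where(scores > 0, clf.classes_[1], clf.classes_[0])
print(np.array_equal(manual, clf.predict(X)))  # True
```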

We import the LinearSVC model from sklearn and initialize an instance of it. Then we give it the training data and training labels (note that it does not have access to the test data at this stage). Finally, we give it the test data, but without the test labels, and ask it to guess which of the headlines in the held-out test set are clickbait. We call these guesses predictions. To get some insight into what is happening, let's look at some of these predictions and compare them to the real labels.

- Output the true class and the predicted class of the first 10 test headlines by running the following code:

```python
print("label, prediction")
for i in range(10):
    print(y_test[i], predictions[i])
```

You should get the following output:

```
label, prediction
1 1
1 1
0 0
0 0
1 1
1 1
0 1
0 1
1 1
0 0
```
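Instead of checking the printed pairs by eye, you can count agreements in code. The sketch below uses hypothetical labels and predictions, and compare is our own helper name. In the notebook, the same function should also work on y_test and predictions directly, because zip accepts both lists and NumPy arrays.

```python
def compare(labels, predictions):
    """Count agreements and list the positions where the model was wrong."""
    wrong = [i for i, (y, p) in enumerate(zip(labels, predictions)) if y != p]
    return len(labels) - len(wrong), wrong


# Hypothetical true labels and predictions for five test headlines.
labels = ["1", "0", "0", "1", "0"]
predictions = ["1", "1", "0", "1", "0"]
correct, wrong = compare(labels, predictions)
print(f"{correct} of {len(labels)} correct; wrong at positions {wrong}")
# 4 of 5 correct; wrong at positions [1]
```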

We can see that 8 of the first 10 predictions match the true labels. The two rows where the values differ are mistakes. Let's see how we did overall on the test set.

- Evaluate how well the model performed using the following code:

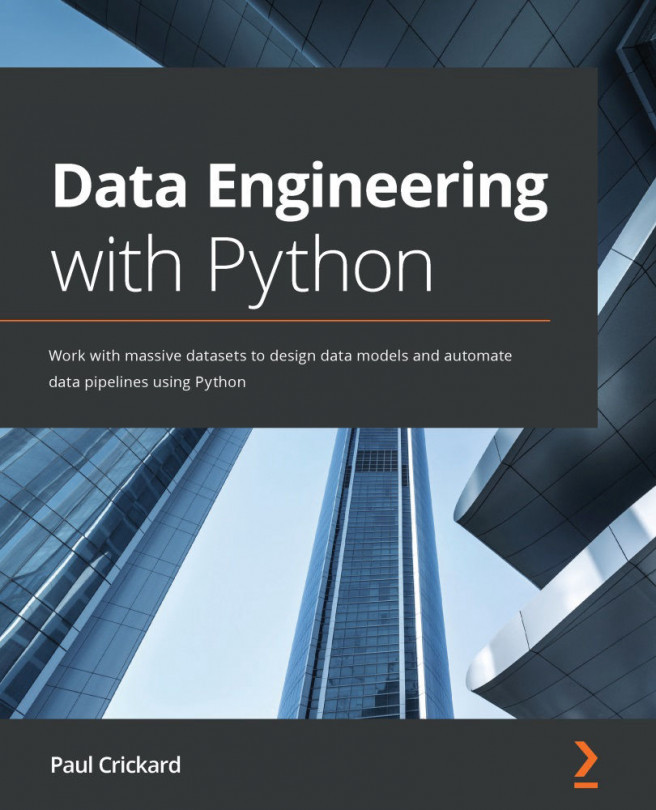

from sklearn.metrics \ import accuracy_score, classification_report print("Accuracy: {}\n"\ .format(accuracy_score(y_test, predictions))) print(classification_report(y_test, predictions))You should get the following output:

Figure 1.7: Looking at the evaluation results of our model

Note

To access the source code for this specific section, please refer to https://packt.live/2ZlQnSf.

We achieved around 96.5% accuracy, which means around 1,930 of the 2,000 test cases were correctly classified by our model. This is a good summary score, but for a fuller picture, we have printed the full classification report. The model can be wrong in two different ways: by classifying a clickbait headline as normal, or by classifying a normal headline as clickbait. Because the precision and recall scores are similar, this suggests that the model is not strongly biased toward either kind of mistake.
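To see the two kinds of mistakes separately, it helps to count them. For the clickbait class, precision is the share of headlines flagged as clickbait that really are clickbait, so false positives (normal headlines that get flagged) lower it. Recall is the share of real clickbait headlines that get flagged, so false negatives (clickbait that gets missed) lower it. The sketch below computes these by hand from hypothetical labels. binary_report is our own helper; sklearn.metrics.confusion_matrix provides the same counts.

```python
def binary_report(labels, predictions, positive="1"):
    """Confusion-matrix counts plus precision and recall for one class."""
    pairs = list(zip(labels, predictions))
    tp = sum(y == positive and p == positive for y, p in pairs)  # caught clickbait
    fp = sum(y != positive and p == positive for y, p in pairs)  # normal flagged
    fn = sum(y == positive and p != positive for y, p in pairs)  # clickbait missed
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall}


# Hypothetical labels: one normal headline flagged as clickbait (a false positive)
# and one clickbait headline missed (a false negative).
labels = ["1", "1", "1", "1", "0", "0", "0", "0"]
predictions = ["1", "1", "1", "0", "1", "0", "0", "0"]
report = binary_report(labels, predictions)
print(report)
```

With one mistake of each kind, precision and recall come out equal (both 0.75 here), which matches the pattern the classification report shows for a model that is not skewed toward either error.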

By completing this exercise, you have implemented a very basic text classification example, and it also highlighted several essential ideas around data storage. The dataset we worked with was small, so we could take some shortcuts that would not be possible with large data in a real-world setting:

- We downloaded our entire dataset as a single file, saved it to a local disk, and read all of it into memory at once. If we had more data, we would have had to read it from a database, potentially over a network, in smaller chunks.

- We turned all of the data into vectors in one pass, again naively keeping everything in memory simultaneously. With more data, we would have needed a larger machine, a cluster of machines, or a smart algorithm that processes the data sequentially as a stream.

- We converted our sparse matrix to a dense one for illustrative purposes. At roughly 1,500 times the size, this would quickly become impossible with even a slightly larger dataset.
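The first two shortcuts can be avoided by streaming: read the data in fixed-size chunks and update the model one chunk at a time. The sketch below uses a different setup from the exercise. It swaps in HashingVectorizer for TfidfVectorizer, because hashing needs no vocabulary fitted on the full dataset, so every chunk maps to the same columns. It also swaps in SGDClassifier with hinge loss (a linear SVM trained by stochastic gradient descent), because LinearSVC has no partial_fit method. The in-memory file, its headlines, and the read_in_chunks helper are hypothetical. With real data, you would pass an open file or a database cursor instead.

```python
import io
import itertools

from sklearn.feature_extraction.text import HashingVectorizer
from sklearn.linear_model import SGDClassifier


def read_in_chunks(lines, chunk_size):
    """Yield (headlines, labels) batches from 'headline<TAB>label' lines."""
    it = iter(lines)
    while True:
        batch = list(itertools.islice(it, chunk_size))
        if not batch:
            return
        rows = [line.rstrip("\n").split("\t") for line in batch]
        yield [r[0] for r in rows], [r[1] for r in rows]


# Stateless hashing: no vocabulary to fit, so every chunk maps to the same columns.
vectorizer = HashingVectorizer(n_features=2**18, alternate_sign=False)
# A linear SVM (hinge loss) trained incrementally by stochastic gradient descent.
classifier = SGDClassifier(loss="hinge", random_state=0)

# Hypothetical stand-in for a large file or a database cursor.
fake_file = io.StringIO(
    "You Won't Believe This Trick\t1\n"
    "Senate approves new budget\t0\n"
    "21 Photos That Will Make You Smile\t1\n"
    "Rates held steady by central bank\t0\n"
)

# Only one chunk of rows is held in memory at a time.
for headlines, labels in read_in_chunks(fake_file, chunk_size=2):
    X = vectorizer.transform(headlines)
    classifier.partial_fit(X, labels, classes=["0", "1"])

prediction = classifier.predict(vectorizer.transform(["This Trick Will Make You Smile"]))
print(prediction)
```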

In the rest of the book, you will examine each of the concepts that we touched on in more detail, through several case studies and real-world use cases. For now, let's take a look at how hardware can help us when dealing with larger amounts of data.