What is Kubernetes?

Kubernetes, often abbreviated as k8s (pronounced “kates”), is an open source container orchestration platform. It grew out of Google’s experience with its proprietary orchestration tool, Borg, and was open sourced in 2014 under the name Kubernetes. Following the v1.0 release on July 21, 2015, Google and the Linux Foundation partnered to form the Cloud Native Computing Foundation (CNCF), which hosts the Kubernetes project today.

The name Kubernetes comes from the Greek word for helmsman or pilot. A helmsman steers a ship and works closely with the ship’s officer to keep a safe and steady course and to protect the crew. Kubernetes has similar responsibilities for containers and microservices: it orchestrates and schedules containers, steering them to worker nodes that can handle their workloads. Kubernetes also helps keep those microservices safe by providing high availability (HA) and health checks.

Let’s review some of the ways Kubernetes helps simplify the management of containerized workloads.

Container orchestration

The most prominent feature of Kubernetes is container orchestration. This is a fairly loaded term, so we’ll break it down into different pieces.

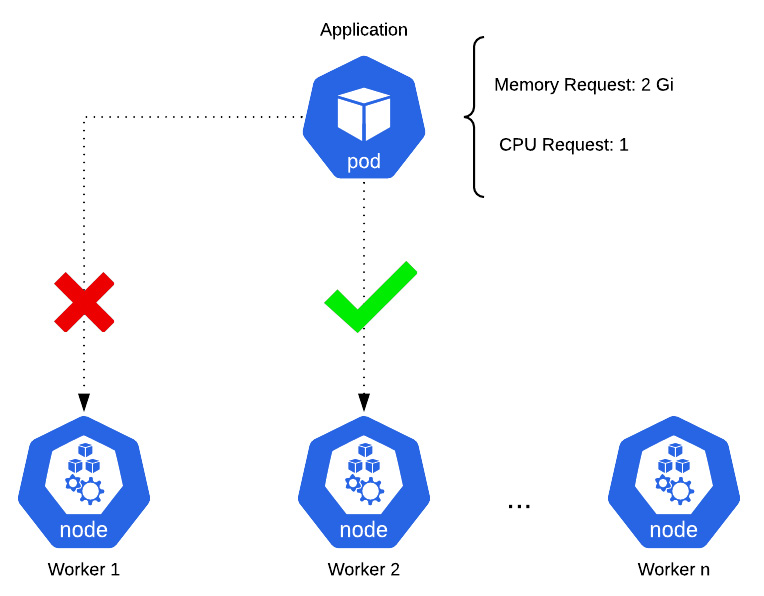

Container orchestration is about placing containers on certain machines from a pool of compute resources based on their requirements. The simplest use case for container orchestration is for deploying containers on machines that can handle their resource requirements. In the following diagram, there is an application that requests 2 Gibibytes (Gi) of memory (Kubernetes resource requests typically use their power-of-two values, which in this case is roughly equivalent to 2 gigabytes (GB)) and one central processing unit (CPU) core. This means that the container will be allocated 2 Gi of memory and 1 CPU core from the underlying machine that it is scheduled on. It is up to Kubernetes to track which machines, or nodes, have the required resources available and to place an incoming container on that machine. If a node does not have enough resources to satisfy the request, the container will not be scheduled on that node. If none of the nodes in a cluster have enough resources to run the workload, the container will not be deployed. Once a node has enough resources free, the container will be deployed on the node with sufficient resources:

Figure 1.1 – Kubernetes orchestration and scheduling

Container orchestration relieves you of the effort of tracking the available resources on each machine. Kubernetes, together with monitoring tools, provides insight into these metrics, so a developer can simply declare the amount of resources they expect a container to use, and Kubernetes will take care of the rest on the backend.
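To make the placement rule concrete, here is a minimal Python sketch of it. This is a toy first-fit model written for illustration only, not the real Kubernetes scheduler, which considers many more factors; the `Node` class, the `schedule` function, and the node names and capacities are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

GIB = 2**30  # bytes in one gibibyte (the "Gi" suffix)


@dataclass
class Node:
    """Hypothetical view of a worker node's unreserved capacity."""
    name: str
    free_memory: int  # bytes
    free_cpu: float   # cores


def schedule(nodes: List[Node], memory: int, cpu: float) -> Optional[str]:
    """Place a container on the first node that satisfies its request.

    Returns the chosen node's name, or None if no node fits, in which
    case the container stays unscheduled until capacity frees up.
    """
    for node in nodes:
        if node.free_memory >= memory and node.free_cpu >= cpu:
            # Reserve the requested resources on the chosen node.
            node.free_memory -= memory
            node.free_cpu -= cpu
            return node.name
    return None


nodes = [
    Node("node-a", free_memory=1 * GIB, free_cpu=2.0),  # too little memory
    Node("node-b", free_memory=4 * GIB, free_cpu=0.5),  # too little CPU
    Node("node-c", free_memory=4 * GIB, free_cpu=2.0),  # fits
]

# Three containers, each requesting 2 Gi of memory and 1 CPU core.
first = schedule(nodes, memory=2 * GIB, cpu=1.0)
second = schedule(nodes, memory=2 * GIB, cpu=1.0)
third = schedule(nodes, memory=2 * GIB, cpu=1.0)
print(first, second, third)
```

In this sketch, the first two containers land on `node-c`, which is the only node that can satisfy both the memory and the CPU request. The third container gets `None` because `node-c` is now full, mirroring a workload that waits until resources become available.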

HA

Another benefit of Kubernetes is that it provides features that help take care of redundancy and HA. HA is a characteristic that prevents application downtime. It is typically achieved by running multiple instances of an application behind a load balancer, which splits incoming traffic across those instances. The premise of HA is that if one instance of an application goes down, other instances are still available to accept incoming traffic. In this way, downtime is avoided, and the end user (whether a human or another microservice) remains unaware that an instance of the application failed. Kubernetes provides a networking mechanism, called a service, that allows applications to be load-balanced. We will talk about services in greater detail later on, in the Deploying a Kubernetes application section of this chapter.
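The premise of HA can be illustrated with a short Python sketch. It is a toy round-robin load balancer that skips failed instances, not a description of how a Kubernetes service is implemented; the `LoadBalancer` class and the instance names are hypothetical.

```python
from itertools import cycle
from typing import List


class LoadBalancer:
    """Toy round-robin balancer that only routes to healthy instances."""

    def __init__(self, instances: List[str]) -> None:
        self.healthy = {name: True for name in instances}
        self._order = cycle(instances)

    def mark_down(self, name: str) -> None:
        """Record that an instance has failed and should receive no traffic."""
        self.healthy[name] = False

    def route(self) -> str:
        """Return the next healthy instance in round-robin order."""
        # Try each instance at most once before giving up.
        for _ in range(len(self.healthy)):
            name = next(self._order)
            if self.healthy[name]:
                return name
        raise RuntimeError("no healthy instances available")


lb = LoadBalancer(["app-1", "app-2", "app-3"])
before = [lb.route() for _ in range(3)]

lb.mark_down("app-2")  # simulate one instance failing
after = [lb.route() for _ in range(4)]
print(before, after)
```

After `app-2` is marked down, requests keep flowing to `app-1` and `app-3`, so callers never see the failure. Only when every instance is down does `route` raise an error, which corresponds to actual downtime.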

Scalability

Given the lightweight nature of containers and microservices, developers can use Kubernetes to rapidly scale their workloads, both horizontally and vertically.

Horizontal scaling is the act of deploying more container instances. If a team running their workloads on Kubernetes were expecting increased load, they could simply tell Kubernetes to deploy more instances of their application. Since Kubernetes is a container orchestrator, developers would not need to worry about the physical infrastructure that those applications would be deployed on. Kubernetes would simply locate nodes within the cluster that have the available resources and deploy the additional instances there. Each extra instance would be added to a load-balancing pool, which would allow the application to continue to be highly available.

Vertical scaling is the act of allocating additional memory and CPU to an application. Developers can modify the resource requirements of their applications while they are running. This will prompt Kubernetes to redeploy the running instances and reschedule them on nodes that can support the new resource requirements. Depending on how this is configured, Kubernetes can redeploy each instance in a way that prevents downtime while the new instances are being deployed.
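The effect of redeploying instances one at a time can be sketched in Python. This is a simplified model written for illustration, not Kubernetes code; the `rolling_resize` function, its `surge_first` flag, and the instance names are hypothetical. In Kubernetes itself, comparable behavior for a Deployment is governed by its rolling update settings, such as `maxSurge` and `maxUnavailable`.

```python
from typing import Dict, List, Tuple

Instance = Dict[str, object]


def rolling_resize(
    instances: List[Instance], new_memory_gi: int, surge_first: bool = True
) -> Tuple[List[Instance], int]:
    """Replace instances one at a time with resized copies.

    Returns the resized instances and the lowest number of instances
    that were serving traffic at any point during the rollout.
    """
    serving = list(instances)  # work on a copy; the input is left untouched
    min_serving = len(serving)
    for old in instances:
        replacement = {"name": f"{old['name']}-v2", "memory_gi": new_memory_gi}
        if surge_first:
            # Start the resized instance before retiring the old one.
            serving.append(replacement)
            serving.remove(old)
        else:
            # Retire the old instance first, leaving a temporary gap.
            serving.remove(old)
            min_serving = min(min_serving, len(serving))
            serving.append(replacement)
        min_serving = min(min_serving, len(serving))
    return serving, min_serving


apps = [{"name": f"app-{i}", "memory_gi": 2} for i in range(1, 4)]

resized, lowest = rolling_resize(apps, new_memory_gi=4)
_, lowest_without_surge = rolling_resize(apps, new_memory_gi=4, surge_first=False)
print(lowest, lowest_without_surge)
```

When the replacement starts before the old instance is retired, all three instances keep serving throughout the rollout. When the old instance is retired first, capacity briefly drops to two. With a single instance, that second ordering would mean a moment with nothing serving traffic, which is why the configuration matters.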

Active community

The Kubernetes community is an incredibly active open source community. As a result, Kubernetes frequently receives patches and new features. The community has also made many contributions to documentation, both in the official Kubernetes documentation and on professional and hobbyist blogs. In addition to documentation, the community is highly involved in planning and attending meetups and conferences around the world, which helps spread knowledge of the platform and drives innovation around it.

Another benefit of Kubernetes’ large community is the number of different tools built to augment the abilities that Kubernetes provides. Helm is one such tool. As we’ll see later in this chapter and throughout this book, Helm, a tool built by members of the Kubernetes community, vastly improves a developer’s experience by simplifying application deployments and life cycle management.

With an understanding of the benefits Kubernetes brings to managing containerized workloads, let’s now discuss how an application can be deployed in Kubernetes.