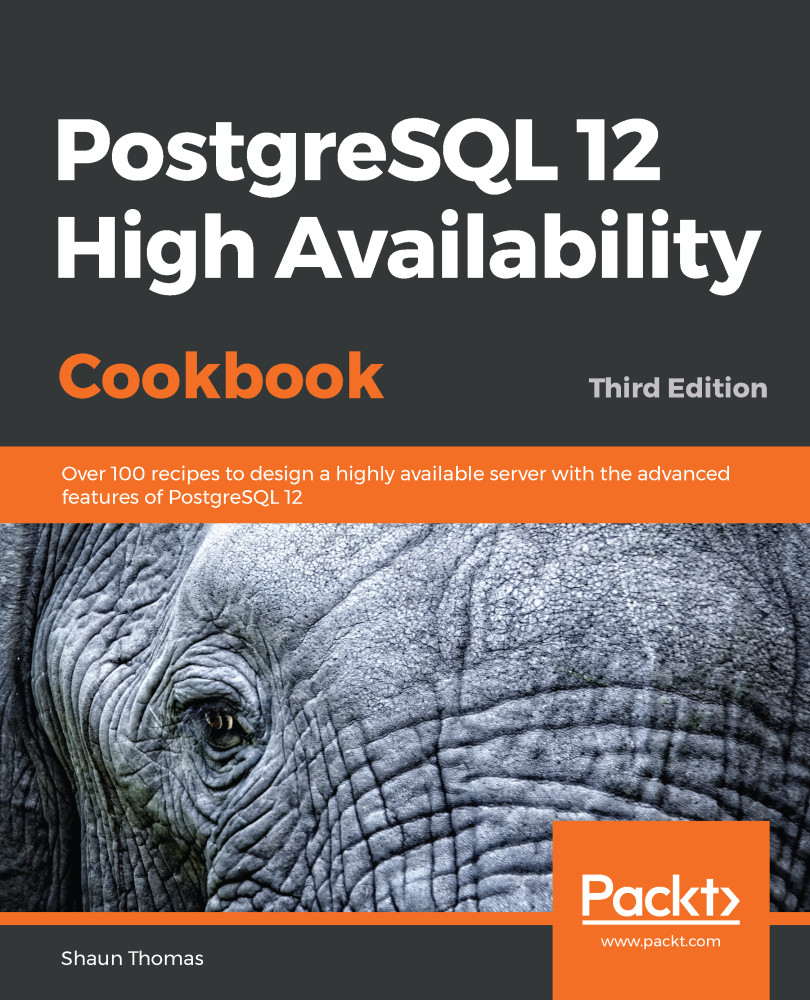

Let's consider an extreme example to explain how this works: a financial institution wants to deploy six PostgreSQL nodes, one witness server, and a backup system. Placing all of them in a single data center would clearly be a silly design, as shown in the following diagram:

This places every node in a single location that could lose its connection to the internet, lose power, or suffer some other catastrophe that would render the entire database stack unusable or even destroyed.

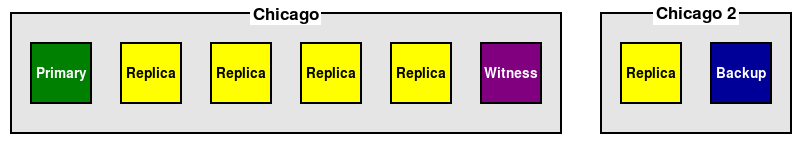

Now, let's apply the guidelines. First of all, we want to protect the backup, so let's move it, along with one PostgreSQL server, to a second data center, as seen in the following diagram:

With this change, one PostgreSQL server and the backup are safe if something happens to the first data center. Of course, we now have a new problem: what happens if Chicago itself is somehow isolated from the rest of the internet? Though incredibly rare, major internet backbone outages like this are possible.

So, let's add a third data center in Dallas. This allows us to actually follow three separate rules. We can move the backup to that remote location so it's even safer. We can relocate at least one more PostgreSQL server to that data center as well, so it acts as an alternate in case Chicago becomes unavailable. And finally, we have three data centers, so it's possible to safely use automated failover.

Recipes later in this chapter will explain why we keep insisting that safety comes in odd numbers. For now, just keep it in mind when considering the design.
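The guidelines applied so far can be sketched as a small checker. This is only an illustration, not a tool from this chapter: the function name `check_layout`, the role labels, the site names, and the exact thresholds are all assumptions made for the example.

```python
def check_layout(layout):
    """Return warnings for a hypothetical {site: [roles]} cluster layout.

    Roles are plain strings: "postgres", "witness", or "backup".
    The thresholds below are illustrative assumptions, not fixed rules.
    """
    warnings = []
    pg_per_site = {site: roles.count("postgres") for site, roles in layout.items()}
    total_pg = sum(pg_per_site.values())

    # Automated failover is safest with an odd number of locations, three or more.
    if len(layout) < 3 or len(layout) % 2 == 0:
        warnings.append("use an odd number of locations (at least three)")

    # The backup should not sit beside the majority of the database nodes.
    for site, roles in layout.items():
        if "backup" in roles and pg_per_site[site] * 2 > total_pg:
            warnings.append(f"backup shares {site} with most PostgreSQL nodes")

    # At least one PostgreSQL node should live in an alternate location.
    if sum(1 for count in pg_per_site.values() if count > 0) < 2:
        warnings.append("all PostgreSQL nodes are in one location")

    # Node distribution across locations should be roughly even.
    if max(pg_per_site.values()) - min(pg_per_site.values()) > 1:
        warnings.append("PostgreSQL nodes are unevenly distributed")

    return warnings


# The "silly" starting point: everything in one data center.
single_site = {"Chicago-1": ["postgres"] * 6 + ["witness", "backup"]}

# One possible balanced arrangement across three locations (an assumption).
balanced = {
    "Chicago-1": ["postgres"] * 2,
    "Chicago-2": ["postgres"] * 2 + ["witness"],
    "Dallas": ["postgres"] * 2 + ["backup"],
}

for name, layout in (("single_site", single_site), ("balanced", balanced)):
    print(name, check_layout(layout))
```

Running the checker against each intermediate design is a quick way to see which guideline a given revision still violates.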

With these revisions, our cluster looks more like the following diagram:

The only remaining rules suggest that our node distribution should be more even, and that the witness should be less likely to lose contact with more than one location at once. This means we need to move a couple more of our nodes to the other data centers. But what about the witness node? It's actually best to leave it in the second Chicago location. If Chicago is separated from Dallas, the witness is still in a different data center from the first Chicago location, and it is less likely to lose contact with Chicago, thereby preserving its voting abilities.
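To see why placement affects voting, the following sketch counts how many voters remain when any one location disappears. It assumes a simple majority vote among the PostgreSQL nodes and the witness, which is a simplification; real failover tools have their own quorum rules. The function name `survives_site_loss` and the per-site counts are hypothetical.

```python
def survives_site_loss(voters_per_site):
    """Map each site to True if the cluster keeps a majority without it.

    Assumes every PostgreSQL node and the witness hold one vote each,
    and that a strict majority of all voters is needed for quorum.
    """
    total = sum(voters_per_site.values())
    quorum = total // 2 + 1
    return {
        site: (total - count) >= quorum
        for site, count in voters_per_site.items()
    }


# Seven voters (six PostgreSQL nodes plus the witness) in two locations.
two_sites = {"Chicago-1": 4, "Chicago-2": 3}

# The same seven voters spread over three locations, witness in Chicago-2.
three_sites = {"Chicago-1": 2, "Chicago-2": 3, "Dallas": 2}

print(survives_site_loss(two_sites))
print(survives_site_loss(three_sites))
```

With only two locations, losing the larger one leaves the survivors without a majority. Spread across three, the remaining voters still outnumber the lost ones no matter which single location fails.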

Given we're discussing a large financial institution that likely has access to multiple data centers, perhaps there's an even better solution. Following one final node reorganization, the cluster probably looks more like this diagram:

Now, we can utilize three data centers that are relatively diverse in their geographic isolation. This may appear to be an extreme case, but it merely illustrates the process we recommend to ensure that the overall state of the cluster is as safe as possible.