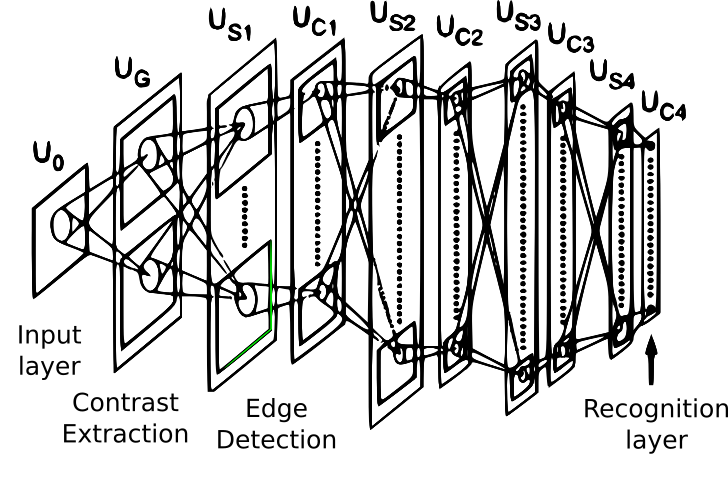

Convolutional neural networks (CNNs) have a long history. They took shape while multi-layer perceptrons were being refined, and the first concrete example was the neocognitron.

The neocognitron is a hierarchical, multilayered artificial neural network (ANN) introduced by Prof. Kunihiko Fukushima in a 1980 paper. Its principal features are:

- Self-organizing

- Tolerant to shifts and deformations in the input

The idea reappeared in 1986 in the book version of the original backpropagation paper, and in 1988 it was applied to temporal signals for speech recognition.

The design was improved in 1998 by Yann LeCun et al. in the paper *Gradient-Based Learning Applied to Document Recognition*, which presented LeNet-5, an architecture for classifying handwritten digits. The model showed increased performance compared to other...
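As a rough sketch of how the LeNet-5 layer stack shrinks its input, the snippet below traces feature-map shapes. It assumes the layer sizes commonly reported for that paper (a 32x32 input, 5x5 convolutions without padding, 2x2 subsampling with stride 2, then 120, 84 and 10 units). The helpers `conv_out` and `lenet5_shapes` are hypothetical names for illustration; the code only computes sizes and does not implement the network, its trainable subsampling, or its partial C3 connectivity.

```python
# Hypothetical helper: side length after a "valid" (unpadded) convolution
# or a subsampling window, for a square input of the given side.
def conv_out(size: int, kernel: int, stride: int = 1) -> int:
    return (size - kernel) // stride + 1


# Hypothetical helper: (layer name, number of maps/units, spatial side)
# for each stage, assuming the commonly reported LeNet-5 configuration.
def lenet5_shapes(input_size: int = 32) -> list[tuple[str, int, int]]:
    shapes = []
    side = conv_out(input_size, 5)        # C1: 6 maps, 5x5 kernels
    shapes.append(("C1", 6, side))
    side = conv_out(side, 2, stride=2)    # S2: 2x2 subsampling
    shapes.append(("S2", 6, side))
    side = conv_out(side, 5)              # C3: 16 maps, 5x5 kernels
    shapes.append(("C3", 16, side))
    side = conv_out(side, 2, stride=2)    # S4: 2x2 subsampling
    shapes.append(("S4", 16, side))
    side = conv_out(side, 5)              # C5: 120 maps, 5x5 kernels -> 1x1
    shapes.append(("C5", 120, side))
    shapes.append(("F6", 84, 1))          # fully connected layer
    shapes.append(("OUTPUT", 10, 1))      # one unit per digit class
    return shapes


if __name__ == "__main__":
    for name, maps, side in lenet5_shapes():
        print(f"{name}: {maps} x {side}x{side}")
```

Each convolution removes `kernel - 1` pixels per side length and each subsampling step halves it, which is how a 32x32 digit image is reduced to 120 single-pixel maps before the fully connected layers.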