Containers and container orchestration

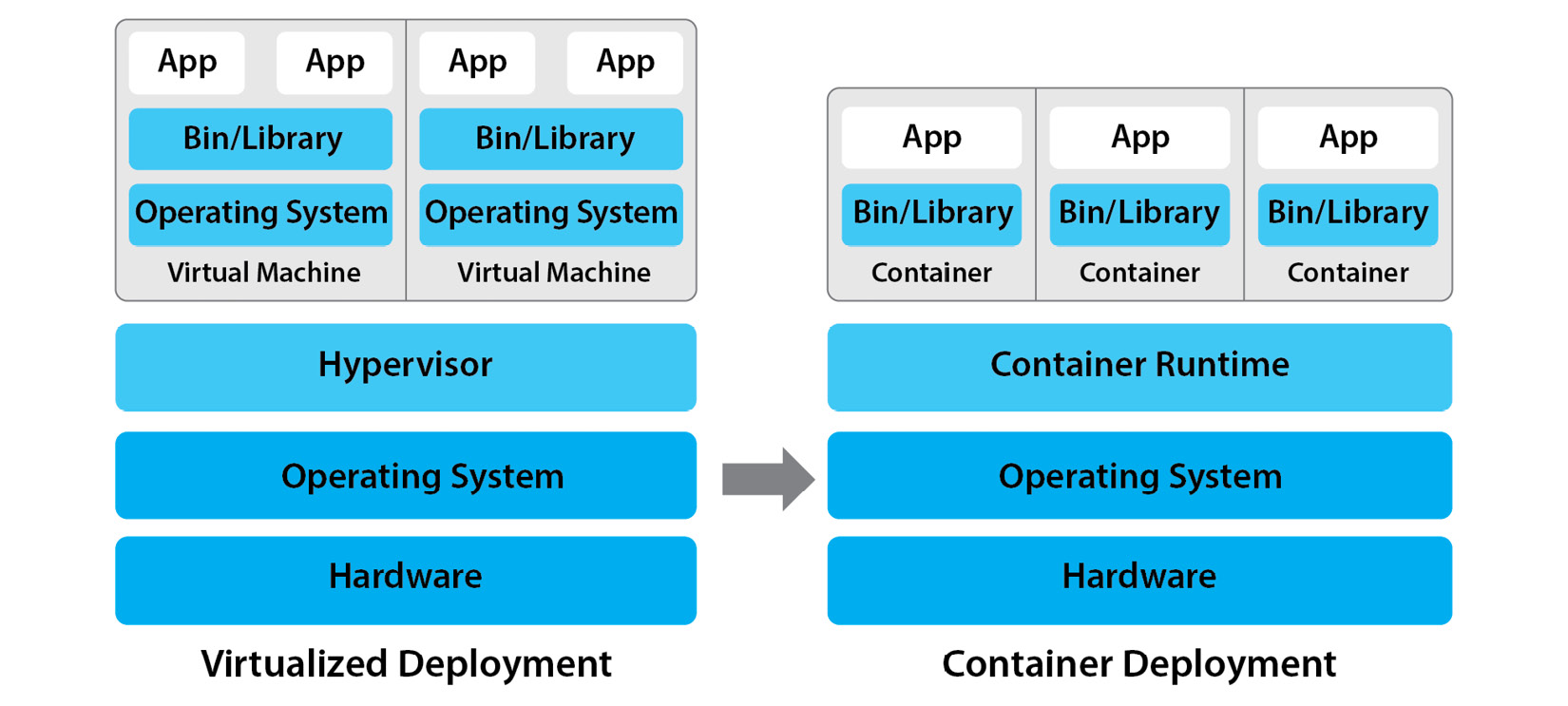

At a very high level, containers are another form of lightweight virtualization, also known as OS-level virtualization. However, containers differ from VMs and come with their own advantages and disadvantages.

The major difference is that with VMs, we can slice and share one physical server between many VMs, each running its own OS. With containers, we can slice and share one OS kernel between multiple containers, and each container has its own virtual OS environment. Let’s see this in more detail.

Containers

These are portable units of software that include application code with runtimes, dependencies, and system libraries. Containers share one OS kernel, but each container can have its own isolated OS environment with different packages, system libraries, tools, its own storage, networking, users, processes, and groups.

Portability is important and deserves elaboration. An application packaged into a container image will run the same way on another host, provided that host has a container runtime and a compatible kernel and CPU architecture, because the container brings its own isolated environment with it. Starting a container on another host does not alter that environment or the containerized application.

Another major advantage is that containers are a lot more lightweight and efficient than VMs. They consume fewer resources (CPU and RAM) than VMs and start almost instantly because they don’t need to boot a complete OS with a kernel. For example, if a physical server is capable of running 10 VMs, then the same physical server might be able to run 30, 40, or possibly even more containers, each with its own application. The exact number depends on many factors, including the type of workload, so those values are for demonstration purposes only and do not represent any formula.

Containers are also much smaller than VMs in disk size, because they don’t package a full OS with thousands of libraries. Only applications with dependencies and a minimal set of OS packages are included in container images. That makes container images small, portable, and easy to download or share.

Container images

These are essentially templates of container OS environments that we can use to create multiple containers with the same application and environment. Every time we execute an image, a container is created.

Speaking in numbers, a container image of a popular Linux distribution such as Ubuntu Server 20.04 weighs about 70 MB, whereas a KVM QCOW2 virtual machine image of the same Ubuntu Server will weigh roughly 500 MB. Specialized Linux container images such as Alpine can be as small as 5 to 10 MB and provide the bare minimum functionality to install and run applications.
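To put those sizes in perspective, here is a small sketch of the arithmetic. The sizes are the approximate figures quoted above. The dictionary keys, the helper names, and the count of 100 hosts are hypothetical and chosen only for illustration. The sketch also ignores image compression and layer caching, so treat the results as rough orders of magnitude.

```python
# Approximate image sizes in MB, taken from the figures quoted in the text.
# The key names are made up for this example.
IMAGE_SIZES_MB = {
    "ubuntu-20.04-container": 70,
    "ubuntu-20.04-qcow2-vm": 500,
    "alpine-container": 5,  # lower end of the 5 to 10 MB range
}


def size_ratio(larger, smaller, sizes=IMAGE_SIZES_MB):
    """Return how many times bigger one image is than another."""
    return sizes[larger] / sizes[smaller]


def total_transfer_mb(image, hosts, sizes=IMAGE_SIZES_MB):
    """Return the data moved if every host downloads the image once."""
    return sizes[image] * hosts


if __name__ == "__main__":
    ratio = size_ratio("ubuntu-20.04-qcow2-vm", "ubuntu-20.04-container")
    print(f"VM image is about {ratio:.1f}x larger than the container image")

    # Hypothetical fleet of 100 hosts, each pulling the image once.
    for name in ("ubuntu-20.04-qcow2-vm", "ubuntu-20.04-container"):
        print(name, total_transfer_mb(name, hosts=100), "MB")
```

With these assumed numbers, distributing the VM image to 100 hosts moves about 50,000 MB, while the container image moves about 7,000 MB.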

Containers are also agnostic to where they run – whether on physical servers, on-premises VMs, or the cloud, containers can run in any of these locations with the help of container runtimes.

Container runtimes

A container runtime is special software needed to run containers on a host OS. It is responsible for downloading container images and for creating, starting, stopping, and deleting containers based on those images. Examples of container runtimes include containerd, CRI-O, and Docker Engine.
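The relationship between images, containers, and the runtime can be sketched with a toy model. This is not the API of containerd, CRI-O, or Docker Engine. The `ToyRuntime`, `Image`, and `Container` names are hypothetical, and the rule that a running container must be stopped before deletion is an assumption made for this sketch. It only illustrates that one read-only image can back many containers, and that the runtime drives each container through create, start, stop, and delete.

```python
import itertools
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    STOPPED = "stopped"


@dataclass(frozen=True)
class Image:
    """Read-only template: a name plus the packaged contents."""
    name: str
    packages: tuple


@dataclass
class Container:
    """A single instance created from an image."""
    id: int
    image: Image
    state: State = State.CREATED


class ToyRuntime:
    """Hypothetical stand-in for a container runtime (not a real API)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.containers = {}

    def create(self, image):
        # Each call yields a new container backed by the same image.
        container = Container(id=next(self._ids), image=image)
        self.containers[container.id] = container
        return container

    def start(self, container_id):
        self.containers[container_id].state = State.RUNNING

    def stop(self, container_id):
        self.containers[container_id].state = State.STOPPED

    def delete(self, container_id):
        # Assumption for this sketch: running containers must be stopped first.
        if self.containers[container_id].state is State.RUNNING:
            raise RuntimeError("stop the container before deleting it")
        del self.containers[container_id]


if __name__ == "__main__":
    runtime = ToyRuntime()
    image = Image("web-app", ("python3", "app.py"))

    first = runtime.create(image)
    second = runtime.create(image)  # same image, separate container
    runtime.start(first.id)
    runtime.stop(first.id)
    runtime.delete(first.id)
    print(sorted(runtime.containers))  # only the second container remains
```

Keeping the image immutable and the container state separate mirrors the idea that an image is a template, while each container is an individual instance with its own life cycle.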

Figure 1.3 demonstrates the differences between virtualized and containerized deployments:

Figure 1.3 – Comparison of virtualized and container deployments

Now, a question you might be asking yourself is this: if containers are so great, why would anyone use VMs, and why do cloud providers still offer so many VM types?

Here is where VMs have an advantage over containers: they provide better security through stronger isolation, because VMs don’t directly share the same host kernel. That means if an attacker breaches an application running in a container, the chances that they can reach the other containers on the same host are much higher than they would be with regular VMs.

We will dive deeper into the technology behind OS-level virtualization and explore the low-level differences between VMs and containers in later chapters.

As containers gained momentum and received wider adoption over the years, it quickly became apparent that managing containers on a large scale can be quite a challenge. The industry needed tools to orchestrate and manage the life cycle of container-based applications.

This had to do with the increasing number of containers that companies and teams had to operate: as infrastructure tools evolved, so did application architectures, which moved from large monoliths to small, distributed, and loosely coupled microservices.