Building an environment and reward mechanism for training RL agents

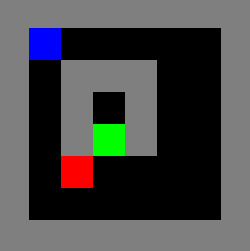

This recipe will walk you through the steps to build a Gridworld learning environment to train RL agents. Gridworld is a simple environment where the world is represented as a grid. Each location on the grid can be referred to as a cell. The goal of an agent in this environment is to find its way to the goal state in a grid like the one shown here:

Figure 1.1 – A screenshot of the Gridworld environment

The agent's location is represented by the blue cell in the grid, while the goal and a mine/bomb/obstacle's location is represented in the grid using green and red cells, respectively. The agent (blue cell) needs to find its way through the grid to reach the goal (green cell) without running over the mine/bomb (red cell).

Getting ready

To complete this recipe, you will first need to activate the tf2rl-cookbook Python/Conda virtual environment and pip install numpy gym. If the following import statements run without issues, you are ready to get started!

import copy import sys import gym import numpy as np

Now we can begin.

How to do it…

To train RL agents, we need a learning environment that is akin to the datasets used in supervised learning. The learning environment is a simulator that provides the observation for the RL agent, supports a set of actions that the RL agent can perform by executing the actions, and returns the resultant/new observation as a result of the agent taking the action.

Perform the following steps to implement a Gridworld learning environment that represents a simple 2D map with colored cells representing the location of the agent, goal, mine/bomb/obstacle, wall, and empty space on a grid:

- We'll start by first defining the mapping between different cell states and their color codes to be used in the Gridworld environment:

EMPTY = BLACK = 0 WALL = GRAY = 1 AGENT = BLUE = 2 MINE = RED = 3 GOAL = GREEN = 4 SUCCESS = PINK = 5

- Next, generate a color map using RGB intensity values:

COLOR_MAP = { BLACK: [0.0, 0.0, 0.0], GRAY: [0.5, 0.5, 0.5], BLUE: [0.0, 0.0, 1.0], RED: [1.0, 0.0, 0.0], GREEN: [0.0, 1.0, 0.0], PINK: [1.0, 0.0, 1.0], } - Let's now define the action mapping:

NOOP = 0 DOWN = 1 UP = 2 LEFT = 3 RIGHT = 4

- Let's then create a

GridworldEnvclass with an__init__function to define necessary class variables, including the observation and action space:class GridworldEnv(): def __init__(self):

We will implement

__init__()in the following steps. - In this step, let's define the layout of the Gridworld environment using the grid cell state mapping:

self.grid_layout = """ 1 1 1 1 1 1 1 1 1 2 0 0 0 0 0 1 1 0 1 1 1 0 0 1 1 0 1 0 1 0 0 1 1 0 1 4 1 0 0 1 1 0 3 0 0 0 0 1 1 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 """

In the preceding layout,

0corresponds to the empty cells,1corresponds to walls,2corresponds to the agent's starting location,3corresponds to the location of the mine/bomb/obstacle, and4corresponds to the goal location based on the mapping we defined in step 1. - Now, we are ready to define the observation space for the Gridworld RL environment:

self.initial_grid_state = np.fromstring( self.grid_layout, dtype=int, sep=" ") self.initial_grid_state = \ self.initial_grid_state.reshape(8, 8) self.grid_state = copy.deepcopy( self.initial_grid_state) self.observation_space = gym.spaces.Box( low=0, high=6, shape=self.grid_state.shape ) self.img_shape = [256, 256, 3] self.metadata = {"render.modes": ["human"]} - Let's define the action space and the mapping between the actions and the movement of the agent in the grid:

self.action_space = gym.spaces.Discrete(5) self.actions = [NOOP, UP, DOWN, LEFT, RIGHT] self.action_pos_dict = { NOOP: [0, 0], UP: [-1, 0], DOWN: [1, 0], LEFT: [0, -1], RIGHT: [0, 1], } - Let's now wrap up the

__init__function by initializing the agent's start and goal states using theget_state()method (which we will implement in the next step):(self.agent_start_state, self.agent_goal_state,) = \ self.get_state()

- Now we need to implement the

get_state()method that returns the start and goal state for the Gridworld environment:def get_state(self): start_state = np.where(self.grid_state == AGENT) goal_state = np.where(self.grid_state == GOAL) start_or_goal_not_found = not (start_state[0] \ and goal_state[0]) if start_or_goal_not_found: sys.exit( "Start and/or Goal state not present in the Gridworld. " "Check the Grid layout" ) start_state = (start_state[0][0], start_state[1][0]) goal_state = (goal_state[0][0], goal_state[1][0]) return start_state, goal_state

- In this step, we will be implementing the

step(action)method to execute the action and retrieve the next state/observation, the associated reward, and whether the episode ended:def step(self, action): """return next observation, reward, done, info""" action = int(action) info = {"success": True} done = False reward = 0.0 next_obs = ( self.agent_state[0] + \ self.action_pos_dict[action][0], self.agent_state[1] + \ self.action_pos_dict[action][1], ) - Next, let's specify the rewards and finally, return

grid_state,reward,done, andinfo:# Determine the reward if action == NOOP: return self.grid_state, reward, False, info next_state_valid = ( next_obs[0] < 0 or next_obs[0] >= \ self.grid_state.shape[0] ) or (next_obs[1] < 0 or next_obs[1] >= \ self.grid_state.shape[1]) if next_state_valid: info["success"] = False return self.grid_state, reward, False, info next_state = self.grid_state[next_obs[0], next_obs[1]] if next_state == EMPTY: self.grid_state[next_obs[0], next_obs[1]] = AGENT elif next_state == WALL: info["success"] = False reward = -0.1 return self.grid_state, reward, False, info elif next_state == GOAL: done = True reward = 1 elif next_state == MINE: done = True reward = -1 # self._render("human") self.grid_state[self.agent_state[0], self.agent_state[1]] = EMPTY self.agent_state = copy.deepcopy(next_obs) return self.grid_state, reward, done, info - Up next is the

reset()method, which resets the Gridworld environment when an episode completes (or if a request to reset the environment is made):def reset(self): self.grid_state = copy.deepcopy( self.initial_grid_state) (self.agent_state, self.agent_goal_state,) = \ self.get_state() return self.grid_state

- To visualize the state of the Gridworld environment in a human-friendly manner, let's implement a render function that will convert the

grid_layoutthat we defined in step 5 to an image and display it. With that, the Gridworld environment implementation will be complete!def gridarray_to_image(self, img_shape=None): if img_shape is None: img_shape = self.img_shape observation = np.random.randn(*img_shape) * 0.0 scale_x = int(observation.shape[0] / self.grid_\ state.shape[0]) scale_y = int(observation.shape[1] / self.grid_\ state.shape[1]) for i in range(self.grid_state.shape[0]): for j in range(self.grid_state.shape[1]): for k in range(3): # 3-channel RGB image pixel_value = \ COLOR_MAP[self.grid_state[i, j]][k] observation[ i * scale_x : (i + 1) * scale_x, j * scale_y : (j + 1) * scale_y, k, ] = pixel_value return (255 * observation).astype(np.uint8) def render(self, mode="human", close=False): if close: if self.viewer is not None: self.viewer.close() self.viewer = None return img = self.gridarray_to_image() if mode == "rgb_array": return img elif mode == "human": from gym.envs.classic_control import \ rendering if self.viewer is None: self.viewer = \ rendering.SimpleImageViewer() self.viewer.imshow(img)

- To test whether the environment is working as expected, let's add a

__main__function that gets executed if the environment script is run directly:if __name__ == "__main__": env = GridworldEnv() obs = env.reset() # Sample a random action from the action space action = env.action_space.sample() next_obs, reward, done, info = env.step(action) print(f"reward:{reward} done:{done} info:{info}") env.render() env.close() - All set! The Gridworld environment is ready and we can quickly test it by running the script (

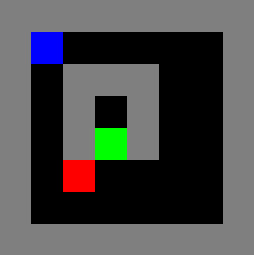

python envs/gridworld.py). An output such as the following will be displayed:reward:0.0 done:False info:{'success': True}The following rendering of the Gridworld environment will also be displayed:

Figure 1.2 – The Gridworld

Let's now see how it works!

How it works…

The grid_layout defined in step 5 in the How to do it… section represents the state of the learning environment. The Gridworld environment defines the observation space, action spaces, and the rewarding mechanism to implement a Markov Decision Process (MDP). We sample a valid action from the action space of the environment and step the environment with the chosen action, which results in the new observation, reward, and a done status Boolean (representing if the episode has finished) as the response from the Gridworld environment. The env.render() method converts the environment's internal grid representation to an image and displays it for visual understanding.