Planning an object classification application

At startup, BeanCounter loads a configuration file and a set of images, then trains the classifier. This may take several seconds. While loading, the app displays the text "Training classifier…" along with a regal image of Queen Elizabeth II and eight dried beans:

Next, BeanCounter shows a live view from the rear-facing camera. The app applies a blob detection algorithm to each frame and draws a green rectangle around each detected blob. Consider the following screenshot:

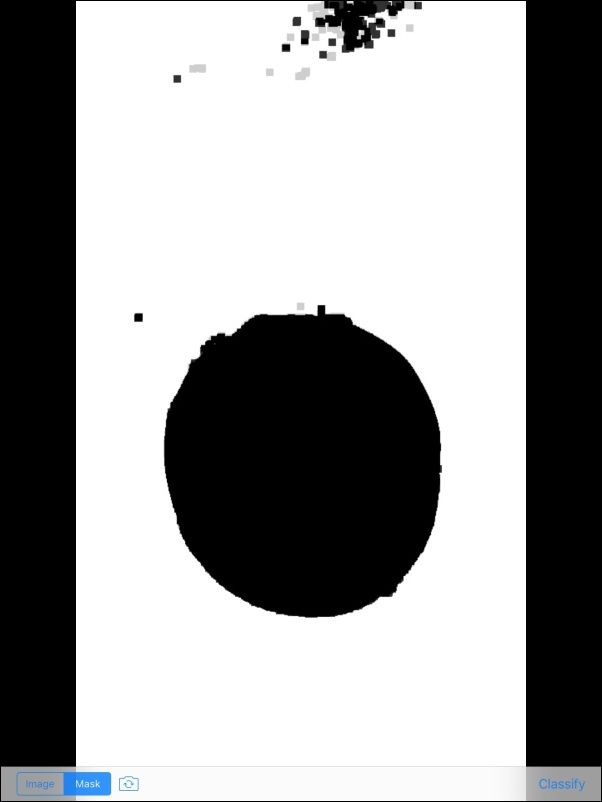

Note the controls in the toolbar below the camera view. The Image and Mask segmented controls enable the user to toggle between the preceding view of detection results in the image and the following view of the mask:

Note

The gray dots in the preceding image are just an artifact of the iOS screenshot function, which sometimes shows a faint ghost of a previous frame. The mask is really pure black and pure white.
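
To make the relationship between the mask and the green rectangles more concrete, here is a minimal sketch in Python of one way to find blob bounding rectangles in a pure black-and-white mask. It is an illustration only, not BeanCounter's actual implementation: this section does not say which blob detection algorithm the app uses, so the sketch substitutes a simple 4-connected flood fill. The function name `find_blob_rects` and the sample mask are hypothetical.

```python
from collections import deque


def find_blob_rects(mask):
    """Return bounding rectangles (x, y, width, height) of the white blobs
    in a binary mask, given as a list of rows of 0 (black) or 255 (white).

    Hypothetical helper: uses a 4-connected flood fill as a stand-in for
    whatever blob detector the app really uses.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    visited = [[False] * width for _ in range(height)]
    rects = []

    for y in range(height):
        for x in range(width):
            if mask[y][x] == 0 or visited[y][x]:
                continue  # Skip black pixels and pixels already in a blob.

            # Flood-fill this blob, tracking the extent of its pixels.
            min_x = max_x = x
            min_y = max_y = y
            queue = deque([(x, y)])
            visited[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                min_x, max_x = min(min_x, cx), max(max_x, cx)
                min_y, max_y = min(min_y, cy), max(max_y, cy)
                # Visit the left, right, upper, and lower neighbors.
                for nx, ny in ((cx - 1, cy), (cx + 1, cy),
                               (cx, cy - 1), (cx, cy + 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] != 0 and not visited[ny][nx]):
                        visited[ny][nx] = True
                        queue.append((nx, ny))

            rects.append((min_x, min_y,
                          max_x - min_x + 1, max_y - min_y + 1))

    return rects


# A tiny sample mask with two white blobs on a black background.
sample_mask = [
    [0,   0,   0, 0, 0,   0],
    [0, 255, 255, 0, 0,   0],
    [0, 255, 255, 0, 0, 255],
    [0,   0,   0, 0, 0, 255],
]

for rect in find_blob_rects(sample_mask):
    print(rect)
```

Each returned tuple describes a rectangle that could be drawn over the corresponding region of the camera frame. A real-time app would normally rely on an optimized library routine rather than a pure Python loop like this one.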

The switch camera button has the usual effect of activating a different camera...