Chapter 8 - Need for Speed – Performance and Optimization

Activity 1: Optimizing a Spell Check Algorithm

In this activity, we'll be developing a simple spell check demonstration and try to make it faster incrementally. You can use the skeleton file, Speller.cpp, as a starting point. Perform the following steps to implement this activity:

- For the first implementation of the spell check (the full code can be found in Speller1.cpp) – create a dictionary set in the getMisspelt() function:

set<string> setDict(vecDict.begin(), vecDict.end());

- Loop over the text words and check for words not in the dictionary with the set::count() method. Add the misspelled words to the result vector:

vector<int> ret;

for(int i = 0; i < vecText.size(); ++i)

{

const string &s = vecText[i];

if(!setDict.count(s))

{

ret.push_back(i);

}

};

- Open the terminal. Compile the program and run it as follows:

$ g++ -O3 Speller1.cpp Timer.cpp

$ ./a.out

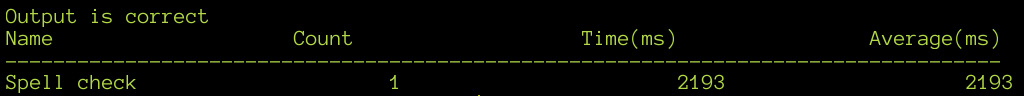

The following output will be generated:

Figure 8.60: Example output of the solution for Step 1

- Open the Speller2.cpp file and add the unordered_set header file to the program:

#include <unordered_set>

- Next, change the set type that's used for the dictionary to unordered_set:

unordered_set<string> setDict(vecDict.begin(), vecDict.end());

- Open the Terminal. Compile the program and run it as follows:

$ g++ -O3 Speller2.cpp Timer.cpp

$ ./a.out

The following output will be generated:

Figure 8.61: Example output of the solution for Step 2

- For the third and final version, that is, Speller3.cpp, we will use a bloom filter. Start by defining a hash function based on the BKDR function. Add the following code to implement this:

const size_t SIZE = 16777215;

template<size_t SEED> size_t hasher(const string &s)

{

size_t h = 0;

size_t len = s.size();

for(size_t i = 0; i < len; i++)

{

h = h * SEED + s[i];

}

return h & SIZE;

}

Here, we used an integer template parameter so that we can create any number of different hash functions with the same code. Notice the use of the 16777215 constant, which is equal to 2^24 – 1. This lets us use the fast bitwise-and operator instead of the modulus operator to keep the hashed integer less than SIZE. If you want to change the size, keep it as one less than a power of two.

- Next, let's declare a vector<bool> for a bloom filter in getMisspelt() and populate it with the words in the dictionary. Use three hash functions. The BKDR hash can be seeded with values such as 131, 3131, 31313, and so on. Add the following code to implement this:

vector<bool> m_Bloom;

m_Bloom.resize(SIZE);

for(auto i = vecDict.begin(); i != vecDict.end(); ++i)

{

m_Bloom[hasher<131>(*i)] = true;

m_Bloom[hasher<3131>(*i)] = true;

m_Bloom[hasher<31313>(*i)] = true;

}

- Write the following code to create a loop that checks the words:

for(int i = 0; i < vecText.size(); ++i)

{

const string &s = vecText[i];

bool hasNoBloom =

!m_Bloom[hasher<131>(s)]

&& !m_Bloom[hasher<3131>(s)]

&& !m_Bloom[hasher<31313>(s)];

if(hasNoBloom)

{

ret.push_back(i);

}

else if(!setDict.count(s))

{

ret.push_back(i);

}

}

The bloom filter is checked first and if it finds the word in the dictionary, we have to verify it, like we did previously.

- Open the terminal. Compile the program and run it as follows:

$ g++ -O3 Speller3.cpp Timer.cpp

$ ./a.out

The following output will be generated:

Figure 8.62: Example output of the solution for Step 3

In the preceding activity, we attempted to solve a real-world problem and make it more efficient. Let's consider some points for each of the implementations in the three steps, as follows:

- For the first version, the most obvious solution with a std::set is used – however, the performance is likely to be low because the set data structure is based on a binary tree, which has O(log N) complexity for finding an element.

- For the second version, we can gain a large performance improvement simply by switching to std::unordered_set, which uses a hash table as the underlying data structure. If the hash function is good, the performance will be close to O(1).

- The third version, based on the Bloom filter data structure, requires some consideration.-The primary performance benefit of a bloom filter is because it is a compact data structure that does not actually store the actual elements in it, thereby providing very good cache performance.

From an implementation perspective, the following guidelines apply:

- vector<bool> can be used as the backing store as it is an efficient way to store and retrieve bits.

- The false positive percentage of the bloom filter should be minimal – anything more than 5% will not be efficient.

- There are many string hashing algorithms – the BKDR hash algorithm is used in the reference implementation. A comprehensive of string hash algorithms with implementation can be found here: http://www.partow.net/programming/hashfunctions/index.html.

- The number of hash functions and the size for the bloom filter that's used are very critical to get the performance benefits.

- The nature of the dataset should be taken into account when deciding what parameters the bloom filter should use – consider that, in this example, there are very few words that are misspelled, and the majority of them are in the dictionary.

There are some questions worth probing, given the results we received:

- Why is the improvement in performance so meager with the Bloom Filter?

- What is the effect of using a larger or smaller capacity Bloom filter?

- What happens when fewer or more hash functions are used?

- Under what conditions would this version be much faster than the one in Speller2.cpp?

Here are the answers to these questions:

- Why is the improvement in performance so meager with the Bloom Filter?

std::unordered_set performs one hash operation and perhaps a couple of memory accesses before reaching the value that's stored. The Bloom filter we use performs three hash operations and three memory accesses. So, in essence, the work that's done by the bloom filter is more than the hash table. Since there are only 31,870 words in our dictionary, the cache benefits of the Bloom filter are lost. This is another case where the traditional analysis of data structures does not correspond to real-life results because of caching.

- What is the effect of using a larger or smaller capacity Bloom filter?

When a larger capacity is used, the number of hash collisions reduce, along with false positives, but the caching behavior worsens. Conversely, when a smaller capacity is used, the hash collisions and the false positives increase, but the caching behavior improves.

- What happens when fewer or more hash functions are used?

The more hash functions are used, the fewer the false positives, and vice versa.

- Under what conditions would this version be much faster than the one in Speller2.cpp?

Bloom filters work best when the cost of testing a few bits is less than the cost of accessing the value in the hash table. This only becomes true when the Bloom filter bits fit completely within the cache and the dictionary does not.