The TestNG examples in this chapter use TestNG version 6.14.3 and the Maven Failsafe Plugin version 2.21.0. If you use older versions of these components, some of the functionality that we rely on may not be available.

To start, we are going to make some changes to our POM file. We are going to add a threads property, which will be used to determine the number of parallel threads used to run our checks. Then, we are going to use the Maven Failsafe Plugin to configure TestNG:

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<java.version>1.8</java.version>

<!-- Dependency versions -->

<selenium.version>3.12.0</selenium.version>

<testng.version>6.14.3</testng.version>

<!-- Plugin versions -->

<maven-compiler-plugin.version>3.7.0</maven-compiler-plugin.version>

<maven-failsafe-plugin.version>2.21.0</maven-failsafe-plugin.version>

<!-- Configurable variables -->

<threads>1</threads>

</properties>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>${java.version}</source>

<target>${java.version}</target>

</configuration>

<version>${maven-compiler-plugin.version}</version>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-failsafe-plugin</artifactId>

<version>${maven-failsafe-plugin.version}</version>

<configuration>

<parallel>methods</parallel>

<threadCount>${threads}</threadCount>

</configuration>

<executions>

<execution>

<goals>

<goal>integration-test</goal>

<goal>verify</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

TestNG supports parallel threads out of the box; we just need to tell it how to use them. This is where the Maven Failsafe Plugin comes in. We are going to use it to configure our parallel execution environment for our tests. This configuration will be applied to TestNG if you have TestNG as a dependency; you don't need to do anything special.

In our case, we are interested in parallel and the threadCount configuration settings. We have set parallel to methods. This will search through our project for methods that have the @Test annotation and will collect them all into a great big pool of tests. The Failsafe Plugin will then take tests out of this pool and run them. The number of tests that will be run concurrently will depend on how many threads are available. We will use the threadCount property to control this.

It is important to note that there is no guarantee of the order in which the tests will run.
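The pool-and-threads idea can be sketched in plain Java. This is only an illustration of the scheduling model, not how TestNG or the Failsafe Plugin are implemented; the class name TestPoolSketch, the method runAll(), and the test names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class TestPoolSketch {

    // Runs every named "test" on a pool of `threads` workers and returns the
    // names in the order in which they finished.
    static List<String> runAll(List<String> testNames, int threads) {
        List<String> finished = Collections.synchronizedList(new ArrayList<>());
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (String name : testNames) {
            // Each task stands in for one @Test method taken from the pool.
            pool.execute(() -> finished.add(name));
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("Tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
        return new ArrayList<>(finished);
    }

    public static void main(String[] args) {
        List<String> tests = Arrays.asList("testA", "testB", "testC", "testD");
        // With more than one thread the printed order can change between runs.
        System.out.println(runAll(tests, 2));
    }
}
```

Every task runs exactly once, but with two or more threads the finishing order is not fixed, which is why tests that run in parallel should not depend on each other.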

We are using the threadCount configuration setting to control how many tests we run in parallel but, as you may have noticed, we have not specified a number. Instead, we have used the Maven variable ${threads}. This takes the value of the Maven property threads that we defined in our properties block and passes it into threadCount.

Since threads is a Maven property, we are able to override its value on the command line by using the -D switch. If we do not override its value, it will use the value we have set in the POM as a default.

So, if we run the following command, it will use the default value of 1 in the POM file:

mvn clean verify -Dwebdriver.gecko.driver=<PATH_TO_GECKODRIVER_BINARY>

However, if we use this next command, it will override the value of 1 stored in the POM file and use the value 2 instead:

mvn clean verify -Dthreads=2 -Dwebdriver.gecko.driver=<PATH_TO_GECKODRIVER_BINARY>

As you can see, this gives us the ability to tweak the number of threads that we use to run our tests without making any code changes at all.

We have used the power of Maven and the Maven Failsafe Plugin to set the number of threads that we want to use when running our tests in parallel, but we still have more work to do!

If you run your tests right now, you will see that even though we are supplying multiple threads to our code, all the tests still run in a single thread. Selenium is not thread safe, so we need to write some code that will make sure that each Selenium instance runs in its own isolated thread and does not leak over to other threads.

Previously, we were instantiating an instance of FirefoxDriver in each of our tests. Let's pull this out of the test, and put browser instantiation into its own class called DriverFactory. We will then add a class called DriverBase that will deal with the marshaling of the threads.

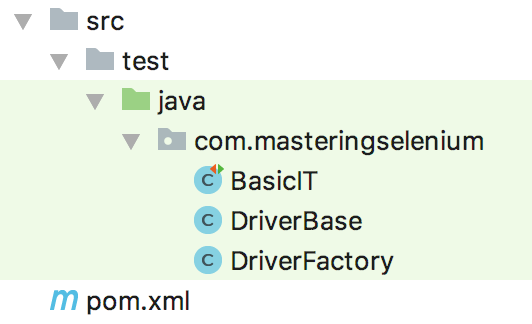

We are going to now build a project structure that looks like this:

First of all, we need to create our DriverFactory class by using the following code:

package com.masteringselenium;

import org.openqa.selenium.firefox.FirefoxDriver;

import org.openqa.selenium.remote.RemoteWebDriver;

public class DriverFactory {

private RemoteWebDriver webDriver;

private final String operatingSystem =

System.getProperty("os.name").toUpperCase();

private final String systemArchitecture =

System.getProperty("os.arch");

RemoteWebDriver getDriver() {

if (null == webDriver) {

System.out.println(" ");

System.out.println("Current Operating System: " +

operatingSystem);

System.out.println("Current Architecture: " +

systemArchitecture);

System.out.println("Current Browser Selection: Firefox");

System.out.println(" ");

webDriver = new FirefoxDriver();

}

return webDriver;

}

void quitDriver() {

if (null != webDriver) {

webDriver.quit();

webDriver = null;

}

}

}

This class holds a reference to a WebDriver object, and ensures that every time you call getDriver() you get a valid instance of WebDriver back. If one has been started up, you will get the existing one. If one hasn't been started up, it will start one for you.

It also provides a quitDriver() method that calls quit() on your WebDriver object and then sets the reference held in the class to null. This prevents errors that would be caused by attempting to interact with a WebDriver object that has been closed.
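The lifecycle described above (start on first use, reuse afterwards, drop the reference on quit) can be tried without a real browser. The following is a minimal sketch in which FakeBrowser, LazyResourceSketch, getBrowser(), and quitBrowser() are hypothetical stand-ins for the WebDriver object and the DriverFactory methods.

```java
public class LazyResourceSketch {

    // Hypothetical stand-in for a browser session; no real browser is started.
    static final class FakeBrowser {
        private boolean open = true;

        boolean isOpen() {
            return open;
        }

        void quit() {
            open = false;
        }
    }

    private FakeBrowser browser;

    // Starts a browser on first use, then keeps handing back the same one.
    FakeBrowser getBrowser() {
        if (browser == null) {
            browser = new FakeBrowser();
        }
        return browser;
    }

    // Quits the browser and drops the reference, so the next getBrowser()
    // call starts a fresh session instead of returning a closed one.
    void quitBrowser() {
        if (browser != null) {
            browser.quit();
            browser = null;
        }
    }

    public static void main(String[] args) {
        LazyResourceSketch factory = new LazyResourceSketch();
        FakeBrowser first = factory.getBrowser();
        System.out.println(first == factory.getBrowser()); // prints "true"

        factory.quitBrowser();
        FakeBrowser second = factory.getBrowser();
        System.out.println(second != first && second.isOpen()); // prints "true"
    }
}
```

If quitBrowser() did not set the field to null, the second getBrowser() call would hand back the closed session, which is the error the nullification avoids.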

Next, we need to create a class called DriverBase by using this code:

package com.masteringselenium;

import org.openqa.selenium.remote.RemoteWebDriver;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.BeforeSuite;

public class DriverBase {

private static ThreadLocal<DriverFactory> driverThread;

@BeforeSuite(alwaysRun = true)

public static void instantiateDriverObject() {

driverThread = new ThreadLocal<DriverFactory>() {

@Override

protected DriverFactory initialValue() {

return new DriverFactory();

}

};

}

public static RemoteWebDriver getDriver() {

return driverThread.get().getDriver();

}

@AfterMethod(alwaysRun = true)

public static void quitDriver() {

driverThread.get().quitDriver();

}

}

This is a small class that will hold a pool of driver objects. We are using a ThreadLocal object to give each thread its own DriverFactory instance. We have also created a getDriver() method that uses the getDriver() method on the DriverFactory object to pass each test a WebDriver instance that it can use.

We are doing this to isolate each instance of WebDriver to make sure that there is no cross contamination between tests. When our tests start running in parallel, we don't want different tests to start firing commands to the same browser window. Each instance of WebDriver is now safely locked away in its own thread.
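The isolation that ThreadLocal provides can be checked with a small standalone program. This is a sketch under stated assumptions: FakeDriverFactory is a hypothetical stand-in for DriverFactory, and the helper methods currentFactory() and factoryFromNewThread() exist only for this illustration.

```java
public class ThreadLocalIsolationSketch {

    // Hypothetical stand-in for DriverFactory; it holds no real browser.
    static final class FakeDriverFactory {
    }

    // Each thread that calls get() lazily receives its own instance.
    private static final ThreadLocal<FakeDriverFactory> FACTORY =
            ThreadLocal.withInitial(FakeDriverFactory::new);

    // Returns the factory that belongs to the calling thread.
    static FakeDriverFactory currentFactory() {
        return FACTORY.get();
    }

    // Starts a new thread and returns the factory that thread received.
    static FakeDriverFactory factoryFromNewThread() {
        FakeDriverFactory[] holder = new FakeDriverFactory[1];
        Thread worker = new Thread(() -> holder[0] = currentFactory());
        worker.start();
        try {
            worker.join(); // join() makes the worker's write visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
        return holder[0];
    }

    public static void main(String[] args) {
        // The same thread always sees the same instance: prints "true".
        System.out.println(currentFactory() == currentFactory());
        // A different thread sees a different instance: prints "true".
        System.out.println(currentFactory() != factoryFromNewThread());
    }
}
```

Repeated calls on one thread return the same object, while another thread gets its own, which is the property that keeps two parallel tests from driving the same browser window.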

Since we are using this factory class to start up all our browser instances, we need to make sure that we close them down as well. To do this, we have created a method with an @AfterMethod annotation that quits the driver after each test has run. This has the added advantage of cleaning up when a test fails to reach the line where it would normally call driver.quit(), for example, if an error in the test caused it to fail and finish early.

Note that our @AfterMethod and @BeforeSuite annotations have the alwaysRun = true parameter set on them. This makes sure that these methods are always run. For example, with our @AfterMethod annotation, it makes sure that we call quit() on the driver even if a test fails. This shuts down our driver instance, which in turn closes the browser, and should reduce the chance of browser windows being left open after a test run in which some of your tests failed.

All that is left now is to clean up the code in our basicTest class and change its name to BasicIT. Why have we changed the name of the test? We are going to use the maven-failsafe-plugin to run our tests in the integration-test phase, and by default this plugin picks up classes whose names end in IT. If we left the class with a name ending in Test, it would be picked up by the maven-surefire-plugin instead, which should really be reserved for unit tests. We want the maven-failsafe-plugin to run our tests, so we will use this code:

package com.masteringselenium;

import org.openqa.selenium.By;

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.WebElement;

import org.openqa.selenium.support.ui.ExpectedCondition;

import org.openqa.selenium.support.ui.WebDriverWait;

import org.testng.annotations.Test;

public class BasicIT extends DriverBase {

private ExpectedCondition<Boolean> pageTitleStartsWith(final

String searchString) {

return driver -> driver.getTitle().toLowerCase()

.startsWith(searchString.toLowerCase());

}

private void googleExampleThatSearchesFor(final String

searchString) {

WebDriver driver = DriverBase.getDriver();

driver.get("http://www.google.com");

WebElement searchField = driver.findElement(By.name("q"));

searchField.clear();

searchField.sendKeys(searchString);

System.out.println("Page title is: " + driver.getTitle());

searchField.submit();

WebDriverWait wait = new WebDriverWait(driver, 10, 100);

wait.until(pageTitleStartsWith(searchString));

System.out.println("Page title is: " + driver.getTitle());

}

@Test

public void googleCheeseExample() {

googleExampleThatSearchesFor("Cheese!");

}

@Test

public void googleMilkExample() {

googleExampleThatSearchesFor("Milk!");

}

}

We have modified our basic test so that it extends DriverBase. Instead of instantiating a new FirefoxDriver in the test, we are calling DriverBase.getDriver() to get a valid WebDriver instance. Finally, we have removed the driver.quit() from our generic method as this is all done by our DriverBase class now.
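The naming rule behind the rename to BasicIT can be sketched as a pair of checks. This is a simplification that assumes the plugins' documented default include patterns and looks only at the simple class name; the real plugins match file paths and can be reconfigured. TestNamingSketch and its two methods are hypothetical names used only for this illustration.

```java
public class TestNamingSketch {

    // Assumed Failsafe default includes: **/IT*.java, **/*IT.java, **/*ITCase.java
    static boolean pickedUpByFailsafe(String className) {
        return className.startsWith("IT")
                || className.endsWith("IT")
                || className.endsWith("ITCase");
    }

    // Assumed Surefire default includes:
    // **/Test*.java, **/*Test.java, **/*Tests.java, **/*TestCase.java
    static boolean pickedUpBySurefire(String className) {
        return className.startsWith("Test")
                || className.endsWith("Test")
                || className.endsWith("Tests")
                || className.endsWith("TestCase");
    }

    public static void main(String[] args) {
        for (String name : new String[] {"basicTest", "BasicIT"}) {
            System.out.println(name
                    + " surefire=" + pickedUpBySurefire(name)
                    + " failsafe=" + pickedUpByFailsafe(name));
        }
    }
}
```

Under these assumptions, basicTest matches only the Surefire patterns and BasicIT matches only the Failsafe patterns, so the rename moves the class from the unit test phase to the integration-test phase.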

If we spin up our test again using this command, you won't notice any difference:

mvn clean verify -Dwebdriver.gecko.driver=<PATH_TO_GECKODRIVER_BINARY>

However, if you now specify some threads by running this command, you will see that, this time, two Firefox browsers open, both tests run in parallel, and then both browsers are closed again:

mvn clean verify -Dthreads=2 -Dwebdriver.gecko.driver=<PATH_TO_GECKODRIVER_BINARY>

To confirm that the FirefoxDriver instances are running in different threads, you can print the current thread ID from each test.
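One way to make the thread visible is a small helper that builds a log line from the current thread ID. This is a minimal sketch; ThreadIdSketch and describeCurrentThread() are hypothetical names, and in the test class the same expression could be printed from inside googleExampleThatSearchesFor().

```java
public class ThreadIdSketch {

    // Builds a log line that identifies the thread a test is running on.
    static String describeCurrentThread(String testName) {
        return testName + " is running on thread " + Thread.currentThread().getId();
    }

    public static void main(String[] args) throws InterruptedException {
        // Two threads stand in for two tests that run in parallel.
        Thread cheese = new Thread(
                () -> System.out.println(describeCurrentThread("googleCheeseExample")));
        Thread milk = new Thread(
                () -> System.out.println(describeCurrentThread("googleMilkExample")));
        cheese.start();
        milk.start();
        cheese.join();
        milk.join();
    }
}
```

The two lines printed by main() carry different thread IDs, although the order in which they appear can vary between runs.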

As you may have noticed, with two very small tests such as the ones we are using in our example, you will not see a massive decrease in the time taken to run the complete suite. This is because most of the time is spent compiling the code and loading up browsers, but as you add more tests the decrease in time taken to run the tests becomes more and more apparent.

So, how can we speed things up even more? Well, starting up a web browser is a computationally intensive task, so we could choose to not close the browser after every test. This obviously has some side effects. You may not be at the usual entry page to your application, and you may have some session information that is not wanted.

If there is a risk of side effects, why are we contemplating it? The reason is, quite simply, speed. Let's imagine we have a suite of fifty tests. If you spend 10 seconds loading up and shutting down a browser for each test that you run, that adds up to 500 seconds, so reusing browsers will dramatically reduce the time the suite takes. If we spend only 10 seconds starting up and shutting down a browser for all fifty tests, we have shaved eight minutes and 10 seconds off our total test time.
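The arithmetic behind that figure can be written out as a short calculation. The class and method names below are hypothetical, and the model assumes a single browser that is started and stopped once for the whole suite.

```java
public class BrowserReuseSavings {

    // Seconds saved by starting one browser for the whole suite instead of
    // starting and stopping a browser for every test.
    static int secondsSaved(int tests, int secondsPerBrowserCycle) {
        int restartForEveryTest = tests * secondsPerBrowserCycle;
        int reuseOneBrowser = secondsPerBrowserCycle;
        return restartForEveryTest - reuseOneBrowser;
    }

    // Formats a number of seconds as minutes and seconds.
    static String format(int seconds) {
        return (seconds / 60) + " min " + (seconds % 60) + " s";
    }

    public static void main(String[] args) {
        int saved = secondsSaved(50, 10); // 50 * 10 - 10 = 490 seconds
        System.out.println(format(saved)); // prints "8 min 10 s"
    }
}
```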

Let's try it and see how it works for us. First, we will try to deal with our session problem. WebDriver has a command that will allow you to clear out your cookies, so we will trigger this after every test. We will then add a method with an @AfterSuite annotation to close the browser once all of the tests have finished. Take a look at the following code:

package com.masteringselenium;

import org.openqa.selenium.remote.RemoteWebDriver;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.AfterSuite;

import org.testng.annotations.BeforeSuite;

import java.util.ArrayList;

import java.util.Collections;

import java.util.List;

public class DriverBase {

private static List<DriverFactory> webDriverThreadPool =

Collections.synchronizedList(new ArrayList<DriverFactory>());

private static ThreadLocal<DriverFactory> driverThread;

@BeforeSuite(alwaysRun = true)

public static void instantiateDriverObject() {

driverThread = new ThreadLocal<DriverFactory>() {

@Override

protected DriverFactory initialValue() {

DriverFactory webDriverThread = new DriverFactory();

webDriverThreadPool.add(webDriverThread);

return webDriverThread;

}

};

}

public static RemoteWebDriver getDriver() {

return driverThread.get().getDriver();

}

@AfterMethod(alwaysRun = true)

public static void clearCookies() {

try {

getDriver().manage().deleteAllCookies();

} catch (Exception ex) {

System.err.println("Unable to delete cookies: " + ex);

}

}

@AfterSuite(alwaysRun = true)

public static void closeDriverObjects() {

for (DriverFactory webDriverThread : webDriverThreadPool) {

webDriverThread.quitDriver();

}

}

}

The first addition to our code is a synchronized list where we can store all our instances of DriverFactory. We have then modified our initialValue() method to add each DriverFactory instance that we create to this new synchronized list. We have done this so that we can keep track of the instance created for each thread.

Next, our teardown method is now annotated with @AfterSuite and is called closeDriverObjects(), to keep the method names as descriptive as possible. It does not just close down the instance of WebDriver that we are using, as the previous version did. Instead, it iterates through our webDriverThreadPool list, closing every threaded instance that we are keeping track of.

We don't actually know how many threads we are going to run, since this is controlled by Maven. This is not an issue, though, as the code has been written so that we don't need to know. What we do know is that when our tests are finished, each WebDriver instance will be closed down cleanly and without errors, all thanks to the use of the webDriverThreadPool list.
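The tracking pattern can be exercised without Selenium. The following is a sketch only: FakeFactory is a hypothetical stand-in for DriverFactory, an ExecutorService stands in for the threads that Maven and TestNG supply, and runTasks(), closeAll(), and allClosed() are names invented for this illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class TrackedPoolSketch {

    // Hypothetical stand-in for DriverFactory.
    static final class FakeFactory {
        private volatile boolean closed;

        void quit() {
            closed = true;
        }

        boolean isClosed() {
            return closed;
        }
    }

    // Every factory created for any thread is recorded here.
    private final List<FakeFactory> created =
            Collections.synchronizedList(new ArrayList<>());

    private final ThreadLocal<FakeFactory> perThread = ThreadLocal.withInitial(() -> {
        FakeFactory factory = new FakeFactory();
        created.add(factory);
        return factory;
    });

    // Runs `tasks` jobs on `threads` workers; each job touches its thread's factory.
    void runTasks(int tasks, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> perThread.get());
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("Tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
    }

    // Closes every tracked factory and returns how many there were.
    int closeAll() {
        synchronized (created) { // iteration over a synchronizedList needs the lock
            for (FakeFactory factory : created) {
                factory.quit();
            }
            return created.size();
        }
    }

    // Reports whether every tracked factory has been closed.
    boolean allClosed() {
        synchronized (created) {
            return created.stream().allMatch(FakeFactory::isClosed);
        }
    }

    public static void main(String[] args) {
        TrackedPoolSketch sketch = new TrackedPoolSketch();
        sketch.runTasks(8, 3);
        System.out.println("Factories closed: " + sketch.closeAll());
    }
}
```

The cleanup code never needs to be told how many threads were used; it simply closes whatever the list recorded, which mirrors the role of webDriverThreadPool.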

Finally, we have added an @AfterMethod method called clearCookies() that will clear down the browser's cookies after each test. This should reset the browser to a neutral state without closing it, so that we can start another test safely.