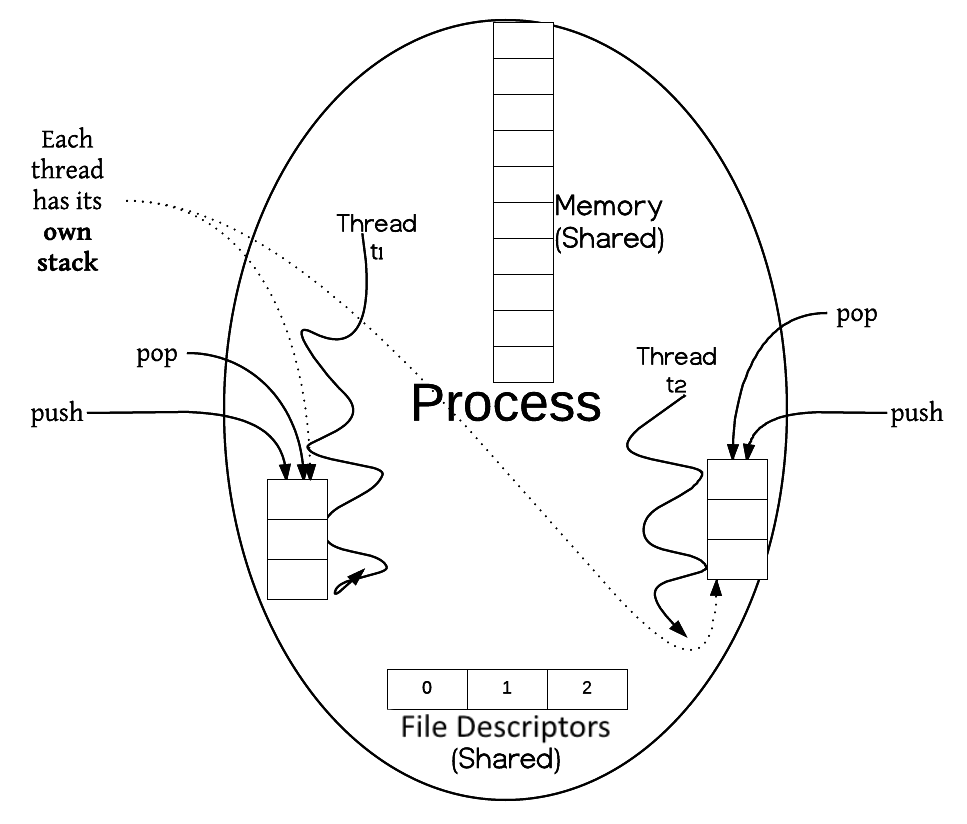

What if we write a multithreaded program to achieve the same result? A thread of execution is a sequence of program instructions, scheduled and managed by the operating system. A process can contain multiple threads; in other words, a process is a container for concurrently executing threads, as shown in the following diagram:

As shown in the preceding diagram, multiple threads share the process memory. By default, two concurrently running processes do not share memory or other resources, such as file descriptors. In other words, each process has its own address space, while the threads within a process share that process's address space. Each thread also has a stack of its own. This stack holds the return addresses for procedure calls, and locally scoped variables are also created on it. The relationships between these elements are shown in the following diagram:

As shown in the preceding diagram, both threads communicate via the process's global memory. The producer thread, t1, enters the filenames into a FIFO (first in, first out) queue. The consumer thread, t2, picks up the queue entries whenever it is ready for them.

What does this data structure do? It works on a principle similar to that of the aforementioned pipe. The producer adds items as quickly or as slowly as it can produce them. Likewise, the consumer thread takes entries from the queue as it needs them. Both work at their own pace, and neither needs to know about the other.
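The producer and consumer arrangement described above can be sketched in a few lines of Python. This is only an illustration, not the program the text refers to: the function names, the sample filenames, and the `SENTINEL` end-of-stream marker are all assumptions made for the example. It relies on the standard library's `queue.Queue`, which is a thread-safe FIFO queue.

```python
import queue
import threading

# Hypothetical end-of-stream marker; the text does not say how the
# consumer learns that the producer has finished.
SENTINEL = None


def producer(q, filenames):
    """Thread t1: put each filename on the shared queue, at its own pace."""
    for name in filenames:
        q.put(name)
    q.put(SENTINEL)  # tell the consumer there is nothing more to come


def consumer(q, seen):
    """Thread t2: take entries off the queue as they become available."""
    while True:
        name = q.get()  # blocks until an entry is available
        if name is SENTINEL:
            break
        seen.append(name)


def run(filenames):
    """Start both threads, wait for them, and return what t2 consumed."""
    q = queue.Queue()  # FIFO queue living in the process's shared memory
    seen = []
    t1 = threading.Thread(target=producer, args=(q, filenames))
    t2 = threading.Thread(target=consumer, args=(q, seen))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return seen


if __name__ == "__main__":
    print(run(["a.txt", "b.txt", "c.txt"]))
```

Because there is a single producer and a single consumer, the entries come out in the order in which they went in. Neither thread refers to the other; the queue is the only thing they share.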

Exchanging information this way looks simpler. However, it brings with it a host of problems. Access to the shared data structure needs to be synchronized correctly. This is surprisingly hard to achieve. The next few sections will deal with the various issues that crop up. We will also see the various paradigms that shy away from the shared state model and tilt towards message passing.
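To make the synchronization requirement concrete, here is a minimal sketch in Python of several threads updating one shared value. The `Counter` class and the `count_in_parallel` helper are hypothetical names introduced only for this illustration. An increment is a read, an add, and a write; if two threads interleave those steps without coordination, an update can be lost. The sketch guards the increment with a `threading.Lock` so that only one thread performs it at a time.

```python
import threading


class Counter:
    """Hypothetical shared state: one integer guarded by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The read-modify-write below must not interleave with the same
        # steps running in another thread, so it is done under the lock.
        with self._lock:
            self.value += 1


def count_in_parallel(num_threads, increments_per_thread):
    """Run several threads that all increment the same Counter."""
    counter = Counter()

    def worker():
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(count_in_parallel(4, 10_000))
```

With the lock in place, the final value equals the total number of increments requested. Every piece of code that touches `value` has to follow the same locking discipline, which is part of what makes the shared state model hard to get right.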