Setting up a basic cluster

This section helps you build a shopping list of the components you need and provides step-by-step instructions to set up a basic Proxmox cluster. The steps here are simplified to get you up and running quickly. More detailed installation instructions are available in the Proxmox Wiki at http://pve.proxmox.com/wiki/Installation.

To provide shared storage for the Proxmox cluster, we are going to use Ubuntu or FreeNAS. Other options include OpenMediaVault, NAS4Free, GlusterFS, and DRBD, to name a few. FreeNAS is an excellent choice for shared storage because of its ZFS filesystem implementation, simple installation, large active community, and lack of licensing cost. Although we use FreeNAS in this book, you can use just about any shared storage that supports NFS and iSCSI. A FreeNAS installation guide is beyond the scope of this book.

Note

For complete setup instructions for FreeNAS, visit http://doc.freenas.org/index.php/Installing_from_CDROM.

Ubuntu is also a great choice for learning how shared storage works with Proxmox. Almost anything you can set up with FreeNAS, you can also set up in Ubuntu. The main difference is that Ubuntu Server lacks the user-friendly graphical interface of FreeNAS. Later in the book, we will look at the ultimate shared storage solution using Ceph. To get our first basic cluster up and running, though, we will use Ubuntu or FreeNAS to set up an NFS and iSCSI share.

Note

For installation instructions for Ubuntu server, visit http://www.ubuntu.com/download/server.

The hardware list

The following is a list of hardware components we need to put together our first basic Proxmox cluster. If you already have components you would like to use, check whether they support virtualization. Not all hardware platforms do, especially older ones. For details on how to check your components, visit http://virt-tools.org/learning/check-hardware-virt/.

A quicker way to check is to enter the BIOS and look for one of the following settings. Whichever of these appears must be set to Enabled for the hypervisor to work:

- Intel® Virtualization Technology

- Virtualization Technology (VT-x)

- Virtualization
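If you can boot a Linux system on the hardware, the CPU flags give you a quick cross-check alongside the BIOS. The following shell sketch assumes a Linux kernel that exposes /proc/cpuinfo. The function name has_hw_virt is our own. It only reports whether the processor advertises Intel VT-x (the vmx flag) or AMD-V (the svm flag). The BIOS setting above must still be Enabled.

```shell
# Succeed if the CPU advertises hardware virtualization support.
# Intel VT-x appears as the "vmx" flag and AMD-V as "svm" in /proc/cpuinfo.
# The file path is a parameter so the check can also run on a saved copy.
has_hw_virt() {
    cpuinfo=${1:-/proc/cpuinfo}
    # -q: exit status only; -w: whole words; -E: extended regex alternation
    grep -qwE 'vmx|svm' "$cpuinfo"
}

# Example, on the machine being evaluated:
#   if has_hw_virt; then echo "VT-x/AMD-V advertised"; else echo "not advertised"; fi
```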

Note

This hardware list builds a bare-minimum Proxmox cluster for learning purposes only. It is not suitable for enterprise-class infrastructure.

| Component type | Brand/model | Quantity |
|---|---|---|
| CPU/Processor | Intel i3-2120 3.30 GHz, 2 cores/4 threads | 2 |
| Motherboard | Asus P8B75-M/CSM | 2 |
| RAM | Kingston 8 GB 1600 MHz DDR3 240-pin non-ECC | 3 |
| HDD | Seagate Momentus 250 GB 2.5" SATA | 2 |
| USB stick | Patriot Memory 4 GB | 1 |
| Power supply | 300+ watt | 3 |
| LAN switch | Netgear GS108NA 8-port Gigabit switch | 1 |

The software list

Download the ISO images of the software in the following table from their respective websites, and then create a CD from each image.

| Software | Download link |
|---|---|
| Proxmox VE | |
| FreeNAS | |
| Ubuntu Server | |
| ClearOS Community | |

Hardware setup

The following diagram shows the network layout of a basic Proxmox cluster. We will start with a two-node cluster and one shared storage node running either Ubuntu or FreeNAS. The setup in the illustration is only a guideline. Depending on your experience, budget, and available hardware, you can set it up any way you see fit. Whatever setup you use, it should meet the following requirements:

- Two Proxmox nodes with two Network Interface Cards

- One shared storage with NFS and iSCSI connectivity

- One physical firewall

- One 8+ port physical switch

- One KVM virtual machine

- One OpenVZ/container machine

This book is intended for users beyond the beginner level, so the hardware assembly process is not covered in detail here. After you connect all the equipment, the setup should resemble the following diagram:

Proxmox installation

Perform the following simple steps to install Proxmox VE on the Proxmox nodes:

- Assemble all three nodes with the proper components, and connect all of them to the LAN switch.

- Power up the first node and enter the BIOS to make the necessary changes, such as enabling virtualization.

- Boot the node from the Proxmox installation disc.

- Follow the Proxmox graphical installation process. When prompted, enter the IP address 192.168.145.1 (or an address in any other subnet you wish) and the hostname pmxvm01.domain.com (or any other hostname you choose).

- Repeat the previous two steps for the second node, using the IP address 192.168.145.2 and the hostname pmxvm02.domain.com, or your own choices.

Cluster creation

We are now going to create a Proxmox cluster from the two Proxmox nodes we just installed. From the admin PC (Linux or Windows), log in to Proxmox node #1 (pmxvm01) over SSH. If the admin PC runs Windows, use a program such as PuTTY to log in to the Proxmox node remotely.

Note

Download PuTTY from http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html.

On Linux, use the following command to log in to the Proxmox node securely:

# ssh root@192.168.145.1

After logging in, it is now time to create our cluster. The command to create a Proxmox cluster is as follows:

# pvecm create <cluster_name>

This command can be run on any one of the Proxmox nodes, but only once per cluster.

Tip

Never run a cluster creation command on more than one node in the same cluster. The cluster creation process must be completed on one node before adding nodes to the cluster.
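One way to avoid running the creation command twice is to check for an existing cluster configuration first. The following sketch is a hypothetical wrapper, not part of Proxmox. It assumes that a node already in a cluster has the /etc/cluster/cluster.conf file that pvecm create writes on this Proxmox version. The name create_cluster_once is our own.

```shell
# Hypothetical wrapper (not a Proxmox tool): run "pvecm create" only when
# this node has no cluster configuration yet.
create_cluster_once() {
    name=$1
    conf=${2:-/etc/cluster/cluster.conf}   # file written by pvecm create
    if [ -z "$name" ]; then
        echo "usage: create_cluster_once <cluster_name> [conf_path]" >&2
        return 2
    fi
    if [ -e "$conf" ]; then
        echo "refusing: $conf exists, this node already belongs to a cluster" >&2
        return 1
    fi
    pvecm create "$name"
}

# Example, on the first node only:
#   create_cluster_once pmx-cluster
```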

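The cluster does not operate on a master/slave basis but on quorum. A majority of the cluster's votes, one per node by default, must be present for the cluster to stay healthy. In a two-node cluster, that means both nodes need to be online. Let's execute the following command and create the cluster: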

root@pmxvm01:~# pvecm create pmx-cluster

The preceding command will display the following messages on the screen as it creates a new cluster and activates it:

Restarting pve cluster filesystem: pve-cluster[dcdb] notice: wrote new cluster config '/etc/cluster/cluster.conf'
Starting cluster:
   Checking if cluster has been disabled at boot... [ OK ]
   Checking Network Manager... [ OK ]
   Global setup... [ OK ]
   Loading kernel modules... [ OK ]
   Mounting configfs... [ OK ]
   Starting cman... [ OK ]
   Waiting for quorum... [ OK ]
   Starting fenced... [ OK ]
   Starting dlm_controld... [ OK ]
   Tuning DLM kernel config... [ OK ]
   Unfencing self... [ OK ]
root@pmxvm01:~#

After cluster creation is complete, check its status by using the following command:

root@pmxvm01:~# pvecm status

The preceding command will display the following output:

Version: 6.2.0
Config Version: 1
Cluster Name: pmx-cluster
Cluster ID: 23732
Cluster Member: Yes
Cluster Generation: 4
Membership status: Cluster-Member
Nodes: 1
Expected votes: 1
Total votes: 1
Quorum: 1
Active subsystem: 5
Flags:
Ports Bound: 0
Node name: pmxvm01
Node ID: 1
Multicast addresses: 239.192.92.17
Node addresses: 192.168.145.1
root@pmxvm01:~#

The status output shows vital information about the health of the cluster and its member nodes. The Proxmox GUI shows cluster health visually, but the command-line output gives a more in-depth picture.
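The quorum rule can be worked out by hand. Assuming each node carries one vote (the default), the cluster needs a simple majority: quorum = floor(expected votes / 2) + 1. One node gives a quorum of 1, which matches the status output above. Two nodes give 2, so both must be online. Three nodes also give 2, so one of them may fail.

The following sketch encodes that arithmetic and checks pvecm status output read on standard input. Both function names are our own. The parser assumes the Key: value lines that pvecm status prints.

```shell
# Majority quorum, assuming one vote per node: more than half the votes.
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}

# Read "pvecm status" output on stdin; succeed if the "Total votes" value
# is at least the "Quorum" value reported alongside it.
is_quorate() {
    awk -F': *' '
        $1 == "Total votes" { total = $2 + 0 }
        $1 == "Quorum"      { quorum = $2 + 0 }   # "+ 0" keeps the leading number
        END { if (quorum > 0 && total >= quorum) exit 0; exit 1 }
    '
}

# Example, on any cluster node:
#   pvecm status | is_quorate && echo "cluster is quorate"
```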

After the cluster has been created, the next step is to add the other Proxmox nodes to it. Log in to the second node over SSH and run the following command, giving the IP address of the node where the cluster was created:

root@pmxvm02:~# pvecm add 192.168.145.1

Verify that this node is now joined with the cluster with the following command:

root@pmxvm02:~# pvecm nodes

It should print the following list of nodes that are members of the cluster we have just created:

Node  Sts   Inc   Joined               Name
   1   M   4900   2014-01-26 16:02:34  pmxvm01
   2   M   4774   2014-01-26 16:12:19  pmxvm02
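To check membership from a script, you can count the rows whose Sts column is M. The following minimal sketch assumes the column layout of pvecm nodes shown above, with a header line followed by one row per node. count_members is a hypothetical helper name.

```shell
# Count cluster members in "pvecm nodes" output read from stdin:
# skip the header line, then count rows whose second column (Sts) is "M".
count_members() {
    awk 'NR > 1 && $2 == "M" { n++ } END { print n + 0 }'
}

# Example, on any node of our two-node cluster:
#   pvecm nodes | count_members
```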

The next step is to log in to the Proxmox web GUI to view the cluster and attach shared storage. From a browser on the admin computer, use a URL in the following format to access the Proxmox graphical user interface:

https://<ip_proxmox_node>:8006

The cluster should look similar to the following screenshot:

Proxmox subscription

On a freshly installed Proxmox node, the paid, subscription-based enterprise repository is enabled by default. When you log in to the Proxmox GUI, the following message pops up after you enter your login information:

If you want to continue using Proxmox without a subscription, perform the following steps to disable the enterprise repository and enable the subscription-less repository. This needs to be done on all Proxmox nodes in the cluster.

- Run the following command to open the enterprise repository list:

# nano /etc/apt/sources.list.d/pve-enterprise.list

- Comment out the enterprise repository as follows:

#deb https://enterprise.proxmox.com/debian wheezy pve-enterprise

- Run the following command to open the main sources list:

# nano /etc/apt/sources.list

- Add the subscription-less repository as follows:

deb http://download.proxmox.com/debian wheezy pve-no-subscription
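When you have several nodes to change, the same two edits can be scripted. This sketch uses the file names and repository line given above. It assumes GNU sed, which Debian-based Proxmox nodes ship with. The function name use_no_subscription_repo is our own. Run apt-get update afterwards so the new repository is read.

```shell
# Disable the enterprise repository and add the no-subscription one.
# Paths default to the files named above; pass copies to try it safely.
# Assumes GNU sed for in-place editing (-i).
use_no_subscription_repo() {
    ent=${1:-/etc/apt/sources.list.d/pve-enterprise.list}
    src=${2:-/etc/apt/sources.list}
    repo='deb http://download.proxmox.com/debian wheezy pve-no-subscription'

    # Comment out every active "deb " line in the enterprise list.
    sed -i 's/^deb /#deb /' "$ent" || return 1

    # Append the repository line only if an identical line is not present,
    # so running the function twice does not duplicate it.
    grep -qxF "$repo" "$src" || echo "$repo" >> "$src"
}

# On each node, as root:
#   use_no_subscription_repo && apt-get update
```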

Attaching shared storage

A Proxmox cluster can function with local storage just fine. However, shared storage has many advantages over local storage, especially once migration and disaster-related downtime enter the picture. Live migration of a powered-on virtual machine is not possible without shared storage. We will start our journey into Proxmox with NFS/iSCSI shared storage provided by Ubuntu or FreeNAS.

Tip

Pause here and set up the third node with Ubuntu or FreeNAS. Both the Ubuntu and FreeNAS websites have complete instructions to get you up and running.

After you have set up the shared storage server of your choice, attach the storage to Proxmox by navigating to Datacenter | Storage. Create the three shares shown in the following table:

| Share ID | Share type | Content | Purpose |
|---|---|---|---|
| ISO-nfs-01 | NFS | ISO, templates | To store ISO images |
| vm-nfs-01 | NFS | Image, containers | To store VM with the |
| nas-lvm-01 | iSCSI | Image | To store a raw VM |
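Behind the GUI, Proxmox records each storage definition in /etc/pve/storage.cfg. For reference, the following sketch prints an NFS stanza in that file's format. Several details are our own assumptions:

- The server address and export paths in the example are placeholders for your own storage node.
- The content keywords are our mapping of the table's Content column: iso and vztmpl for ISO images and container templates, images and rootdir for VM disks and containers.
- The iSCSI/LVM share is not covered.

Adding storage through Datacenter | Storage remains the straightforward route. nfs_storage_stanza is a hypothetical helper name.

```shell
# Print an /etc/pve/storage.cfg stanza for an NFS storage.
# $1 storage ID, $2 NFS server address, $3 exported path, $4 content types
nfs_storage_stanza() {
    printf 'nfs: %s\n' "$1"
    printf '\tpath /mnt/pve/%s\n' "$1"    # local mount point used by Proxmox
    printf '\tserver %s\n' "$2"
    printf '\texport %s\n' "$3"
    printf '\tcontent %s\n' "$4"
}

# Example (address and export paths are placeholders):
#   nfs_storage_stanza ISO-nfs-01 192.168.145.10 /mnt/tank/iso iso,vztmpl
#   nfs_storage_stanza vm-nfs-01  192.168.145.10 /mnt/tank/vm  images,rootdir
```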

After setting up both the NFS and iSCSI shares, the Proxmox GUI should look like the following screenshot:

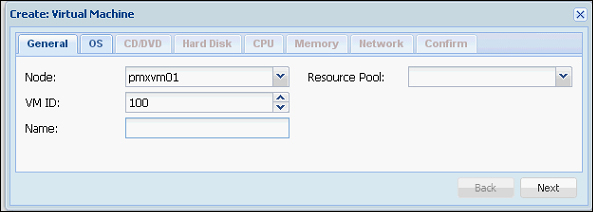

Adding virtual machines

With our cluster up and running, it is time to add some virtual machines to it. Click on Create VM to start creating a KVM virtual machine. The virtual machine creation window looks like the following screenshot:

Main virtual machine

The virtual machine we are going to create will act as the main server for the rest of the virtual machines in the cluster, providing services such as DHCP and DNS. You can use any Linux flavor you are familiar with to set up the DHCP/DNS server. The ClearOS Community edition is a great choice, since it puts all these services in one machine and works very well.

Note

ClearOS is an open source, server-in-a-box Linux distribution, which means it can do the work of multiple servers or services in one setup. ClearOS is a Linux-based replacement for Windows Small Business Server. Learn more and download it from http://www.clearfoundation.com/Software/overview.html.

Before creating a KVM-based virtual machine from scratch, we have to upload an ISO image of an operating system to Proxmox. The same applies to any ISO image we want users to have access to, such as the installation disc for Microsoft Office or other software. Not all storage types can store ISO images. As of this writing, only local Proxmox storage, NFS, Ceph FS, and GlusterFS can be used for them. To upload an ISO image, perform the following steps:

- Select the appropriate storage from the Datacenter or Storage view in the Proxmox GUI.

- Click on the Content tab.

- Click on the Upload button to open the upload dialog box as shown in the following screenshot:

- Click on the Select File… button to select the ISO image, and then click on the Upload button. After uploading, the ISO will show up on the content page as shown in the following screenshot:

Since the upload happens through the browser, uploading a large ISO file may cause a timeout error. In these cases, use a client program such as FileZilla to upload the ISO image. Usually, the Proxmox directory for uploading ISO files is /mnt/pve/<storage_name>/template/iso.
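From a Linux admin PC, scp can serve the same purpose as FileZilla. The sketch below assumes SSH access as root to the node. It also assumes the storage is mounted under the /mnt/pve/<storage_name>/template/iso path given above. The function names and the ISO file name in the example are hypothetical.

```shell
# Directory holding ISO images on a Proxmox storage, following the
# /mnt/pve/<storage_name>/template/iso layout described above.
iso_dir() {
    echo "/mnt/pve/$1/template/iso"
}

# Copy an ISO to that directory on a node over SSH, avoiding browser
# upload timeouts. Arguments: local ISO file, node address, storage ID.
upload_iso() {
    scp "$1" "root@$2:$(iso_dir "$3")/"
}

# Example (file name is a placeholder):
#   upload_iso clearos-community-6.iso 192.168.145.1 ISO-nfs-01
```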

After the ISO image is in place, we can proceed with KVM virtual machine creation using the configuration in the following table:

| VM creation tab | Specification | Selection |
|---|---|---|
| General | Node | pmxvm01 |
| | Virtual machine ID | 101 |
| | Virtual machine name | pmxMS01 |
| OS | Linux/other OS types | Linux 3.x/2.6 Kernel |
| CD/DVD | Use CD/DVD disc image file | ClearOS 6 Community |
| Hard disk | Bus/device | virtio |
| | Storage | vm-nfs-01 |
| | Disk size (GB) | 25 |
| | Format | QEMU image (qcow2) |
| CPU | Sockets | 1 |
| | Cores | 1 |
| | Type | Default (kvm64) |
| Memory | Automatically allocate memory within range | Maximum 1024 MB, minimum 512 MB |
| Network | Bridged mode | vmbr0 |
| | Model | Intel E1000 / VirtIO |
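The same virtual machine can also be defined from the command line with qm, the Proxmox KVM management tool. The following sketch only prints the command, so you can review it before running it on a node. The option mapping is our reading of the table:

- ostype l26 corresponds to Linux 3.x/2.6 Kernel.
- memory and balloon are the maximum and minimum of the automatic memory range.
- virtio is chosen as the network model, with e1000 as the alternative.

The ISO volume name is a placeholder for the ClearOS image you uploaded. Check the qm documentation for your Proxmox version before relying on it. main_vm_cmd is a hypothetical helper name.

```shell
# Print (do not run) a "qm create" command matching the table above.
main_vm_cmd() {
    echo qm create 101 \
        --name pmxMS01 \
        --ostype l26 \
        --ide2 ISO-nfs-01:iso/clearos-community-6.iso,media=cdrom \
        --virtio0 vm-nfs-01:25,format=qcow2 \
        --sockets 1 --cores 1 --cpu kvm64 \
        --memory 1024 --balloon 512 \
        --net0 virtio,bridge=vmbr0
}

# Review the output, then on a Proxmox node:
#   main_vm_cmd | sh
```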

Creating a KVM virtual machine

After the main server is set up, we are going to create a second virtual machine with Ubuntu as the operating system. Proxmox has a cloning feature that saves a lot of time when deploying VMs with the same operating system and configuration. We will use this Ubuntu virtual machine as the template for all Linux-based VMs throughout this book. Create the Ubuntu VM with the following configuration:

| VM creation tab | Specification | Selection |
|---|---|---|
| General | Node | pmxvm01 |
| | Virtual machine ID | 201 |
| | Virtual machine name | template-Ubuntu |
| OS | Linux/other OS types | Linux 3.x/2.6 Kernel |
| CD/DVD | Use CD/DVD disc image file | Ubuntu server ISO |
| Hard disk | Bus/device | virtio |
| | Storage | vm-nfs-01 |
| | Disk size (GB) | 30 |
| | Format | QEMU image (qcow2) |
| CPU | Sockets | 1 |
| | Cores | 1 |
| | Type | Default (kvm64) |
| Memory | Automatically allocate memory within range | Maximum 1024 MB, minimum 512 MB |
| Network | Bridged mode | vmbr0 |
| | Model | Intel E1000 / VirtIO |

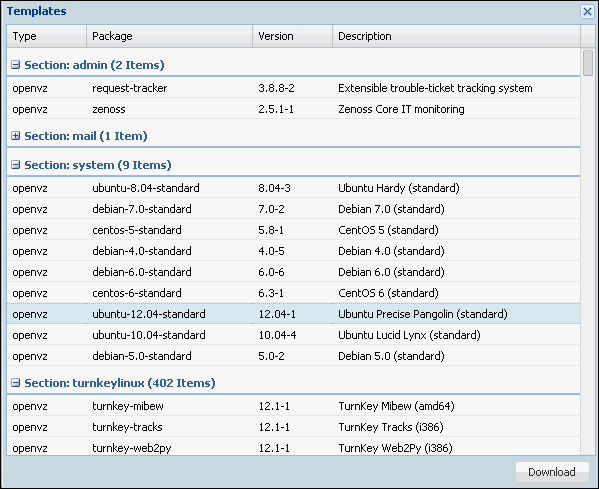

Creating an OpenVZ virtual machine

Now we will create one OpenVZ container. OpenVZ is container-based virtualization for Linux in which all containers share the host's operating system kernel. Currently, only Linux containers are possible; there are no Windows-based containers. Although OpenVZ containers act as independent virtual machines, they rely heavily on the hypervisor's underlying Linux kernel: all containers on a node run on that node's kernel, in the same version. The biggest advantage of OpenVZ containers is soft memory allocation, where memory not used by one container can be used by others. Since each container does not carry its own full operating system, container backups are much smaller than those of KVM-based virtual machines. OpenVZ is a great option for environments such as web hosting providers, where many instances run simultaneously to host client sites.

Tip

Go to http://openvz.org/Main_Page for more details on OpenVZ.

Unlike KVM virtual machines, OpenVZ containers cannot be installed from an ISO image. Proxmox uses templates to create OpenVZ containers and comes with a convenient template repository. At the time of this writing, the repository has close to 400 templates ready to download through the Proxmox GUI.

Users can also create their own templates with specific configurations. Creating your own template can be difficult and usually requires extensive knowledge of the operating system. To take the difficulty out of the equation, Proxmox provides an excellent script called Debian Appliance Builder (DAB) to create OpenVZ templates. Visit the following links before creating your own OpenVZ templates:

From the Proxmox GUI, click on the Templates button as shown in the following screenshot to open the built-in template browser dialog box and to download templates:

For our OpenVZ virtual machine lesson, we will be using the Ubuntu 12.04 template under Section: system as shown in the following screenshot:

Create the OpenVZ container using the following specifications:

| OpenVZ creation tab | Specification | Selection |
|---|---|---|
| General | Node | pmxvm01 |
| | Virtual machine ID | 121 |
| | Virtual machine hostname | ubuntuCT-01 |
| | Storage | vm-nfs-01 |
| | Password | Any password of your choice |
| Template | Storage | ISO-nfs-01 |
| | Template | ubuntu-12.04-standard |
| Resources | Memory | 1024 MB |
| | Swap | 512 MB |
| | Disk size (GB) | 30 |
| | CPUs | 1 |
| Network | Bridged mode | vmbr0 |

Tip

OpenVZ containers cannot be cloned for mass deployment. If mass deployment is required, back up the container and restore it under a different VM ID as many times as needed.
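Once a backup exists, the backup-and-restore approach can be scripted. The following sketch prints one vzrestore command per new VM ID. The archive path in the example is hypothetical, so use the file vzdump actually wrote. restore_copies_cmds is our own name. Each restored copy starts with the original container's configuration, including its hostname and network settings. Change these before starting more than one copy.

```shell
# Print (do not run) restore commands that deploy copies of one container
# backup under consecutive new VM IDs.
# Arguments: backup archive, first new VM ID, number of copies.
restore_copies_cmds() {
    archive=$1 first=$2 count=$3
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "vzrestore $archive $((first + i))"
        i=$((i + 1))
    done
}

# Example: back up container 121 first (vzdump 121 --dumpdir /var/lib/vz/dump),
# then print three restores starting at VM ID 122 (archive name is a placeholder):
#   restore_copies_cmds /var/lib/vz/dump/ct121-backup.tar 122 3
```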

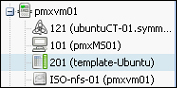

With all three of the virtual machines created, the Proxmox cluster GUI should look like the following screenshot:

Proxmox cloning/template

One of the great features of Proxmox is the ability to clone a virtual machine for mass deployment. It saves an enormous amount of time while deploying virtual machines with similar operating systems.

Introducing cloning using a template

It is entirely possible to clone a virtual machine without ever creating a template. The main advantage of a template is that it keeps virtual machines organized within the cluster.

Tip

The template used for cloning is not the same as the template required to create an OpenVZ container.

A template has a distinct icon, as seen in the following screenshot, which distinguishes it from a standard virtual machine. Just create a VM with the desired configuration, and then turn it into a template with a single mouse click. Whenever a new VM is required, just clone the template.

In a small cluster with few virtual machines, this is not an issue. In an enterprise cluster with hundreds, if not thousands, of virtual machines, however, finding the right template can become tedious. Right-clicking a VM opens a context menu showing the options related to that VM:

| Menu item | Function |
|---|---|
| Start | Starts the virtual machine. |
| Migrate | Allows online/offline migration of the virtual machine. |
| Shutdown | Safely powers down the virtual machine. |
| Stop | Powers down the virtual machine immediately. Might cause data loss. Similar to holding down the power button for 6 seconds on a physical machine. |
| Clone | Clones the virtual machine. |
| Convert to Template | Transforms a virtual machine into a template for cloning. Templates themselves cannot be used as regular virtual machines. |
| Console | Opens the virtual machine in a VNC console. |

Transforming VM into a template

Let's turn our Ubuntu virtual machine we created in the Creating a KVM virtual machine section into a template. Perform the following steps:

- Right-click on a virtual machine to open the context menu.

- Click on Convert to template as shown in the following screenshot. This will convert the VM into a template that can be used to clone an unlimited number of virtual machines. While creating a template, keep in mind that a template itself cannot be used as a virtual machine. But it can be migrated to different hosts just like a virtual machine.
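From the command line, qm template <vmid> performs the same conversion, for example qm template 201 for our Ubuntu VM. After the conversion, the VM's configuration file /etc/pve/qemu-server/<vmid>.conf contains the line template: 1. The following sketch uses that line to tell templates apart in scripts. is_template is our own helper name.

```shell
# Succeed if a Proxmox VM configuration file marks the VM as a template.
# Argument: path to the config, e.g. /etc/pve/qemu-server/201.conf
is_template() {
    grep -qx 'template: 1' "$1"
}

# Example, on a Proxmox node:
#   is_template /etc/pve/qemu-server/201.conf && echo "201 is a template"
```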

Cloning using a template

The template is now ready for cloning. Right-clicking on the template will open up the context menu, which will have only two menu options: Migrate and Clone. Click on Clone to open the template cloning option window as shown in the following screenshot:

The most important option to notice in this menu is the Mode option. A clone can be created from a template using either Full Clone or Linked Clone.

Full Clone versus Linked Clone

The following is a comparison table with features of Full Clone and Linked Clone:

| Full clone | Linked clone |
|---|---|
| Fully independent from the original VM/template | Linked with the original VM/template it was created from |
| Takes the same amount of disk space as the original VM/template | Takes less disk space than the original VM/template |
| Supported file types: | Not supported on LVM or iSCSI storage |
| If the original VM/template is lost or damaged, the cloned VM stays intact | If the original VM/template is lost, the cloned VM can no longer function. All linked VMs are connected to the original VM/template |
| Full Clone performs better than Linked Clone | Performance degrades as more linked clones share the same original VM/template |
| Full Clone takes much longer to create | Linked Clone can be created within minutes |
| Full Clone is a replica of the original | Linked Clone is created from a snapshot of the original VM/template |

From the preceding table, we can see that both Full Clone and Linked Clone have pros and cons. As a rule of thumb, if performance is the main focus, go with Full Clone. If conserving storage space is the focus, go with Linked Clone.
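The two modes map onto qm clone. Passing --full 1 requests a full clone. Leaving it out makes Proxmox attempt a linked clone when the source is a template. The following sketch only prints the command for review. clone_cmd and the VM IDs and names in the example are hypothetical.

```shell
# Print (do not run) a "qm clone" command for the chosen mode.
# Arguments: source VM/template ID, new VM ID, new name, mode (full|linked)
clone_cmd() {
    src=$1 new=$2 name=$3 mode=$4
    case $mode in
        full)   echo "qm clone $src $new --name $name --full 1" ;;  # independent copy of all disks
        linked) echo "qm clone $src $new --name $name" ;;           # shares the template's base image
        *)      echo "mode must be full or linked" >&2; return 2 ;;
    esac
}

# Example: clone_cmd 201 301 ubuntu-01 linked | sh
```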

Tip

Attention: a damaged original VM/template can render all linked VMs unusable!

VM migration

Proxmox migration allows a VM or OpenVZ container to be moved to another Proxmox node in either offline or online mode. The most common reason to migrate is a Proxmox node that needs a reboot after a major kernel update. Without migration, every reboot would be very difficult for an administrator. All running VMs would have to be stopped before the reboot, causing major downtime in a mission-critical virtual environment.

With the migration option, a running VM can be moved to another node without any downtime, and it does not experience any major slowdown during live migration. After the node reboots, simply migrate the VMs back to the original node. Offline VMs can also be moved with ease.

Proxmox takes a very minimalistic approach to the migration process. Select the destination node, select the Online check box for a live migration, and click the Migrate button. The total migration time varies with the size of the VM's virtual drive and its allocated memory.
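Before a node reboot, the same operation can be run for several VMs from the shell with qm migrate. The following sketch prints one live-migration command per VM ID, so you can review the list before piping it to sh. evacuate_cmds is our own name. It covers KVM guests only, not OpenVZ containers.

```shell
# Print (do not run) live-migration commands that move the given KVM
# guests to a target node. Arguments: target node, then one or more VM IDs.
evacuate_cmds() {
    target=$1
    shift
    for vmid in "$@"; do
        echo "qm migrate $vmid $target --online"
    done
}

# Example, on pmxvm01 before a reboot:
#   evacuate_cmds pmxvm02 101 201 | sh
```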

Tip

Live (online) migration also copies the VM's memory contents, so the more memory the VM has, the longer the migration takes.
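A rough lower bound helps set expectations. Every byte of memory must cross the network at least once, so the minimum time in seconds is memory (MB) × 8 / link speed (Mbit/s). For 1024 MB over Gigabit Ethernet (1000 Mbit/s), that is 8192 / 1000, about 8.2 seconds, which the sketch rounds up to 9. Pages the VM rewrites during the copy must be resent, so real migrations take longer. min_migration_seconds is our own name, and it ignores protocol overhead.

```shell
# Lower bound on live-migration time in whole seconds (rounded up):
# memory in MB, times 8 bits per byte, divided by link speed in Mbit/s.
min_migration_seconds() {
    mem_mb=$1 link_mbps=$2
    # Integer ceiling division: (a + b - 1) / b
    echo $(( (mem_mb * 8 + link_mbps - 1) / link_mbps ))
}

# Example: min_migration_seconds 1024 1000
```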