Naïve, advanced, and modular RAG in code

This section introduces naïve, advanced, and modular RAG through basic educational examples. The program builds keyword matching, vector search, and index-based retrieval methods. Using OpenAI’s GPT models, it generates responses based on input queries and retrieved documents.

The goal of the notebook is for a conversational agent to answer questions on RAG in general. We will build the retriever from the bottom up, from scratch, in Python and run the generator with OpenAI GPT-4o in eight sections of code divided into two parts:

Part 1: Foundations and Basic Implementation

- Environment setup for OpenAI API integration

- Generator function using GPT-4o

- Data setup with a list of documents (db_records)

- Query for user input

Part 2: Advanced Techniques and Evaluation

- Retrieval metrics to measure retrieval responses

- Naïve RAG with a keyword search and matching function

- Advanced RAG with vector search and index-based search

- Modular RAG implementing flexible retrieval methods

To get started, open RAG_Overview.ipynb in the GitHub repository. We will begin by establishing the foundations of the notebook and exploring the basic implementation.

Part 1: Foundations and basic implementation

In this section, we will set up the environment, create a function for the generator, define a function to print a formatted response, and define the user query.

The first step is to install the environment.

The section titles of the following implementation of the notebook follow the structure in the code. Thus, you can follow the code in the notebook or read this self-contained section.

1. Environment

The main package to install is OpenAI to access GPT-4o through an API:

!pip install openai==1.40.3

Make sure to freeze the OpenAI version you install. In RAG framework ecosystems, we will have to install several packages to run advanced RAG configurations. Once we have stabilized an installation, we will freeze the version of the packages installed to minimize potential conflicts between the libraries and modules we implement.

Once you have installed openai, you will have to create an account on OpenAI (if you don’t have one) and obtain an API key. Make sure to check the costs and payment plans before running the API.

Once you have a key, store it in a safe place and retrieve it when needed, for example from Google Drive, as shown in the following code:

#API Key
#Store your key in a file and read it (you can type it directly in the notebook, but it will be visible to anyone next to you)
from google.colab import drive
drive.mount('/content/drive')

You can use Google Drive or any other method you choose to store your key. You can read the key from a file, or you can also choose to enter the key directly in the code:

# Read the key from the first line of the file
with open("drive/MyDrive/files/api_key.txt", "r") as f:
    API_KEY = f.readline().strip()

#The OpenAI Key
import os
import openai
os.environ['OPENAI_API_KEY'] = API_KEY
openai.api_key = os.getenv("OPENAI_API_KEY")

With that, we have set up the main resources for our project. We will now write a generation function for the OpenAI model.

2. The generator

The code imports openai to generate content and time to measure the time the requests take:

import openai
from openai import OpenAI
import time

client = OpenAI()
gptmodel = "gpt-4o"
start_time = time.time()  # Start timing before the request

We now create a function that creates a prompt with an instruction and the user input:

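def call_llm_with_full_text(itext):
    # Join all lines to form a single string
    # (if itext is a plain string rather than a list of lines,
    # join() puts each character on its own line)
    text_input = '\n'.join(itext)
    prompt = f"Please elaborate on the following content:\n{text_input}"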

The function then tries to call gpt-4o, adding system and assistant messages that give the model additional instructions:

    try:
        response = client.chat.completions.create(
            model=gptmodel,
            messages=[
                {"role": "system", "content": "You are an expert Natural Language Processing exercise expert."},
                {"role": "assistant", "content": "1.You can explain read the input and answer in detail"},
                {"role": "user", "content": prompt}
            ],
            temperature=0.1  # Add the temperature parameter here and other parameters you need
        )
        return response.choices[0].message.content.strip()
    except Exception as e:
        return str(e)

Note that the instruction messages remain general in this scenario so that the model remains flexible. The temperature is set low, to 0.1, which makes the responses more precise and less variable. If you want the system to be more creative, you can set temperature to a higher value, such as 0.7. However, in this case, precise responses are recommended.
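One detail of call_llm_with_full_text is worth checking: it builds text_input with '\n'.join(itext), which expects a list of lines. The calls in this section pass plain strings (query and augmented_input), and join() then iterates over individual characters. The quick standard-library check below illustrates the difference; passing a one-element list such as [query] is one way to keep the sentence intact, but that is a suggestion, not what the notebook does.

```python
# What '\n'.join() does with a plain string versus a list of strings
query = "define a rag store"

# Plain string: join() iterates over its characters,
# so every character ends up on its own line
joined_from_string = "\n".join(query)

# One-element list: join() returns the sentence unchanged
joined_from_list = "\n".join([query])

print(repr(joined_from_string[:9]))  # first few characters, separated by newlines
print(repr(joined_from_list))
```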

We can use textwrap to format the response as a readable paragraph when we call the generative AI model:

import textwrap

def print_formatted_response(response):
    # Define the width for wrapping the text
    wrapper = textwrap.TextWrapper(width=80)  # Set to 80 columns wide, but adjust as needed
    wrapped_text = wrapper.fill(text=response)
    # Print the formatted response with a header and footer
    print("Response:")
    print("---------------")
    print(wrapped_text)
    print("---------------\n")

The generator is now ready to be called when we need it. Due to the probabilistic nature of generative AI models, it might produce different outputs each time we call it.

The program now implements the data retrieval functionality.

3. The Data

Data collection includes text, images, audio, and video. In this notebook, we will focus on data retrieval through naïve, advanced, and modular configurations, not data collection. We will collect and embed data later in Chapter 2, RAG Embedding Vector Stores with Deep Lake and OpenAI. As such, we will assume that the data we need has already been collected, cleaned, and split into sentences, and that the sentences have been loaded into a Python list named db_records.

This approach illustrates three aspects of the RAG ecosystem we described in The RAG ecosystem section and the components of the system described in Figure 1.3:

- The retriever (D) has three data processing components, collect (D1), process (D2), and storage (D3), which are preparatory phases of the retriever.

- The retriever query (D4) is thus independent of the first three phases (collect, process, and storage) of the retriever.

- The data processing phase will often be done independently and prior to activating the retriever query, as we will implement starting in Chapter 2.

This program assumes that data processing has been completed and the dataset is ready:

db_records = [
    "Retrieval Augmented Generation (RAG) represents a sophisticated hybrid approach in the field of artificial intelligence, particularly within the realm of natural language processing (NLP).",
    …/…

We can display a formatted version of the dataset:

import textwrap
paragraph = ' '.join(db_records)
wrapped_text = textwrap.fill(paragraph, width=80)
print(wrapped_text)

The output joins the sentences in db_records for display, as printed in this excerpt, but db_records remains unchanged:

Retrieval Augmented Generation (RAG) represents a sophisticated hybrid approach in the field of artificial intelligence, particularly within the realm of natural language processing (NLP)…

The program is now ready to process a query.

4. The query

The retriever (D4 in Figure 1.3) query process depends on how the data was processed, but the query itself is simply user input or automated input from another AI agent. We all dream of users who enter the best input into software systems, but unfortunately, in real life, unexpected inputs lead to unpredictable behaviors. We must, therefore, build systems that take imprecise inputs into account.

In this section, we will imagine a situation in which hundreds of users in an organization have heard the word “RAG” associated with “LLM” and “vector stores.” Many of them would like to understand what these terms mean to keep up with a software team that’s deploying a conversational agent in their department. After a couple of days, the terms they heard become fuzzy in their memory, so they ask the conversational agent, GPT-4o in this case, to explain what they remember with the following query:

query = "define a rag store"

Here, we simply store the main query of this program in query, which represents the junction between the retriever and the generator. It will trigger one of the RAG configurations (naïve, advanced, or modular); the choice of configuration will depend on the goals of each project.

The program takes the query and sends it to a GPT-4o model to be processed and then displays the formatted output:

# Call the function and print the result
llm_response = call_llm_with_full_text(query)
print_formatted_response(llm_response)

The output is revealing. Even the most powerful generative AI models cannot guess what a user, who knows nothing about AI, is trying to find out in good faith. In this case, GPT-4o will answer as shown in this excerpt of the output:

Response:

---------------

Certainly! The content you've provided appears to be a sequence of characters
that, when combined, form the phrase "define a rag store." Let's break it down
step by step:…

… This is an indefinite article used before words that begin with a consonant sound. - **rag**: This is a noun that typically refers to a piece of old, often torn, cloth. - **store**: This is a noun that refers to a place where goods are sold. 4. **Contextual Meaning**: - **"Define a rag store"**: This phrase is asking for an explanation or definition of what a "rag store" is. 5. **Possible Definition**: - A "rag store" could be a shop or retail establishment that specializes in selling rags,…

The output may look like a hallucination, but is it really? The user wrote the query with the good intentions of every beginner trying to learn a new topic. GPT-4o, in good faith, did what it could with the limited context it had, and its probabilistic algorithm might even produce a different response each time we run it. However, GPT-4o is wary of the query. Because the query wasn’t very clear, the model ends its response by asking the user for more context:

…Would you like more information or a different type of elaboration on this content?…

The user is puzzled, not knowing what to do, and GPT-4o is awaiting further instructions. The software team has to do something!

Generative AI is based on probabilistic algorithms. As such, the response provided might vary from one run to another, providing similar (but not identical) responses.

That is when RAG comes in to save the situation. We will leave this query as it is for the whole notebook and see if a RAG-driven GPT-4o system can do better.

Part 2: Advanced techniques and evaluation

In Part 2, we will introduce naïve, advanced, and modular RAG. The goal is to introduce the three methods, not to process complex documents, which we will implement throughout the following chapters of this book.

Let’s begin by defining retrieval metrics to measure the accuracy of the documents we retrieve.

1. Retrieval metrics

This section explores retrieval metrics, first focusing on the role of cosine similarity in assessing the relevance of text documents. Then we will implement enhanced similarity metrics by incorporating synonym expansion and text preprocessing to improve the accuracy of similarity calculations between texts.

We will explore more metrics in the Metrics calculation and display section in Chapter 7, Building Scalable Knowledge-Graph-Based RAG with Wikipedia API and LlamaIndex.

In this chapter, let’s begin with cosine similarity.

Cosine similarity

Cosine similarity measures the cosine of the angle between two vectors. In our case, the two vectors represent the user query and a document in the corpus.
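The formula behind cosine similarity is cos(θ) = (u · v) / (‖u‖ ‖v‖): the dot product of the two vectors divided by the product of their lengths. A score of 1 means the vectors point in the same direction, and 0 means they are orthogonal. Because TF-IDF weights are never negative, TF-IDF cosine scores fall between 0 and 1. The minimal pure-Python sketch below computes the formula directly; returning 0.0 for an all-zero vector is a convention chosen for this sketch.

```python
import math

def cosine(u, v):
    # cos(theta) = (u . v) / (||u|| * ||v||)
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention for an all-zero vector in this sketch
    return dot / (norm_u * norm_v)

print(cosine([1, 2, 0], [2, 4, 0]))  # same direction, different lengths
print(cosine([1, 0, 0], [0, 1, 0]))  # orthogonal vectors
```

Only the direction matters: [2, 4, 0] is twice as long as [1, 2, 0], yet the score is 1, which is why a short query and a long document can still be compared.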

The program first imports the class and function we need:

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics.pairwise import cosine_similarity

TfidfVectorizer is the class that converts text documents into a matrix of TF-IDF features. Term Frequency-Inverse Document Frequency (TF-IDF) quantifies the relevance of a word to a document in a collection, distinguishing common words from those significant to specific texts: a word’s weight grows with its frequency in the document and shrinks as more documents in the corpus contain it. cosine_similarity is the function we will use to calculate the similarity between vectors.
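The inverse-document-frequency part can be observed directly on a toy corpus (the three sentences are illustrative, not from db_records). With its default smooth_idf=True, scikit-learn computes idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t. A word present in every document therefore gets the lowest weight.

```python
import math
from sklearn.feature_extraction.text import TfidfVectorizer

corpus = ["rag store", "rag vector", "rag data"]  # toy corpus
vectorizer = TfidfVectorizer()  # defaults: smooth_idf=True, norm='l2'
vectorizer.fit(corpus)

# Map each term to its learned IDF weight
idf = {term: vectorizer.idf_[i] for term, i in vectorizer.vocabulary_.items()}
for term in sorted(idf):
    print(f"{term:8s} idf={idf[term]:.4f}")

# Recompute two weights by hand with the smoothed formula
n = len(corpus)
expected_rag = math.log((1 + n) / (1 + 3)) + 1    # "rag" appears in all 3 documents
expected_store = math.log((1 + n) / (1 + 1)) + 1  # "store" appears in 1 document
```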

calculate_cosine_similarity(text1, text2) then calculates the cosine similarity between two texts; the program calls it with the query (text1) and a record of the dataset (text2).

The function converts the query text (text1) and each record (text2) in the dataset into a vector with a vectorizer. Then, it calculates and returns the cosine similarity between the two vectors:

def calculate_cosine_similarity(text1, text2):
    vectorizer = TfidfVectorizer(
        stop_words='english',
        use_idf=True,
        norm='l2',
        ngram_range=(1, 2),  # Use unigrams and bigrams
        sublinear_tf=True,   # Apply sublinear TF scaling
        analyzer='word'      # You could also experiment with 'char' or 'char_wb' for character-level features
    )
    tfidf = vectorizer.fit_transform([text1, text2])
    similarity = cosine_similarity(tfidf[0:1], tfidf[1:2])
    return similarity[0][0]

The key parameters of this function are:

- stop_words='english': Ignores common English words to focus on meaningful content

- use_idf=True: Enables inverse document frequency weighting

- norm='l2': Applies L2 normalization to each output vector

- ngram_range=(1, 2): Considers both single words and two-word combinations

- sublinear_tf=True: Applies logarithmic term frequency scaling

- analyzer='word': Analyzes text at the word level

Cosine similarity has limitations when dealing with ambiguous queries because it strictly measures similarity based on the angle between vector representations of text. If a user asks a vague question like “What is rag?” in the program of this chapter, and the database primarily contains information on “RAG” as in “retrieval-augmented generation” for AI, not “rag cloths,” the cosine similarity score might be low. The score is low because the mathematical model lacks the contextual understanding to differentiate between the different meanings of “rag.” It only computes similarity based on the presence and frequency of the same words in both texts, without grasping the user’s intent or the broader context of the query. Thus, even if the answers provided are technically accurate within the available dataset, the cosine similarity may not accurately reflect their relevance if the query’s context isn’t well represented in the data.
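The purely lexical nature of this score is easy to demonstrate. The sketch below repeats calculate_cosine_similarity with the same settings and compares two phrases that mean roughly the same thing but share no words (the phrases are illustrative). Because TF-IDF only sees shared terms, the synonym pair scores 0.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

def calculate_cosine_similarity(text1, text2):
    # Same configuration as the function defined above
    vectorizer = TfidfVectorizer(stop_words='english', use_idf=True, norm='l2',
                                 ngram_range=(1, 2), sublinear_tf=True, analyzer='word')
    tfidf = vectorizer.fit_transform([text1, text2])
    return cosine_similarity(tfidf[0:1], tfidf[1:2])[0][0]

same_words = calculate_cosine_similarity("rag store", "rag store")
synonyms = calculate_cosine_similarity("car dealer", "automobile seller")
print(f"identical texts: {same_words:.3f}, synonyms: {synonyms:.3f}")
```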

In this case, we can try enhanced similarity.

Enhanced similarity

Enhanced similarity introduces calculations that leverage natural language processing tools to better capture semantic relationships between words. Using libraries like spaCy and NLTK, it preprocesses texts to reduce noise, expands terms with synonyms from WordNet, and computes similarity based on the semantic richness of the expanded vocabulary. This method aims to improve the accuracy of similarity assessments between two texts by considering a broader context than typical direct comparison methods.

The code contains four main functions:

- get_synonyms(word): Retrieves synonyms for a given word from WordNet

- preprocess_text(text): Converts all text to lowercase, lemmatizes it (reduces words to their roots), and filters stopwords (common words) and punctuation from the text

- expand_with_synonyms(words): Enhances a list of words by adding their synonyms

- calculate_enhanced_similarity(text1, text2): Computes cosine similarity between preprocessed and synonym-expanded text vectors

The calculate_enhanced_similarity(text1, text2) function takes two texts and ultimately returns the cosine similarity score between two processed and synonym-expanded texts. This score quantifies the textual similarity based on their semantic content and enhanced word sets.

The code begins by downloading and importing the necessary libraries and loading the spaCy model, and then defines the four functions:

import spacy
import nltk
nltk.download('wordnet')
from nltk.corpus import wordnet
from collections import Counter
import numpy as np

# Load spaCy model
nlp = spacy.load("en_core_web_sm")
…
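The notebook’s implementation of these functions is elided here. The sketch below only illustrates the same pipeline (preprocess, expand with synonyms, compare count vectors with cosine similarity) without external downloads. To keep it dependency-free, a small hand-written stopword set stands in for spaCy, a hypothetical SYNONYMS table stands in for WordNet, and lemmatization is skipped; none of these stand-ins come from the notebook.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins, NOT the notebook's resources: a tiny stopword set
# instead of spaCy, and a hand-written synonym table instead of WordNet.
STOPWORDS = {"a", "an", "the", "is", "or", "of", "and", "that"}
SYNONYMS = {"store": ["repository", "shop"], "define": ["specify"]}

def preprocess_text(text):
    # Lowercase, keep alphabetic tokens (drops punctuation), remove stopwords.
    # No lemmatization in this sketch.
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]

def expand_with_synonyms(words):
    # Append the synonyms of each word to the original list
    expanded = list(words)
    for word in words:
        expanded.extend(SYNONYMS.get(word, []))
    return expanded

def cosine_of_counts(c1, c2):
    # Cosine similarity between two bag-of-words count vectors
    dot = sum(count * c2[term] for term, count in c1.items())
    norm1 = math.sqrt(sum(v * v for v in c1.values()))
    norm2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (norm1 * norm2) if norm1 and norm2 else 0.0

def calculate_enhanced_similarity(text1, text2):
    words1 = expand_with_synonyms(preprocess_text(text1))
    words2 = expand_with_synonyms(preprocess_text(text2))
    return cosine_of_counts(Counter(words1), Counter(words2))

plain = cosine_of_counts(Counter(preprocess_text("rag store")),
                         Counter(preprocess_text("rag shop")))
enhanced = calculate_enhanced_similarity("rag store", "rag shop")
print(f"plain={plain:.3f} enhanced={enhanced:.3f}")
```

Expanding “store” with “shop” creates an extra shared term, so the enhanced score rises above the plain score for the same pair of texts.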

Enhanced similarity takes this a bit further in terms of metrics. However, integrating RAG with generative AI presents multiple challenges.

No matter which metric we implement, we will face the following limitations:

- Input versus Document Length: User queries are often short, while retrieved documents are longer and richer, complicating direct similarity evaluations.

- Creative Retrieval: Systems may creatively select longer documents that meet user expectations but yield poor metric scores due to unexpected content alignment.

- Need for Human Feedback: Often, human judgment is crucial to accurately assess the relevance and effectiveness of retrieved content, as automated metrics may not fully capture user satisfaction. We will explore this critical aspect of RAG in Chapter 5, Boosting RAG Performance with Expert Human Feedback.

We will always have to find the right balance between mathematical metrics and human feedback.

We are now ready to create an example with naïve RAG.

2. Naïve RAG

Naïve RAG with keyword search and matching can prove efficient with well-defined documents within an organization, such as legal and medical documents. These documents generally have clear titles, or labels in the case of images. In this naïve RAG function, we will implement keyword search and matching. To achieve this, we will apply a straightforward retrieval method in the code:

- Split the query into individual keywords

- Split each record in the dataset into keywords

- Count the keywords they have in common

- Choose the record with the best score

The generation method will:

- Augment the user input with the result of the retrieval query

- Request the generation model, which is gpt-4o in this case

- Display the response

Let’s write the keyword search and matching function.

Keyword search and matching

The best matching function first initializes the best scores:

def find_best_match_keyword_search(query, db_records):
    best_score = 0
    best_record = None

The query is then split into keywords. Each record is also split into words so that the function can find the words they share, count them, and keep the best match:

    # Split the query into individual keywords
    query_keywords = set(query.lower().split())
    # Iterate through each record in db_records
    for record in db_records:
        # Split the record into keywords
        record_keywords = set(record.lower().split())
        # Calculate the number of common keywords
        common_keywords = query_keywords.intersection(record_keywords)
        current_score = len(common_keywords)
        # Update the best score and record if the current score is higher
        if current_score > best_score:
            best_score = current_score
            best_record = record
    return best_score, best_record

We now call the function, format the response, and print it:

# Assuming 'query' and 'db_records' are defined in previous cells in your Colab notebook
best_keyword_score, best_matching_record = find_best_match_keyword_search(query, db_records)
print(f"Best Keyword Score: {best_keyword_score}")
#print(f"Best Matching Record: {best_matching_record}")
print_formatted_response(best_matching_record)

The main query of this notebook will be query = "define a rag store" to see if each RAG method produces an acceptable output.

The keyword search finds the best record in the list of sentences in the dataset:

Best Keyword Score: 3

Response:

---------------

A RAG vector store is a database or dataset that contains vectorized data points.

---------------
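The keyword score of 3 can be checked by hand. The sketch below repeats find_best_match_keyword_search and prints the words the query shares with the retrieved record. It shows two properties of this simple matcher: the article “a” counts as much as “rag,” and punctuation stays attached to words, so a query word such as “points” does not match “points.” in the record.

```python
def find_best_match_keyword_search(query, db_records):
    # Same logic as above: count shared lowercase whitespace-separated words
    best_score = 0
    best_record = None
    query_keywords = set(query.lower().split())
    for record in db_records:
        record_keywords = set(record.lower().split())
        current_score = len(query_keywords & record_keywords)
        if current_score > best_score:
            best_score, best_record = current_score, record
    return best_score, best_record

query = "define a rag store"
record = "A RAG vector store is a database or dataset that contains vectorized data points."
common = set(query.lower().split()) & set(record.lower().split())
print(sorted(common))
print(find_best_match_keyword_search(query, [record])[0])
```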

Let’s run the metrics.

Metrics

We created the similarity metrics in the 1. Retrieval metrics section of this chapter. We will first apply cosine similarity:

# Cosine Similarity
score = calculate_cosine_similarity(query, best_matching_record)
print(f"Best Cosine Similarity Score: {score:.3f}")

The output similarity is low, as explained in the 1. Retrieval metrics section of this chapter. The user input is short and the response is longer and complete:

Best Cosine Similarity Score: 0.126

Enhanced similarity will produce a better score:

# Enhanced Similarity
response = best_matching_record
print(query, ":", response)
similarity_score = calculate_enhanced_similarity(query, response)
print(f"Enhanced Similarity: {similarity_score:.3f}")

The score produced is higher with enhanced similarity:

define a rag store : A RAG vector store is a database or dataset that contains vectorized data points.

Enhanced Similarity: 0.642

The output of the query will now augment the user input.

Augmented input

The augmented input is the concatenation of the user input and the best matching record of the dataset detected with the keyword search:

augmented_input=query+ ": "+ best_matching_record

The augmented input is displayed if necessary for maintenance reasons:

print_formatted_response(augmented_input)

The output then shows that the augmented input is ready:

Response:

---------------

define a rag store: A RAG vector store is a database or dataset that contains

vectorized data points.

---------------

The input is now ready for the generation process.

Generation

We are now ready to call GPT-4o and display the formatted response:

llm_response = call_llm_with_full_text(augmented_input)

print_formatted_response(llm_response)

The following excerpt of the response shows that GPT-4o understands the input and provides an interesting, pertinent response:

Response:

---------------

Certainly! Let's break down and elaborate on the provided content: ### Define a

RAG Store: A **RAG (Retrieval-Augmented Generation) vector store** is a

specialized type of database or dataset that is designed to store and manage

vectorized data points…

Naïve RAG can be sufficient in many situations. However, if the volume of documents becomes too large or the content becomes more complex, then advanced RAG configurations will provide better results. Let’s now explore advanced RAG.

3. Advanced RAG

As datasets grow larger, keyword search methods might take too long to run. For instance, if we have hundreds of documents and each document contains hundreds of sentences, relying on keyword search alone becomes challenging. Using an index can reduce the computational load to just a fraction of the total data.

In this section, we will go beyond searching text with keywords. We will see how RAG transforms text data into numerical representations, enhancing search efficiency and processing speed. Unlike traditional methods that directly parse text, RAG first converts documents and user queries into vectors, numerical forms that speed up calculations. In simple terms, a vector is a list of numbers representing various features of text. Simple vectors might count word occurrences (term frequency), while more complex vectors, known as embeddings, capture deeper linguistic patterns.

In this section, we will implement vector search and index-based search:

- Vector Search: We will convert each sentence in our dataset into a numerical vector. By calculating the cosine similarity between the query vector (the user query) and these document vectors, we can quickly find the most relevant documents.

- Index-Based Search: In this case, all sentences are converted into vectors using TF-IDF (Term Frequency-Inverse Document Frequency), a statistical measure used to evaluate how important a word is to a document in a collection. These vectors act as indices in a matrix, allowing quick similarity comparisons without parsing each document fully.

Let’s start with vector search and see these concepts in action.

3.1. Vector search

Vector search converts the user query and the documents into numerical values as vectors, enabling mathematical calculations that retrieve relevant data faster when dealing with large volumes of data.

The program runs through each record of the dataset to find the best matching document by computing the cosine similarity of the query vector and each record in the dataset:

def find_best_match(text_input, records):
    best_score = 0
    best_record = None
    for record in records:
        current_score = calculate_cosine_similarity(text_input, record)
        if current_score > best_score:
            best_score = current_score
            best_record = record
    return best_score, best_record

The code then calls the vector search function and displays the best record found:

best_similarity_score, best_matching_record = find_best_match(query, db_records)

print_formatted_response(best_matching_record)

The output is satisfactory:

Response:

---------------

A RAG vector store is a database or dataset that contains vectorized data

points.

The retrieved record is the same as the one found with naïve RAG. This shows that there is no silver bullet; each RAG technique has its merits. The metrics will confirm this observation.

Metrics

The metrics are the same for both similarity methods as for naïve RAG because the same document was retrieved:

print(f"Best Cosine Similarity Score: {best_similarity_score:.3f}")

The output is:

Best Cosine Similarity Score: 0.126

And with enhanced similarity, we obtain the same output as for naïve RAG:

# Enhanced Similarity
response = best_matching_record
print(query, ":", response)
similarity_score = calculate_enhanced_similarity(query, best_matching_record)
print(f"Enhanced Similarity: {similarity_score:.3f}")

The output confirms the trend:

define a rag store : A RAG vector store is a database or dataset that contains vectorized data points.

Enhanced Similarity: 0.642

So why use vector search if it produces the same outputs as naïve RAG? Well, in a small dataset, everything looks easy. But when we’re dealing with datasets of millions of complex documents, keyword search will not capture subtleties that vectors can. Let’s now augment the user query with this information retrieved.

Augmented input

We add the information retrieved to the user query with no other aid and display the result:

# Call the function and print the result

augmented_input=query+": "+best_matching_record

print_formatted_response(augmented_input)

We only added a colon and a space between the user query and the retrieved information; nothing else. The output is satisfactory:

Response:

---------------

define a rag store: A RAG vector store is a database or dataset that contains

vectorized data points.

---------------

Let’s now see how the generative AI model reacts to this augmented input.

Generation

We now call GPT-4o with the augmented input and display the formatted output:

# Call the function and print the result
augmented_input = query + ": " + best_matching_record
llm_response = call_llm_with_full_text(augmented_input)
print_formatted_response(llm_response)

The response makes sense, as shown in the following excerpt:

Response:

---------------

Certainly! Let's break down and elaborate on the provided content: ### Define a RAG Store: A **RAG (Retrieval-Augmented Generation) vector store** is a specialized type of database or dataset that is designed to store and manage vectorized data points…

Vector search speeds up the search for relevant documents, but because it still goes through the records one by one, recomputing vectors for each comparison, its efficiency can decrease as the dataset grows. To address this scalability issue, index-based search offers a more advanced solution. Let’s now see how index-based search can accelerate document retrieval.

3.2. Index-based search

Index-based search compares the vector of a user query not with the direct vector of a document’s content but with an indexed vector that represents this content.

The program first imports the class and function we need:

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics.pairwise import cosine_similarity

TfidfVectorizer is the class that converts text documents into a matrix of TF-IDF features, which weight each word by its frequency in a document and its rarity across the collection. This version of find_best_match replaces the vector search version; it uses the cosine similarity function to calculate the similarity between the query and the weighted vectors of the matrix:

def find_best_match(query, vectorizer, tfidf_matrix):
    query_tfidf = vectorizer.transform([query])
    similarities = cosine_similarity(query_tfidf, tfidf_matrix)
    best_index = similarities.argmax()  # Get the index of the highest similarity score
    best_score = similarities[0, best_index]
    return best_score, best_index

The function’s main tasks are:

- Transform Query: Converts the input query into TF-IDF vector format using the provided vectorizer

- Calculate Similarities: Computes the cosine similarity between the query vector and all vectors in the tfidf_matrix

- Identify Best Match: Finds the index (best_index) of the highest similarity score in the results

- Retrieve Best Score: Extracts the highest cosine similarity score (best_score)

The output is the best similarity score found and the best index.

The following code first vectorizes the dataset with setup_vectorizer(db_records) and then searches for the most similar record through its index:

vectorizer, tfidf_matrix = setup_vectorizer(db_records)

best_similarity_score, best_index = find_best_match(query, vectorizer, tfidf_matrix)

best_matching_record = db_records[best_index]

Finally, the results are displayed:

print_formatted_response(best_matching_record)

The system finds the document most similar to the user’s input query:

Response:

---------------

A RAG vector store is a database or dataset that contains vectorized data

points.

---------------

We can see that the fuzzy user query produced a reliable output at the retrieval level before running GPT-4o.

The metrics that follow in the program are the same as for naïve and advanced RAG with vector search. This is normal because the document found is the closest to the user’s input query. We will be introducing more complex documents for RAG starting in Chapter 2, RAG Embedding Vector Stores with Deep Lake and OpenAI. For now, let’s have a look at the features that influence how the words are represented in vectors.

Feature extraction

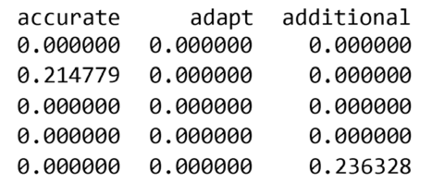

Before augmenting the input with this document, run the following cell, which calls the setup_vectorizer(records) function again but displays the matrix so that you can see its format. This is shown in the following excerpt for the words “accurate” and “additional” in one of the sentences:

Figure 1.4: Format of the matrix

Let’s now augment the input.

Augmented input

We will simply add the query to the best matching record in a minimal way to see how GPT-4o will react and display the output:

augmented_input=query+": "+best_matching_record

print_formatted_response(augmented_input)

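The output is the same as with vector search because the same record was retrieved. The difference is that the index-based method precomputes the document vectors, which can make retrieval faster on larger datasets: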

Response:

---------------

define a rag store: A RAG vector store is a database or dataset that contains

vectorized data points.

---------------

We will now plug this augmented input into the generative AI model.

Generation

We now call GPT-4o with the augmented input and display the output:

# Call the function and print the result

llm_response = call_llm_with_full_text(augmented_input)

print_formatted_response(llm_response)

The output makes sense for the user who entered the initial fuzzy query:

Response:

---------------

Certainly! Let's break down and elaborate on the given content: --- **Define a RAG store:** A **RAG vector store** is a **database** or **dataset** that contains **vectorized data points**. --- ### Detailed Explanation: 1. **RAG Store**: - **RAG** stands for **Retrieval-Augmented Generation**. It is a technique used in natural language processing (NLP) where a model retrieves relevant information from a database or dataset to augment its generation capabilities…

This approach works well in a closed environment, such as within an organization in a specific domain. In an open environment, the user might have to elaborate on the query before submitting the request.

In this section, we saw that precomputing a TF-IDF matrix of document vectors enables faster, simultaneous comparisons, because the documents do not have to be transformed again for every query. We have seen how vector and index-based search can improve retrieval. However, within a single project, we may need to apply both naïve and advanced RAG, depending on the documents we need to retrieve. Let’s now see how modular RAG can improve our system.
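The benefit of precomputing can be sketched without any library. To keep the example short, it assumes that documents are represented by L2-normalized raw term counts instead of TF-IDF weights. The hypothetical `DocumentMatrix` class vectorizes the records once in its constructor; each query then costs one query transformation plus one dot product per precomputed row, which yields every cosine similarity in a single pass.

```python
import math
from collections import Counter


def normalize(counts):
    """Scale a term-count vector to unit length (L2 norm)."""
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {t: c / norm for t, c in counts.items()}


class DocumentMatrix:
    """Hypothetical helper: precomputes unit-length document vectors once."""

    def __init__(self, records):
        self.records = records
        # Done once, at construction time, not on every query
        self.rows = [normalize(Counter(r.lower().split())) for r in records]

    def similarities(self, query):
        """Cosine similarity of the query against every precomputed row."""
        q = normalize(Counter(query.lower().split()))
        # Rows and query are unit length, so the dot product is the cosine
        return [sum(w * row.get(t, 0.0) for t, w in q.items()) for row in self.rows]

    def best_match(self, query):
        """Return the record with the highest cosine similarity."""
        scores = self.similarities(query)
        return self.records[max(range(len(scores)), key=scores.__getitem__)]


matrix = DocumentMatrix([
    "a rag store holds vectors",    # hypothetical record
    "keyword search counts words",  # hypothetical record
])
print(matrix.similarities("what is a rag store"))
print(matrix.best_match("what is a rag store"))
```

Adding more queries only repeats the work inside `similarities`; the document side is never recomputed.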

4. Modular RAG

Should we use keyword search, vector search, or index-based search when implementing RAG? Each approach has its merits. The choice will depend on several factors:

- Keyword search suits simple retrieval

- Vector search is ideal for semantically rich documents

- Index-based search offers speed with large datasets

However, all three methods can fit together in a single project. In one scenario, for example, a keyword search can help find clearly defined document labels, such as the titles of PDF files and labeled images, before they are processed. Then, indexed search will group the documents into indexed subsets. Finally, the retrieval program can search the indexed dataset, find a subset, and use vector search only on that limited number of documents to find the most relevant one.
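The three-step scenario can be sketched in plain Python. The sketch assumes that each document is a `(label, text)` pair, uses hypothetical helper names (`build_label_index`, `search_subset`), and replaces TF-IDF with simple term-count cosine similarity to stay dependency-free; it illustrates the idea rather than the chapter's implementation.

```python
import math
import re
from collections import Counter


def words(text):
    """Lowercase alphanumeric tokens; punctuation and underscores act as separators."""
    return [w for w in re.split(r"[^a-z0-9]+", text.lower()) if w]


def cosine(a, b):
    """Cosine similarity between two term-count Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_label_index(documents, keywords):
    """Steps 1 and 2: keyword-match the labels, then group texts into indexed subsets."""
    index = {k: [] for k in keywords}
    for label, text in documents:
        label_words = set(words(label))
        for k in keywords:
            if k in label_words:
                index[k].append(text)
    return index


def search_subset(index, group, query):
    """Step 3: run the similarity comparison only inside one subset."""
    subset = index.get(group, [])
    if not subset:
        return None
    q = Counter(words(query))
    return max(subset, key=lambda text: cosine(q, Counter(words(text))))


documents = [  # hypothetical (label, text) pairs
    ("rag_overview.pdf", "RAG combines retrieval with generation"),
    ("rag_vector_store.pdf", "A RAG vector store contains vectorized data points"),
    ("invoice_2024.pdf", "Invoice total and payment terms"),
]
index = build_label_index(documents, ["rag", "invoice"])
print(search_subset(index, "rag", "what is a rag vector store"))
```

Matching whole label words rather than substrings avoids false hits such as “rag” inside “storage”; the invoice document is never compared with the query at all.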

In this section, we will create a RetrievalComponent class that can be called at each step of a project to perform the required task. The class brings together the three methods we have built in this chapter, which we can summarize through its main members.

The following code initializes the class with the chosen search method and prepares a TF-IDF vectorizer if that method needs one. self refers to the current instance of the class and gives access to its attributes and methods:

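```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

class RetrievalComponent:
    def __init__(self, method='vector'):
        self.method = method
        # Only the vector and indexed methods need a TF-IDF vectorizer
        if self.method == 'vector' or self.method == 'indexed':
            self.vectorizer = TfidfVectorizer()
            self.tfidf_matrix = None
```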

Since method defaults to 'vector', vector search is activated unless another method is specified.

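The fit method stores the records, which keyword_search needs, and, for the vector or indexed search methods, builds a TF-IDF matrix from them:

```python
def fit(self, records):
    # Keep the raw records: keyword_search() iterates over self.documents
    self.documents = records
    if self.method == 'vector' or self.method == 'indexed':
        # Learn the vocabulary and precompute the TF-IDF matrix once
        self.tfidf_matrix = self.vectorizer.fit_transform(records)
```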

The retrieve method directs the query to the appropriate search method:

```python
def retrieve(self, query):
    if self.method == 'keyword':
        return self.keyword_search(query)
    elif self.method == 'vector':
        return self.vector_search(query)
    elif self.method == 'indexed':
        return self.indexed_search(query)
```
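The if/elif chain in retrieve returns None silently when method holds an unexpected value, such as a misspelled 'vectors'. One common alternative, shown here as a hypothetical stand-alone sketch rather than the notebook's code, is a dictionary that maps each method name to a bound search method and rejects unknown names at construction time. The three search methods are placeholders standing in for the real implementations.

```python
class Retriever:
    """Hypothetical minimal class illustrating dictionary-based dispatch."""

    def __init__(self, method="vector"):
        self.searchers = {
            "keyword": self.keyword_search,
            "vector": self.vector_search,
            "indexed": self.indexed_search,
        }
        # Fail fast on a typo instead of returning None later
        if method not in self.searchers:
            raise ValueError(f"Unknown method {method!r}; choose from {sorted(self.searchers)}")
        self.method = method

    def retrieve(self, query):
        # Look up the bound method for the chosen strategy and call it
        return self.searchers[self.method](query)

    # Placeholder searches standing in for the real implementations
    def keyword_search(self, query):
        return f"keyword:{query}"

    def vector_search(self, query):
        return f"vector:{query}"

    def indexed_search(self, query):
        return f"indexed:{query}"


print(Retriever("keyword").retrieve("define a rag store"))
```

Adding a fourth strategy then only requires one new method and one new dictionary entry.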

The keyword search method finds the best match by counting common keywords between queries and documents:

```python
def keyword_search(self, query):
    best_score = 0
    best_record = None
    query_keywords = set(query.lower().split())
    for doc in self.documents:
        doc_keywords = set(doc.lower().split())
        common_keywords = query_keywords.intersection(doc_keywords)
        score = len(common_keywords)
        if score > best_score:
            best_score = score
            best_record = doc
    return best_record
```
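To make the scoring concrete, the hypothetical helper `keyword_score` below isolates the same rule used in keyword_search (count the lowercase, whitespace-separated tokens shared by the query and a document) and applies it to the record retrieved earlier in this chapter. The second query is a made-up variant added to show the effect of punctuation.

```python
def keyword_score(query, doc):
    """Same scoring rule as keyword_search: count shared lowercase tokens."""
    query_keywords = set(query.lower().split())
    doc_keywords = set(doc.lower().split())
    return len(query_keywords & doc_keywords)


record = "A RAG vector store is a database or dataset that contains vectorized data points."
print(keyword_score("define a rag store", record))   # 3: {'a', 'rag', 'store'}
print(keyword_score("define a rag store?", record))  # 2: 'store?' does not match 'store'
```

Because split() leaves punctuation attached to words and short words such as “a” count as matches, this naïve score is sensitive to surface details of the query.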

The vector search method computes similarities between query TF-IDF and document matrix and returns the best match:

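```python
def vector_search(self, query):
    # Project the query into the vocabulary learned by fit()
    query_tfidf = self.vectorizer.transform([query])
    # One cosine similarity per record, against the precomputed matrix
    similarities = cosine_similarity(query_tfidf, self.tfidf_matrix)
    best_index = similarities.argmax()
    # db_records is the notebook's global list of records
    return db_records[best_index]
```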

The indexed search method uses the TF-IDF matrix precomputed by fit for fast retrieval of the best-matching document. In this minimal version, its code is identical to vector_search:

```python
def indexed_search(self, query):
    # Assuming the tfidf_matrix is precomputed and stored
    query_tfidf = self.vectorizer.transform([query])
    similarities = cosine_similarity(query_tfidf, self.tfidf_matrix)
    best_index = similarities.argmax()
    return db_records[best_index]
```
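To give index-based search a distinct role, one common technique is an inverted index, which maps each term to the records that contain it so that only those candidates are scored. The sketch below swaps in that technique as an illustration; it is not the chapter's code. The function names, the small stopword list, and the term-count cosine scoring are all assumptions.

```python
import math
from collections import Counter, defaultdict

# Assumption: a tiny illustrative stopword list so that words such as "a"
# do not pull every record into the candidate set.
STOPWORDS = {"a", "an", "the", "is", "of", "to", "and", "or", "what"}


def tokens(text):
    """Lowercase whitespace tokens, surrounding punctuation stripped, stopwords dropped."""
    stripped = (w.strip(".,:;!?") for w in text.lower().split())
    return [w for w in stripped if w and w not in STOPWORDS]


def build_inverted_index(records):
    """Map each term to the set of record positions that contain it."""
    index = defaultdict(set)
    for position, record in enumerate(records):
        for term in tokens(record):
            index[term].add(position)
    return index


def indexed_lookup(records, index, query):
    """Score only the records that share at least one term with the query."""
    q = Counter(tokens(query))
    candidates = set()
    for term in q:
        candidates |= index.get(term, set())
    if not candidates:
        return None

    def cosine(position):
        d = Counter(tokens(records[position]))
        dot = sum(q[t] * d[t] for t in q)
        q_norm = math.sqrt(sum(v * v for v in q.values()))
        d_norm = math.sqrt(sum(v * v for v in d.values()))
        return dot / (q_norm * d_norm)

    # sorted() makes tie-breaking deterministic (lowest position wins)
    return records[max(sorted(candidates), key=cosine)]


records = [  # hypothetical records
    "A RAG vector store contains vectorized data points.",
    "Keyword search counts shared words.",
    "An index maps terms to documents.",
]
index = build_inverted_index(records)
print(indexed_lookup(records, index, "What is a RAG store?"))
```

The index is built once; each query then touches only the records listed under its terms, which is where the speed advantage on large datasets comes from.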

We can now activate modular RAG strategies.

Modular RAG strategies

We can call the retrieval component for any RAG configuration we wish when needed:

```python
# Usage example
retrieval = RetrievalComponent(method='vector')  # Choose from 'keyword', 'vector', 'indexed'
retrieval.fit(db_records)
best_matching_record = retrieval.retrieve(query)
print_formatted_response(best_matching_record)
```

In this case, the vector search method was activated.

The following cells select the best record, as in the 3.1. Vector search section, augment the input, call the generative model, and display the output as shown in the following excerpt:

```
Response:
---------------
Certainly! Let's break down and elaborate on the content provided: ---
**Define a RAG store:** A **RAG (Retrieval-Augmented Generation) store** is a specialized type of data storage system designed to support the retrieval and generation of information...
```

We have built a program that demonstrates how different search methodologies (keyword, vector, and index-based) can be effectively integrated into a RAG system. Each method has its unique strengths and addresses specific needs within a data retrieval context. The choice of method depends on the dataset size, query type, and performance requirements, which we will explore in the following chapters.

It’s now time to summarize our explorations in this chapter and move to the next level!