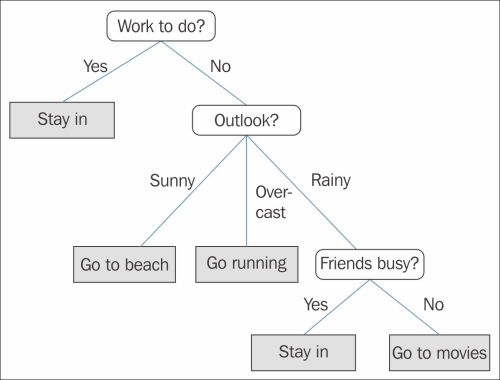

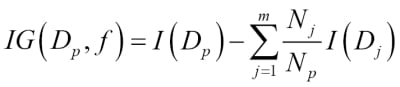

Chapter 3. A Tour of Machine Learning Classifiers Using Scikit-learn

In this chapter, we will take a tour through a selection of popular and powerful machine learning algorithms that are commonly used in academia as well as in the industry. While learning about the differences between several supervised learning algorithms for classification, we will also develop an intuitive appreciation of their individual strengths and weaknesses. Also, we will take our first steps with the scikit-learn library, which offers a user-friendly interface for using those algorithms efficiently and productively.

The topics that we will learn about throughout this chapter are as follows:

- Introduction to the concepts of popular classification algorithms

- Using the scikit-learn machine learning library

- Questions to ask when selecting a machine learning algorithm

Choosing a classification algorithm

Choosing an appropriate classification algorithm for a particular problem requires practice: each algorithm has its own quirks and is based on certain assumptions. To restate the "No Free Lunch" theorem: no single classifier works best across all possible scenarios. In practice, it is always recommended that you compare the performance of at least a handful of different learning algorithms to select the best model for the particular problem; problems may differ in the number of features or samples, the amount of noise in a dataset, and whether the classes are linearly separable or not.

Ultimately, the performance of a classifier, both computational and predictive, depends heavily on the underlying data that are available for learning. The five main steps that are involved in training a machine learning algorithm can be summarized as follows:

- Selection of features.

- Choosing a performance metric.

- Choosing a classifier and optimization algorithm.

- Evaluating the performance of the model.

- Tuning the algorithm.

Since the approach of this book is to build machine learning knowledge step by step, we will mainly focus on the principal concepts of the different algorithms in this chapter and revisit topics such as feature selection and preprocessing, performance metrics, and hyperparameter tuning for more detailed discussions later in this book.

First steps with scikit-learn

In Chapter 2, Training Machine Learning Algorithms for Classification, you learned about two related learning algorithms for classification: the perceptron rule and Adaline, which we implemented in Python by ourselves. Now we will take a look at the scikit-learn API, which combines a user-friendly interface with a highly optimized implementation of several classification algorithms. Moreover, the scikit-learn library offers not only a large variety of learning algorithms, but also many convenient functions to preprocess data and to fine-tune and evaluate our models. We will discuss this in more detail together with the underlying concepts in Chapter 4, Building Good Training Sets – Data Preprocessing, and Chapter 5, Compressing Data via Dimensionality Reduction.

Training a perceptron via scikit-learn

To get started with the scikit-learn library, we will train a perceptron model similar to the one that we implemented in Chapter 2, Training Machine Learning Algorithms for Classification. For simplicity, we will use the already familiar Iris dataset throughout the following sections. Conveniently, the Iris dataset is already available via scikit-learn, since it is a simple yet popular dataset that is frequently used for testing and experimenting with algorithms. Also, we will only use two features from the Iris flower dataset for visualization purposes.

We will assign the petal length and petal width of the 150 flower samples to the feature matrix X and the corresponding class labels of the flower species to the vector y:

```python
>>> from sklearn import datasets
>>> import numpy as np
>>> iris = datasets.load_iris()
>>> X = iris.data[:, [2, 3]]
>>> y = iris.target
```

If we executed np.unique(y) to return the different class labels stored in iris.target, we would see that the Iris flower class names, Iris-Setosa, Iris-Versicolor, and Iris-Virginica, are already stored as integers (0, 1, 2), which is recommended for the optimal performance of many machine learning libraries.
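If a dataset stores class labels as strings instead, a minimal way to obtain the same kind of integer encoding is NumPy's `np.unique` with `return_inverse=True`. The label strings below are hypothetical stand-ins, not values read from `iris.target`:

```python
import numpy as np

# Hypothetical string labels standing in for species names
labels = np.array(['Iris-setosa', 'Iris-virginica',
                   'Iris-versicolor', 'Iris-setosa'])

# np.unique returns the sorted distinct labels and, with
# return_inverse=True, the position of each label in that sorted array
class_names, y_int = np.unique(labels, return_inverse=True)

print(class_names)  # sorted class names
print(y_int)        # integer-encoded labels
```

Because the classes are sorted alphabetically, the integer assigned to each name depends only on the set of names, not on the order in which samples appear.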

To evaluate how well a trained model performs on unseen data, we will further split the dataset into separate training and test datasets. Later in Chapter 5, Compressing Data via Dimensionality Reduction, we will discuss the best practices around model evaluation in more detail:

```python
>>> from sklearn.model_selection import train_test_split
>>> X_train, X_test, y_train, y_test = train_test_split(
...     X, y, test_size=0.3, random_state=0)
```

Using the train_test_split function from scikit-learn's model_selection module (called cross_validation in scikit-learn versions before 0.18), we randomly split the X and y arrays into 30 percent test data (45 samples) and 70 percent training data (105 samples).
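To make the split concrete, here is a rough NumPy sketch of what a random 70/30 split amounts to: shuffle the sample indices once, then slice off the test portion. The helper `simple_split` is hypothetical and is not scikit-learn's implementation; with the same seed it will not reproduce `train_test_split`'s exact partition:

```python
import numpy as np

def simple_split(X, y, test_size=0.3, seed=0):
    """Hypothetical helper: shuffle indices once, then slice off a test set."""
    rng = np.random.RandomState(seed)
    idx = rng.permutation(len(y))              # random order of sample indices
    n_test = int(round(test_size * len(y)))    # 0.3 * 150 = 45 test samples
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# 150 dummy samples with 2 features, mirroring the shape of X above
X_demo = np.arange(300, dtype=float).reshape(150, 2)
y_demo = np.repeat([0, 1, 2], 50)

X_tr, X_te, y_tr, y_te = simple_split(X_demo, y_demo)
print(X_tr.shape, X_te.shape)  # (105, 2) (45, 2)
```

Every sample lands in exactly one of the two subsets, which is what keeps the test set "unseen" during training.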

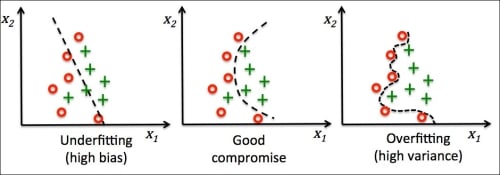

Many machine learning and optimization algorithms also require feature scaling for optimal performance, as we remember from the gradient descent example in Chapter 2, Training Machine Learning Algorithms for Classification. Here, we will standardize the features using the StandardScaler class from scikit-learn's preprocessing module:

```python
>>> from sklearn.preprocessing import StandardScaler
>>> sc = StandardScaler()
>>> sc.fit(X_train)
>>> X_train_std = sc.transform(X_train)
>>> X_test_std = sc.transform(X_test)
```

Using the preceding code, we loaded the StandardScaler class from the preprocessing module and initialized a new StandardScaler object that we assigned to the variable sc. Using the fit method, StandardScaler estimated the parameters $\mu$ (sample mean) and $\sigma$ (standard deviation) for each feature dimension from the training data. By calling the transform method, we then standardized the training data using those estimated parameters $\mu$ and $\sigma$. Note that we used the same scaling parameters to standardize the test set so that the values in the training and test datasets are comparable to each other.
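Written out, standardization maps each feature value $x_j$ to $x'_j = (x_j - \mu_j)/\sigma_j$. The following NumPy sketch mirrors the fit/transform split on made-up arrays, assuming (as StandardScaler does) the population standard deviation, that is, `ddof=0`:

```python
import numpy as np

# Made-up training and test data with two features
X_train_demo = np.array([[1.0, 10.0],
                         [2.0, 20.0],
                         [3.0, 30.0]])
X_test_demo = np.array([[2.0, 40.0]])

# "fit": estimate per-feature mean and standard deviation on training data only
mu = X_train_demo.mean(axis=0)
sigma = X_train_demo.std(axis=0)   # ddof=0, population standard deviation

# "transform": apply the same mu and sigma to both training and test data
X_train_demo_std = (X_train_demo - mu) / sigma
X_test_demo_std = (X_test_demo - mu) / sigma

print(X_train_demo_std.mean(axis=0))  # close to [0, 0]
print(X_train_demo_std.std(axis=0))   # close to [1, 1]
print(X_test_demo_std)
```

Only the training data end up with mean 0 and unit variance; the test row is expressed in units of the training distribution, so a value outside the training range (40.0 here) simply maps to a larger standardized value.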

Having standardized the training data, we can now train a perceptron model. Most algorithms in scikit-learn already support multiclass classification by default via the One-vs.-Rest (OvR) method, which allows us to feed the three flower classes to the perceptron all at once. The code is as follows:

```python
>>> from sklearn.linear_model import Perceptron
>>> ppn = Perceptron(max_iter=40, eta0=0.1, random_state=0)
>>> ppn.fit(X_train_std, y_train)
```

The scikit-learn interface reminds us of our perceptron implementation in Chapter 2, Training Machine Learning Algorithms for Classification: after loading the Perceptron class from the linear_model module, we initialized a new Perceptron object and trained the model via the fit method. Here, the model parameter eta0 is equivalent to the learning rate eta that we used in our own perceptron implementation, and the parameter max_iter (called n_iter in older scikit-learn versions) defines the maximum number of epochs (passes over the training set). As we remember from Chapter 2, Training Machine Learning Algorithms for Classification, finding an appropriate learning rate requires some experimentation. If the learning rate is too large, the algorithm will overshoot the global cost minimum. If the learning rate is too small, the algorithm requires more epochs until convergence, which can make the learning slow, especially for large datasets. Also, we used the random_state parameter for reproducibility of the shuffling of the training dataset after each epoch.
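To make the OvR idea and the roles of the learning rate and the epoch count concrete, here is a compact NumPy sketch: one binary perceptron per class (that class as +1, all other classes as -1), trained with per-epoch shuffling, and prediction by the largest net input. The function names and toy data are hypothetical, and scikit-learn's Perceptron differs in details (for example, it can also stop early based on its `tol` parameter):

```python
import numpy as np

def train_perceptron(X, y_pm, eta=0.1, n_epochs=200, seed=0):
    """Binary perceptron; y_pm holds labels +1/-1. Returns w (w[0] is the bias)."""
    rng = np.random.RandomState(seed)
    w = np.zeros(1 + X.shape[1])
    for _ in range(n_epochs):
        errors = 0
        for i in rng.permutation(len(y_pm)):       # reshuffle every epoch
            pred = 1 if X[i].dot(w[1:]) + w[0] >= 0.0 else -1
            update = eta * (y_pm[i] - pred)        # zero if prediction correct
            w[1:] += update * X[i]
            w[0] += update
            errors += int(update != 0.0)
        if errors == 0:                            # no mistakes: converged
            break
    return w

def fit_ovr(X, y, **kwargs):
    """One-vs.-Rest: one binary perceptron per class label."""
    classes = np.unique(y)
    W = np.array([train_perceptron(X, np.where(y == c, 1, -1), **kwargs)
                  for c in classes])
    return classes, W

def predict_ovr(X, classes, W):
    # net input of every binary model; pick the class with the largest score
    scores = X.dot(W[:, 1:].T) + W[:, 0]
    return classes[np.argmax(scores, axis=1)]

# Hypothetical toy data: three tight, linearly separable clusters
X_toy = np.array([[0, 4], [1, 5], [-1, 5],
                  [4, 0], [5, 1], [5, -1],
                  [-4, -4], [-5, -4], [-4, -5]], dtype=float)
y_toy = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])

classes, W = fit_ovr(X_toy, y_toy, eta=0.1, n_epochs=200)
print(predict_ovr(X_toy, classes, W))
```

Because each one-vs.-rest problem here is linearly separable, every binary perceptron eventually completes an epoch without mistakes and stops. On data that are not linearly separable, `errors` never reaches zero and the loop simply runs for all `n_epochs`.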

Having trained a model in scikit-learn, we can make predictions via the predict method, just like in our own perceptron implementation in Chapter 2, Training Machine Learning Algorithms for Classification. The code is as follows:

```python
>>> y_pred = ppn.predict(X_test_std)
>>> print('Misclassified samples: %d' % (y_test != y_pred).sum())
Misclassified samples: 4
```

On executing the preceding code, we see that the perceptron misclassifies 4 out of the 45 flower samples. Thus, the misclassification error on the test dataset is approximately 0.089 or 8.9 percent ($4/45 \approx 0.089$).

Note

Instead of the misclassification error, many machine learning practitioners report the classification accuracy of a model, which is simply calculated as follows:

1 - misclassification error = 0.911 or 91.1 percent.

Scikit-learn also implements a large variety of different performance metrics that are available via the metrics module. For example, we can calculate the classification accuracy of the perceptron on the test set as follows:

```python
>>> from sklearn.metrics import accuracy_score
>>> print('Accuracy: %.2f' % accuracy_score(y_test, y_pred))
Accuracy: 0.91
```

Here, y_test are the true class labels and y_pred are the class labels that we predicted previously.
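The two numbers are complements of each other. With hypothetical label arrays that reproduce 4 mismatches out of 45, the following NumPy check computes both quantities; `np.mean` of a boolean array is the fraction of `True` entries, which is what `accuracy_score` returns for plain label arrays with its default arguments:

```python
import numpy as np

# Hypothetical labels: 45 test samples, 4 of which are predicted wrongly
y_true = np.array([0] * 15 + [1] * 15 + [2] * 15)
y_hat = y_true.copy()
wrong = [3, 17, 20, 40]
y_hat[wrong] = (y_hat[wrong] + 1) % 3   # corrupt 4 predictions

n_wrong = (y_true != y_hat).sum()
error = np.mean(y_true != y_hat)        # misclassification error
accuracy = np.mean(y_true == y_hat)     # classification accuracy

print(n_wrong)             # 4
print(round(error, 3))     # 0.089
print(round(accuracy, 3))  # 0.911
```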

Note

Note that we evaluate the performance of our models based on the test set in this chapter. In Chapter 5, Compressing Data via Dimensionality Reduction, you will learn about useful techniques, including graphical analysis such as learning curves, to detect and prevent overfitting. Overfitting means that the model captures the patterns in the training data well, but fails to generalize well to unseen data.

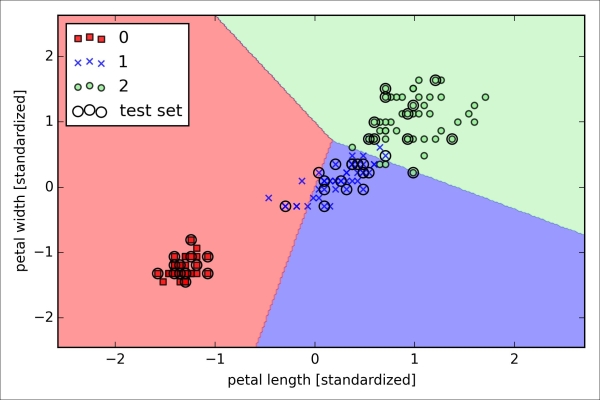

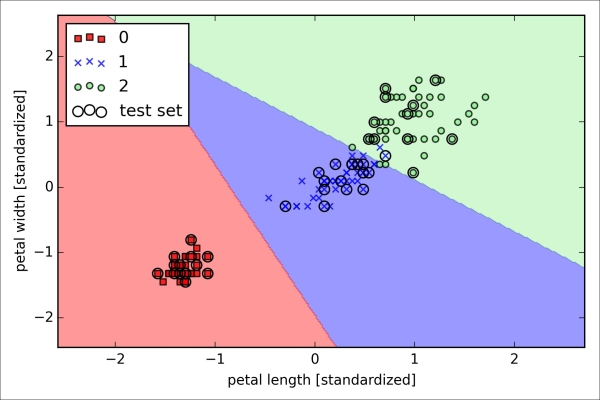

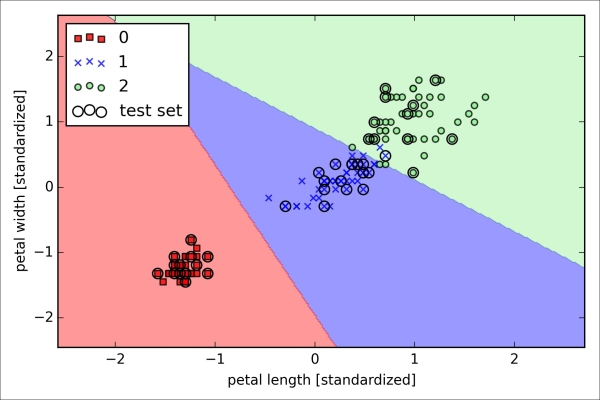

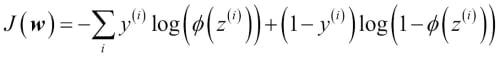

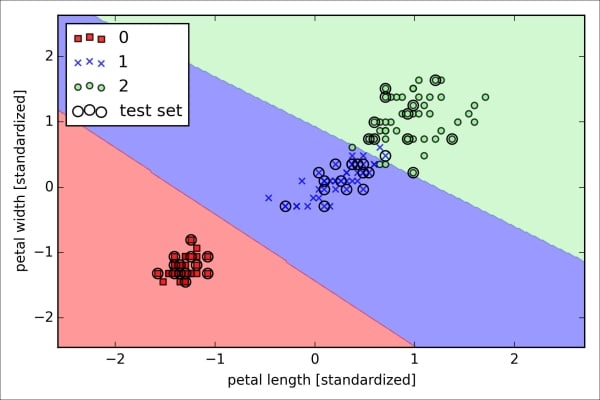

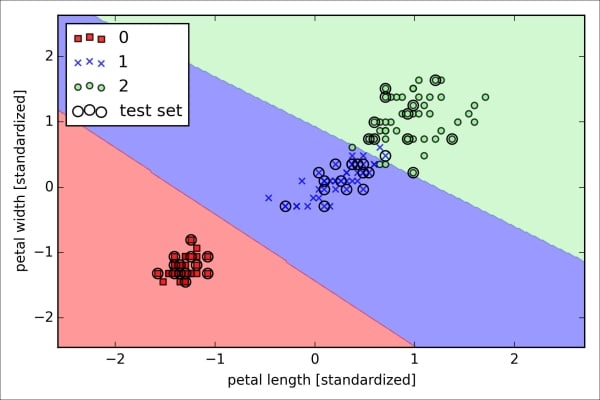

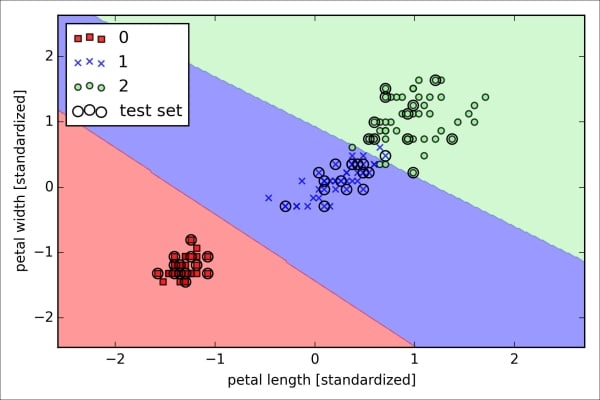

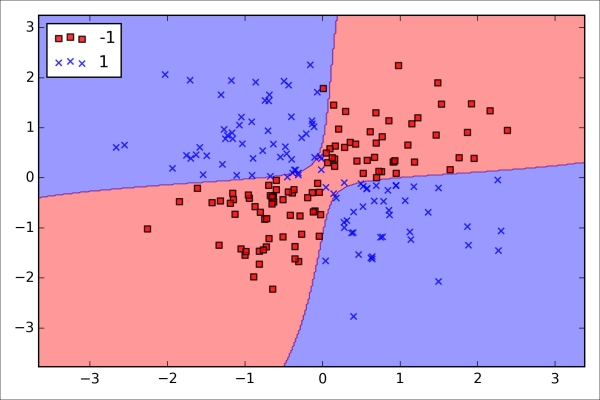

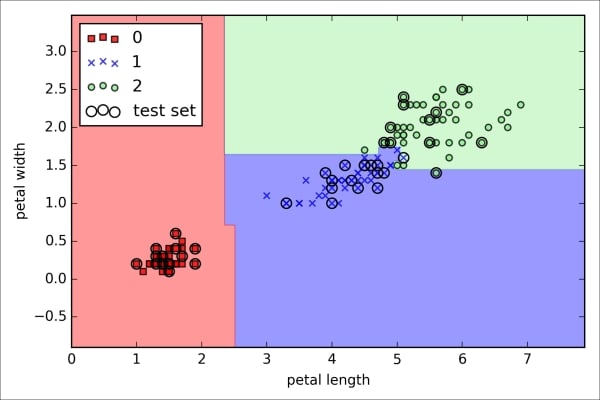

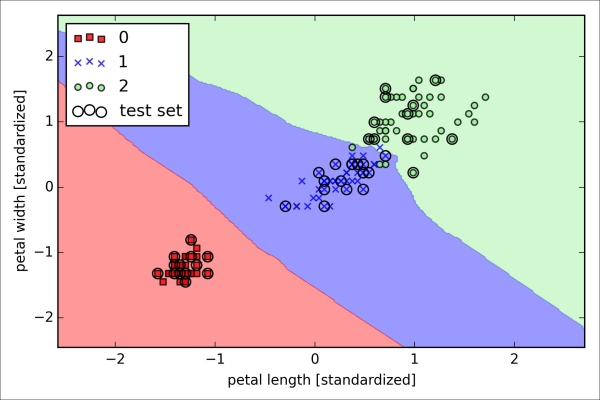

Finally, we can use our plot_decision_regions function from Chapter 2, Training Machine Learning Algorithms for Classification, to plot the decision regions of our newly trained perceptron model and visualize how well it separates the different flower samples. However, let's add a small modification to highlight the samples from the test dataset via small circles:

```python
from matplotlib.colors import ListedColormap
import matplotlib.pyplot as plt
import numpy as np

def plot_decision_regions(X, y, classifier,
                          test_idx=None, resolution=0.02):

    # setup marker generator and color map
    markers = ('s', 'x', 'o', '^', 'v')
    colors = ('red', 'blue', 'lightgreen', 'gray', 'cyan')
    cmap = ListedColormap(colors[:len(np.unique(y))])

    # plot the decision surface
    x1_min, x1_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    x2_min, x2_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    xx1, xx2 = np.meshgrid(np.arange(x1_min, x1_max, resolution),
                           np.arange(x2_min, x2_max, resolution))
    Z = classifier.predict(np.array([xx1.ravel(), xx2.ravel()]).T)
    Z = Z.reshape(xx1.shape)
    plt.contourf(xx1, xx2, Z, alpha=0.4, cmap=cmap)
    plt.xlim(xx1.min(), xx1.max())
    plt.ylim(xx2.min(), xx2.max())

    # plot all samples, one marker and color per class
    for idx, cl in enumerate(np.unique(y)):
        plt.scatter(x=X[y == cl, 0], y=X[y == cl, 1],
                    alpha=0.8, color=cmap(idx),
                    marker=markers[idx], label=cl)

    # highlight test samples; facecolors='none' draws unfilled circles
    if test_idx is not None:
        X_test = X[test_idx, :]
        plt.scatter(X_test[:, 0], X_test[:, 1],
                    facecolors='none', edgecolors='black',
                    alpha=1.0, linewidths=1, marker='o',
                    s=55, label='test set')
```

With the slight modification that we made to the plot_decision_regions function (the new test_idx parameter and the block that highlights the test samples), we can now specify the indices of the samples that we want to mark on the resulting plots. The code is as follows:

>>> X_combined_std = np.vstack((X_train_std, X_test_std))

>>> y_combined = np.hstack((y_train, y_test))

>>> plot_decision_regions(X=X_combined_std,

... y=y_combined,

... classifier=ppn,

... test_idx=range(105,150))

>>> plt.xlabel('petal length [standardized]')

>>> plt.ylabel('petal width [standardized]')

>>> plt.legend(loc='upper left')

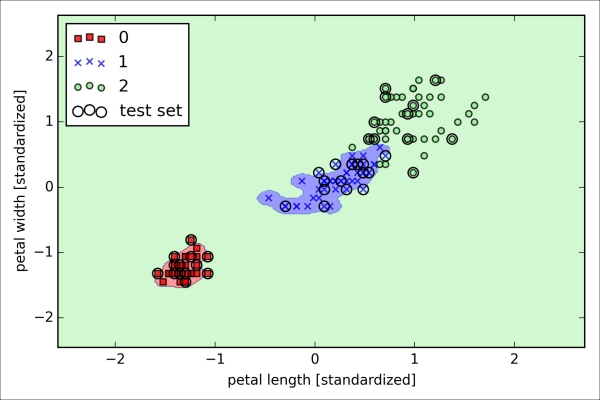

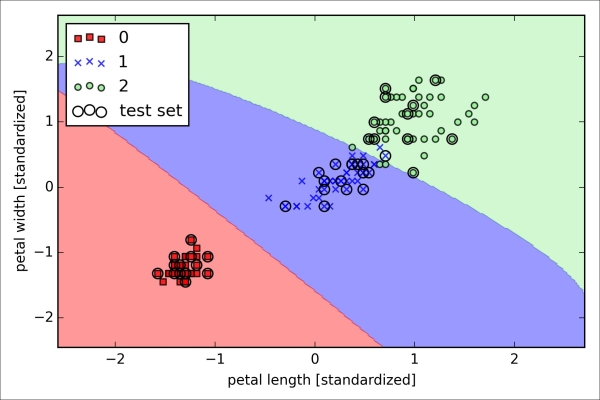

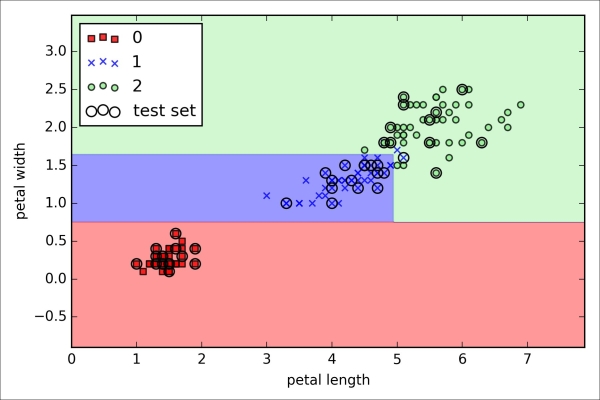

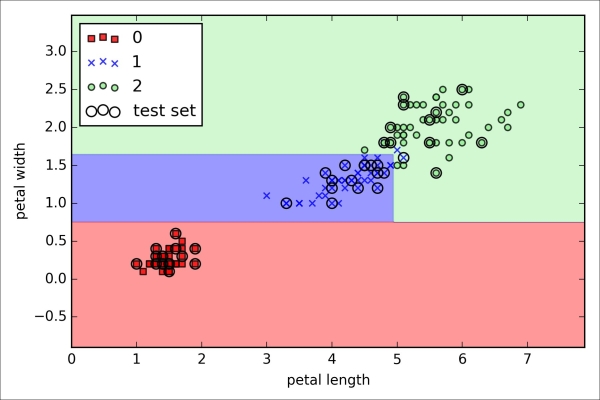

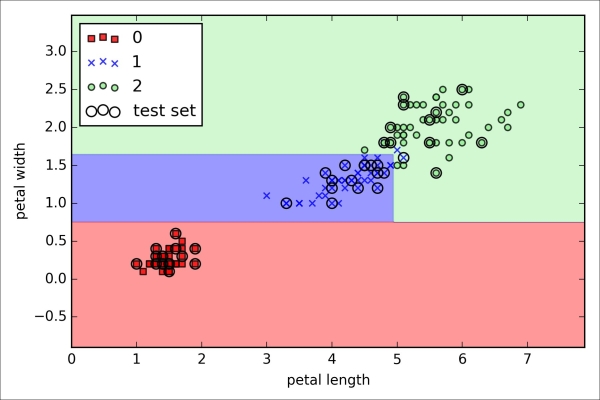

>>> plt.show()As we can see in the resulting plot, the three flower classes cannot be perfectly separated by a linear decision boundaries:

We remember from our discussion in Chapter 2, Training Machine Learning Algorithms for Classification, that the perceptron algorithm never converges on datasets that aren't perfectly linearly separable, which is why the use of the perceptron algorithm is typically not recommended in practice. In the following sections, we will look at more powerful linear classifiers that converge to a cost minimum even if the classes are not perfectly linearly separable.
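A small experiment shows why the perceptron never settles on data that are not linearly separable. On the four XOR points below (a classic non-separable toy set chosen for illustration), every epoch must contain at least one misclassified sample: an epoch without updates would mean a single fixed line classifies all four points correctly, which is impossible. The sketch uses a minimal version of the per-sample perceptron update rule:

```python
import numpy as np

# XOR: no straight line separates the +1 samples from the -1 samples
X_xor = np.array([[0., 0.], [0., 1.], [1., 0.], [1., 1.]])
y_xor = np.array([-1, 1, 1, -1])

eta = 0.1
w = np.zeros(3)            # w[0] is the bias unit
errors_per_epoch = []
for epoch in range(50):
    errors = 0
    for xi, target in zip(X_xor, y_xor):
        pred = 1 if xi.dot(w[1:]) + w[0] >= 0.0 else -1
        update = eta * (target - pred)
        w[1:] += update * xi
        w[0] += update
        errors += int(update != 0.0)
    errors_per_epoch.append(errors)

print(min(errors_per_epoch))  # never 0, however many epochs we run
```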

Note

The Perceptron as well as other scikit-learn functions and classes have additional parameters that we omit for clarity. You can read more about those parameters using the help function in Python (for example, help(Perceptron)) or by going through the excellent scikit-learn online documentation at http://scikit-learn.org/stable/.


Modeling class probabilities via logistic regression

Although the perceptron rule offers a nice and easygoing introduction to machine learning algorithms for classification, its biggest disadvantage is that it never converges if the classes are not perfectly linearly separable. The classification task in the previous section would be an example of such a scenario. Intuitively, the reason is that the weights are continuously being updated, since there is always at least one misclassified sample present in each epoch. Of course, you can change the learning rate and increase the number of epochs, but be warned that the perceptron will never converge on this dataset. To make better use of our time, we will now take a look at another simple yet more powerful algorithm for linear and binary classification problems: logistic regression. Note that, in spite of its name, logistic regression is a model for classification, not regression.

Logistic regression intuition and conditional probabilities

Logistic regression is a classification model that is very easy to implement but performs very well on linearly separable classes. It is one of the most widely used algorithms for classification in industry. Similar to the perceptron and Adaline, the logistic regression model in this chapter is also a linear model for binary classification that can be extended to multiclass classification via the OvR technique.

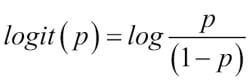

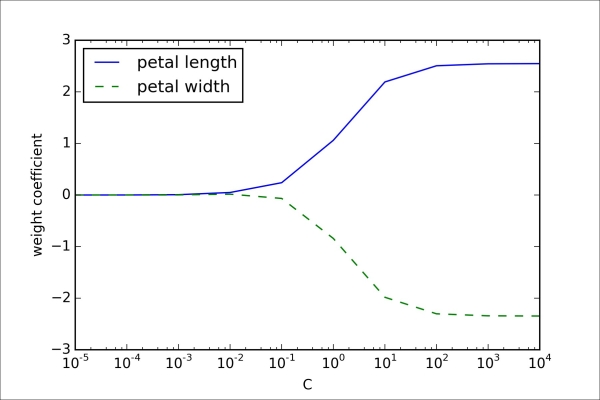

To explain the idea behind logistic regression as a probabilistic model, let's first introduce the odds ratio, which is the odds in favor of a particular event. The odds ratio can be written as $\frac{p}{(1-p)}$, where $p$ stands for the probability of the positive event. The term positive event does not necessarily mean good, but refers to the event that we want to predict, for example, the probability that a patient has a certain disease; we can think of the positive event as class label $y = 1$. We can then further define the logit function, which is simply the logarithm of the odds ratio (log-odds):

$$\text{logit}(p) = \log \frac{p}{(1-p)}$$

The logit function takes input values in the range 0 to 1 and transforms them to values over the entire real number range, which we can use to express a linear relationship between feature values and the log-odds:

$$\text{logit}\big(p(y=1 \mid \mathbf{x})\big) = w_0 x_0 + w_1 x_1 + \cdots + w_m x_m = \sum_{i=0}^{m} w_i x_i = \mathbf{w}^T \mathbf{x}$$

Here, $p(y=1 \mid \mathbf{x})$ is the conditional probability that a particular sample belongs to class 1 given its features $\mathbf{x}$.
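A quick numeric check of these definitions: a probability of 0.8 corresponds to odds of 4 (4:1 in favor), the logit maps $p = 0.5$ to 0, and it is antisymmetric around that point, $\text{logit}(1-p) = -\text{logit}(p)$. The helper names are illustrative:

```python
import numpy as np

def odds(p):
    """Odds in favor of the positive event, p / (1 - p)."""
    return p / (1.0 - p)

def logit(p):
    """Log-odds: maps probabilities in (0, 1) to the whole real line."""
    return np.log(odds(p))

p = np.array([0.01, 0.2, 0.5, 0.8, 0.99])
print(odds(0.8))   # about 4.0
print(logit(p))    # negative below 0.5, zero at 0.5, positive above
```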

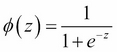

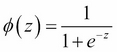

Now what we are actually interested in is predicting the probability that a certain sample belongs to a particular class, which is the inverse form of the logit function. It is called the logistic function, sometimes simply abbreviated to sigmoid function due to its characteristic S-shape:

$$\phi(z) = \frac{1}{1 + e^{-z}}$$

Here, z is the net input, that is, the linear combination of weights and sample features, and can be calculated as $z = \mathbf{w}^T \mathbf{x} = w_0 x_0 + w_1 x_1 + \cdots + w_m x_m$.

Now let's simply plot the sigmoid function for some values in the range -7 to 7 to see what it looks like:

```python
>>> import matplotlib.pyplot as plt
>>> import numpy as np
>>> def sigmoid(z):
...     return 1.0 / (1.0 + np.exp(-z))
...
>>> z = np.arange(-7, 7, 0.1)
>>> phi_z = sigmoid(z)
>>> plt.plot(z, phi_z)
>>> plt.axvline(0.0, color='k')
>>> plt.axhspan(0.0, 1.0, facecolor='1.0', alpha=1.0, ls='dotted')
>>> plt.axhline(y=0.5, ls='dotted', color='k')
>>> plt.yticks([0.0, 0.5, 1.0])
>>> plt.ylim(-0.1, 1.1)
>>> plt.xlabel('z')
>>> plt.ylabel(r'$\phi (z)$')
>>> plt.show()
```

As a result of executing the previous code example, we should now see the S-shaped (sigmoidal) curve:

We can see that $\phi(z)$ approaches 1 if z goes towards infinity ($z \to \infty$), since $e^{-z}$ becomes very small for large values of z. Similarly, $\phi(z)$ goes towards 0 for $z \to -\infty$ as the result of an increasingly large denominator. Thus, we conclude that this sigmoid function takes real number values as input and transforms them to values in the range (0, 1), with an intercept at $\phi(0) = 0.5$.
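These properties are easy to verify numerically. The sketch below (the same sigmoid as above, plus an illustrative logit helper for the round trip) checks that $\phi(0) = 0.5$, that $\phi(-z) = 1 - \phi(z)$, that outputs stay strictly between 0 and 1 on the plotted range, and that the sigmoid undoes the logit:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logit(p):
    return np.log(p / (1.0 - p))

z = np.linspace(-7, 7, 141)
phi = sigmoid(z)

print(sigmoid(0.0))                          # 0.5
print(np.allclose(sigmoid(-z), 1.0 - phi))   # True: symmetric around (0, 0.5)
print(phi.min() > 0.0, phi.max() < 1.0)      # True True on this range
print(np.allclose(logit(phi), z))            # True: sigmoid inverts the logit
```

In floating-point arithmetic, the open interval only holds for moderate inputs: for z larger than about 37, $e^{-z}$ is too small to change $1 + e^{-z}$, and the result rounds to exactly 1.0, which is one reason implementations often clip z before applying the sigmoid.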

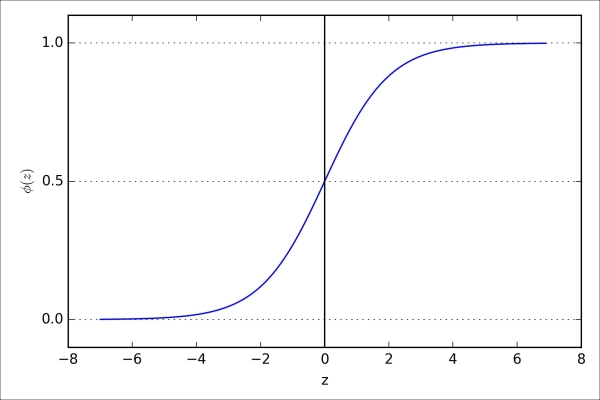

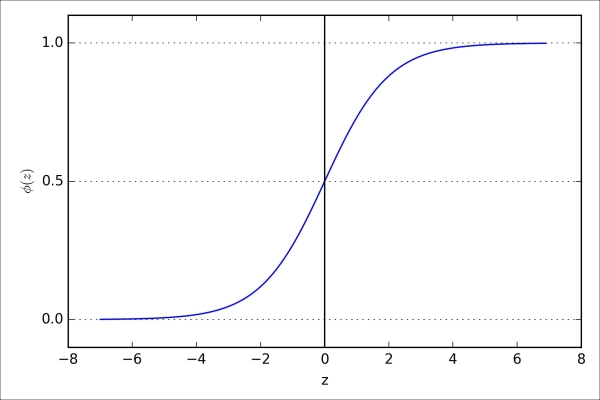

To build some intuition for the logistic regression model, we can relate it to our previous Adaline implementation in Chapter 2, Training Machine Learning Algorithms for Classification. In Adaline, we used the identity function $\phi(z) = z$ as the activation function. In logistic regression, this activation function simply becomes the sigmoid function that we defined earlier, which is illustrated in the following figure:

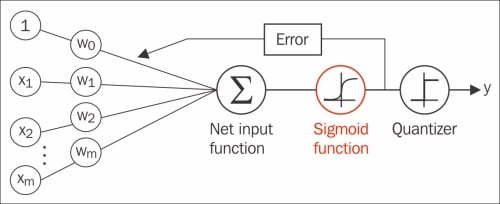

The output of the sigmoid function is then interpreted as the probability of a particular sample belonging to class 1, $\phi(z) = P(y = 1 \mid \mathbf{x}; \mathbf{w})$, given its features $\mathbf{x}$ parameterized by the weights $\mathbf{w}$. For example, if we compute $\phi(z) = 0.8$ for a particular flower sample, it means that the chance that this sample is an Iris-Versicolor flower is 80 percent. Similarly, the probability that this flower is an Iris-Setosa flower can be calculated as $P(y = 0 \mid \mathbf{x}; \mathbf{w}) = 1 - P(y = 1 \mid \mathbf{x}; \mathbf{w}) = 0.2$, or 20 percent. The predicted probability can then simply be converted into a binary outcome via a quantizer (unit step function):

$$\hat{y} = \begin{cases} 1 & \text{if } \phi(z) \geq 0.5 \\ 0 & \text{otherwise} \end{cases}$$

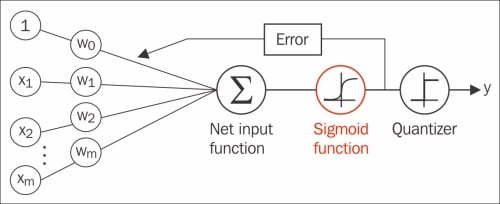

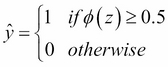

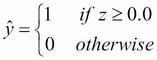

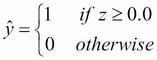

If we look at the preceding sigmoid plot, this is equivalent to the following:

$$\hat{y} = \begin{cases} 1 & \text{if } z \geq 0.0 \\ 0 & \text{otherwise} \end{cases}$$
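Because $\phi$ is strictly increasing and $\phi(0) = 0.5$, thresholding the probability at 0.5 and thresholding the net input at 0 give identical labels. A small check with arbitrary, hypothetical weights and random samples, where the prepended column of ones plays the role of $x_0 = 1$ for $w_0$:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.RandomState(1)
X = rng.normal(size=(1000, 2))
X_aug = np.hstack([np.ones((1000, 1)), X])   # prepend x_0 = 1 for w_0
w = np.array([0.3, -1.2, 2.0])               # hypothetical weights

z = X_aug.dot(w)                             # net input w^T x
y_hat_from_phi = np.where(sigmoid(z) >= 0.5, 1, 0)
y_hat_from_z = np.where(z >= 0.0, 1, 0)

print(np.array_equal(y_hat_from_phi, y_hat_from_z))  # True
```

In practice, this means the sigmoid only needs to be evaluated when the probability itself is of interest; class labels can be read directly from the sign of the net input.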

In fact, there are many applications where we are not only interested in the predicted class labels, but where estimating the class-membership probability is particularly useful. Logistic regression is used in weather forecasting, for example, to not only predict if it will rain on a particular day but also to report the chance of rain. Similarly, logistic regression can be used to predict the chance that a patient has a particular disease given certain symptoms, which is why logistic regression enjoys wide popularity in the field of medicine.

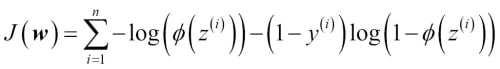

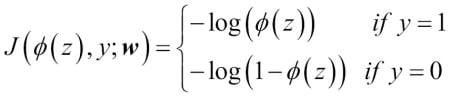

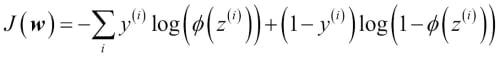

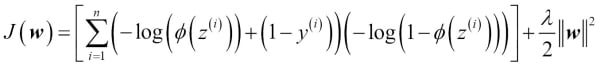

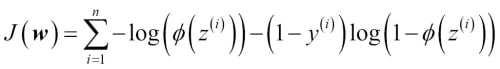

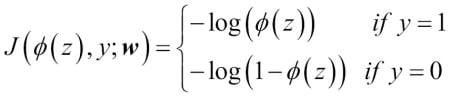

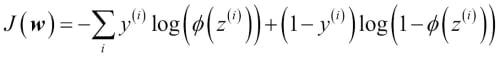

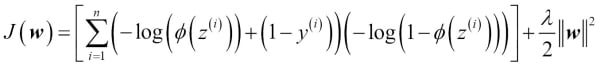

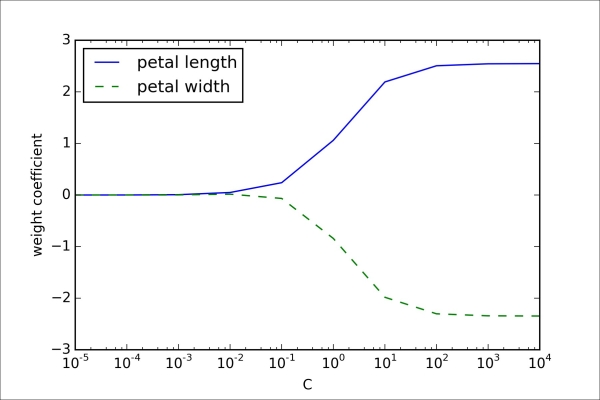

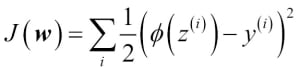

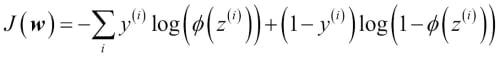

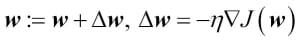

Learning the weights of the logistic cost function

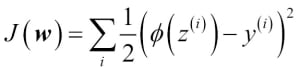

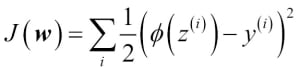

You learned how we could use the logistic regression model to predict probabilities and class labels. Now let's briefly talk about how we fit the parameters of the model, the weights $\mathbf{w}$. In the previous chapter, we defined the sum-squared-error cost function:

$$J(\mathbf{w}) = \sum_{i} \frac{1}{2} \left( \phi\big(z^{(i)}\big) - y^{(i)} \right)^2$$

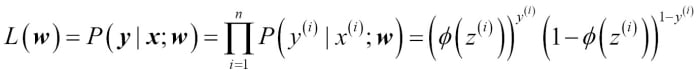

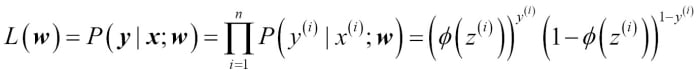

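We minimized this in order to learn the weights $\mathbf{w}$ for our Adaline classification model. To explain how we can derive the cost function for logistic regression, let's first define the likelihood $L$ that we want to maximize when we build a logistic regression model, assuming that the individual samples in our dataset are independent of one another. The formula is as follows:

$$L(\mathbf{w}) = P(\mathbf{y} \mid \mathbf{x}; \mathbf{w}) = \prod_{i=1}^{n} P\big(y^{(i)} \mid \mathbf{x}^{(i)}; \mathbf{w}\big) = \prod_{i=1}^{n} \left( \phi\big(z^{(i)}\big) \right)^{y^{(i)}} \left( 1 - \phi\big(z^{(i)}\big) \right)^{1 - y^{(i)}}$$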

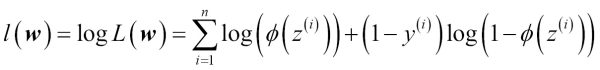

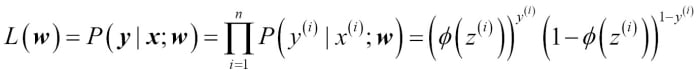

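In practice, it is easier to maximize the (natural) log of this equation, which is called the log-likelihood function:

$$l(\mathbf{w}) = \log L(\mathbf{w}) = \sum_{i=1}^{n} \left[ y^{(i)} \log\big(\phi(z^{(i)})\big) + \big(1 - y^{(i)}\big) \log\big(1 - \phi(z^{(i)})\big) \right]$$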

Firstly, applying the log function reduces the potential for numerical underflow, which can occur if the likelihoods are very small. Secondly, we can convert the product of factors into a summation of terms, which makes it easier to obtain the derivative of this function via the addition trick, as you may remember from calculus.
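To see the underflow problem concretely: multiplying many per-sample likelihood factors, each well below 1, quickly drops below the smallest representable double-precision number, whereas summing their logarithms stays perfectly representable. The factor 0.01 and the count of 1,000 samples are arbitrary illustration values:

```python
import numpy as np

# 1,000 hypothetical per-sample likelihood factors, each 0.01
factors = np.full(1000, 0.01)

likelihood = np.prod(factors)             # 0.01**1000 = 1e-2000 underflows to 0.0
log_likelihood = np.sum(np.log(factors))  # 1000 * log(0.01)

print(likelihood)       # 0.0
print(log_likelihood)   # about -4605.17
```

Once the product has collapsed to 0.0, every set of weights looks equally bad, so there is nothing left to maximize; the log-likelihood keeps the comparison meaningful.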

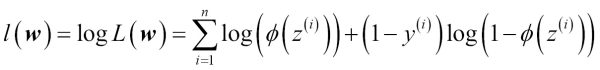

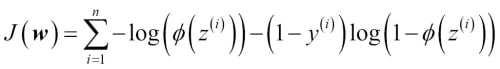

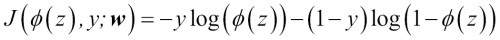

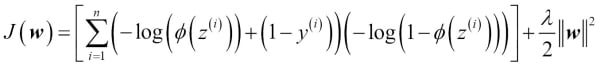

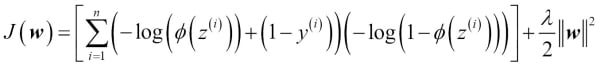

Now we could use an optimization algorithm such as gradient ascent to maximize this log-likelihood function. Alternatively, let's rewrite the log-likelihood as a cost function $J$ that can be minimized using gradient descent as in Chapter 2, Training Machine Learning Algorithms for Classification:

$$J(\mathbf{w}) = \sum_{i=1}^{n} \left[ -y^{(i)} \log\big(\phi(z^{(i)})\big) - \big(1 - y^{(i)}\big) \log\big(1 - \phi(z^{(i)})\big) \right]$$

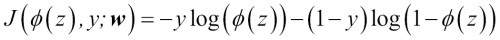

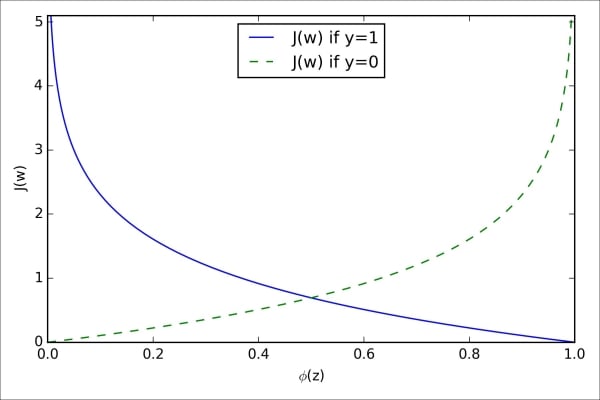

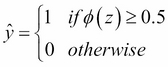

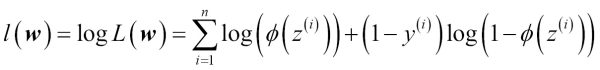

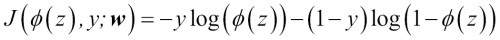

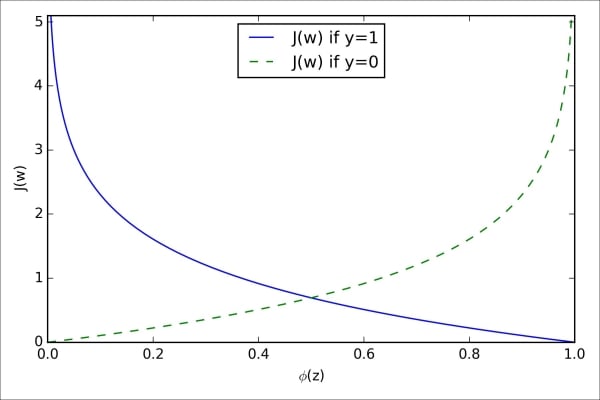

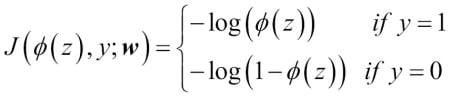

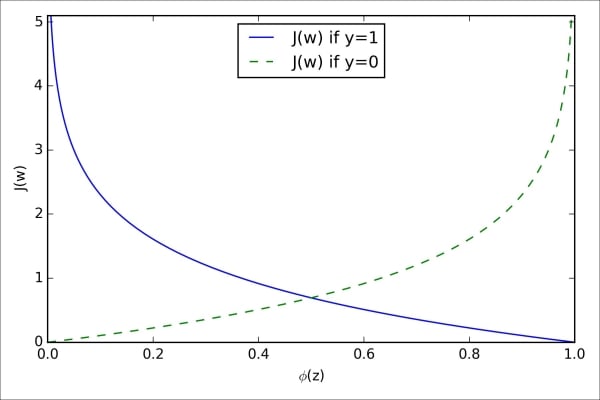

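To get a better grasp on this cost function, let's take a look at the cost that we calculate for one single-sample instance:

$$J\big(\phi(z), y; \mathbf{w}\big) = -y \log\big(\phi(z)\big) - (1 - y) \log\big(1 - \phi(z)\big)$$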

Looking at the preceding equation, we can see that the first term becomes zero if $y = 0$, and the second term becomes zero if $y = 1$:

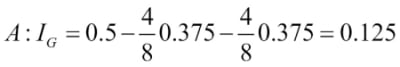

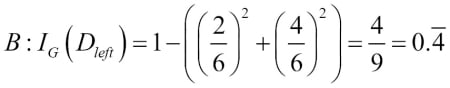

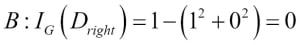

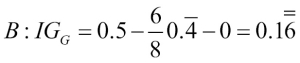

The following plot illustrates the cost for the classification of a single-sample instance for different values of $\phi(z)$:

We can see that the cost approaches 0 (solid blue line) if we correctly predict that a sample belongs to class 1. Similarly, we can see on the y axis that the cost also approaches 0 if we correctly predict $y = 0$ (dashed line). However, if the prediction is wrong, the cost goes towards infinity. The moral is that we penalize wrong predictions with an increasingly large cost.
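The two branches of the single-sample cost can also be evaluated directly. This is a small sketch; the helper name `single_sample_cost` is our own, not part of any library. It reproduces numerically what the plot shows: near-zero cost for confident correct predictions and a large cost for confident wrong ones.

```python
import numpy as np

def single_sample_cost(phi_z, y):
    """Logistic cost for one sample: -y*log(phi(z)) - (1 - y)*log(1 - phi(z))."""
    return -y * np.log(phi_z) - (1 - y) * np.log(1 - phi_z)

phi_z = np.array([0.01, 0.5, 0.99])   # a few predicted probabilities for class 1

cost_if_y1 = single_sample_cost(phi_z, 1)  # reduces to -log(phi(z))
cost_if_y0 = single_sample_cost(phi_z, 0)  # reduces to -log(1 - phi(z))

print(cost_if_y1.round(2))  # [4.61 0.69 0.01]
print(cost_if_y0.round(2))  # [0.01 0.69 4.61]
```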

Training a logistic regression model with scikit-learn

If we were to implement logistic regression ourselves, we could simply replace the cost function $J$ in our Adaline implementation from Chapter 2, Training Machine Learning Algorithms for Classification, with the new cost function:

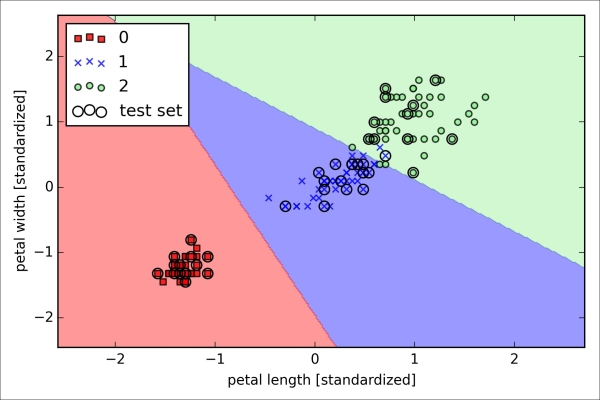

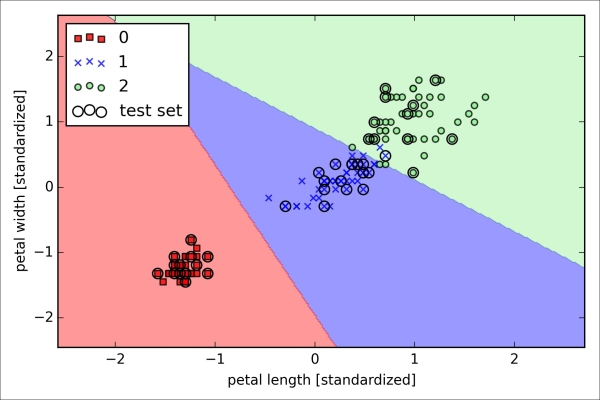

This would compute the cost of classifying all training samples per epoch and we would end up with a working logistic regression model. However, since scikit-learn implements a highly optimized version of logistic regression that also supports multiclass settings off-the-shelf, we will skip the implementation and use the sklearn.linear_model.LogisticRegression class as well as the familiar fit method to train the model on the standardized flower training dataset:

>>> from sklearn.linear_model import LogisticRegression

>>> lr = LogisticRegression(C=1000.0, random_state=0)

>>> lr.fit(X_train_std, y_train)

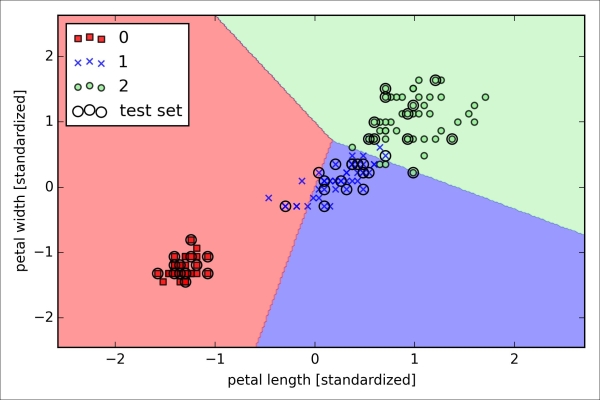

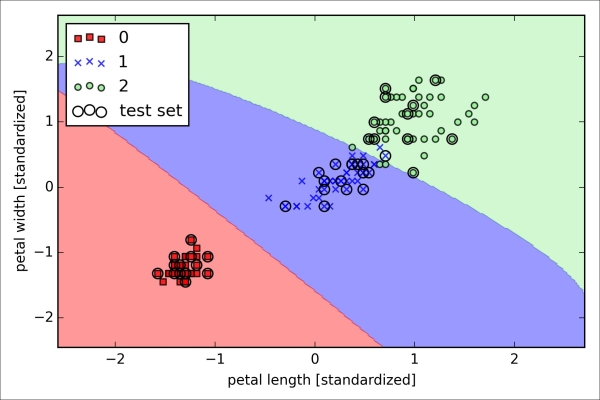

>>> plot_decision_regions(X_combined_std,

... y_combined, classifier=lr,

... test_idx=range(105,150))

>>> plt.xlabel('petal length [standardized]')

>>> plt.ylabel('petal width [standardized]')

>>> plt.legend(loc='upper left')

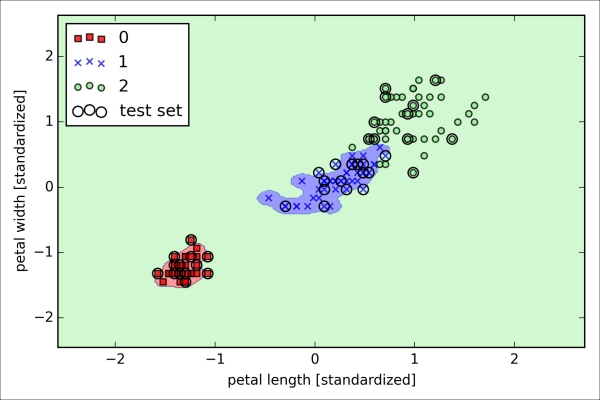

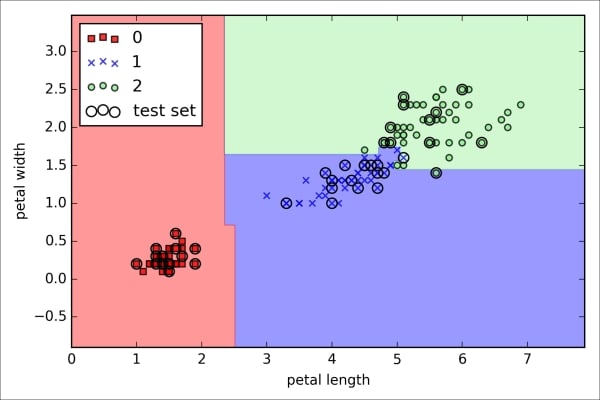

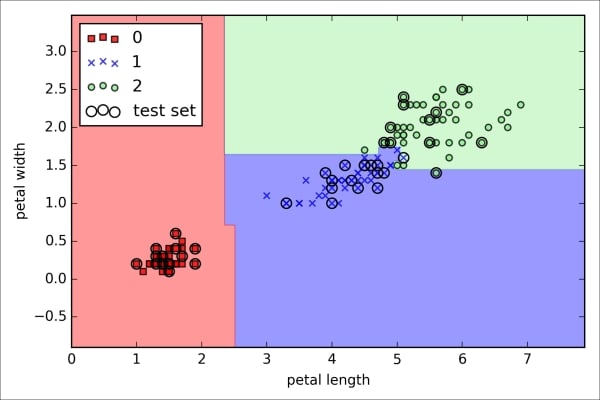

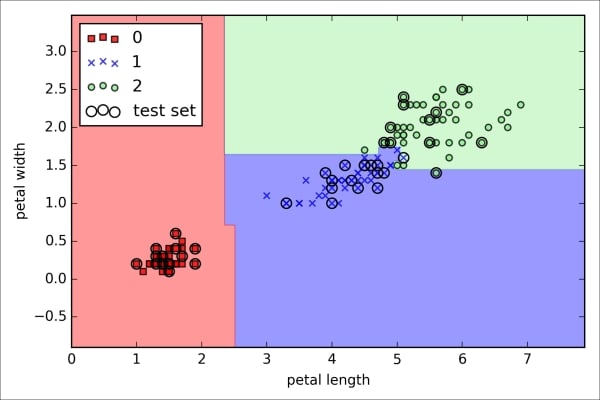

>>> plt.show()After fitting the model on the training data, we plotted the decision regions, training samples and test samples, as shown here:

Looking at the preceding code that we used to train the LogisticRegression model, you might now be wondering, "What is this mysterious parameter C?" We will get to this in a second, but let's briefly go over the concept of overfitting and regularization in the next subsection first.

Furthermore, we can predict the class-membership probabilities of the samples via the predict_proba method. For example, we can predict the probabilities of the first sample in the test dataset (predict_proba expects a two-dimensional array, so we reshape the single sample into one row):

>>> lr.predict_proba(X_test_std[0, :].reshape(1, -1))

This returns the following array:

array([[ 0.000, 0.063, 0.937]])

The preceding array tells us that the model predicts a 93.7 percent chance that the sample belongs to the Iris-Virginica class, and a 6.3 percent chance that it is an Iris-Versicolor flower.
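The following self-contained sketch checks two properties of predict_proba: each row holds one probability per class and sums to 1, and the most probable class agrees with predict. It assumes preprocessing similar to what this chapter uses (petal length and width, a 70/30 `train_test_split` with `random_state=0`, and `StandardScaler`); `max_iter=1000` is our own addition to avoid convergence warnings. Exact probabilities depend on the scikit-learn version and solver, so none are asserted here.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Assumed setup: petal length/width, 70/30 split, standardization.
iris = load_iris()
X, y = iris.data[:, [2, 3]], iris.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)
sc = StandardScaler().fit(X_train)
X_train_std, X_test_std = sc.transform(X_train), sc.transform(X_test)

lr = LogisticRegression(C=1000.0, max_iter=1000, random_state=0)
lr.fit(X_train_std, y_train)

# predict_proba expects a 2D array: one row per sample, here the first three.
proba = lr.predict_proba(X_test_std[:3, :])
print(proba.round(3))      # one row of three class probabilities per sample
print(proba.sum(axis=1))   # each row sums to 1
print(lr.classes_[proba.argmax(axis=1)], lr.predict(X_test_std[:3, :]))
```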

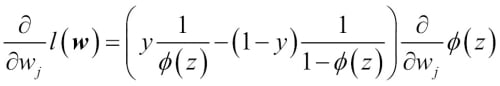

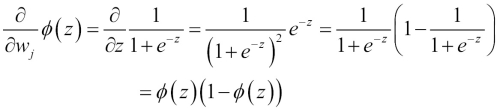

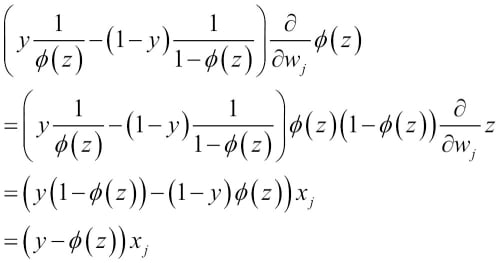

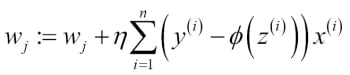

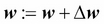

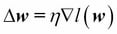

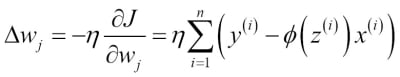

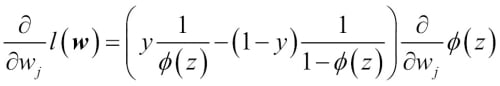

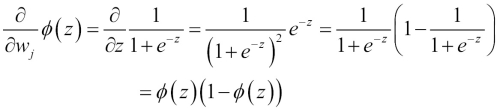

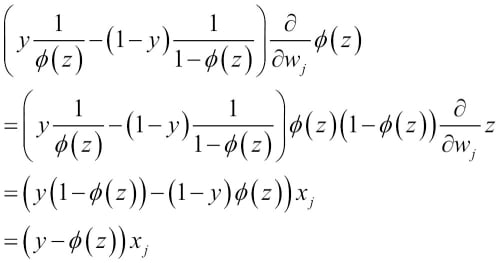

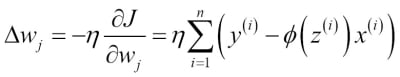

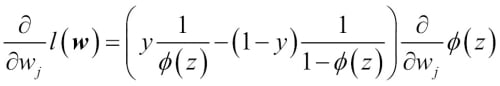

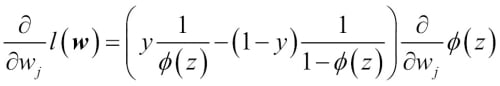

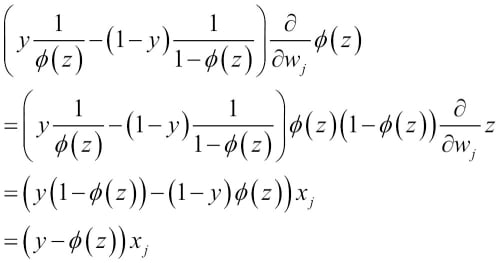

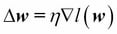

We can show that the weight update in logistic regression via gradient descent is indeed equal to the equation that we used in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification. Let's start by calculating the partial derivative of the log-likelihood function with respect to the jth weight:

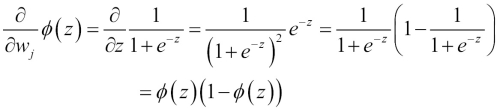

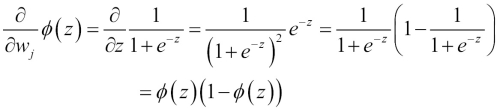

Before we continue, let's calculate the partial derivative of the sigmoid function first:

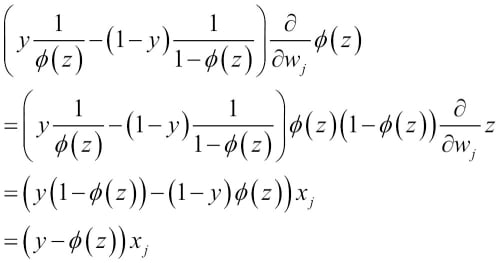
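The closed-form derivative of the sigmoid, $\phi(z)\big(1 - \phi(z)\big)$, is easy to sanity-check against a numerical approximation. A minimal sketch using central finite differences (the step size `h = 1e-5` is an arbitrary choice):

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.linspace(-6.0, 6.0, 13)
h = 1e-5

# Central finite differences approximate d(phi)/dz numerically.
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)
# Closed form from the derivation: phi(z) * (1 - phi(z)).
analytic = sigmoid(z) * (1.0 - sigmoid(z))

print(np.max(np.abs(numeric - analytic)))  # a very small number
```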

Now we can resubstitute $\frac{\partial}{\partial z} \phi(z) = \phi(z)\big(1 - \phi(z)\big)$ in our first equation to obtain the following:

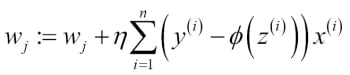

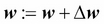

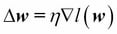

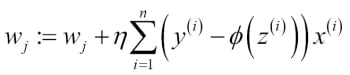

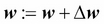

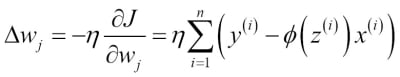

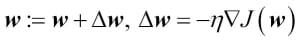

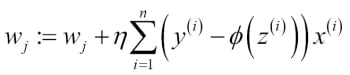
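Likewise, the result of this derivation, $\frac{\partial l}{\partial w_j} = \sum_i \big(y^{(i)} - \phi(z^{(i)})\big) x_j^{(i)}$, can be verified with a gradient check. The sketch below uses small random data (purely illustrative) and compares finite-difference estimates of the log-likelihood gradient with the analytic expression; the bias unit is omitted for brevity, which does not affect the check.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(w, X, y):
    phi = sigmoid(X @ w)
    return np.sum(y * np.log(phi) + (1 - y) * np.log(1 - phi))

def analytic_gradient(w, X, y):
    # Derived result: dl/dw_j = sum_i (y_i - phi(z_i)) * x_j^(i)
    return X.T @ (y - sigmoid(X @ w))

# Made-up data purely for the check.
rng = np.random.default_rng(1)
X = rng.normal(size=(20, 3))
y = (rng.random(20) < 0.5).astype(float)
w = rng.normal(scale=0.5, size=3)

h = 1e-6
numeric = np.array([
    (log_likelihood(w + h * e, X, y) - log_likelihood(w - h * e, X, y)) / (2 * h)
    for e in np.eye(3)   # perturb one weight at a time
])
print(numeric)
print(analytic_gradient(w, X, y))
```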

Remember that the goal is to find the weights that maximize the log-likelihood, so we would perform the update for each weight as follows:

Since we update all weights simultaneously, we can write the general update rule as follows:

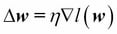

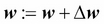

We define $\Delta \mathbf{w}$ as follows:

Since maximizing the log-likelihood is equivalent to minimizing the cost function $J$ that we defined earlier, we can write the gradient descent update rule as follows:

This is the same as the gradient descent rule in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification.
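Putting the pieces together, the update rule above is all that is needed for a from-scratch logistic regression trained by batch gradient descent. The following is a minimal sketch in the spirit of the Adaline implementation from Chapter 2, not the book's or scikit-learn's code: the class name `LogisticRegressionGD`, its defaults, and the toy data are our own assumptions, and it handles only binary labels 0 and 1.

```python
import numpy as np

class LogisticRegressionGD:
    """Logistic regression trained with full-batch gradient descent.

    A minimal sketch (not a library class): labels must be 0 or 1, and the
    update is the Adaline-style rule  w := w + eta * X^T (y - phi(z)).
    """

    def __init__(self, eta=0.001, n_iter=200, random_state=1):
        self.eta = eta
        self.n_iter = n_iter
        self.random_state = random_state

    def net_input(self, X):
        return X @ self.w_[1:] + self.w_[0]

    def activation(self, z):
        # Clipping z avoids overflow warnings in np.exp for extreme net inputs.
        return 1.0 / (1.0 + np.exp(-np.clip(z, -250, 250)))

    def fit(self, X, y):
        rgen = np.random.RandomState(self.random_state)
        self.w_ = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
        self.cost_ = []
        for _ in range(self.n_iter):
            output = self.activation(self.net_input(X))
            errors = y - output
            self.w_[1:] += self.eta * X.T @ errors   # same as gradient descent on J(w)
            self.w_[0] += self.eta * errors.sum()    # bias unit (x_0 = 1)
            # Cost J(w) = negative log-likelihood; probabilities clipped so log() stays finite.
            p = np.clip(output, 1e-12, 1 - 1e-12)
            self.cost_.append(-y @ np.log(p) - (1 - y) @ np.log(1 - p))
        return self

    def predict(self, X):
        # Equivalent to thresholding phi(z) at 0.5.
        return np.where(self.net_input(X) >= 0.0, 1, 0)

# Usage on made-up, well-separated 2D data.
rng = np.random.RandomState(0)
X = np.vstack([rng.normal(-2.0, 1.0, size=(50, 2)),
               rng.normal(2.0, 1.0, size=(50, 2))])
y = np.hstack([np.zeros(50), np.ones(50)])

model = LogisticRegressionGD(eta=0.001, n_iter=200).fit(X, y)
print(model.cost_[0], model.cost_[-1])   # the cost drops over the epochs
print((model.predict(X) == y).mean())    # training accuracy
```

Compared with Adaline, only the activation (sigmoid instead of identity) and the recorded cost change; the weight update line itself is identical, which is exactly the point of the derivation.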

Tackling overfitting via regularization

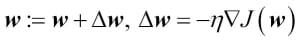

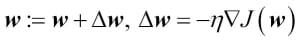

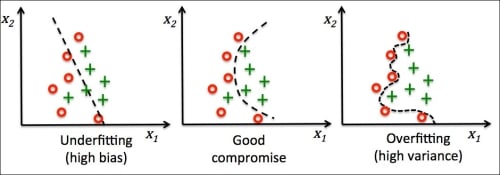

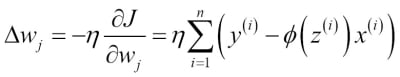

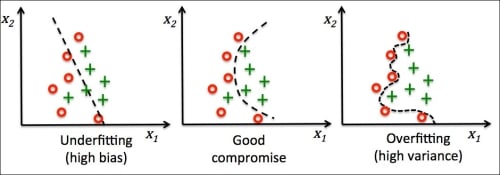

Overfitting is a common problem in machine learning, where a model performs well on training data but does not generalize well to unseen data (test data). If a model suffers from overfitting, we also say that the model has a high variance, which can be caused by having too many parameters that lead to a model that is too complex given the underlying data. Similarly, our model can also suffer from underfitting (high bias), which means that our model is not complex enough to capture the pattern in the training data well and therefore also suffers from low performance on unseen data.

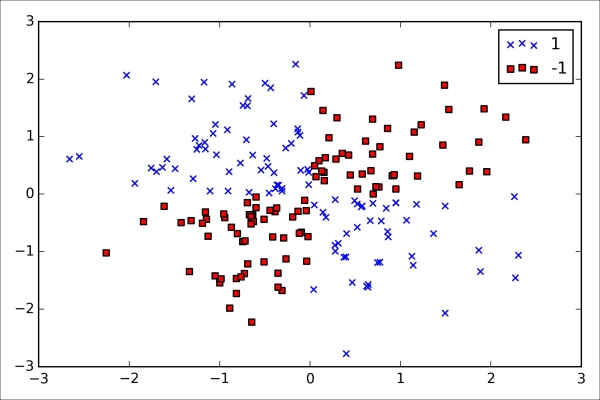

Although we have only encountered linear models for classification so far, the problem of overfitting and underfitting is best illustrated by using a more complex, nonlinear decision boundary, as shown in the following figure:

Note

Variance measures the consistency (or variability) of the model prediction for a particular sample instance if we retrain the model multiple times, for example, on different subsets of the training dataset. A model with high variance is sensitive to the randomness in the training data. In contrast, bias measures how far off the predictions are from the correct values in general if we rebuild the model multiple times on different training datasets; bias is the measure of the systematic error that is not due to randomness.

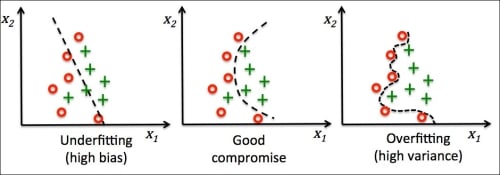

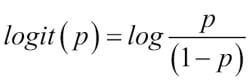

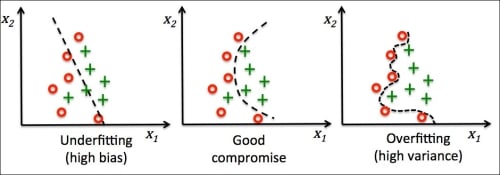

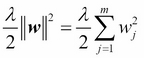

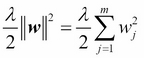

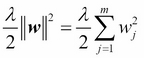

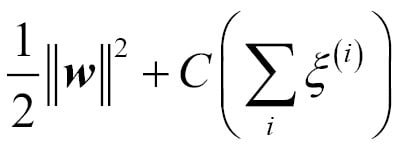

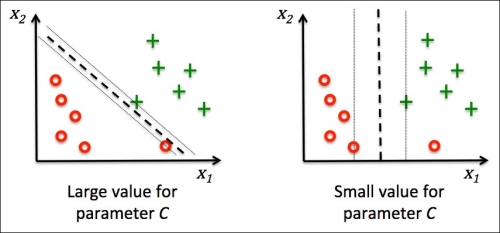

One way of finding a good bias-variance tradeoff is to tune the complexity of the model via regularization. Regularization is a very useful method to handle collinearity (high correlation among features), filter out noise from data, and eventually prevent overfitting. The concept behind regularization is to introduce additional information (bias) to penalize extreme parameter weights. The most common form of regularization is the so-called L2 regularization (sometimes also called L2 shrinkage or weight decay), which can be written as follows:

Here, $\lambda$ is the so-called regularization parameter.

Note

Regularization is another reason why feature scaling such as standardization is important. For regularization to work properly, we need to ensure that all our features are on comparable scales.

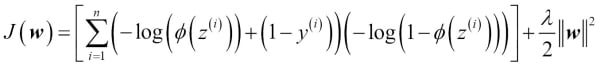

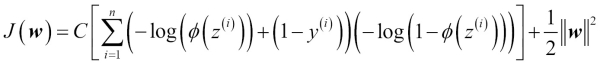

In order to apply regularization, we just need to add the regularization term to the cost function that we defined for logistic regression to shrink the weights:

Via the regularization parameter $\lambda$, we can then control how well we fit the training data while keeping the weights small. By increasing the value of $\lambda$, we increase the regularization strength.

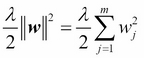

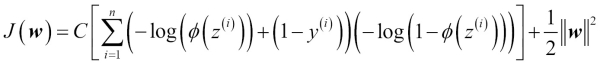

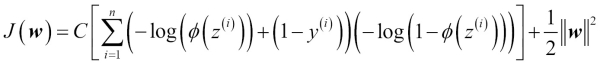

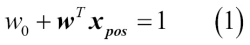
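As a small illustration of the L2 penalty, the sketch below adds $\frac{\lambda}{2}\lVert \mathbf{w} \rVert^2$ to the logistic cost and evaluates it for a fixed, made-up weight vector at several values of $\lambda$. Leaving the bias unit out of the penalty is an assumption here, though a common convention; the function name `l2_regularized_cost` is our own.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def l2_regularized_cost(w, b, X, y, lam):
    """Logistic cost J(w) plus the L2 penalty (lam / 2) * ||w||^2.

    Assumption: the bias b is left out of the penalty.
    """
    phi = sigmoid(X @ w + b)
    cost = -np.sum(y * np.log(phi) + (1 - y) * np.log(1 - phi))
    return cost + 0.5 * lam * (w @ w)

# Made-up data and weights, just to see the penalty at work.
X = np.array([[0.5, 1.0], [-1.0, 0.2], [1.5, -0.5], [-0.3, -1.2]])
y = np.array([1.0, 0.0, 1.0, 0.0])
w = np.array([1.5, -2.0])
b = 0.1

costs = [l2_regularized_cost(w, b, X, y, lam) for lam in (0.0, 0.1, 1.0, 10.0)]
print(costs)  # grows with lam, since the same weights are penalized more
```

When such a cost is minimized rather than merely evaluated, a larger $\lambda$ makes large weights more expensive, so the optimizer settles on smaller weights at the price of a somewhat worse fit to the training data.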

The parameter C that is implemented for the LogisticRegression class in scikit-learn comes from a convention in support vector machines, which will be the topic of the next section. C is directly related to the regularization parameter $\lambda$; it is its inverse: $C = \frac{1}{\lambda}$.

So we can rewrite the regularized cost function of logistic regression as follows:

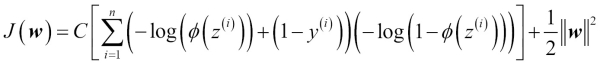

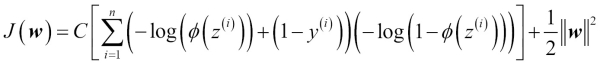

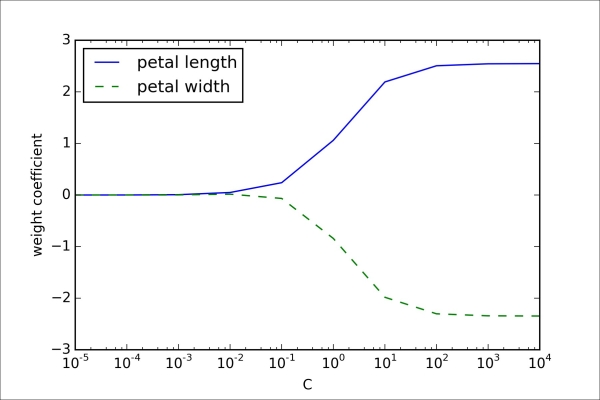

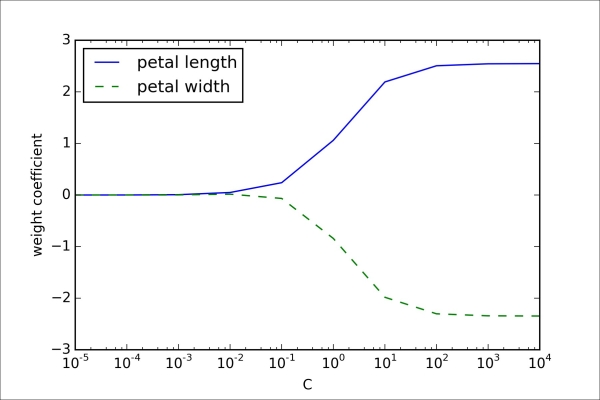

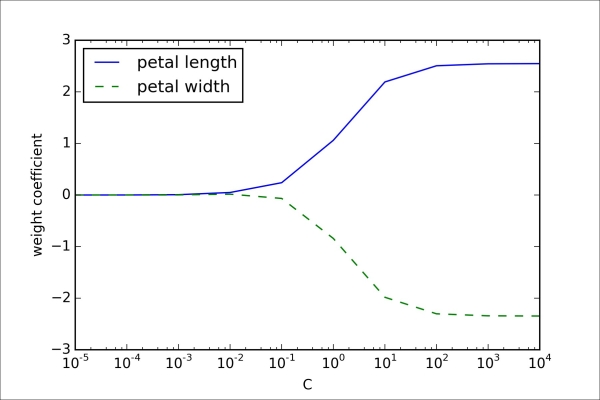

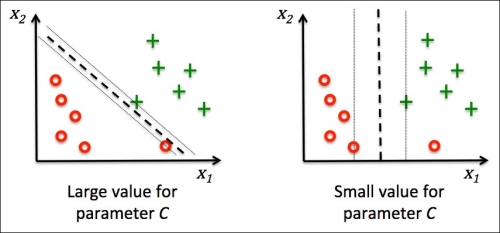
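The relationship $C = 1/\lambda$ can be checked against scikit-learn itself. According to the scikit-learn documentation, LogisticRegression with the default L2 penalty minimizes $C \sum_i \text{loss}^{(i)} + \frac{1}{2}\lVert \mathbf{w} \rVert^2$; dividing by C gives the regularized cost with $\lambda = 1/C$. At the fitted optimum, the gradient $\sum_i \big(\phi(z^{(i)}) - y^{(i)}\big)\mathbf{x}^{(i)} + \lambda \mathbf{w}$ should therefore be numerically zero. This sketch assumes a binary subset of Iris (Versicolor vs. Virginica, standardized petal features) and a tight `tol` so that the optimizer converges well.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Assumed binary subset: Versicolor (label 0 here) vs. Virginica (label 1 here),
# using standardized petal length and petal width.
iris = load_iris()
mask = iris.target != 0
X = StandardScaler().fit_transform(iris.data[mask][:, [2, 3]])
y = (iris.target[mask] == 2).astype(float)

C = 1.0
lr = LogisticRegression(C=C, tol=1e-10, max_iter=10000).fit(X, y)
w = lr.coef_.ravel()
phi = lr.predict_proba(X)[:, 1]          # phi(z) for every sample

# scikit-learn minimizes  C * sum(logistic loss) + 0.5 * ||w||^2.
# Dividing by C gives  J(w) + (lambda / 2) * ||w||^2  with lambda = 1 / C,
# whose gradient with respect to w must vanish at the optimum:
lam = 1.0 / C
gradient = X.T @ (phi - y) + lam * w
print(np.abs(gradient).max())            # close to zero
```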

Consequently, decreasing the value of the inverse regularization parameter C means that we are increasing the regularization strength, which we can visualize by plotting the L2 regularization path for the two weight coefficients:

>>> import numpy as np
>>> import matplotlib.pyplot as plt
>>> weights, params = [], []
>>> for c in np.arange(-5, 5):
...     # use a float base: NumPy does not allow integers to negative integer powers
...     lr = LogisticRegression(C=10.**c, random_state=0)
...     lr.fit(X_train_std, y_train)
...     weights.append(lr.coef_[1])
...     params.append(10.**c)
...
>>> weights = np.array(weights)
>>> plt.plot(params, weights[:, 0],
...          label='petal length')
>>> plt.plot(params, weights[:, 1], linestyle='--',
...          label='petal width')
>>> plt.ylabel('weight coefficient')
>>> plt.xlabel('C')
>>> plt.legend(loc='upper left')
>>> plt.xscale('log')
>>> plt.show()

By executing the preceding code, we fitted ten logistic regression models with different values for the inverse-regularization parameter C. For the purposes of illustration, we only collected the weight coefficients of the class 1 vs. all classifier (lr.coef_[1], that is, the second class in the dataset). Remember that we are using the OvR technique for multiclass classification.

As we can see in the resulting plot, the weight coefficients shrink if we decrease the parameter C, that is, if we increase the regularization strength:

Note

Since an in-depth coverage of the individual classification algorithms exceeds the scope of this book, I warmly recommend Dr. Scott Menard's Logistic Regression: From Introductory to Advanced Concepts and Applications, Sage Publications, to readers who want to learn more about logistic regression.

Logistic regression intuition and conditional probabilities

Logistic regression is a classification model that is very easy to implement but performs very well on linearly separable classes. It is one of the most widely used algorithms for classification in industry. Similar to the perceptron and Adaline, the logistic regression model in this chapter is also a linear model for binary classification that can be extended to multiclass classification via the OvR technique.

To explain the idea behind logistic regression as a probabilistic model, let's first introduce the odds in favor of a particular event, which can be written as $\frac{p}{1 - p}$, where $p$ stands for the probability of the positive event. The term positive event does not necessarily mean good, but refers to the event that we want to predict, for example, the probability that a patient has a certain disease; we can think of the positive event as class label $y = 1$. We can then further define the logit function, which is simply the logarithm of the odds (log-odds):

$$\text{logit}(p) = \log \frac{p}{1 - p}$$

The logit function takes input values in the range 0 to 1 (exclusive) and transforms them to values over the entire real number range, which we can use to express a linear relationship between feature values and the log-odds:

$$\text{logit}\big(p(y = 1 \mid \mathbf{x})\big) = w_0 x_0 + w_1 x_1 + \dots + w_m x_m = \sum_{i=0}^{m} w_i x_i = \mathbf{w}^T \mathbf{x}$$

Here, $p(y = 1 \mid \mathbf{x})$ is the conditional probability that a particular sample belongs to class 1 given its features $\mathbf{x}$.

Now what we are actually interested in is predicting the probability that a certain sample belongs to a particular class. The function that gives us this probability is the inverse of the logit function; it is called the logistic function, sometimes simply abbreviated to sigmoid function due to its characteristic S-shape:

$$\phi(z) = \frac{1}{1 + e^{-z}}$$

Here, $z$ is the net input, that is, the linear combination of weights and sample features, which can be calculated as $z = \mathbf{w}^T \mathbf{x} = w_0 x_0 + w_1 x_1 + \dots + w_m x_m$.
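The odds, the logit, and the sigmoid fit together as described above: the logit maps a probability to the real line, and the sigmoid maps it back. A minimal sketch with a few illustrative probabilities (the helper names `odds`, `logit`, and `sigmoid` are our own):

```python
import numpy as np

def odds(p):
    """Odds in favor of the positive event: p / (1 - p)."""
    return p / (1.0 - p)

def logit(p):
    """Log-odds: maps probabilities in (0, 1) to the whole real line."""
    return np.log(odds(p))

def sigmoid(z):
    """Logistic sigmoid, the inverse of the logit."""
    return 1.0 / (1.0 + np.exp(-z))

p = np.array([0.1, 0.5, 0.8, 0.99])
print(odds(p))            # approximately [0.111, 1.0, 4.0, 99.0]
print(logit(p))           # negative below p = 0.5, zero at 0.5, positive above
print(sigmoid(logit(p)))  # recovers p (up to rounding)
```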

Now let's simply plot the sigmoid function for some values in the range -7 to 7 to see what it looks like:

>>> import matplotlib.pyplot as plt
>>> import numpy as np
>>> def sigmoid(z):
...     return 1.0 / (1.0 + np.exp(-z))
...
>>> z = np.arange(-7, 7, 0.1)
>>> phi_z = sigmoid(z)
>>> plt.plot(z, phi_z)
>>> plt.axvline(0.0, color='k')
>>> plt.axhspan(0.0, 1.0, facecolor='1.0', alpha=1.0, ls='dotted')
>>> plt.axhline(y=0.5, ls='dotted', color='k')
>>> plt.yticks([0.0, 0.5, 1.0])
>>> plt.ylim(-0.1, 1.1)
>>> plt.xlabel('z')
>>> plt.ylabel(r'$\phi (z)$')  # raw string keeps the backslash for mathtext
>>> plt.show()

As a result of executing the previous code example, we should now see the S-shaped (sigmoidal) curve:

We can see that $\phi(z)$ approaches 1 if $z$ goes towards infinity ($z \to \infty$), since $e^{-z}$ becomes very small for large values of $z$. Similarly, $\phi(z)$ goes towards 0 for $z \to -\infty$ as the result of an increasingly large denominator. Thus, we conclude that this sigmoid function takes real number values as input and transforms them to values in the range (0, 1) with an intercept at $\phi(0) = 0.5$.
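These limiting properties, and the symmetry $\phi(-z) = 1 - \phi(z)$ that follows from the definition, can be confirmed numerically. A short sketch with a handful of arbitrary $z$ values:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-20.0, -5.0, 0.0, 5.0, 20.0])
phi = sigmoid(z)
print(phi)   # increasing, close to 0 on the left and close to 1 on the right

# phi(0) = 1 / (1 + e^0) = 0.5: the intercept of the curve.
# phi(-z) = 1 - phi(z): the curve is point-symmetric about (0, 0.5).
print(sigmoid(0.0), np.allclose(sigmoid(-z), 1.0 - sigmoid(z)))

# Mathematically phi stays strictly between 0 and 1; in float64, however,
# e^{-z} eventually becomes negligible next to 1 and phi rounds to exactly 1.0.
print(sigmoid(50.0))
```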

To build some intuition for the logistic regression model, we can relate it to our previous Adaline implementation in Chapter 2, Training Machine Learning Algorithms for Classification. In Adaline, we used the identity function $\phi(z) = z$ as the activation function. In logistic regression, this activation function simply becomes the sigmoid function that we defined earlier, which is illustrated in the following figure:

The output of the sigmoid function is then interpreted as the probability of a particular sample belonging to class 1, $\phi(z) = P(y = 1 \mid \mathbf{x}; \mathbf{w})$, given its features $\mathbf{x}$ parameterized by the weights $\mathbf{w}$. For example, if we compute $\phi(z) = 0.8$ for a particular flower sample, it means that the chance that this sample is an Iris-Versicolor flower is 80 percent. Similarly, the probability that this flower is an Iris-Setosa flower can be calculated as $P(y = 0 \mid \mathbf{x}; \mathbf{w}) = 1 - P(y = 1 \mid \mathbf{x}; \mathbf{w}) = 0.2$, or 20 percent. The predicted probability can then simply be converted into a binary outcome via a quantizer (unit step function):

$$\hat{y} = \begin{cases} 1 & \text{if } \phi(z) \ge 0.5 \\ 0 & \text{otherwise} \end{cases}$$

If we look at the preceding sigmoid plot, this is equivalent to the following:

$$\hat{y} = \begin{cases} 1 & \text{if } z \ge 0.0 \\ 0 & \text{otherwise} \end{cases}$$
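The equivalence between thresholding $\phi(z)$ at 0.5 and thresholding $z$ at 0 follows from the sigmoid being monotonically increasing with $\phi(0) = 0.5$. A quick sketch on randomly generated net inputs (illustrative only):

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
z = rng.normal(scale=3.0, size=1000)           # made-up net inputs

y_hat_phi = np.where(sigmoid(z) >= 0.5, 1, 0)  # threshold the probability at 0.5
y_hat_z = np.where(z >= 0.0, 1, 0)             # threshold the net input at 0

# Identical, because phi is monotonic and phi(0) = 0.5 (floating-point rounding
# could only matter for |z| within about 1e-16 of zero).
print(np.array_equal(y_hat_phi, y_hat_z))
```

In practice this means a classifier never needs to evaluate the sigmoid just to produce class labels; the sign of the net input suffices, and the sigmoid is only needed when probabilities are wanted.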

In fact, there are many applications where we are not only interested in the predicted class labels, but where estimating the class-membership probability is particularly useful. Logistic regression is used in weather forecasting, for example, to not only predict if it will rain on a particular day but also to report the chance of rain. Similarly, logistic regression can be used to predict the chance that a patient has a particular disease given certain symptoms, which is why logistic regression enjoys wide popularity in the field of medicine.

Learning the weights of the logistic cost function

You learned how we could use the logistic regression model to predict probabilities and class labels. Now let's briefly talk about the parameters of the model, for example, weights w. In the previous chapter, we defined the sum-squared-error cost function:

We minimized this in order to learn the weights w for our Adaline classification model. To explain how we can derive the cost function for logistic regression, let's first define the likelihood L that we want to maximize when we build a logistic regression model, assuming that the individual samples in our dataset are independent of one another. The formula is as follows:

In practice, it is easier to maximize the (natural) log of this equation, which is called the log-likelihood function:

Firstly, applying the log function reduces the potential for numerical underflow, which can occur if the likelihoods are very small. Secondly, we can convert the product of factors into a summation of factors, which makes it easier to obtain the derivative of this function via the addition trick, as you may remember from calculus.

Now we could use an optimization algorithm such as gradient ascent to maximize this log-likelihood function. Alternatively, let's rewrite the log-likelihood as a cost function  that can be minimized using gradient descent as in Chapter 2, Training Machine Learning Algorithms for Classification:

that can be minimized using gradient descent as in Chapter 2, Training Machine Learning Algorithms for Classification:

To get a better grasp on this cost function, let's take a look at the cost that we calculate for one single-sample instance:

Looking at the preceding equation, we can see that the first term becomes zero if  , and the second term becomes zero if

, and the second term becomes zero if  , respectively:

, respectively:

The following plot illustrates the cost for the classification of a single-sample instance for different values of  :

:

We can see that the cost approaches 0 (plain blue line) if we correctly predict that a sample belongs to class 1. Similarly, we can see on the y axis that the cost also approaches 0 if we correctly predict  (dashed line). However, if the prediction is wrong, the cost goes towards infinity. The moral is that we penalize wrong predictions with an increasingly larger cost.

(dashed line). However, if the prediction is wrong, the cost goes towards infinity. The moral is that we penalize wrong predictions with an increasingly larger cost.

Training a logistic regression model with scikit-learn

If we were to implement logistic regression ourselves, we could simply substitute the cost function  in our Adaline implementation from Chapter 2, Training Machine Learning Algorithms for Classification, by the new cost function:

in our Adaline implementation from Chapter 2, Training Machine Learning Algorithms for Classification, by the new cost function:

This would compute the cost of classifying all training samples per epoch and we would end up with a working logistic regression model. However, since scikit-learn implements a highly optimized version of logistic regression that also supports multiclass settings off-the-shelf, we will skip the implementation and use the sklearn.linear_model.LogisticRegression class as well as the familiar fit method to train the model on the standardized flower training dataset:

>>> from sklearn.linear_model import LogisticRegression

>>> lr = LogisticRegression(C=1000.0, random_state=0)

>>> lr.fit(X_train_std, y_train)

>>> plot_decision_regions(X_combined_std,

... y_combined, classifier=lr,

... test_idx=range(105,150))

>>> plt.xlabel('petal length [standardized]')

>>> plt.ylabel('petal width [standardized]')

>>> plt.legend(loc='upper left')

>>> plt.show()After fitting the model on the training data, we plotted the decision regions, training samples and test samples, as shown here:

Looking at the preceding code that we used to train the LogisticRegression model, you might now be wondering, "What is this mysterious parameter C?" We will get to this in a second, but let's briefly go over the concept of overfitting and regularization in the next subsection first.

Furthermore, we can predict the class-membership probability of the samples via the predict_proba method. For example, we can predict the probabilities of the first Iris-Setosa sample:

>>> lr.predict_proba(X_test_std[0,:])

This returns the following array:

array([[ 0.000, 0.063, 0.937]])

The preceding array tells us that the model predicts a chance of 93.7 percent that the sample belongs to the Iris-Virginica class, and a 6.3 percent chance that the sample is a Iris-Versicolor flower.

We can show that the weight update in logistic regression via gradient descent is indeed equal to the equation that we used in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification. Let's start by calculating the partial derivative of the log-likelihood function with respect to the jth weight:

Before we continue, let's calculate the partial derivative of the sigmoid function first:

Now we can resubstitute  =

=  in our first equation to obtain the following:

in our first equation to obtain the following:

Remember that the goal is to find the weights that maximize the log-likelihood so that we would perform the update for each weight as follows:

Since we update all weights simultaneously, we can write the general update rule as follows:

We define  as follows:

as follows:

Since maximizing the log-likelihood is equal to minimizing the cost function  that we defined earlier, we can write the gradient descent update rule as follows:

that we defined earlier, we can write the gradient descent update rule as follows:

This is equal to the gradient descent rule in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification.

Tackling overfitting via regularization

Overfitting is a common problem in machine learning, where a model performs well on training data but does not generalize well to unseen data (test data). If a model suffers from overfitting, we also say that the model has a high variance, which can be caused by having too many parameters that lead to a model that is too complex given the underlying data. Similarly, our model can also suffer from underfitting (high bias), which means that our model is not complex enough to capture the pattern in the training data well and therefore also suffers from low performance on unseen data.

Although we have only encountered linear models for classification so far, the problem of overfitting and underfitting can be best illustrated by using a more complex, nonlinear decision boundary as shown in the following figure:

Note

Variance measures the consistency (or variability) of the model prediction for a particular sample instance if we would retrain the model multiple times, for example, on different subsets of the training dataset. We can say that the model is sensitive to the randomness in the training data. In contrast, bias measures how far off the predictions are from the correct values in general if we rebuild the model multiple times on different training datasets; bias is the measure of the systematic error that is not due to randomness.

One way of finding a good bias-variance tradeoff is to tune the complexity of the model via regularization. Regularization is a very useful method to handle collinearity (high correlation among features), filter out noise from data, and eventually prevent overfitting. The concept behind regularization is to introduce additional information (bias) to penalize extreme parameter weights. The most common form of regularization is the so-called L2 regularization (sometimes also called L2 shrinkage or weight decay), which can be written as follows:

Here,  is the so-called regularization parameter.

is the so-called regularization parameter.

Note

Regularization is another reason why feature scaling such as standardization is important. For regularization to work properly, we need to ensure that all our features are on comparable scales.

In order to apply regularization, we just need to add the regularization term to the cost function that we defined for logistic regression to shrink the weights:

Via the regularization parameter  , we can then control how well we fit the training data while keeping the weights small. By increasing the value of

, we can then control how well we fit the training data while keeping the weights small. By increasing the value of  , we increase the regularization strength.

, we increase the regularization strength.

The parameter C that is implemented for the LogisticRegression class in scikit-learn comes from a convention in support vector machines, which will be the topic of the next section. C is directly related to the regularization parameter  , which is its inverse:

, which is its inverse:

So we can rewrite the regularized cost function of logistic regression as follows:

Consequently, decreasing the value of the inverse regularization parameter C means that we are increasing the regularization strength, which we can visualize by plotting the L2 regularization path for the two weight coefficients:

>>> weights, params = [], []

>>> for c in np.arange(-5, 5):

... lr = LogisticRegression(C=10**c, random_state=0)

... lr.fit(X_train_std, y_train)

... weights.append(lr.coef_[1])

... params.append(10**c)

>>> weights = np.array(weights)

>>> plt.plot(params, weights[:, 0],

... label='petal length')

>>> plt.plot(params, weights[:, 1], linestyle='--',

... label='petal width')

>>> plt.ylabel('weight coefficient')

>>> plt.xlabel('C')

>>> plt.legend(loc='upper left')

>>> plt.xscale('log')

>>> plt.show()By executing the preceding code, we fitted ten logistic regression models with different values for the inverse-regularization parameter C. For the purposes of illustration, we only collected the weight coefficients of the class 2 vs. all classifier. Remember that we are using the OvR technique for multiclass classification.

As we can see in the resulting plot, the weight coefficients shrink if we decrease the parameter C, that is, if we increase the regularization strength:

Note

Since an in-depth coverage of the individual classification algorithms exceeds the scope of this book, I warmly recommend Dr. Scott Menard's Logistic Regression: From Introductory to Advanced Concepts and Applications, Sage Publications, to readers who want to learn more about logistic regression.

Learning the weights of the logistic cost function

You learned how we could use the logistic regression model to predict probabilities and class labels. Now let's briefly talk about the parameters of the model, for example, weights w. In the previous chapter, we defined the sum-squared-error cost function:

We minimized this in order to learn the weights w for our Adaline classification model. To explain how we can derive the cost function for logistic regression, let's first define the likelihood L that we want to maximize when we build a logistic regression model, assuming that the individual samples in our dataset are independent of one another. The formula is as follows:

In practice, it is easier to maximize the (natural) log of this equation, which is called the log-likelihood function:

Firstly, applying the log function reduces the potential for numerical underflow, which can occur if the likelihoods are very small. Secondly, we can convert the product of factors into a summation of factors, which makes it easier to obtain the derivative of this function via the addition trick, as you may remember from calculus.

Now we could use an optimization algorithm such as gradient ascent to maximize this log-likelihood function. Alternatively, let's rewrite the log-likelihood as a cost function  that can be minimized using gradient descent as in Chapter 2, Training Machine Learning Algorithms for Classification:

that can be minimized using gradient descent as in Chapter 2, Training Machine Learning Algorithms for Classification:

To get a better grasp on this cost function, let's take a look at the cost that we calculate for one single-sample instance:

Looking at the preceding equation, we can see that the first term becomes zero if  , and the second term becomes zero if

, and the second term becomes zero if  , respectively:

, respectively:

The following plot illustrates the cost for the classification of a single-sample instance for different values of  :

:

We can see that the cost approaches 0 (plain blue line) if we correctly predict that a sample belongs to class 1. Similarly, we can see on the y axis that the cost also approaches 0 if we correctly predict  (dashed line). However, if the prediction is wrong, the cost goes towards infinity. The moral is that we penalize wrong predictions with an increasingly larger cost.

(dashed line). However, if the prediction is wrong, the cost goes towards infinity. The moral is that we penalize wrong predictions with an increasingly larger cost.

Training a logistic regression model with scikit-learn

If we were to implement logistic regression ourselves, we could simply substitute the cost function  in our Adaline implementation from Chapter 2, Training Machine Learning Algorithms for Classification, by the new cost function:

in our Adaline implementation from Chapter 2, Training Machine Learning Algorithms for Classification, by the new cost function:

This would compute the cost of classifying all training samples per epoch and we would end up with a working logistic regression model. However, since scikit-learn implements a highly optimized version of logistic regression that also supports multiclass settings off-the-shelf, we will skip the implementation and use the sklearn.linear_model.LogisticRegression class as well as the familiar fit method to train the model on the standardized flower training dataset:

>>> from sklearn.linear_model import LogisticRegression

>>> lr = LogisticRegression(C=1000.0, random_state=0)

>>> lr.fit(X_train_std, y_train)

>>> plot_decision_regions(X_combined_std,

... y_combined, classifier=lr,

... test_idx=range(105,150))

>>> plt.xlabel('petal length [standardized]')

>>> plt.ylabel('petal width [standardized]')

>>> plt.legend(loc='upper left')

>>> plt.show()After fitting the model on the training data, we plotted the decision regions, training samples and test samples, as shown here:

Looking at the preceding code that we used to train the LogisticRegression model, you might now be wondering, "What is this mysterious parameter C?" We will get to this in a second, but let's briefly go over the concept of overfitting and regularization in the next subsection first.

Furthermore, we can predict the class-membership probability of the samples via the predict_proba method. For example, we can predict the probabilities of the first Iris-Setosa sample:

>>> lr.predict_proba(X_test_std[0,:])

This returns the following array:

array([[ 0.000, 0.063, 0.937]])

The preceding array tells us that the model predicts a chance of 93.7 percent that the sample belongs to the Iris-Virginica class, and a 6.3 percent chance that the sample is a Iris-Versicolor flower.

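We can show that the weight update in logistic regression via gradient descent is indeed equal to the equation that we used in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification. For readability, we consider a single training sample and drop the superscript (i). Let's start by calculating the partial derivative of the log-likelihood function with respect to the jth weight:

$$\frac{\partial}{\partial w_j} l(\mathbf{w}) = \left( y \frac{1}{\phi(z)} - (1 - y) \frac{1}{1 - \phi(z)} \right) \frac{\partial}{\partial w_j} \phi(z)$$

Before we continue, let's calculate the partial derivative of the sigmoid function first:

$$\frac{\partial}{\partial z} \phi(z) = \frac{\partial}{\partial z} \frac{1}{1 + e^{-z}} = \frac{1}{\left(1 + e^{-z}\right)^{2}} e^{-z} = \frac{1}{1 + e^{-z}} \left( 1 - \frac{1}{1 + e^{-z}} \right) = \phi(z)\left(1 - \phi(z)\right)$$

Now we can resubstitute this result in our first equation and use ∂z/∂w_j = x_j (since z = wᵀx) to obtain the following:

$$\begin{aligned}
\left( y \frac{1}{\phi(z)} - (1 - y) \frac{1}{1 - \phi(z)} \right) \frac{\partial}{\partial w_j} \phi(z)
&= \left( y \frac{1}{\phi(z)} - (1 - y) \frac{1}{1 - \phi(z)} \right) \phi(z)\left(1 - \phi(z)\right) \frac{\partial}{\partial w_j} z \\
&= \left( y\left(1 - \phi(z)\right) - (1 - y)\,\phi(z) \right) x_j \\
&= \left( y - \phi(z) \right) x_j
\end{aligned}$$

Remember that the goal is to find the weights that maximize the log-likelihood so that we would perform the update for each weight as follows:

$$w_j := w_j + \eta \sum_{i=1}^{n} \left( y^{(i)} - \phi\left(z^{(i)}\right) \right) x_j^{(i)}$$

Since we update all weights simultaneously, we can write the general update rule as follows:

$$\mathbf{w} := \mathbf{w} + \Delta \mathbf{w}$$

We define Δw as follows:

$$\Delta \mathbf{w} = \eta \nabla l(\mathbf{w})$$

Since maximizing the log-likelihood is equal to minimizing the cost function J that we defined earlier, we can write the gradient descent update rule as follows:

$$\Delta w_j = -\eta \frac{\partial J}{\partial w_j} = \eta \sum_{i=1}^{n} \left( y^{(i)} - \phi\left(z^{(i)}\right) \right) x_j^{(i)}$$

$$\mathbf{w} := \mathbf{w} + \Delta \mathbf{w}, \quad \Delta \mathbf{w} = -\eta \nabla J(\mathbf{w})$$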

This is equal to the gradient descent rule in Adaline in Chapter 2, Training Machine Learning Algorithms for Classification.
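The derivation can also be checked numerically. The sketch below, which uses a small synthetic dataset and hypothetical helper names of our own, (1) compares the sigmoid derivative identity φ'(z) = φ(z)(1 - φ(z)) with a finite-difference estimate, (2) compares the closed-form gradient (y - φ(z))x with finite differences of the log-likelihood, and (3) runs the resulting update rule for a few steps to confirm that the log-likelihood increases. It is an illustration under these assumptions, not the chapter's implementation.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid phi(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(w, X, y):
    """l(w) = sum_i [y_i log(phi(z_i)) + (1 - y_i) log(1 - phi(z_i))], with z = Xw."""
    phi = sigmoid(X @ w)
    return np.sum(y * np.log(phi) + (1 - y) * np.log(1 - phi))

def analytic_gradient(w, X, y):
    """Derived result: dl/dw_j = sum_i (y_i - phi(z_i)) * x_ij."""
    return X.T @ (y - sigmoid(X @ w))

def numeric_gradient(f, w, eps=1e-6):
    """Central finite-difference estimate of the gradient of f at w."""
    grad = np.zeros_like(w)
    for j in range(w.size):
        step = np.zeros_like(w)
        step[j] = eps
        grad[j] = (f(w + step) - f(w - step)) / (2 * eps)
    return grad

# Small synthetic dataset (assumption: 3 features, no separate bias unit)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = (X[:, 0] - X[:, 1] + 0.5 * rng.normal(size=50) > 0).astype(float)
w = rng.normal(scale=0.1, size=3)

# (1) phi'(z) = phi(z) * (1 - phi(z))
z = np.linspace(-5, 5, 11)
dphi_numeric = (sigmoid(z + 1e-6) - sigmoid(z - 1e-6)) / 2e-6
sigmoid_ok = np.allclose(dphi_numeric, sigmoid(z) * (1 - sigmoid(z)), atol=1e-8)

# (2) closed-form gradient vs. finite differences of l(w)
gradient_ok = np.allclose(analytic_gradient(w, X, y),
                          numeric_gradient(lambda v: log_likelihood(v, X, y), w),
                          atol=1e-4)

# (3) w := w + eta * sum_i (y_i - phi(z_i)) x_i, i.e. gradient ascent on l(w),
#     which is the same as gradient descent on the cost J(w) = -l(w)
eta = 0.01
ll_start = log_likelihood(w, X, y)
for _ in range(200):
    w = w + eta * analytic_gradient(w, X, y)
improved = log_likelihood(w, X, y) > ll_start

print(sigmoid_ok, gradient_ok, improved)
```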

Tackling overfitting via regularization

Overfitting is a common problem in machine learning, where a model performs well on training data but does not generalize well to unseen data (test data). If a model suffers from overfitting, we also say that the model has a high variance, which can be caused by having too many parameters that lead to a model that is too complex given the underlying data. Similarly, our model can also suffer from underfitting (high bias), which means that our model is not complex enough to capture the pattern in the training data well and therefore also suffers from low performance on unseen data.

Although we have only encountered linear models for classification so far, the problem of overfitting and underfitting can be best illustrated by using a more complex, nonlinear decision boundary as shown in the following figure:

Note

Variance measures the consistency (or variability) of the model prediction for a particular sample instance if we were to retrain the model multiple times, for example, on different subsets of the training dataset. A model with high variance is sensitive to the randomness in the training data. In contrast, bias measures how far off the predictions are from the correct values in general if we rebuild the model multiple times on different training datasets; bias is the measure of the systematic error that is not due to randomness.
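These definitions can be made concrete with a small simulation, sketched below under assumptions of our own: a one-dimensional toy problem y = sin(2πx) plus Gaussian noise, polynomials fitted with NumPy's numpy.polynomial.Polynomial.fit standing in for a simple (degree 1) and a complex (degree 9) model, and a single query point x0. Retraining each model on many freshly drawn noisy training sets and recording its prediction at x0 gives an estimate of the bias (average prediction minus the true value) and the variance (spread of the predictions).

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(1)
x_train = np.linspace(0, 1, 20)   # fixed training inputs
x0 = 0.25                         # query point; the true value there is 1.0

def true_f(t):
    """Toy target function (an assumption of this sketch)."""
    return np.sin(2 * np.pi * t)

def predictions_at_x0(degree, n_repeats=300, noise=0.3):
    """Retrain a polynomial model of the given degree on n_repeats freshly
    drawn noisy training sets and collect its prediction at x0 each time."""
    preds = np.empty(n_repeats)
    for r in range(n_repeats):
        y_train = true_f(x_train) + rng.normal(scale=noise, size=x_train.size)
        model = Polynomial.fit(x_train, y_train, deg=degree)
        preds[r] = model(x0)
    return preds

results = {}
for degree in (1, 9):
    p = predictions_at_x0(degree)
    bias = p.mean() - true_f(x0)   # systematic error
    variance = p.var()             # variability across retrained models
    results[degree] = (bias, variance)
    print(f"degree={degree}: bias={bias:+.3f}, variance={variance:.4f}")
```

In this setup, we would expect the degree 1 model to show a large bias but a small variance (underfitting), and the degree 9 model the opposite (a tendency to overfit).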

One way of finding a good bias-variance tradeoff is to tune the complexity of the model via regularization. Regularization is a very useful method to handle collinearity (high correlation among features), filter out noise from data, and eventually prevent overfitting. The concept behind regularization is to introduce additional information (bias) to penalize extreme parameter weights. The most common form of regularization is the so-called L2 regularization (sometimes also called L2 shrinkage or weight decay), which can be written as follows:

$$\frac{\lambda}{2} \lVert \mathbf{w} \rVert^{2} = \frac{\lambda}{2} \sum_{j=1}^{m} w_j^{2}$$

Here, λ is the so-called regularization parameter.

Note

Regularization is another reason why feature scaling such as standardization is important. For regularization to work properly, we need to ensure that all our features are on comparable scales.
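A tiny numeric example of why scale matters: if the same measurement is expressed in centimeters or in millimeters, the weight needed to produce the same net input changes by a factor of 10, so the L2 penalty (λ/2)w² on that weight changes by a factor of 100 even though the model's predictions are unchanged. Standardizing removes this dependence on units. The petal lengths below are made-up values, and λ = 1.0 is an arbitrary choice for the sketch.

```python
import numpy as np

lam = 1.0                                        # arbitrary regularization parameter
length_cm = np.array([1.4, 4.5, 5.1, 6.0, 1.3])  # made-up petal lengths in cm
length_mm = 10 * length_cm                       # the same measurements in mm

# These two weights produce identical net inputs z = w * x ...
w_cm, w_mm = 2.0, 0.2
same_net_input = np.allclose(w_cm * length_cm, w_mm * length_mm)

# ... but the L2 penalty (lam / 2) * w**2 treats them very differently
penalty_ratio = (lam / 2 * w_cm ** 2) / (lam / 2 * w_mm ** 2)

def standardize(x):
    """Shift to zero mean and scale to unit standard deviation."""
    return (x - x.mean()) / x.std()

# After standardization, the choice of unit no longer matters
same_after_scaling = np.allclose(standardize(length_cm), standardize(length_mm))
print(same_net_input, round(penalty_ratio, 6), same_after_scaling)
```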

In order to apply regularization, we just need to add the regularization term to the cost function that we defined for logistic regression to shrink the weights:

$$J(\mathbf{w}) = \sum_{i=1}^{n} \left[ -y^{(i)} \log\left(\phi\left(z^{(i)}\right)\right) - \left(1 - y^{(i)}\right) \log\left(1 - \phi\left(z^{(i)}\right)\right) \right] + \frac{\lambda}{2} \lVert \mathbf{w} \rVert^{2}$$

Via the regularization parameter λ, we can then control how well we fit the training data while keeping the weights small. By increasing the value of λ, we increase the regularization strength.
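The following NumPy sketch adds the L2 term to the logistic regression cost and shows its effect on the gradient descent update: the penalty contributes λw to the gradient of J, so every step also pulls the weights toward zero. As assumptions of this sketch, the intercept b is kept separate and left unpenalized (a common convention), the data are synthetic, and regularized_cost and fit_l2_logreg are hypothetical helpers rather than library functions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def regularized_cost(w, b, X, y, lam):
    """J(w) = sum_i [-y log(phi) - (1 - y) log(1 - phi)] + lam / 2 * ||w||^2."""
    phi = sigmoid(X @ w + b)
    data_term = np.sum(-y * np.log(phi) - (1 - y) * np.log(1 - phi))
    return data_term + lam / 2 * np.dot(w, w)

def fit_l2_logreg(X, y, lam, eta=0.01, n_iter=2000):
    """Batch gradient descent on regularized_cost (intercept b unpenalized)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_iter):
        error = y - sigmoid(X @ w + b)       # y_i - phi(z_i)
        w += eta * (X.T @ error - lam * w)   # -lam * w is the shrinkage term
        b += eta * error.sum()
    return w, b

# Synthetic, non-separable two-feature data
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = (X @ np.array([1.5, -1.0]) + rng.normal(size=100) > 0).astype(float)

norms = {}
for lam in (0.0, 10.0):
    w, b = fit_l2_logreg(X, y, lam)
    norms[lam] = np.linalg.norm(w)
    print(f"lambda={lam}: w={np.round(w, 3)}, ||w||={norms[lam]:.3f}, "
          f"J={regularized_cost(w, b, X, y, lam):.2f}")
```

With λ = 0, the update reduces to the unregularized rule derived earlier; with λ > 0, the learned weight vector should come out shorter.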

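The parameter C that is implemented for the LogisticRegression class in scikit-learn comes from a convention in support vector machines, which will be the topic of the next section. C is directly related to the regularization parameter λ; it is its inverse:

$$C = \frac{1}{\lambda}$$

Since multiplying the cost by the positive constant C = 1/λ does not change which weights minimize it, we can rewrite the regularized cost function of logistic regression as follows:

$$J(\mathbf{w}) = C \left[ \sum_{i=1}^{n} \left( -y^{(i)} \log\left(\phi\left(z^{(i)}\right)\right) - \left(1 - y^{(i)}\right) \log\left(1 - \phi\left(z^{(i)}\right)\right) \right) \right] + \frac{1}{2} \lVert \mathbf{w} \rVert^{2}$$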

Consequently, decreasing the value of the inverse regularization parameter C means that we are increasing the regularization strength, which we can visualize by plotting the L2 regularization path for the two weight coefficients:

>>> import numpy as np
>>> import matplotlib.pyplot as plt
>>> weights, params = [], []
>>> for c in np.arange(-5, 5):
...     # use a float base: NumPy refuses to raise the integer 10 to a negative integer power
...     lr = LogisticRegression(C=10.**c, random_state=0)
...     lr.fit(X_train_std, y_train)
...     weights.append(lr.coef_[1])
...     params.append(10.**c)
...
>>> weights = np.array(weights)
>>> plt.plot(params, weights[:, 0],
...          label='petal length')
>>> plt.plot(params, weights[:, 1], linestyle='--',
...          label='petal width')
>>> plt.ylabel('weight coefficient')
>>> plt.xlabel('C')
>>> plt.legend(loc='upper left')
>>> plt.xscale('log')
>>> plt.show()

By executing the preceding code, we fitted ten logistic regression models with different values for the inverse-regularization parameter C. For the purposes of illustration, we only collected the weight coefficients of the class 1 (Iris-Versicolor) vs. all classifier, stored in lr.coef_[1]. Remember that we are using the OvR technique for multiclass classification; this was the default of LogisticRegression in older scikit-learn releases, whereas recent releases fit a multinomial model by default.

As we can see in the resulting plot, the weight coefficients shrink if we decrease the parameter C, that is, if we increase the regularization strength:

Note

Since an in-depth coverage of the individual classification algorithms exceeds the scope of this book, I warmly recommend Dr. Scott Menard's Logistic Regression: From Introductory to Advanced Concepts and Applications, Sage Publications, to readers who want to learn more about logistic regression.
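For readers who want to see the shrinkage effect of C without scikit-learn, here is a NumPy sketch of an L2 regularization path that minimizes the C-form of the cost, C times the data term plus ½‖w‖², for several values of C. Because C = 1/λ, this cost is just the λ-form multiplied by the positive constant C, so both forms share the same minimizer. The synthetic two-feature data, the omission of an intercept, and the hypothetical helper fit_c_form are assumptions of the sketch, and its coefficients will not match the Iris plot.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_c_form(X, y, C, n_iter=3000):
    """Gradient descent on
    J(w) = C * sum_i [-y log(phi) - (1 - y) log(1 - phi)] + 0.5 * ||w||^2.

    No intercept is fitted (assumption: the synthetic data have none). The step
    size 1/L uses an upper bound L on the Lipschitz constant of the gradient.
    """
    L = C * np.linalg.norm(X, 2) ** 2 / 4 + 1.0
    eta = 1.0 / L
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        error = y - sigmoid(X @ w)
        grad = -C * (X.T @ error) + w   # gradient of J with respect to w
        w -= eta * grad
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))           # two standardized-looking features
y = (X @ np.array([1.5, -1.0]) + rng.normal(size=100) > 0).astype(float)

Cs = [0.01, 0.1, 1.0, 10.0]
weights = np.array([fit_c_form(X, y, C) for C in Cs])
norms = np.linalg.norm(weights, axis=1)
for C, w, norm in zip(Cs, weights, norms):
    print(f"C={C:<6} w={np.round(w, 3)}  ||w||={norm:.3f}")
```

As in the scikit-learn plot, decreasing C (that is, increasing λ) should shrink the weight vector toward zero.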
