Adding touch events to your mobile application (Intermediate)

A user interacts with a mobile application through a touch-based interface, so the application should interpret finger activity on the touch screen reliably. The Kendo UI Mobile framework provides a set of APIs that let the application handle user-initiated touch events and event sequences such as drags and swipes, and recognize multi-touch gestures.

How to do it...

1. Create a document containing a layout or a view.

2. Define a widget with its data-role attribute set to touch. Place this widget in the layout or the view created in step 1, as shown in the following code:

```html
<div data-role="view" id="touchWidgetContainer">
  <div id="touchSurface" data-role="touch" data-touchstart="touchstart" data-tap="tap">
    This is a touch surface.
  </div>
</div>
```

When the mobile application first runs, the touch widgets inside a view or a layout are initialized. In the previous markup, the element with the data-role attribute set to touch is the touch widget. It also declares two other data attributes, data-touchstart and data-tap. Their values name the event handlers to invoke when the user starts a touch (touchstart) and when the user taps the screen (tap).
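The data-touchstart and data-tap values are plain function names, so something has to turn each name into a callable function. The following is a simplified model of that lookup, written for illustration and not taken from Kendo UI's source. `resolveHandler` and the `scope` object are hypothetical names. The sketch assumes that a name (or a dotted path such as `app.handlers.hold`) is looked up on a scope object, which in a browser would be the global `window`. Under that assumption, handlers hidden inside a closure or module would not be found by name, which is one reason to declare them as top-level functions as this recipe does.

```javascript
// Simplified model (not Kendo UI's source) of resolving a declarative
// attribute value such as data-tap="tap" into a handler function.
// The value is treated as a name or dotted path and looked up on a scope object.
function resolveHandler(path, scope) {
  var target = scope;
  var parts = path.split(".");
  for (var i = 0; i < parts.length; i++) {
    if (target == null) {
      return undefined; // an intermediate object in the path is missing
    }
    target = target[parts[i]];
  }
  return typeof target === "function" ? target : undefined;
}

// Hypothetical scope; in a browser this role would be played by window.
var scope = {
  tap: function () { return "tap handled"; },
  app: { handlers: { hold: function () { return "hold handled"; } } }
};

resolveHandler("tap", scope)();               // "tap handled"
resolveHandler("app.handlers.hold", scope)(); // "hold handled"
resolveHandler("missing", scope);             // undefined
```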

The touchstart and tap handlers are defined as follows:

```javascript
function touchstart(event) {
  alert('Event - Touch Start' + " X= " + event.touch.x.location + " Y= " + event.touch.y.location);
}

function tap(event) {
  alert("Event - tap");
}
```

The event object passed to a handler carries information about the active touch in its touch property, including per-axis data for x and y. The event.touch.x.location and event.touch.y.location properties give the offset of the touch relative to the entire document; in the touchstart handler, this is the point where the touch began. The touchstart event is triggered as soon as the user touches the screen. The tap event, on the other hand, fires only when the user touches and then releases the screen, similar to a click (a mousedown followed by a mouseup) in a desktop web application.
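To make the shape of the event object concrete, the sketch below builds the touchstart message from a hand-made object that mimics the touch property. The mock object and `describeTouchStart` are hypothetical and include only the properties this recipe reads. Returning a string instead of calling `alert()` also lets you show the coordinates in the page, which can be less disruptive while testing than a modal popup.

```javascript
// Builds the same message as the touchstart handler above, but returns it
// instead of calling alert(). Only e.touch.x.location and
// e.touch.y.location are read, as in the recipe.
function describeTouchStart(e) {
  return "Event - Touch Start X= " + e.touch.x.location +
         " Y= " + e.touch.y.location;
}

// Hypothetical event object shaped like the one the touch widget passes
// to its handlers (only the properties used here are included).
var mockEvent = { touch: { x: { location: 120 }, y: { location: 45 } } };

describeTouchStart(mockEvent); // "Event - Touch Start X= 120 Y= 45"

// In a page, a handler could write the message into the document instead:
// function touchstart(event) {
//   document.getElementById("touchSurface").textContent = describeTouchStart(event);
// }
```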

Apart from touchstart and tap, the framework can handle various other events such as double tap, hold, and swipe.
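These events differ mainly in timing and movement. As a conceptual sketch, not Kendo UI's implementation, the function below classifies a completed touch as a tap, double tap, or hold. The thresholds `HOLD_MS`, `DOUBLE_TAP_MS`, and `MOVE_TOLERANCE`, the function name, and the touch record format are all hypothetical, chosen only for illustration. A real widget would more likely fire hold on a timer while the finger is still down; this sketch classifies after release to keep the logic short.

```javascript
// Conceptual sketch only, not Kendo UI's implementation.
// Hypothetical thresholds, chosen for illustration (not Kendo UI's defaults):
var HOLD_MS = 800;       // pressed at least this long without moving = hold
var DOUBLE_TAP_MS = 300; // a tap starting within this gap after the previous one = double tap
var MOVE_TOLERANCE = 10; // movement (px) still treated as "not moving"

// touch: { start: ms, end: ms, dx: px, dy: px } describes one completed touch.
// previousTapEnd: end time (ms) of the preceding tap, or null if there was none.
// Returns "tap", "doubletap", "hold", or null when the finger moved too far
// (that would be a drag or swipe instead).
function classifyTouch(touch, previousTapEnd) {
  var moved = Math.abs(touch.dx) > MOVE_TOLERANCE ||
              Math.abs(touch.dy) > MOVE_TOLERANCE;
  if (moved) {
    return null;
  }
  if (touch.end - touch.start >= HOLD_MS) {
    return "hold";
  }
  if (typeof previousTapEnd === "number" &&
      touch.start - previousTapEnd <= DOUBLE_TAP_MS) {
    return "doubletap";
  }
  return "tap";
}

classifyTouch({ start: 0, end: 100, dx: 2, dy: 1 }, null);  // "tap"
classifyTouch({ start: 350, end: 420, dx: 0, dy: 0 }, 100); // "doubletap"
classifyTouch({ start: 0, end: 900, dx: 3, dy: 0 }, null);  // "hold"
classifyTouch({ start: 0, end: 200, dx: 80, dy: 5 }, null); // null
```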

The following markup handles all of the touch events mentioned previously:

```html
<div data-role="view" id="touchWidgetContainer">
  <div id="touchSurface"
       data-role="touch"
       data-touchstart="touchstart"
       data-tap="tap"
       data-doubletap="doubletap"
       data-hold="hold"
       data-enable-swipe="true"
       data-swipe="swipe">
    Touch surface to handle various touch events<br>
    Touch, tap, double-tap, swipe and hold
  </div>
</div>
```

Notice the additional data-enable-swipe attribute, which is set to true. Swipe events are disabled by default, so this attribute is required for the swipe handler to fire. Enabling swipe also disables the dragstart, drag, and dragend events, which would otherwise be triggered.
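The same configuration can also be expressed as an options object, which is how a widget is configured when it is created from script rather than from markup. The sketch below assumes the usual Kendo UI convention that data-enable-swipe maps to an `enableSwipe` option and that each data-&lt;event&gt; attribute maps to a handler with the event's name. `buildTouchOptions` and its `log` parameter are hypothetical, with `log` standing in for `alert()`.

```javascript
// Options object equivalent to the data-* attributes in the markup above.
// log is a hypothetical callback standing in for alert() or on-page output.
function buildTouchOptions(log) {
  return {
    enableSwipe: true, // same as data-enable-swipe="true"
    touchstart: function (e) {
      log("Event - Touch Start X= " + e.touch.x.location + " Y= " + e.touch.y.location);
    },
    tap: function () { log("Event - Tap"); },
    doubletap: function () { log("Event - Double tap"); },
    hold: function () { log("Event - Hold"); },
    swipe: function (e) { log("Swipe direction " + e.direction); }
  };
}

// In the page, assuming jQuery and Kendo UI are loaded, the widget could be
// created from script with the kendoTouch plugin. If you do this, remove
// data-role="touch" from the markup to avoid initializing the element twice:
//   $("#touchSurface").kendoTouch(buildTouchOptions(function (msg) { console.log(msg); }));
```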

The corresponding event handlers are defined as follows:

```html
<script>
  function touchstart(event) {
    alert('Event - Touch Start' + " X= " + event.touch.x.location + " Y= " + event.touch.y.location);
  }

  function tap(event) {
    alert('Event - Tap');
  }

  function doubletap(event) {
    alert('Event - Double tap');
  }

  function hold(event) {
    alert('Event - Hold');
  }

  function swipe(event) {
    alert('Swipe direction ' + event.direction);
  }
</script>
```

The previous code snippet defines event handlers for touchstart, tap, doubletap, hold, and swipe. Note that the swipe event handles only horizontal swipes. In the swipe handler, the event object's direction property indicates the direction of the swipe, which is either left or right.
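Because the swipe event reports only left or right, the underlying decision is about horizontal movement. The following conceptual sketch, not Kendo UI's implementation, shows one way to make that decision from the start and end points of a touch. The function name, the point format, and the thresholds `MIN_X_DELTA`, `MAX_Y_DELTA`, and `MAX_DURATION` are hypothetical. The Kendo UI Touch widget appears to expose comparable tuning options (such as minXDelta and maxYDelta), so consult its documentation for the real names and defaults.

```javascript
// Conceptual sketch, not Kendo UI's implementation: decides whether a
// finished touch counts as a horizontal swipe and, if so, its direction.
// Hypothetical thresholds, chosen for illustration:
var MIN_X_DELTA = 30;    // minimum horizontal travel, in pixels
var MAX_Y_DELTA = 20;    // maximum vertical drift allowed, in pixels
var MAX_DURATION = 1000; // the swipe must finish within this many ms

// start, end: { x: px, y: px, time: ms }
// Returns "left", "right", or null if the movement is not a swipe.
function swipeDirection(start, end) {
  var dx = end.x - start.x;
  var dy = end.y - start.y;
  var duration = end.time - start.time;
  if (Math.abs(dx) < MIN_X_DELTA ||
      Math.abs(dy) > MAX_Y_DELTA ||
      duration > MAX_DURATION) {
    return null; // too short, too vertical, or too slow
  }
  return dx < 0 ? "left" : "right"; // finger moved toward smaller or larger x
}

swipeDirection({ x: 200, y: 100, time: 0 }, { x: 120, y: 105, time: 250 }); // "left"
swipeDirection({ x: 100, y: 100, time: 0 }, { x: 180, y: 90, time: 300 });  // "right"
swipeDirection({ x: 100, y: 100, time: 0 }, { x: 110, y: 100, time: 100 }); // null
```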

How it works...

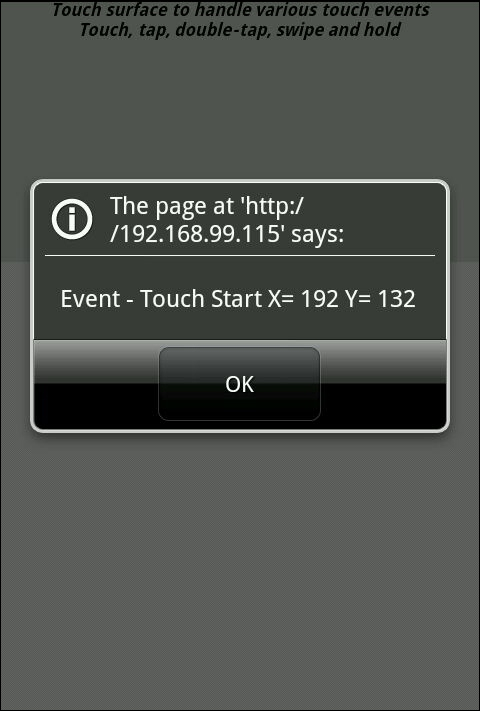

When you view the application on a mobile device and touch the screen, an alert popup, indicating the x and y location where the touch was initiated, would be shown as in the following screenshot: