Sometimes, you may have to download the datasets directly from their repository by using a web browser or a wget command (on Linux systems).

If you have already downloaded and unpacked the data (if necessary) into your working directory, the simplest way to load your data and start working is offered by the NumPy and the pandas library with their respective loadtxt and read_csv functions.

For instance, if you intend to analyze the Boston housing data and use the version present at http://mldata.org/repository/data/viewslug/regression-datasets-housing, you first have to download the regression-datasets-housing.csv file in your local directory.

You can use the following link for a direct download of the dataset: http://mldata.org/repository/data/download/csv/regression-datasets-housing.

Since the variables in the dataset are all numeric (13 continuous and one binary), the fastest way to load and start using it is by trying out the loadtxt NumPy function and directly loading all the data into an array.

Even in real-life datasets, you will often find mixed types of variables, which can be addressed by pandas.read_table or pandas.read_csv. Data can then be extracted by the values method; loadtxt can save a lot of memory if your data is already numeric. In fact, the loadtxt command doesn't require any in-memory duplication:

In: housing = np.loadtxt('regression-datasets-housing.csv',

delimiter=',')

print (type(housing))

Out: <class 'numpy.ndarray'>

In: print (housing.shape)

Out: (506, 14)

The loadtxt function expects, by default, tabulation as a separator between the values on a file. If the separator is a colon (,) or a semicolon(;), you have to make it explicit by using the parameter delimiter:

>>> import numpy as np

>>> type(np.loadtxt)

<type 'function'>

>>> help(np.loadtxt)

Help on the loadtxt function can be found in the numpy.lib.npyio module.

Another important default parameter is dtype, which is set to float.

This means that loadtxt will force all of the loaded data to be converted into a floating-point number.

If you need to determinate a different type (for example, int), you have to declare it beforehand.

For instance, if you want to convert numeric data to int, use the following code:

In: housing_int =housing.astype(int)

Printing the first three elements of the row of the housing and housing_int arrays can help you understand the difference:

In: print (housing[0,:3], 'n', housing_int[0,:3])

Out: [ 6.32000000e-03 1.80000000e+01 2.31000000e+00]

[ 0 18 2]

Frequently, though not always the case in our example, the data on files feature in the first line of a textual header contains the name of the variables. In this situation, the parameter that is skipped will point out the row in the loadtxt file from where it will start reading the data. Being the header on row 0 (in Python, counting always starts from 0), the skip=1 parameter will save the day and allow you to avoid an error and fail to load your data.

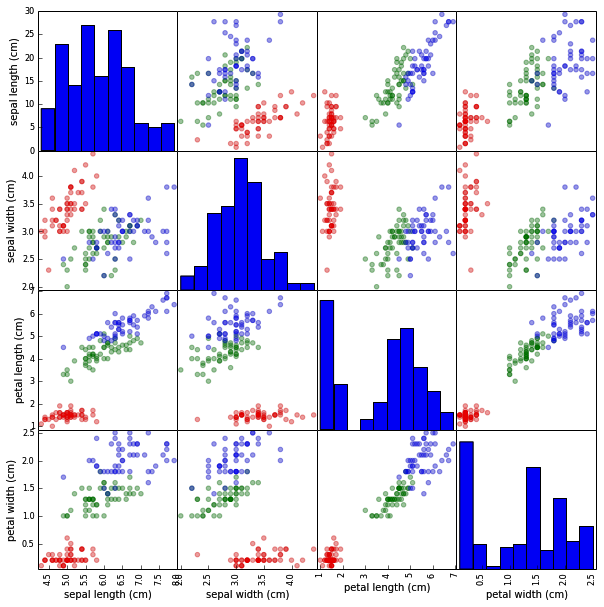

The situation would be slightly different if you were to download the Iris dataset, which is present at http://mldata.org/repository/data/viewslug/datasets-uci-iris/. In fact, this dataset presents a qualitative target variable, class, which is a string that expresses the iris species. Specifically, it's a categorical variable with four levels.

Therefore, if you were to use the loadtxt function, you will get a value error because an array must have all its elements of the same type. The variable class is a string, whereas the other variables are constituted by floating-point values.

The pandas library offers the solution to this and many similar cases, thanks to its DataFrame data structure that can easily handle datasets in a matrix form (row per columns) that is made up of different types of variables.

First, just download the datasets-uci-iris.csv file and have it saved in your local directory.

The dataset can be downloaded from http://archive.ics.uci.edu/ml/machine-learning-databases/iris/. This archive is the UC Irvine Machine Learning Repository, which currently maintains 440 datasets as a service to the machine learning community. Apart from this Iris dataset, you are free to download and try any other dataset present in the repository.

At this point, using read_csv from pandas is quite straightforward:

In: iris_filename = 'datasets-uci-iris.csv'

iris = pd.read_csv(iris_filename, sep=',', decimal='.',

header=None, names= ['sepal_length', 'sepal_width', \

'petal_length', 'petal_width', 'target'])

print (type(iris))

Out: <class 'pandas.core.frame.DataFrame'>

In order to not make the snippets of code printed in this book too cumbersome, we often wrap it and make it nicely formatted. When necessary, in order to safely interrupt the code and wrap it to a new line, we use the backslash symbol \ as in the preceding code example. When rendering the code of the book by yourself, you can ignore backslash symbols and go on writing all of the instructions on the same line, or you can digit the backslash and start a new line continuing with the code instructions. Please be warned that typing the backslash and then continuing the instruction on the same line will cause an execution error.

Apart from the filename, you can specify the separator (sep), the way the decimal points are expressed (decimal), whether there is a header (in this case, header=None; normally, if you have a header, then header=0), and the name of the variable where there is one (you can use a list; otherwise, pandas will provide some automatic naming).

Also, we have defined names that use single words (instead of spaces, we used underscores). Thus, we can later directly extract single variables by calling them as we do for methods; for instance, iris.sepal_length will extract the sepal length data.

At this point, if you need to convert the pandas DataFrame into a couple of NumPy arrays that contain the data and target values, this can be done easily in a couple of commands:

In: iris_data = iris.values[:,:4]

iris_target, iris_target_labels = pd.factorize(iris.target)

print (iris_data.shape, iris_target.shape)

Out: (150, 4) (150,)