Creating a basic Lake House architecture

In this section, we will go through a hands-on example to create a basic data Lake House architecture. This tutorial will use the AWS CLI and the AWS console. By the end of this section, we will have spun up a working data lake and a data warehouse environment with demo data loaded.

Important note

The resources for this tutorial might introduce charges to your AWS account. Once you finish with the exercise, make sure you clean up the resources to prevent incurring further charges.

Creating the data lake storage

In this step, we will add the data lake storage. Then we will upload a demo dataset and will discover its schema automatically.

Step 1 – creating the S3 bucket

Let's begin:

- If you haven't installed it already, follow the AWS documentation to install and configure the AWS CLI. To complete this tutorial, you will need to use a role that has access to the S3, Glue, Redshift, and IAM services: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-configure.html.

- First, let's create the S3 bucket as the data lake storage. Your S3 bucket needs to have a globally unique name. For that reason, we should introduce some randomness to it. Let's pick a random set of 10 characters and numbers. For this tutorial, you should choose your own random set of characters for this string; for example,

SF482XHS7M.We will use the random string in the data lake name, to ensure it is globally unique.

Let's use

my-data-lake-<random string>as the bucket name. - To create a bucket, we can type the following command into the CLI. Replace the following random string with your random string:

% aws s3api create-bucket --bucket data-lake-xxxxxxxxxx --region us-east-1

And the response should look like this:

{ "Location": "/data-lake-xxxxxxxxxx" }

Step 2 – adding data into the data lake

Now let's add some data. For this exercise, we will use a subset of the New York City Taxi and Limousine Commission (TLC) Trip Record Data:

- Let's first have a look at the dataset:

% aws s3 ls 's3://nyc-tlc/trip data/' --no-sign-request

This command will return all the files in the open S3 location:

2016-08-11 15:32:21 85733063 fhv_tripdata_2015-01.csv 2016-08-11 15:33:04 97863482 fhv_tripdata_2015-02.csv 2016-08-11 15:33:40 102220197 fhv_tripdata_2015-03.csv … 2021-02-26 16:54:00 138989555 yellow_tripdata_2020-11.csv 2021-02-26 16:54:00 134481400 yellow_tripdata_2020-12.csv

We don't need to download all of them. For this tutorial, we will copy only the files for 2020.

- We can use the S3 CLI

excludeandincludeparameters to apply a pattern to match the desired filenames. The command to copy is as follows:% aws s3 cp "s3://nyc-tlc/trip data/" s3://data-lake-xxxxxxxxxx/yellowtrips/ --recursive --exclude "*" --include "yellow_tripdata_2020*"

- Once completed, we can then verify that the files exist in our environment with the

aws s3 lscommand, which lists the files under a specific S3 location:% aws s3 ls s3://data-lake-xxxxxxxxxx/yellowtrips/ 2021-03-27 16:53:41 593610736 yellow_tripdata_2020-01.csv 2021-03-27 16:53:41 584190585 yellow_tripdata_2020-02.csv 2021-03-27 16:53:42 278288608 yellow_tripdata_2020-03.csv 2021-03-27 16:53:41 21662261 yellow_tripdata_2020-04.csv 2021-03-27 16:53:43 31641590 yellow_tripdata_2020-05.csv 2021-03-27 16:53:42 50277193 yellow_tripdata_2020-06.csv 2021-03-27 16:53:44 73326707 yellow_tripdata_2020-07.csv 2021-03-27 16:53:46 92411545 yellow_tripdata_2020-08.csv 2021-03-27 16:53:50 123394595 yellow_tripdata_2020-09.csv 2021-03-27 16:53:54 154917592 yellow_tripdata_2020-10.csv 2021-03-27 16:53:57 138989555 yellow_tripdata_2020-11.csv 2021-03-27 16:53:58 134481400 yellow_tripdata_2020-12.csv

Note

You can use data in a shared data lake as part of your data lake without the need to actually copy it across to your data lake.

Step 3 – identifying the schema

The next step is to identify the schema of the dataset. For this purpose, we will use the AWS Glue crawlers. AWS Glue crawlers crawl through the data to detect the schema. If a schema can be determined (remember there is no guarantee that the data has a specific schema) then Glue crawlers will populate the Glue Catalog with the schemas identified after crawling the data. Glue tables always belong to a Glue database. A database in Glue is just a logical repository of tables in the Glue Catalog:

- Let's start by creating a database using the

create-databasecommand:% aws glue create-database --database-input "{\"Name\":\"my-data-lake-db\"}" --region us-east-1 - We can verify the successful database creation using the

get-databasescommand:% aws glue get-databases --region us-east-1 { "DatabaseList": [ { "Name": "default", "CreateTime": 1553517157.0 }, { "Name": "my-data-lake-db", "CreateTime": 1616865129.0 } ] } - Before we create the Glue Crawler, we need to create an IAM role that will be assigned to the Crawler and allow it to access the data in the data lake. The crawler doesn't need to write to the data lake location, therefore only the read access permission is needed. To give the required permissions to a role, we need to attach policies that define the permissions. Let's define a policy document that allows read access to our data lake:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "s3:GetObject", "s3:ListBucket" ], "Resource": [ "arn:aws:s3:::data-lake-xxxxxxxxxx", "arn:aws:s3:::data-lake-xxxxxxxxxx/*" ] } ] }The preceding policy document allows the policy holder to use the S3

ListBucketand theGetObjectAPI. The crawler will useListBucketto list the objects in our data lake bucket andgetObjectto read objects as it crawls data. This policy restricts access to the data lake bucket only. - Now, let's create a file and copy the policy text. Replace the random string in the data lake name with the random string in your environment. I used

vim, but you can use any text editor:% vim policy

- Then, let's create the IAM policy using the

create-policyCLI command:% aws iam create-policy --policy-name DataLakeReadAccess --policy-document file://policy

The preceding command created the policy and we should get a confirmation JSON object back. Note the policy ARN, as we will use it in a later step.

- Next, let's create the IAM role that the Glue crawler will assume. First, let's define the role policy document:

{ "Version": "2012-10-17", "Statement": [ { "Action": "sts:AssumeRole", "Effect": "Allow", "Principal": { "Service": "glue.amazonaws.com" } } ] } - Then create a file called

role-policyand copy in the preceding JSON document:% vim role-policy

This role policy document allows the Glue service to assume the role we will create.

- To create the role, we will use the

iam create-roleCLI command:% aws iam create-role --role-name GlueCrawlerRole --assume-role-policy-document file://role-policy

We should get a confirmation JSON message after running the command.

- Capture the role ARN, as it will be used later when defining the crawler.

- Then, let's attach the required policies to this role. For this role, we want to allocate two policies: the

AWSGlueServiceRolepolicy (this is managed by AWS) and theDataLakeReadAccesspolicy we created earlier. To attach policies to the IAM role we will use theiam attach-role-policycommand. Let's start with theAWSGlueServiceRolepolicy:% aws iam attach-role-policy --role-name GlueCrawlerRole --policy-arn arn:aws:iam::aws:policy/service-role/AWSGlueServiceRole

- Then we will attach the

DataLakeReadAccesspolicy. We will need the policy ARN that we captured earlier. The policy ARN should look like the following line:arn:aws:iam::<accountid>:policy/DataLakeReadAccess

And the command should look like the following:

% aws iam attach-role-policy --role-name GlueCrawlerRole --policy-arn arn:aws:iam::<ACCOUNT-ID>:policy/DataLakeReadAccess

- Now, let's create the AWS Glue crawler. For this purpose, we will use the

glue create-crawlerCLI command. Make sure you replace the role ARN and the data lake location with the values for your environment:% aws glue create-crawler --name qs-book-crawler --role arn:aws:iam::xxxxxxxxxxxx:role/GlueCrawlerRole --database-name my-data-lake-db --targets "{\"S3Targets\":[{\"Path\":\"s3://data-lake-xxxxxxxxxx/yellowtrips\"}]}" --region us-east-1 - Then, just start the crawler using the

glue start-crawlercommand:% aws glue start-crawler --name qs-book-crawler --region us-east-1

After 1-2 minutes, the Glue crawler should populate the database.

- We can confirm this by calling the

glue get-tables clicommand:% aws glue get-tables --database-name my-data-lake-db

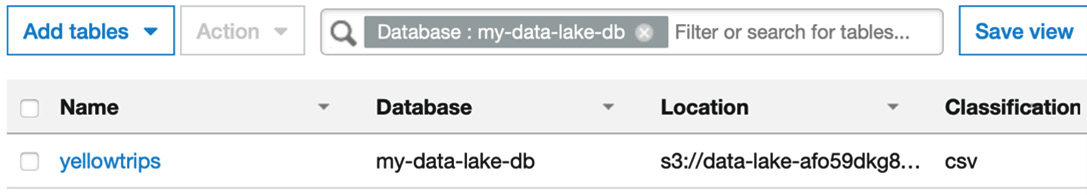

- You can view the Catalog from the AWS Console. Log in to the AWS Console and navigate to AWS Glue.

- Then on the left-hand side menu, under Data Catalog, choose Databases and then find

my-data-lake-db. Then click on View tables undermy-data-lake-db. It should look like the following screenshot:

Figure 1.5 – Glue console

Tip

You can click the checkbox to select the table and then, under Action, you can choose Preview Data. This will open the Amazon Athena console and run an Athena query that returns 10 values from the table.

Step 4 – creating the data warehouse

Let's create our data warehouse next.

To create the data warehouse, we will use the redshift create-cluster CLI command, or you can use the AWS Console:

%aws redshift create-cluster --node-type dc2.large --number-of-nodes 2 --master-username admin --master-user-password R3dsh1ft --cluster-identifier mycluster --region us-east-1

This command should give a response with the cluster metadata. After a few minutes, our cluster will be up and running.

Note

This command will create a Redshift cluster with a public IP address. This is something that should be avoided in real-world scenarios. The instructions provided are oversimplified for the purposes of this tutorial as this book is not focused on Amazon Redshift.

Step 5 – loading the data into the data warehouse

First, let's create an IAM role that we will assign to the Redshift cluster. We will use this role when using the Redshift Spectrum feature to query data in S3. We want the cluster to be able to write and read to our S3 location. We also want the cluster to be able to have read access to the Glue Catalog:

- Similarly to what we did earlier, we will create the following role policy document to allow the role to be assumed by the Redshift service:

{ "Version": "2012-10-17", "Statement": [ { "Action": "sts:AssumeRole", "Effect": "Allow", "Principal": { "Service": "redshift.amazonaws.com" } } ] } - Then copy this JSON object into a policy document:

% vim role-policy-redshift

- Now, let's create the role using the

iam create-rolecommand:% aws iam create-role --role-name RedshiftSpectrumRole --assume-role-policy-document file://role-policy-redshift

Note the role ARN, as we will use it later to attach it to the cluster.

- Next, we need to give the desired permissions by attaching the correct policies. This time, for simplicity, we will just attach two AWS managed policies. These policies are overly permissive, and normally we would attach policies with narrower permissions, as we did for the Glue crawlers in Step 3. Let's attach

AWSFullS3AccessandAWSFullGlueAccess:% aws iam attach-role-policy --role-name RedshiftSpectrumRole --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess % aws iam attach-role-policy --role-name RedshiftSpectrumRole --policy-arn arn:aws:iam::aws:policy/AWSGlueConsoleFullAccess

- Next, we will attach this role to our cluster using the

redshift modify-cluster-iam-rolesCLI command. Note that you need to update the role ARN with the value from your environment:% aws redshift modify-cluster-iam-roles --cluster-identifier mycluster --add-iam-roles arn:aws:iam::<ACCOUNT-ID>:role/RedshiftSpectrumRole --region us-east-1

- The cluster change will take a few minutes to be applied. After the change is applied, the cluster will be ready to fetch data from the S3 data lake. To connect to the cluster, we will use the built-in query editor found in the AWS Management Console. To find the editor, navigate to the Redshift console, and see the left-hand side menu. The editor will need to establish a connection. Make sure you select the cluster we created earlier, and type

devas the database name andadminas the username.Note

We didn't set a database name earlier. Redshift uses

devas the default value. - In the editor page, we will need to create a table to store the data. Let's name the table

yellowtrips_3mo, as we will only store 3 months' worth of data:create table yellowtrips_3mo (vendorid varchar(10), tpep_pickup_datetime datetime, tpep_dropoff_datetime datetime, passenger_count int, trip_distance float, ratecodeid varchar(10), store_and_fwd_flag char(1), pulocationid varchar(10), dolocationid varchar(10), payment_type varchar(10), fare_amount float, extra float, mta_tax float, tip_amount float, tolls_amount float, improvement_surcharge float, total_amount float, congestion_surcharge float);

- Then, let's copy 3 months' worth of data into the data warehouse. Let's use the

COPYcommand, as follows:copy yellowtrips_3mo from 's3://data-lake-afo59dkg84/yellowtrips/yellow_tripdata_2020-10.csv' iam_role 'arn:aws:iam::xxxxxxxxxxxx:role/ RedshiftSpectrumRole' FORMAT AS CSV dateformat 'auto' ignoreheader 1; copy yellowtrips_3mo from 's3://data-lake-afo59dkg84/yellowtrips/yellow_tripdata_2020-11.csv' iam_role 'arn:aws:iam::xxxxxxxxxxxx:role/ RedshiftSpectrumRole' FORMAT AS CSV dateformat 'auto' ignoreheader 1; copy yellowtrips_3mo from 's3://data-lake-afo59dkg84/yellowtrips/yellow_tripdata_2020-12.csv' iam_role 'arn:aws:iam::xxxxxxxxxxxx:role/ RedshiftSpectrumRole' FORMAT AS CSV dateformat 'auto' ignoreheader 1;

- At this stage, we have a data lake with 12 months' worth of data and a data warehouse that contains only the most recent data (3 months). One of the characteristics of the Lake House architecture is that it allows its users to query the data lake from the data warehouse. Feel free to query the data and start getting an understanding of the dataset. Let's create the external schema so that we can enable the Spectrum feature. Use the following command in your Redshift editor. Replace the role ARN with the values from your environment:

create external schema spectrum_schema from data catalog database 'my-data-lake-db' iam_role 'arn:aws:iam::xxxxxxxxxxxx:role/RedshiftSpectrumRole' create external database if not exists;

- Let's just compare the size of the two tables using a simple

count(*)query:select count(*) from public.yellowtrips_3mo; select count(*) from spectrum_schema.yellowtrips;

The first query will run against the recent data in the data warehouse. The second will run against the first query using the Spectrum nodes using the data in the data lake. As expected, the number of records in the data lake should be much higher than the number of records in the data warehouse. Specifically, the query result was 24,648,499 for the year 2020 and 4,652,013 records for the last 3 months of 2020.

Note

The Spectrum queries use the Spectrum nodes and are charged separately from the Redshift cluster. Every query incurs an added cost based on the data it needs to scan. Refer to the AWS pricing for details.

Feel free to experiment with the data and trigger a few queries to understand the dataset. When you finish with the Redshift cluster, you can pause the cluster so that you stop the on-demand billing. Once the cluster is paused you will only pay for the cluster storage.