Data scaling

The values of each feature in a dataset can span very different ranges. So, it is sometimes important to scale them so that all features are on a level playing field. Through this statistical procedure, it's possible to compare variables that belong to different distributions or are expressed in different units.

Getting ready

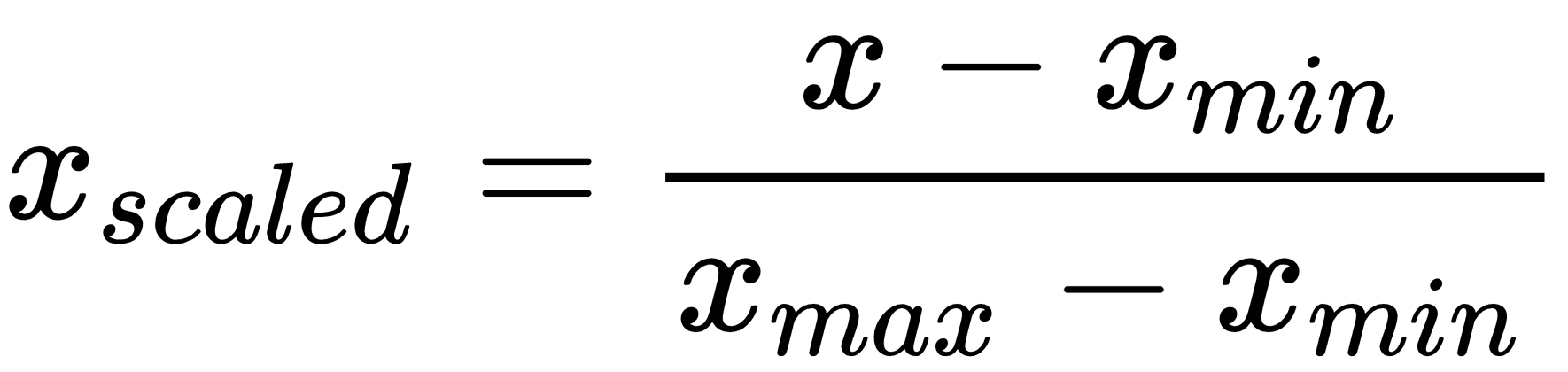

We'll use the min-max method (usually called feature scaling) to get all of the scaled data in the range [0, 1]. The formula used to achieve this is as follows:

To scale features between a given minimum and maximum value—in our case, between 0 and 1—so that the maximum absolute value of each feature is scaled to unit size, the preprocessing.MinMaxScaler() function can be used.
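For reference, min-max scaling to a general target range can be written out as below. The symbols $a$ and $b$ (the lower and upper limits of the target range) and $x_{\min}$, $x_{\max}$ (the minimum and maximum of one feature) are notation chosen here for illustration:

```latex
x' = a + \frac{(x - x_{\min})\,(b - a)}{x_{\max} - x_{\min}},
\qquad\text{which for } a = 0,\ b = 1 \text{ reduces to}\qquad
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}
```

As a quick check against the output shown in this recipe: a feature with minimum -1.5 and maximum 4 maps the value 3.3 to (3.3 + 1.5) / (4 + 1.5) = 4.8 / 5.5 ≈ 0.8727.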

How to do it...

Let's see how to scale data in Python:

- Let's start by defining the data_scaler variable:

>> data_scaler = preprocessing.MinMaxScaler(feature_range=(0, 1))

- Now we will use the fit_transform() method, which fits the data and then transforms it (we will use the same data as in the previous recipe):

>> data_scaled = data_scaler.fit_transform(data)
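As a self-contained sketch of this step, the following assumes that the data array from the previous recipe holds the values below (they are consistent with the minimum, maximum, and scaled output printed later in this recipe). It also shows that fit_transform() gives the same result as calling fit() and then transform():

```python
import numpy as np
from sklearn import preprocessing

# Assumed input: chosen to match the min/max and scaled output in this recipe.
data = np.array([[3.0, -1.5, 2.0, -5.4],
                 [0.0, 4.0, -0.3, 2.1],
                 [1.0, 3.3, -1.9, -4.3]])

data_scaler = preprocessing.MinMaxScaler(feature_range=(0, 1))

# fit() learns the minimum and maximum of each column...
data_scaler.fit(data)
print("Learned min:", data_scaler.data_min_)
print("Learned max:", data_scaler.data_max_)

# ...and transform() applies them to the data.
data_scaled_two_step = data_scaler.transform(data)

# fit_transform() performs both steps in a single call.
data_scaled = preprocessing.MinMaxScaler(feature_range=(0, 1)).fit_transform(data)

# Both routes should agree, and the shape of the input is preserved.
print(np.allclose(data_scaled, data_scaled_two_step))
print(data_scaled.shape == data.shape)
```

Keeping fit() and transform() separate is useful when the limits learned on one dataset must be reused on another, for example on held-out test data.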

fit_transform() returns a NumPy array with the same shape as the input. To understand how this method has transformed the data, we display the minimum and maximum of each column in the array.

- First, we print them for the starting data:

>> print("Min: ",data.min(axis=0))

>> print("Max: ",data.max(axis=0))

The following results are returned:

Min: [ 0. -1.5 -1.9 -5.4]

Max: [3. 4. 2. 2.1]

- Now, let's do the same for the scaled data using the following code:

>> print("Min: ",data_scaled.min(axis=0))

>> print("Max: ",data_scaled.max(axis=0))

The following results are returned:

Min: [0. 0. 0. 0.]

Max: [1. 1. 1. 1.]

After scaling, every feature ranges from 0 to 1, the limits specified by feature_range.
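The limits do not have to be 0 and 1. As a small sketch, the following rescales the same (assumed) data to the range [-1, 1]; the variable names scaler_sym and data_sym are introduced here only for illustration:

```python
import numpy as np
from sklearn import preprocessing

# Assumed input: chosen to match the min/max and scaled output in this recipe.
data = np.array([[3.0, -1.5, 2.0, -5.4],
                 [0.0, 4.0, -0.3, 2.1],
                 [1.0, 3.3, -1.9, -4.3]])

# Request a different target interval through feature_range.
scaler_sym = preprocessing.MinMaxScaler(feature_range=(-1, 1))
data_sym = scaler_sym.fit_transform(data)

# Each column should now start at -1 and end at 1.
print("Min: ", data_sym.min(axis=0))
print("Max: ", data_sym.max(axis=0))
```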

- To display the scaled array, we will use the following code:

>> print(data_scaled)

The output will be displayed as follows:

[[ 1. 0. 1. 0. ]

[ 0. 1. 0.41025641 1. ]

[ 0.33333333 0.87272727 0. 0.14666667]]

Now, all the data is included in the same interval.
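To see where these numbers come from, the same result can be reproduced by hand with NumPy. This is only a sketch of the min-max arithmetic, not scikit-learn's internal code, and it again assumes the input values shown below:

```python
import numpy as np

# Assumed input: chosen to match the min/max and scaled output in this recipe.
data = np.array([[3.0, -1.5, 2.0, -5.4],
                 [0.0, 4.0, -0.3, 2.1],
                 [1.0, 3.3, -1.9, -4.3]])

# Column-wise minimum and maximum (one value per feature).
col_min = data.min(axis=0)
col_max = data.max(axis=0)

# Broadcasting subtracts and divides column by column.
data_scaled_manual = (data - col_min) / (col_max - col_min)
print(data_scaled_manual)
```

Note that this sketch does not guard against a constant column, where the denominator would be zero.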

How it works...

When features have different ranges, a feature with a larger numeric range can have a greater impact on the response variable than one with a smaller range, which can affect prediction accuracy. Our goal is to improve predictive accuracy by preventing this. Hence, we may need to scale the values of different features so that they fall within a similar range. Through this statistical procedure, it's possible to compare variables that belong to different distributions or are expressed in different units.
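To illustrate why differing ranges matter, here is a minimal sketch with made-up data (the income and age columns are hypothetical and not part of this recipe). It compares Euclidean distances before and after min-max scaling:

```python
import numpy as np

# Hypothetical samples: column 0 is income in dollars, column 1 is age in years.
samples = np.array([[30000.0, 25.0],
                    [32000.0, 60.0],
                    [90000.0, 27.0]])

def distances_from_first(x):
    """Euclidean distance from the first row to each of the other rows."""
    return np.linalg.norm(x[1:] - x[0], axis=1)

# On the raw data, the income column dominates the distance.
raw_dist = distances_from_first(samples)

# After min-max scaling, both columns contribute on a comparable scale.
col_min = samples.min(axis=0)
col_max = samples.max(axis=0)
scaled = (samples - col_min) / (col_max - col_min)
scaled_dist = distances_from_first(scaled)

print("Raw distances:   ", raw_dist)
print("Scaled distances:", scaled_dist)
```

On the raw data, the 35-year age gap between the first two samples barely registers next to the income differences. After scaling, a large gap in either feature carries similar weight.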

There's more...

Feature scaling consists of limiting the range of a set of values to a certain predefined interval. It guarantees that all features have exactly the same scale, but does not handle anomalous values well. This is because the extreme values become the endpoints of the new range. As a result, the remaining values are compressed into a narrow part of the interval, since their distance from the anomalous values is preserved proportionally.
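The effect of an anomalous value can be seen in a small sketch. The numbers below are invented for illustration: five ordinary values and a single outlier.

```python
import numpy as np

# Hypothetical feature: five typical values and one outlier (100.0).
values = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 100.0])

# Min-max scaling to [0, 1]; the outlier becomes the upper endpoint.
scaled = (values - values.min()) / (values.max() - values.min())
print(scaled)

# The five typical values are squeezed into a small slice near 0.
print("Largest typical value after scaling:", scaled[:5].max())
```

Here the five typical values all land below 0.05, so most of the [0, 1] interval is spent on the gap between them and the outlier.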

See also

- Scikit-learn's official documentation of the sklearn.preprocessing.MinMaxScaler class: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.MinMaxScaler.html