Introducing key features in Polars

Polars is a blazingly fast DataFrame library that allows you to manipulate and transform your structured data. It is designed to work on a single machine utilizing all the available CPUs.

Python has many other DataFrame libraries, including pandas and PySpark. Polars is one of the newest, and its performance has helped it gain popularity quickly.

A DataFrame is a two-dimensional structure that contains one or more Series. A Series is a one-dimensional structure, similar to an array or a list. You can think of a DataFrame as a table and a Series as a column. However, Polars is much more than that: several concepts and features make it a fast, high-performance DataFrame library. It’s good to have at least a basic understanding of these key features to get the most out of learning and using Polars.

At a high level, these are the key features that make Polars unique:

- Speed and efficiency

- Expressions

- The lazy API

Speed and efficiency

We know that Polars is fast and efficient. But what has contributed to making Polars the way it is today? There are a few main components that contribute to its speed and efficiency:

- The Rust programming language

- The Apache Arrow columnar format

- The lazy API

Polars is written in Rust, a systems programming language that offers performance and control over memory comparable to C/C++. Because Rust has strong support for concurrency, Polars can execute many operations in parallel, using all the CPU cores available on your machine without any configuration. This is referred to as embarrassingly parallel execution.

Polars is also based on Apache Arrow’s columnar memory format. That means Polars not only benefits from the optimizations that a columnar memory layout allows, but can also share data with other Arrow-based tools cheaply: it passes pointers to the original data instead of copying the data every time.

Finally, the lazy API makes Polars even faster and more efficient by applying several additional query optimizations. We’ll cover that shortly in The lazy API section.

These core components have essentially made it possible to implement the features that make Polars so fast and efficient.

Expressions

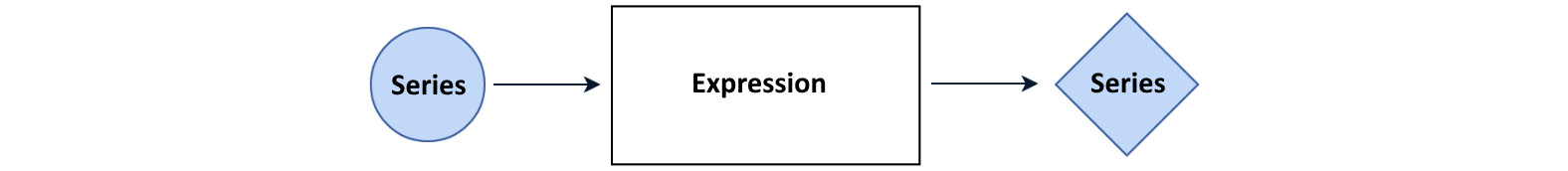

Expressions are what makes Polars’s syntax readable and easy to use. Its expressive syntax allows you to write complex logic in an organized, efficient fashion. Simply put, an expression takes a Series as an input and gives back a Series as an output (think of a Series like a column in a table or DataFrame). You can combine multiple expressions to build complex queries. This chain of expressions is the essence that makes your query even more powerful.

An expression takes a Series and gives back a Series as shown in the following diagram:

Figure 1.1 – The Polars expressions mechanism

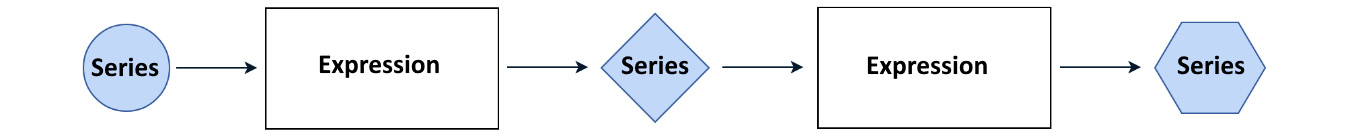

Multiple expressions work on a Series one after another as shown in the following diagram:

Figure 1.2 – Chained Polars expressions

Context is an important concept when working with expressions. A context is essentially the environment in which an expression is evaluated. In other words, an expression does nothing on its own; it is evaluated only when you use it within a context. Of the contexts available in Polars, these are the three main ones:

- Selection

- Filtering

- Group by/aggregation

We’ll look at specific examples and use cases of how you can utilize expressions in these contexts throughout the book. You’ll unlock the power of Polars as you learn to understand and use expressions extensively in your code.

Expressions are part of the clean and simple Polars API. This provides you with better ergonomics and usability for building your data transformation logic in Polars.

The lazy API

The lazy API makes Polars even faster and more efficient by applying additional optimizations such as predicate pushdown (applying filters as early as possible) and projection pushdown (reading only the columns that are actually needed). It also optimizes the query plan automatically, meaning that Polars works out an efficient way of executing your query. You can access the lazy API by using LazyFrame, the lazily evaluated counterpart of DataFrame.

The lazy API uses lazy evaluation, which is a strategy that involves delaying the evaluation of an expression until the resulting value is needed. With the lazy API, Polars processes your query end-to-end instead of processing it one operation at a time. You can see the full list of optimizations available with the lazy API in the Polars user guide here: https://pola-rs.github.io/polars/user-guide/lazy/optimizations/.

Another feature available in the lazy API is streaming, also called the streaming API. It allows you to process data that’s larger than the amount of memory available on your machine. For example, if you have 16 GB of RAM on your laptop, you may be able to process 50 GB of data.

However, keep in mind that there is a limitation: although larger-than-RAM processing is available for many operations, not all operations support it (at the time of writing this book).

Note

Eager evaluation is another evaluation strategy in which an expression is evaluated as soon as it is called. The Polars DataFrame and other DataFrame libraries like pandas use it by default.

See also

To learn more about how Python Polars works, including its optimizations and mechanics, please refer to these resources:

- https://pola-rs.github.io/polars/

- https://pola-rs.github.io/polars/user-guide/lazy/optimizations/

- https://blog.jetbrains.com/dataspell/2023/08/polars-vs-pandas-what-s-the-difference/

- Ritchie Vink Polars; done the fast, now the scale PyCon 2023 - https://www.youtube.com/watch?v=apuFzB4j2_E&list=LL&index=5

- Polars, the fastest DataFrame library you never heard of - https://www.youtube.com/watch?v=pzx99Mp52C8&list=LL&index=5

- Polars, the Fastest Dataframe Library You Never Heard of. - Ritchie Vink | PyData Global 2021 - https://www.youtube.com/watch?v=iwGIuGk5nCE