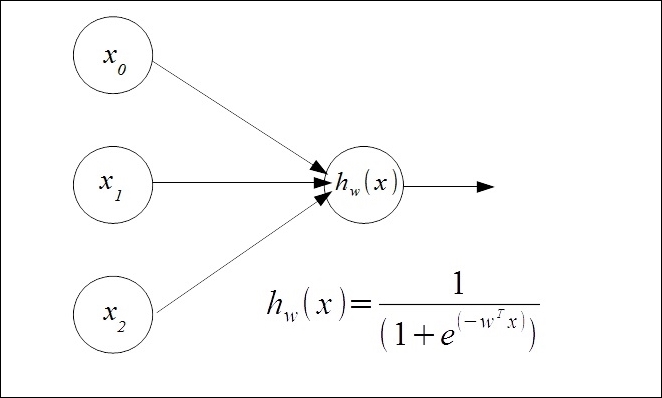

Logistic units

As a starting point, we use the idea of a logistic unit as a simplified model of a neuron. It consists of a set of inputs and outputs and an activation function. The activation function performs a calculation on the set of inputs and produces an output. Here, we set the activation function to the sigmoid that we used for logistic regression in the previous chapter:

$$g(z) = \frac{1}{1 + e^{-z}}$$

We have two input units, x1 and x2, and a bias unit, x0, that is set to one. These are fed into a hypothesis function that uses the sigmoid logistic function and a weight vector, w, which parameterizes the hypothesis function. The feature vector, consisting of binary values, and the parameter vector for the preceding example consist of the following:

$$x = \begin{bmatrix} x_0 \\ x_1 \\ x_2 \end{bmatrix}, \quad w = \begin{bmatrix} w_0 \\ w_1 \\ w_2 \end{bmatrix}$$
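To make the unit concrete, here is a minimal sketch in Python. The function names `sigmoid` and `logistic_unit` are our own, chosen for illustration, and the weights are passed in as a plain tuple in the order (w0, w1, w2).

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def logistic_unit(x1: float, x2: float, w: tuple) -> float:
    """Output of a single logistic unit with two inputs and a bias unit.

    w is assumed to be ordered (w0, w1, w2), where w0 multiplies the
    bias unit x0, which is fixed at one.
    """
    x = (1.0, float(x1), float(x2))  # x0 = 1 is the bias unit
    z = sum(wi * xi for wi, xi in zip(w, x))  # weighted sum of the inputs
    return sigmoid(z)


# With all weights at zero the weighted sum is zero, so g(0) = 0.5
print(logistic_unit(0, 1, (0.0, 0.0, 0.0)))  # 0.5
```

Whatever weights we choose, the output always lies strictly between zero and one, which is what lets us read it as a soft binary value.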

To see how we can get this unit to perform logical functions, let's give the model some weights. We can write the hypothesis as a function of the sigmoid, g, and our weights. To get started, we are just going to choose some weights by hand. We will learn shortly...
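As an illustration of a hand-picked weight vector, the following sketch checks that a logistic unit can reproduce logical AND. The weights (-30, 20, 20) are an assumption made for this example, not necessarily the values used in the text, and the helper names are hypothetical.

```python
import math
from itertools import product


def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def unit(x1: int, x2: int, w: tuple) -> float:
    """Hypothesis g(w0*x0 + w1*x1 + w2*x2) with the bias unit x0 = 1."""
    w0, w1, w2 = w
    return sigmoid(w0 * 1.0 + w1 * x1 + w2 * x2)


# Assumed weights for illustration: the large negative bias keeps the
# output near zero unless both inputs are one.
AND_WEIGHTS = (-30.0, 20.0, 20.0)


def truth_table(w: tuple) -> dict:
    """Round the unit's output to 0 or 1 for every binary input pair."""
    return {(x1, x2): round(unit(x1, x2, w))
            for x1, x2 in product((0, 1), repeat=2)}


for inputs, output in truth_table(AND_WEIGHTS).items():
    print(inputs, output)
```

Only the input (1, 1) yields a positive weighted sum (-30 + 20 + 20 = 10), so only that row rounds to one. Raising the bias weight to -10 while keeping the other two weights gives logical OR instead.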