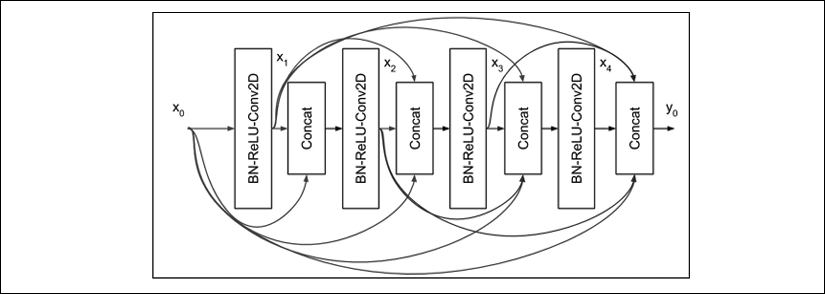

4. Densely Connected Convolutional Network (DenseNet)

Figure 2.4.1: A 4-layer Dense block in DenseNet. The input to each layer is made of all the previous feature maps.

DenseNet attacks the vanishing gradient problem with a different approach. Instead of using shortcut connections, DenseNet concatenates all the previous feature maps and uses them as the input to the next layer. The preceding figure shows an example of these dense interconnections within one Dense block.
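To make the contrast concrete, here is a minimal pure-Python sketch. It assumes feature maps are modelled as lists of channels, with each channel an H x W grid. A shortcut connection (as used in ResNet) sums two maps element-wise, so the channel count stays the same. A dense connection stacks the maps along the channel axis, so every earlier map survives unchanged. The helper names `make_map`, `shortcut_add`, and `dense_concat` are hypothetical and exist only for this illustration.

```python
# Feature maps are modelled as lists of channels; each channel is an
# H x W grid of floats (nested lists). Helper names are hypothetical.

def make_map(channels, fill, size=4):
    """Build a feature map whose every value equals `fill`."""
    return [[[fill] * size for _ in range(size)] for _ in range(channels)]

def shortcut_add(x, fx):
    """Shortcut-style merge: element-wise sum, channel count unchanged."""
    assert len(x) == len(fx), "addition needs matching channel counts"
    return [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(x, fx)]

def dense_concat(*maps):
    """Dense-style merge: stack channels, so channel counts accumulate."""
    return [channel for m in maps for channel in m]

x0 = make_map(16, 1.0)
x1 = make_map(16, 2.0)
added = shortcut_add(x0, x1)
stacked = dense_concat(x0, x1)
print(len(added), added[0][0][0])       # 16 3.0  (the two inputs are merged into one sum)
print(len(stacked), stacked[16][0][0])  # 32 2.0  (x1 is kept intact after x0's channels)
```

The summed map no longer separates the two inputs. The concatenated map still exposes `x0` and `x1` as distinct channels to whatever layer comes next.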

For simplicity, the figure shows only four layers. Notice that the input to layer l is the concatenation of all previous feature maps. If we let BN-ReLU-Conv2D be represented by the operation H(x), then the output of layer l is:

$$x_l = H(x_0, x_1, x_2, \ldots, x_{l-1}) \qquad \text{(Equation 2.4.1)}$$
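Equation 2.4.1 can be traced with a small, framework-free sketch of one Dense block. This is not a Keras implementation, and it makes several simplifying assumptions. Batch normalization is approximated per channel over a single sample's spatial values, with no learned scale or shift. The 3 x 3 convolution uses random, untrained weights, "same" zero padding, and no bias. All function names (`concat`, `batch_norm`, `relu`, `conv2d_3x3`, `H`, `dense_block`) are hypothetical. The point is the data flow: each layer receives the concatenation of every earlier feature map.

```python
import math
import random

# A feature map is a list of channels; each channel is an H x W nested list.

def concat(maps):
    """Concatenate feature maps along the channel axis."""
    return [channel for m in maps for channel in m]

def batch_norm(x, eps=1e-5):
    """Simplified BN: normalise each channel over its own H x W values
    (single sample, no learned scale or shift)."""
    out = []
    for ch in x:
        vals = [v for row in ch for v in row]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        std = math.sqrt(var + eps)
        out.append([[(v - mean) / std for v in row] for row in ch])
    return out

def relu(x):
    return [[[max(0.0, v) for v in row] for row in ch] for ch in x]

def conv2d_3x3(x, filters, rng):
    """3x3 convolution, stride 1, 'same' zero padding, random weights, no bias."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    # kernel[f][c][row][col]
    kernel = [[[[rng.uniform(-0.1, 0.1) for _ in range(3)] for _ in range(3)]
               for _ in range(c_in)] for _ in range(filters)]
    out = []
    for f in range(filters):
        ch = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                total = 0.0
                for c in range(c_in):
                    for di in range(3):
                        for dj in range(3):
                            ii, jj = i + di - 1, j + dj - 1
                            if 0 <= ii < h and 0 <= jj < w:
                                total += kernel[f][c][di][dj] * x[c][ii][jj]
                ch[i][j] = total
        out.append(ch)
    return out

def H(x, k, rng):
    """Composite BN-ReLU-Conv2D operation producing k new feature maps."""
    return conv2d_3x3(relu(batch_norm(x)), k, rng)

def dense_block(x0, num_layers, k, seed=0):
    """Layer l computes x_l = H(x_0, ..., x_{l-1}) on the concatenated maps."""
    rng = random.Random(seed)
    features = [x0]
    for _ in range(num_layers):
        x_l = H(concat(features), k, rng)
        features.append(x_l)
    return concat(features)  # x_0 followed by every layer's output

rng = random.Random(1)
x0 = [[[rng.random() for _ in range(6)] for _ in range(6)] for _ in range(16)]
out = dense_block(x0, num_layers=4, k=12)
print(len(out))  # 64 channels: the 16 input channels plus 12 from each of 4 layers
```

Because the block only ever concatenates, the original 16 input channels are still present, unchanged, at the front of the output. The "same" padding keeps the 6 x 6 spatial size constant, which is what makes channel-wise concatenation possible in the first place.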

Conv2D uses a kernel of size 3 (that is, 3 × 3). The number of feature maps generated per layer is called the growth rate, k. Typically, k = 12, but k = 24 is also used in the paper Densely Connected Convolutional Networks by Huang et al. (2017) [5]. Therefore...
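The effect of the growth rate on a block's width can be checked with a little arithmetic. The sketch below follows directly from Equation 2.4.1: if the block input x_0 has k0 channels and every layer adds k, then layer l receives k0 + (l - 1)k channels. It assumes k0 = 16 purely for illustration. It also counts the weights of each 3 x 3 Conv2D, ignoring any bias terms. The function names are hypothetical.

```python
def dense_block_channels(k0, k, num_layers):
    """Channel counts in a dense block with growth rate k.

    Layer l (1-based) receives x_0, ..., x_{l-1}: k0 + (l - 1) * k channels.
    The block output (x_0 through x_L concatenated) has k0 + L * k channels.
    """
    inputs = [k0 + (l - 1) * k for l in range(1, num_layers + 1)]
    return inputs, k0 + num_layers * k

def conv3x3_weights(c_in, k):
    """Weights in one 3 x 3 Conv2D mapping c_in channels to k (bias ignored)."""
    return 3 * 3 * c_in * k

for k in (12, 24):
    inputs, out_channels = dense_block_channels(k0=16, k=k, num_layers=4)
    weights = sum(conv3x3_weights(c, k) for c in inputs)
    print(f"k={k}: layer inputs {inputs}, block output {out_channels}, conv weights {weights}")
```

With these assumptions, k = 12 gives layer inputs of 16, 28, 40, and 52 channels and a 64-channel block output. k = 24 gives 16, 40, 64, and 88 and a 112-channel output. Each layer's input width grows linearly with k, and so does its convolution cost.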