For classification algorithms, the winner is...

Let’s take a moment to compare the performance metrics of the various algorithms we’ve discussed. However, keep in mind that these metrics are highly dependent on the data we’ve used in these examples, and they can significantly vary for different datasets.

The performance of a model can be influenced by factors such as the nature of the data, the quality of the data, and how well the assumptions of the model align with the data.

Here’s a summary of our observations:

|

Algorithm |

Accuracy |

Recall |

Precision |

|

Decision tree |

0.94 |

0.93 |

0.88 |

|

|

0.93 |

0.90 |

0.87 |

|

|

0.93 |

0.90 |

0.87 |

|

|

0.91 |

0.81 |

0.89 |

|

|

0.89 |

0.71 |

0.92 |

|

|

0.92 |

0.81 |

0.92 |

From the table above, the decision tree classifier exhibits the highest performance in terms of both accuracy and recall in this particular context. For precision, we see a tie between the SVM and Naive Bayes algorithms.

However, remember that these results are data-dependent. For instance, SVM might excel in scenarios where data is linearly separable or can be made so through kernel transformations. Naive Bayes, on the other hand, performs well when the features are independent. Decision trees and Random Forests might be preferred when we have complex non-linear relationships. Logistic regression is a solid choice for binary classification tasks and can serve as a good benchmark model. Lastly, XGBoost, being an ensemble technique, is powerful when dealing with a wide range of data types and often leads in terms of model performance across various tasks.

So, it’s critical to understand your data and the requirements of your task before choosing a model. These results are merely a starting point, and deeper exploration and validation should be performed for each specific use case.

Understanding regression algorithms

A supervised machine learning model uses one of the regression algorithms if the label is a continuous variable. In this case, the machine learning model is called a regressor.

To provide a more concrete understanding, let’s take a couple of examples. Suppose we want to predict the temperature for the next week based on historical data, or we aim to forecast sales for a retail store in the coming months.

Both temperatures and sales figures are continuous variables, which means they can take on any value within a specified range, as opposed to categorical variables, which have a fixed number of distinct categories. In such scenarios, we would use a regressor rather than a classifier.

In this section, we will present various algorithms that can be used to train a supervised machine learning regression model—or, put simply, a regressor. Before we go into the details of the algorithms, let’s first create a challenge for these algorithms to test their performance, abilities, and effectiveness.

Presenting the regressors challenge

Similar to the approach that we used with the classification algorithms, we will first present a problem to be solved as a challenge for all regression algorithms. We will call this common problem the regressors challenge. Then, we will use three different regression algorithms to address the challenge. This approach of using a common challenge for different regression algorithms has two benefits:

- We can prepare the data once and use the prepared data on all three regression algorithms.

- We can compare the performance of three regression algorithms in a meaningful way, as we will use them to solve the same problem.

Let’s look at the problem statement of the challenge.

The problem statement of the regressors challenge

Predicting the mileage of different vehicles is important these days. An efficient vehicle is good for the environment and is also cost-effective. The mileage can be estimated from the power of the engine and the characteristics of the vehicle. Let’s create a challenge for regressors to train a model that can predict the Miles per Gallon (MPG) of a vehicle based on its characteristics.

Let’s look at the historical dataset that we will use to train the regressors.

Exploring the historical dataset

The following are the features of the historical dataset data that we have:

|

Name |

Type |

Description |

|

|

Category |

Identifies a particular vehicle |

|

|

Continuous |

The number of cylinders (between four and eight) |

|

|

Continuous |

The displacement of the engine in cubic inches |

|

|

Continuous |

The horsepower of the engine |

|

|

Continuous |

The time it takes to accelerate from 0 to 60 mph (in seconds) |

The label for this problem is a continuous variable, MPG, that specifies the MPG for each of the vehicles.

Let’s first design the data processing pipeline for this problem.

Feature engineering using a data processing pipeline

Let’s see how we can design a reusable processing pipeline to address the regressors challenge. As mentioned, we will prepare the data once and then use it in all the regression algorithms. Let’s follow these steps:

- We will start by importing the dataset, as follows:

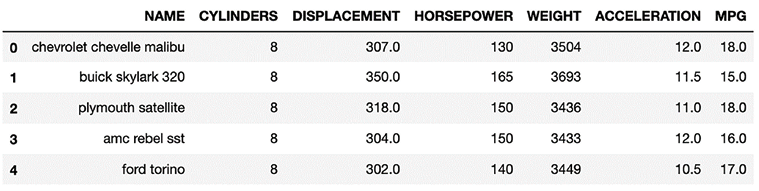

dataset = pd.read_csv('https://storage.googleapis.com/neurals/data/data/auto.csv') - Let’s now preview the dataset:

dataset.head(5) - This is how the dataset will look:

Figure 7.16: Please add a caption here

- Now, let’s proceed on to feature selection. Let’s drop the

NAMEcolumn, as it is only an identifier that is needed for cars. Columns that are used to identify the rows in our dataset are not relevant to training the model. Let’s drop this column. - Let’s convert all of the input variables and impute all the

nullvalues:dataset=dataset.drop(columns=['NAME']) dataset.head(5) dataset= dataset.apply(pd.to_numeric, errors='coerce') dataset.fillna(0, inplace=True)Imputation improves the quality of the data and prepares it to be used to train the model. Now, let’s see the final step.

- Let’s divide the data into testing and training partitions:

y=dataset['MPG'] X=dataset.drop(columns=['MPG']) # Splitting the dataset into the Training set and Test set from sklearn.model_selection import train_test_split from sklearn.cross_validation import train_test_split X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.25, random_state = 0)

This has created the following four data structures:

X_train: A data structure containing the features of the training dataX_test: A data structure containing the features of the training testy_train: A vector containing the values of the label in the training datasety_test: A vector containing the values of the label in the testing dataset

Now, let’s use the prepared data on three different regressors so that we can compare their performance.