Chapter 8: State of the art in Natural Language Processing

Activity 11: Build a Text Summarization Model

Solution:

- Import the necessary Python packages and classes.

import os

import re

import pdb

import string

import numpy as np

import pandas as pd

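from keras import backend as K

from keras.layers import Activation, Bidirectional, Concatenate, Dense, Dot, Input, LSTM, RepeatVector

from keras.models import Model

from keras.optimizers import Adam

from keras.utils import to_categorical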

import matplotlib.pyplot as plt

%matplotlib inline

- Load the dataset and read the file.

path_data = "news_summary_small.csv"

df_text_file = pd.read_csv(path_data)

df_text_file.headlines = df_text_file.headlines.str.lower()

df_text_file.text = df_text_file.text.str.lower()

lengths_text = df_text_file.text.apply(len)

dataset = list(zip(df_text_file.text.values, df_text_file.headlines.values))

- Build the character-level vocabulary dictionaries.

input_texts = []

target_texts = []

input_chars = set()

target_chars = set()

for line in dataset:
    input_text, target_text = list(line[0]), list(line[1])
    target_text = ['BEGIN_'] + target_text + ['_END']
    input_texts.append(input_text)
    target_texts.append(target_text)
    for character in input_text:
        if character not in input_chars:
            input_chars.add(character)
    for character in target_text:
        if character not in target_chars:
            target_chars.add(character)

input_chars.add("<unk>")

input_chars.add("<pad>")

target_chars.add("<pad>")

input_chars = sorted(input_chars)

target_chars = sorted(target_chars)

human_vocab = dict(zip(input_chars, range(len(input_chars))))

machine_vocab = dict(zip(target_chars, range(len(target_chars))))

inv_machine_vocab = dict(enumerate(sorted(machine_vocab)))
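The vocabulary construction above can be sketched on two made-up strings. The `samples` list and the variable names below are illustrative only and are not part of the activity's dataset; the point is that the character-to-index map and its inverse round-trip.

```python
# Toy stand-in for the headline column; not the activity's data.
samples = ["moon seen", "meteor near moon"]

chars = set()
for text in samples:
    chars.update(text)          # collect every distinct character
chars.add("<pad>")              # padding token, as in the activity

chars = sorted(chars)
vocab = {ch: i for i, ch in enumerate(chars)}    # character -> index
inv_vocab = {i: ch for ch, i in vocab.items()}   # index -> character

encoded = [vocab[ch] for ch in "moon"]
decoded = "".join(inv_vocab[i] for i in encoded)
print(encoded, decoded)
```

Sorting the characters before indexing keeps the mapping stable across runs, which matters when a trained model is reloaded later.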

def string_to_int(string_in, length, vocab):
    """
    Converts a string into a list of integers representing the positions of the
    input string's characters in the "vocab"

    Arguments:
    string_in -- input string
    length -- the number of time steps you'd like, determines if the output will be padded or cut
    vocab -- vocabulary, dictionary used to index every character of "string_in"

    Returns:
    rep -- list of integers (size = length) representing the position of each character in the vocabulary
    """
    # Lowercase the input to standardize it, and drop commas.
    string_in = string_in.lower()
    string_in = string_in.replace(',','')
    if len(string_in) > length:
        string_in = string_in[:length]
    # Map unseen characters to the index of '<unk>' so the result stays numeric.
    # Only human_vocab defines '<unk>'; target strings come from the data that built machine_vocab.
    unk_index = vocab.get('<unk>')
    rep = [vocab.get(x, unk_index) for x in string_in]
    if len(string_in) < length:
        rep += [vocab['<pad>']] * (length - len(string_in))
    return rep
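To see the padding, truncation, and unknown-character handling in isolation, here is a minimal sketch on a toy vocabulary. The helper `encode_fixed_length` and `toy_vocab` are hypothetical names introduced for illustration; the sketch assumes unknown characters map to the index of `<unk>` and, unlike `string_to_int`, it does not strip commas.

```python
def encode_fixed_length(text, length, vocab):
    """Hypothetical helper mirroring string_to_int: cut or pad to `length`."""
    text = text.lower()[:length]                 # truncate if too long
    unk = vocab["<unk>"]
    rep = [vocab.get(ch, unk) for ch in text]    # unseen chars -> <unk> index
    rep += [vocab["<pad>"]] * (length - len(rep))  # pad if too short
    return rep

# Toy vocabulary, assumed for this example only.
toy_vocab = {"<pad>": 0, "<unk>": 1, "a": 2, "b": 3, "c": 4}

short = encode_fixed_length("ab", 5, toy_vocab)       # padded to 5 steps
long_ = encode_fixed_length("abcabc", 4, toy_vocab)   # cut to 4 steps
unknown = encode_fixed_length("az", 3, toy_vocab)     # 'z' is not in the vocab
print(short, long_, unknown)
```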

def preprocess_data(dataset, human_vocab, machine_vocab, Tx, Ty):
    X, Y = zip(*dataset)
    X = np.array([string_to_int(i, Tx, human_vocab) for i in X])
    Y = [string_to_int(t, Ty, machine_vocab) for t in Y]
    print("X shape from preprocess: {}".format(X.shape))
    Xoh = np.array(list(map(lambda x: to_categorical(x, num_classes=len(human_vocab)), X)))
    Yoh = np.array(list(map(lambda x: to_categorical(x, num_classes=len(machine_vocab)), Y)))
    return X, np.array(Y), Xoh, Yoh
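The one-hot step in `preprocess_data` turns an integer array of shape (m, Tx) into a tensor of shape (m, Tx, vocabulary size). The sketch below shows that shape change with plain NumPy; `one_hot` is a hypothetical stand-in for `to_categorical`, and the indices are made up.

```python
import numpy as np

def one_hot(indices, num_classes):
    """NumPy stand-in (assumption) for to_categorical on an integer array."""
    return np.eye(num_classes, dtype="float32")[np.asarray(indices)]

# Two toy sequences of length 4 over a 5-symbol vocabulary.
X = np.array([[2, 3, 0, 0],
              [4, 2, 3, 1]])
Xoh = one_hot(X, num_classes=5)

print(X.shape, Xoh.shape)
```

Taking `Xoh.argmax(axis=-1)` recovers the original integer indices, which is the same operation the inference step later uses to decode predictions.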

def softmax(x, axis=1):
    """Softmax activation function.

    # Arguments
        x : Tensor.
        axis: Integer, axis along which the softmax normalization is applied.

    # Returns
        Tensor, output of softmax transformation.

    # Raises
        ValueError: In case 'dim(x) == 1'.
    """
    ndim = K.ndim(x)
    if ndim == 2:
        return K.softmax(x)
    elif ndim > 2:
        e = K.exp(x - K.max(x, axis=axis, keepdims=True))
        s = K.sum(e, axis=axis, keepdims=True)
        return e / s
    else:
        raise ValueError('Cannot apply softmax to a tensor that is 1D')
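The custom softmax normalizes along axis 1 (the time axis) rather than the last axis, so the attention weights for each example sum to 1 across the Tx input positions. A NumPy sketch of the same computation, with made-up shapes and the hypothetical name `softmax_np`:

```python
import numpy as np

def softmax_np(x, axis=1):
    """NumPy analogue of the softmax above (for illustration only)."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for stability
    return e / e.sum(axis=axis, keepdims=True)

# Toy "energies" of shape (m, Tx, 1) = (2, 6, 1).
energies = np.random.RandomState(0).randn(2, 6, 1)
alphas = softmax_np(energies, axis=1)

print(alphas.sum(axis=1))  # one total per example
```

Subtracting the per-axis maximum before exponentiating does not change the result but avoids overflow for large energies.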

- Run the previous code snippets to load the data, build the vocabulary dictionaries, and define the utility functions used later. Then define the lengths of the input and output character sequences.

Tx = 460

Ty = 75

X, Y, Xoh, Yoh = preprocess_data(dataset, human_vocab, machine_vocab, Tx, Ty)

- Define the shared layers used by the attention mechanism (repeator, concatenator, densors, activator, dotor).

# Define shared layers as global variables

repeator = RepeatVector(Tx)

concatenator = Concatenate(axis=-1)

densor1 = Dense(10, activation = "tanh")

densor2 = Dense(1, activation = "relu")

activator = Activation(softmax, name='attention_weights')

dotor = Dot(axes = 1)

- Define the one-step attention function.

def one_step_attention(h, s_prev):
    """
    Performs one step of attention: Outputs a context vector computed as a dot product of the attention weights
    "alphas" and the hidden states "h" of the Bi-LSTM.

    Arguments:
    h -- hidden state output of the Bi-LSTM, numpy-array of shape (m, Tx, 2*n_h)
    s_prev -- previous hidden state of the (post-attention) LSTM, numpy-array of shape (m, n_s)

    Returns:
    context -- context vector, input of the next (post-attention) LSTM cell
    """
    # Use repeator to repeat s_prev to be of shape (m, Tx, n_s) so that you can concatenate it with all hidden states "h"
    s_prev = repeator(s_prev)
    # Use concatenator to concatenate h and s_prev on the last axis
    concat = concatenator([h, s_prev])
    # Use densor1 to propagate concat through a small fully-connected neural network to compute the "intermediate energies" variable e.
    e = densor1(concat)
    # Use densor2 to propagate e through a small fully-connected neural network to compute the "energies" variable energies.
    energies = densor2(e)
    # Use "activator" on "energies" to compute the attention weights "alphas"
    alphas = activator(energies)
    # Use dotor together with "alphas" and "h" to compute the context vector to be given to the next (post-attention) LSTM cell
    context = dotor([alphas, h])
    return context
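To make the tensor shapes in one attention step concrete, the following NumPy sketch mirrors the repeat, concatenate, dense, softmax, and dot operations. It is an illustration under assumptions, not the Keras implementation: the weights `W1`, `W2` are random stand-ins for the two dense layers, and the sizes are toy values.

```python
import numpy as np

rng = np.random.RandomState(0)
m, Tx, n_h, n_s = 2, 5, 3, 4               # toy sizes, assumed

h = rng.randn(m, Tx, 2 * n_h)              # Bi-LSTM outputs
s_prev = rng.randn(m, n_s)                 # previous decoder state

# Random stand-ins for densor1 (10 units, tanh) and densor2 (1 unit, relu).
W1, b1 = rng.randn(2 * n_h + n_s, 10), np.zeros(10)
W2, b2 = rng.randn(10, 1), np.zeros(1)

s_rep = np.repeat(s_prev[:, np.newaxis, :], Tx, axis=1)   # (m, Tx, n_s)
concat = np.concatenate([h, s_rep], axis=-1)              # (m, Tx, 2*n_h + n_s)
e = np.tanh(concat @ W1 + b1)                             # (m, Tx, 10)
energies = np.maximum(e @ W2 + b2, 0.0)                   # (m, Tx, 1)

# Softmax over the time axis gives one weight per input position.
exp = np.exp(energies - energies.max(axis=1, keepdims=True))
alphas = exp / exp.sum(axis=1, keepdims=True)             # (m, Tx, 1)

# Dot over axis 1: weighted sum of the encoder states.
context = np.einsum("mti,mtj->mij", alphas, h)            # (m, 1, 2*n_h)
print(context.shape)
```

The context vector keeps a time dimension of length 1, which is what lets it be fed to the post-attention LSTM as a one-step sequence.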

- Define the hidden state sizes for the encoder (n_h) and the decoder (n_s), then create the post-attention LSTM cell and the output layer.

n_h = 32

n_s = 64

post_activation_LSTM_cell = LSTM(n_s, return_state = True)

output_layer = Dense(len(machine_vocab), activation=softmax)

- Define the model architecture and run it to obtain a model.

def model(Tx, Ty, n_h, n_s, human_vocab_size, machine_vocab_size):
    """
    Arguments:
    Tx -- length of the input sequence
    Ty -- length of the output sequence
    n_h -- hidden state size of the Bi-LSTM
    n_s -- hidden state size of the post-attention LSTM
    human_vocab_size -- size of the python dictionary "human_vocab"
    machine_vocab_size -- size of the python dictionary "machine_vocab"

    Returns:
    model -- Keras model instance
    """
    # Define the inputs of your model with a shape (Tx, human_vocab_size)
    # Define s0 and c0, initial hidden state and cell state for the decoder LSTM of shape (n_s,)
    X = Input(shape=(Tx, human_vocab_size), name="input_first")
    s0 = Input(shape=(n_s,), name='s0')
    c0 = Input(shape=(n_s,), name='c0')
    s = s0
    c = c0
    # Initialize empty list of outputs
    outputs = []
    # Define your pre-attention Bi-LSTM. Remember to use return_sequences=True.
    h = Bidirectional(LSTM(n_h, return_sequences=True))(X)
    # Iterate for Ty steps
    for t in range(Ty):
        # Perform one step of the attention mechanism to get back the context vector at step t
        context = one_step_attention(h, s)
        # Apply the post-attention LSTM cell to the "context" vector.
        # Pass: initial_state = [hidden state, cell state]
        s, _, c = post_activation_LSTM_cell(context, initial_state = [s,c])
        # Apply Dense layer to the hidden state output of the post-attention LSTM
        out = output_layer(s)
        # Append "out" to the "outputs" list
        outputs.append(out)
    # Create model instance taking three inputs and returning the list of outputs.
    model = Model(inputs=[X, s0, c0], outputs=outputs)
    return model

model = model(Tx, Ty, n_h, n_s, len(human_vocab), len(machine_vocab))

- Define the loss function, optimizer, and other hyperparameters. Also initialize the decoder state vectors.

opt = Adam(lr = 0.005, beta_1=0.9, beta_2=0.999, decay = 0.01)

model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['accuracy'])

s0 = np.zeros((len(Xoh), n_s))

c0 = np.zeros((len(Xoh), n_s))

outputs = list(Yoh.swapaxes(0,1))
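Because the model returns one output per decoder step, the targets must be a list of Ty arrays, each of shape (m, vocabulary size), rather than a single (m, Ty, vocabulary size) tensor. A small NumPy sketch of what the `swapaxes` call does, using made-up sizes and a hypothetical `Yoh_toy` array:

```python
import numpy as np

m, Ty, vocab_size = 4, 3, 5     # toy sizes, assumed
Yoh_toy = np.arange(m * Ty * vocab_size).reshape(m, Ty, vocab_size)

# Move the time axis to the front, then split along it into a list.
targets = list(Yoh_toy.swapaxes(0, 1))

print(len(targets), targets[0].shape)
```

Element `targets[t][i]` is the target for example `i` at decoder step `t`, so it equals `Yoh_toy[i, t]`.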

- Fit the model to the data.

model.fit([Xoh, s0, c0], outputs, epochs=1, batch_size=100)

- Run the inference step on new text.

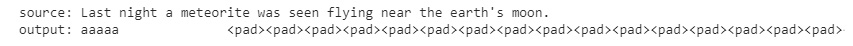

EXAMPLES = ["Last night a meteorite was seen flying near the earth's moon."]

for example in EXAMPLES:
    source = string_to_int(example, Tx, human_vocab)
    source = np.array(list(map(lambda x: to_categorical(x, num_classes=len(human_vocab)), source)))
    source = source[np.newaxis, :]
    # Predict on a single example, so pass one row of the initial decoder states.
    prediction = model.predict([source, s0[:1], c0[:1]])
    prediction = np.argmax(prediction, axis = -1)
    output = [inv_machine_vocab[int(i)] for i in prediction]
    print("source:", example)
    print("output:", ''.join(output))

The output is as follows: