NumPy

When handling data, we often need a way to work with multidimensional arrays. As we discussed previously, we also have to apply some basic mathematical and statistical operations on that data. This is exactly where NumPy positions itself. It provides support for large n-dimensional arrays and has built-in support for many high-level mathematical and statistical operations.

Note

Before NumPy, there was a library called Numeric. However, it's no longer used, because NumPy's signature ndarray allows the performant handling of large and high-dimensional matrices.

Ndarrays are the essence of NumPy. They are what makes it faster than using Python's built-in lists. Other than the built-in list data type, ndarrays provide a stridden view of memory (for example, int[] in Java). Since they are homogeneously typed, meaning all the elements must be of the same type, the stride is consistent, which results in less memory wastage and better access times.

The stride is the number of locations between the beginnings of two adjacent elements in an array. They are normally measured in bytes or in units of the size of the array elements. A stride can be larger or equal to the size of the element, but not smaller; otherwise, it would intersect the memory location of the next element.

Note

Remember that NumPy arrays have a defined data type. This means you are not able to insert strings into an integer type array. NumPy is mostly used with double-precision data types.

The following are some of the built-in methods that we will use in the exercises and activities of this chapter.

mean

NumPy provides implementations of all the mathematical operations we covered in the Overview of Statistics section of this chapter. The mean, or average, is the one we will look at in more detail in the upcoming exercise:

Note

The # symbol in the code snippet below denotes a code comment. Comments are added into code to help explain specific bits of logic.

# mean value for the whole dataset np.mean(dataset) # mean value of the first row np.mean(dataset[0]) # mean value of the whole first column np.mean(dataset[:, 0] # mean value of the first 10 elements of the second row np.mean(dataset[1, 0:10])

median

Several of the mathematical operations have the same interface. This makes them easy to interchange if necessary. The median, var, and std methods will be used in the upcoming exercises and activities:

# median value for the whole dataset np.median(dataset) # median value of the last row using reverse indexing np.median(dataset[-1]) # median value of values of rows >5 in the first column np.median(dataset[5:, 0])

Note that we can index every element from the end of our dataset as we can from the front by using reverse indexing. It's a simple way to get the last or several of the last elements of a list. Instead of [0] for the first/last element, it starts with dataset[-1] and then decreases until dataset[-len(dataset)], which is the first element in the dataset.

var

As we mentioned in the Overview of Statistics section, the variance describes how far a set of numbers is spread out from their mean. We can calculate the variance using the var method of NumPy:

# variance value for the whole dataset np.var(dataset) # axis used to get variance per column np.var(dataset, axis=0) # axis used to get variance per row np.var(dataset, axis=1)

std

One of the advantages of the standard deviation is that it remains in the scalar system of the data. This means that the unit of the deviation will have the same unit as the data itself. The std method works just like the others:

# standard deviation for the whole dataset np.std(dataset) # std value of values from the 2 first rows and columns np.std(dataset[:2, :2]) # axis used to get standard deviation per row np.std(dataset, axis=1)

Now we will do an exercise to load a dataset and calculate the mean using these methods.

Note

All the exercises and activities in this chapter will be developed in Jupyter Notebooks. Please download the GitHub repository with all the prepared templates from https://packt.live/31USkof. Make sure you have installed all the libraries as mentioned in the preface.

Exercise 1.01: Loading a Sample Dataset and Calculating the Mean Using NumPy

In this exercise, we will be loading the normal_distribution.csv dataset and calculating the mean of each row and each column in it:

- Using the Anaconda Navigator launch either Jupyter Labs or Jupyter Notebook. In the directory of your choice, create a

Chapter01/Exercise1.01folder. - Create a new Jupyter Notebook and save it as

Exercise1.01.ipynbin theChapter01/Exercise1.01folder. - Now, begin writing the code for this exercise as shown in the steps below. We begin with the import statements. Import

numpywith an alias:import numpy as np

- Use the

genfromtxtmethod of NumPy to load the dataset:Note

The code snippet shown here uses a backslash (

\) to split the logic across multiple lines. When the code is executed, Python will ignore the backslash, and treat the code on the next line as a direct continuation of the current line.dataset = \ np.genfromtxt('../../Datasets/normal_distribution.csv', \ delimiter=',')Note

In the preceding snippet, and for the rest of the book, we will be using a relative path to load the datasets. However, for the preceding code to work as intended, you need to follow the folder arrangement as present in this link: https://packt.live/3ftUu3P. Alternatively, you can also use the absolute path; for example,

dataset = np.genfromtxt('C:/Datasets/normal_distribution.csv', delimiter=','). If your Jupyter Notebook is saved in the same folder as the dataset, then you can simply use the filename:dataset = np.genfromtxt('normal_distribution.csv', delimiter=',')The

genfromtxtmethod helps load the data from a given text or.csvfile. If everything works as expected, the generation should run through without any error or output.Note

The

numpy.genfromtextmethod is less efficient than thepandas.read_csvmethod. We shall refrain from going into the details of why this is the case as this explanation is beyond the scope of this text. - Check the data you just imported by simply writing the name of the ndarray in the next cell. Simply executing a cell that returns a value, such as an ndarray, will use Jupyter formatting, which looks nice and, in most cases, displays more information than using

print:# looking at the dataset dataset

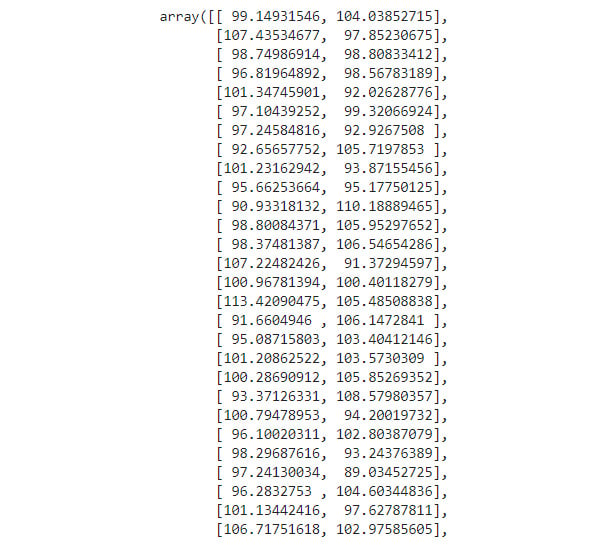

A section of the output resulting from the preceding code is as follows:

Figure 1.9: The first few rows of the normal_distribution.csv file

- Print the shape using the

dataset.shapecommand to get a quick overview of our dataset. This will give us output in the form (rows, columns):dataset.shape

We can also call the rows as instances and the columns as features. This means that our dataset has 24 instances and 8 features. The output of the preceding code is as follows:

(24,8)

- Calculate the mean after loading and checking our dataset. The first row in a NumPy array can be accessed by simply indexing it with zero; for example,

dataset[0]. As we mentioned previously, NumPy has some built-in functions for calculations such as the mean. Callnp.mean()and pass in the dataset's first row to get the result:# calculating the mean for the first row np.mean(dataset[0])

The output of the preceding code is as follows:

100.177647525

- Now, calculate the mean of the first column by using

np.mean()in combination with the column indexingdataset[:, 0]:np.mean(dataset[:, 0])

The output of the preceding code is as follows:

99.76743510416668

Whenever we want to define a range to select from a dataset, we can use a colon,

:, to provide start and end values for the to be selected range. If we don't provide start and end values, the default of 0 to n is used, where n is the length of the current axis. - Calculate the mean for every single row, aggregated in a list, using the

axistools of NumPy. Note that by simply passing theaxisparameter in thenp.mean()call, we can define the dimension our data will be aggregated on.axis=0is horizontal andaxis=1is vertical. Get the result for each row by usingaxis=1:np.mean(dataset, axis=1)

The output of the preceding code is as follows:

Figure 1.10: Mean of the elements of each row

Get the mean of each column by using

axis=0:np.mean(dataset, axis=0)

The output of the preceding code is as follows:

Figure 1.11: Mean of elements for each column

- Calculate the mean of the whole matrix by summing all the values we retrieved in the previous steps:

np.mean(dataset)

The output of the preceding code is as follows:

100.16536917390624

Note

To access the source code for this specific section, please refer to https://packt.live/30IkAMp.

You can also run this example online at https://packt.live/2Y4yHK1.

You are already one step closer to using NumPy in combination with plotting libraries and creating impactful visualizations. Since we've now covered the very basics and calculated the mean, it's now up to you to solve the upcoming activity.

Activity 1.01: Using NumPy to Compute the Mean, Median, Variance, and Standard Deviation of a Dataset

In this activity, we will use the skills we've learned to import datasets and perform some basic calculations (mean, median, variance, and standard deviation) to compute our tasks.

Perform the following steps to implement this activity:

- Open the

Activity1.01.ipynbJupyter Notebook from theChapter01folder to do this activity. Import NumPy and give it the aliasnp. - Load the

normal_distribution.csvdataset by using thegenfromtxtmethod from NumPy. - Print a subset of the first two rows of the dataset.

- Load the dataset and calculate the mean of the third row. Access the third row by using index 2,

dataset[2]. - Index the last element of an ndarray in the same way a regular Python list can be accessed.

dataset[:, -1]will give us the last column of every row. - Get a submatrix of the first three elements of every row of the first three columns by using the double-indexing mechanism of NumPy.

- Calculate the median for the last row of the dataset.

- Use reverse indexing to define a range to get the last three columns. We can use

dataset[:, -3:]here. - Aggregate the values along an

axisto calculate the rows. We can useaxis=1here. - Calculate the variance for each column using

axis 0. - Calculate the variance of the intersection of the last two rows and the first two columns.

- Calculate the standard deviation for the dataset.

Note

The solution for this activity can be found via this link.

You have now completed your first activity using NumPy. In the following activities, this knowledge will be consolidated further.

Basic NumPy Operations

In this section, we will learn about basic NumPy operations such as indexing, slicing, splitting, and iterating and implement them in an exercise.

Indexing

Indexing elements in a NumPy array, at a high level, works the same as with built-in Python lists. Therefore, we can index elements in multi-dimensional matrices:

# index single element in outermost dimension dataset[0] # index in reversed order in outermost dimension dataset[-1] # index single element in two-dimensional data dataset[1, 1] # index in reversed order in two-dimensional data dataset[-1, -1]

Slicing

Slicing has also been adapted from Python's lists. Being able to easily slice parts of lists into new ndarrays is very helpful when handling large amounts of data:

# rows 1 and 2 dataset[1:3] # 2x2 subset of the data dataset[:2, :2] # last row with elements reversed dataset[-1, ::-1] # last 4 rows, every other element up to index 6 dataset[-5:-1, :6:2]

Splitting

Splitting data can be helpful in many situations, from plotting only half of your time-series data to separating test and training data for machine learning algorithms.

There are two ways of splitting your data, horizontally and vertically. Horizontal splitting can be done with the hsplit method. Vertical splitting can be done with the vsplit method:

# split horizontally in 3 equal lists np.hsplit(dataset, (3)) # split vertically in 2 equal lists np.vsplit(dataset, (2))

Iterating

Iterating the NumPy data structures, ndarrays, is also possible. It steps over the whole list of data one after another, visiting every single element in the ndarray once. Considering that they can have several dimensions, indexing gets very complex.

The nditer is a multi-dimensional iterator object that iterates over a given number of arrays:

# iterating over whole dataset (each value in each row) for x in np.nditer(dataset): print(x)

The ndenumerate will give us exactly this index, thus returning (0, 1) for the second value in the first row:

Note

The triple-quotes ( """ ) shown in the code snippet below are used to denote the start and end points of a multi-line code comment. Comments are added into code to help explain specific bits of logic.

""" iterating over the whole dataset with indices matching the position in the dataset """ for index, value in np.ndenumerate(dataset): print(index, value)

Now, we will perform an exercise using these basic NumPy operations.

Exercise 1.02: Indexing, Slicing, Splitting, and Iterating

In this exercise, we will use the features of NumPy to index, slice, split, and iterate ndarrays to consolidate what we've learned. A client has provided us with a dataset, normal_distribution_splittable.csv, wants us to confirm that the values in the dataset are closely distributed around the mean value of 100.

Note

You can obviously plot a distribution and show the spread of data, but here we want to practice implementing the aforementioned operations using the NumPy library.

Let's use the features of NumPy to index, slice, split, and iterate ndarrays.

Indexing

- Create a new Jupyter Notebook and save it as

Exercise1.02.ipynbin theChapter01/Exercise1.02folder. - Import the necessary libraries:

import numpy as np

- Load the

normal_distribution.csvdataset using NumPy. Have a look at the ndarray to verify that everything works:dataset = np.genfromtxt('../../Datasets/'\ 'normal_distribution_splittable.csv', \ delimiter=',')Note

As mentioned in the previous exercise, here too we have used a relative path to load the dataset. You can change the path depending on where you have saved the Jupyter Notebook and the dataset.

Remember that we need to show that our dataset is closely distributed around a mean of 100; that is, whatever value we wish to show/calculate should be around 100. For this purpose, first we will calculate the mean of the values of the second and the last row.

- Use simple indexing for the second row, as we did in our first exercise. For a clearer understanding, all the elements of the second row are saved to a variable and then we calculate the mean of these elements:

second_row = dataset[1] np.mean(second_row)

The output of the preceding code is as follows:

96.90038836444445

- Now, reverse index the last row and calculate the mean of that row. Always remember that providing a negative number as the index value will index the list from the end:

last_row = dataset[-1] np.mean(last_row)

The output of the preceding code is as follows:

100.18096645222221

From the outputs obtained in step 4 and 5, we can say that these values indeed are close to 100. To further convince our client, we will access the first value of the first row and the last value of the second last row.

- Index the first value of the first row using the Python standard syntax of [0][0]:

first_val_first_row = dataset[0][0] np.mean(first_val_first_row)

The output of the preceding code is as follows:

99.14931546

- Use reverse indexing to access the last value of the second last row (we want to use the combined access syntax here). Remember that

-1means the last element:last_val_second_last_row = dataset[-2, -1] np.mean(last_val_second_last_row)

The output of the preceding code is as follows:

101.2226037

Note

For steps 6 and 7, even if you had not used

np.mean(), you would have got the same values as presently shown. This is because the mean of a single value will be the value itself. You can try the above steps with the following code:first_val_first_row = dataset[0][0]first_val_first_rowlast_val_second_last_row = dataset[-2, -1]last_val_second_last_row

From all the preceding outputs, we can confidently say that the values we obtained hover around a mean of 100. Next, we'll use slicing, splitting, and iterating to achieve our goal.

Slicing

- Create a 2x2 matrix that starts at the second row and second column using

[1:3, 1:3]:""" slicing an intersection of 4 elements (2x2) of the first two rows and first two columns """ subsection_2x2 = dataset[1:3, 1:3] np.mean(subsection_2x2)

The output of the preceding code is as follows:

95.63393608250001

- In this task, we want to have every other element of the fifth row. Provide indexing of

::2as our second element to get every second element of the given row:every_other_elem = dataset[4, ::2] np.mean(every_other_elem)

The output of the preceding code is as follows:

98.35235805800001

Introducing the second column into the indexing allows us to add another layer of complexity. The third value allows us to only select certain values (such as every other element) by providing a value of 2. This means it skips the values between and only takes each second element from the used list.

- Reverse the elements in a slice using negative numbers:

reversed_last_row = dataset[-1, ::-1] np.mean(reversed_last_row)

The output of the preceding code is as follows:

100.18096645222222

Splitting

- Use the

hsplitmethod to split our dataset into three equal parts:hor_splits = np.hsplit(dataset,(3))

Note that if the dataset can't be split with the given number of slices, it will throw an error.

- Split the first third into two equal parts vertically. Use the

vsplitmethod to vertically split the dataset in half. It works likehsplit:ver_splits = np.vsplit(hor_splits[0],(2))

- Compare the shapes. We can see that the subset has the required half of the rows and the third half of the columns:

print("Dataset", dataset.shape) print("Subset", ver_splits[0].shape)The output of the preceding code is as follows:

Dataset (24, 9) Subset (12, 3)

Iterating

- Iterate over the whole dataset (each value in each row):

curr_index = 0 for x in np.nditer(dataset): print(x, curr_index) curr_index += 1

The output of the preceding code is as follows:

Figure 1.12: Iterating the entire dataset

Looking at the given piece of code, we can see that the index is simply incremented with each element. This only works with one-dimensional data. If we want to index multi-dimensional data, this won't work.

- Use the

ndenumeratemethod to iterate over the whole dataset. It provides two positional values,indexandvalue:for index, value in np.ndenumerate(dataset): print(index, value)

The output of the preceding code is as follows:

Figure 1.13: Enumerating the dataset with multi-dimensional data

Notice that all the output values we obtained are close to our mean value of 100. Thus, we have successfully managed to convince our client using several NumPy methods that our data is closely distributed around the mean value of 100.

Note

To access the source code for this specific section, please refer to https://packt.live/2Neteuh.

You can also run this example online at https://packt.live/3e7K0qq.

We've already covered most of the basic data wrangling methods for NumPy. In the next section, we'll take a look at more advanced features that will give you the tools you need to get better at analyzing your data.

Advanced NumPy Operations

In this section, we will learn about advanced NumPy operations such as filtering, sorting, combining, and reshaping and then implement them in an exercise.

Filtering

Filtering is a very powerful tool that can be used to clean up your data if you want to avoid outlier values.

In addition to the dataset[dataset > 10] shorthand notation, we can use the built-in NumPy extract method, which does the same thing using a different notation, but gives us greater control with more complex examples.

If we only want to extract the indices of the values that match our given condition, we can use the built-in where method. For example, np.where(dataset > 5) will return a list of indices of the values from the initial dataset that is bigger than 5:

# values bigger than 10 dataset[dataset > 10] # alternative – values smaller than 3 np.extract((dataset < 3), dataset) # values bigger 5 and smaller 10 dataset[(dataset > 5) & (dataset < 10)] # indices of values bigger than 5 (rows and cols) np.where(dataset > 5)

Sorting

Sorting each row of a dataset can be really useful. Using NumPy, we are also able to sort on other dimensions, such as columns.

In addition, argsort gives us the possibility to get a list of indices, which would result in a sorted list:

# values sorted on last axis np.sort(dataset) # values sorted on axis 0 np.sort(dataset, axis=0) # indices of values in sorted list np.argsort(dataset)

Combining

Stacking rows and columns onto an existing dataset can be helpful when you have two datasets of the same dimension saved to different files.

Given two datasets, we use vstack to stack dataset_1 on top of dataset_2, which will give us a combined dataset with all the rows from dataset_1, followed by all the rows from dataset_2.

If we use hstack, we stack our datasets "next to each other," meaning that the elements from the first row of dataset_1 will be followed by the elements of the first row of dataset_2. This will be applied to each row:

# combine datasets vertically np.vstack([dataset_1, dataset_2]) # combine datasets horizontally np.hstack([dataset_1, dataset_2]) # combine datasets on axis 0 np.stack([dataset_1, dataset_2], axis=0)

Reshaping

Reshaping can be crucial for some algorithms. Depending on the nature of your data, it might help you to reduce dimensionality to make visualization easier:

# reshape dataset to two columns x rows dataset.reshape(-1, 2) # reshape dataset to one row x columns np.reshape(dataset, (1, -1))

Here, -1 is an unknown dimension that NumPy identifies automatically. NumPy will figure out the length of any given array and the remaining dimensions and will thus make sure that it satisfies the required standard.

Next, we will perform an exercise using advanced NumPy operations.

Exercise 1.03: Filtering, Sorting, Combining, and Reshaping

This final exercise for NumPy provides some more complex tasks to consolidate our learning. It will also combine most of the previously learned methods as a recap. We'll use the filtering features of NumPy for sorting, stacking, combining, and reshaping our data:

- Create a new Jupyter Notebook and save it as

Exercise1.03.ipynbin theChapter01/Exercise1.03folder. - Import the necessary libraries:

import numpy as np

- Load the

normal_distribution_splittable.csvdataset using NumPy. Make sure that everything works by having a look at the ndarray:dataset = np.genfromtxt('../../Datasets/'\ 'normal_distribution_splittable.csv', \ delimiter=',') dataset - You will obtain the following output:

Figure 1.14: Rows and columns of the dataset

Note

For ease of presentation, we have shown only a part of the output.

Filtering

- Get values greater than 105 by supplying the condition

> 105in the brackets:vals_greater_five = dataset[dataset > 105] vals_greater_five

You will obtain the following output:

Figure 1.15: Filtered dataset displaying values greater than 105

You can see in the preceding figure that all the values in the output are greater than 105.

- Extract the values of our dataset that are between the values 90 and 95. To use more complex conditions, we might want to use the

extractmethod of NumPy:vals_between_90_95 = np.extract((dataset > 90) \ & (dataset < 95), dataset) vals_between_90_95

You will obtain the following output:

Figure 1.16: Filtered dataset displaying values between 90 and 95

The preceding output clearly shows that only values lying between 90 and 95 are printed.

- Use the

wheremethod to get the indices of values that have a delta of less than 1; that is, [individual value] – 100 should be less than 1. Use those indices (row, col)in a list comprehension and print them out:rows, cols = np.where(abs(dataset - 100) < 1) one_away_indices = [[rows[index], \ cols[index]] for (index, _) \ in np.ndenumerate(rows)] one_away_indices

The

wheremethod from NumPy allows us to getindices (rows, cols)for each of the matching values.Observe the following truncated output:

Figure 1.17: Indices of the values that have a delta of less than 1

Let us confirm if we indeed obtained the right indices. The first set of indices 0,0 refer to the very first value in the output shown in Figure 1.14. Indeed, this is the correct value as abs (99.14931546 – 100) < 1. We can quickly check this for a couple of more values and conclude that indeed the code has worked as intended.

Note

List comprehensions are Python's way of mapping over data. They're a handy notation for creating a new list with some operation applied to every element of the old list.

For example, if we want to double the value of every element in this list, list = [1, 2, 3, 4, 5], we would use list comprehensions like this: doubled_list=[x*x for x in list]. This gives us the following list: [1, 4, 9, 16, 25]. To get a better understanding of list comprehensions, please visit https://docs.python.org/3/tutorial/datastructures.html#list-comprehensions.

Sorting

- Sort each row in our dataset by using the

sortmethod:row_sorted = np.sort(dataset) row_sorted

As described before, by default, the last axis will be used. In a two-dimensional dataset, this is axis 1 which represents the rows. So, we can omit the

axis=1argument in thenp.sortmethod call. You will obtain the following output:

Figure 1.18: Dataset with sorted rows

Compare the preceding output with that in Figure 1.14. What do you observe? The values along the rows have been sorted in an ascending order as expected.

- With multi-dimensional data, we can use the

axisparameter to define which dataset should be sorted. Use the0axes to sort the values by column:col_sorted = np.sort(dataset, axis=0) col_sorted

A truncated version of the output is as follows:

Figure 1.19: Dataset with sorted columns

As expected, the values along the columns (91.37294597, 91.02628776, 94.11176915, and so on) are now sorted in an ascending order.

- Create a sorted index list and use fancy indexing to get access to sorted elements easily. To keep the order of our dataset and obtain only the values of a sorted dataset, we will use

argsort:index_sorted = np.argsort(dataset[0]) dataset[0][index_sorted]

The output is as follows:

Figure 1.20: First row with sorted values from argsort

As can be seen from the preceding output, we have obtained the first row with sorted values.

Combining

- Use the combining features to add the second half of the first column back together, add the second column to our combined dataset, and add the third column to our combined dataset.

thirds = np.hsplit(dataset, (3)) halfed_first = np.vsplit(thirds[0], (2)) halfed_first[0]

The output of the preceding code is as follows:

Figure 1.21: Splitting the dataset

- Use

vstackto vertically combine thehalfed_firstdatasets:first_col = np.vstack([halfed_first[0], halfed_first[1]]) first_col

The output of the preceding code is as follows:

Figure 1.22: Vertically combining the dataset

After stacking the second half of our split dataset, we have one-third of our initial dataset stacked together again. Now, we want to add the other two remaining datasets to our

first_coldataset. - Use the

hstackmethod to combine our already combinedfirst_colwith the second of the three split datasets:first_second_col = np.hstack([first_col, thirds[1]]) first_second_col

A truncated version of the output resulting from the preceding code is as follows:

Figure 1.23: Horizontally combining the dataset

- Use

hstackto combine the last one-third column with our dataset. This is the same thing we did with our second-third column in the previous step:full_data = np.hstack([first_second_col, thirds[2]]) full_data

A truncated version of the output resulting from the preceding code is as follows:

Figure 1.24: The complete dataset

Reshaping

- Reshape our dataset into a single list using the

reshapemethod:single_list = np.reshape(dataset, (1, -1)) single_list

A truncated version of the output resulting from the preceding code is as follows:

Figure 1.25: Reshaped dataset

- Provide a

-1for the dimension. This tells NumPy to figure the dimension out itself:# reshaping to a matrix with two columns two_col_dataset = dataset.reshape(-1, 2) two_col_dataset

A truncated version of the output resulting from the preceding code is as follows:

Figure 1.26: The dataset in a two-column format

Note

To access the source code for this specific section, please refer to https://packt.live/2YD4AZn.

You can also run this example online at https://packt.live/3e6F7Ol.

You have now used many of the basic operations that are needed so that you can analyze a dataset. Next, we will be learning about pandas, which will provide several advantages when working with data that is more complex than simple multi-dimensional numerical data. pandas also support different data types in datasets, meaning that we can have columns that hold strings and others that have numbers.

NumPy, as you've seen, has some powerful tools. Some of them are even more powerful when combined with pandas DataFrames.