1.2 Real-Time Computer Systems

1.2.1 Time and Criticality Issues

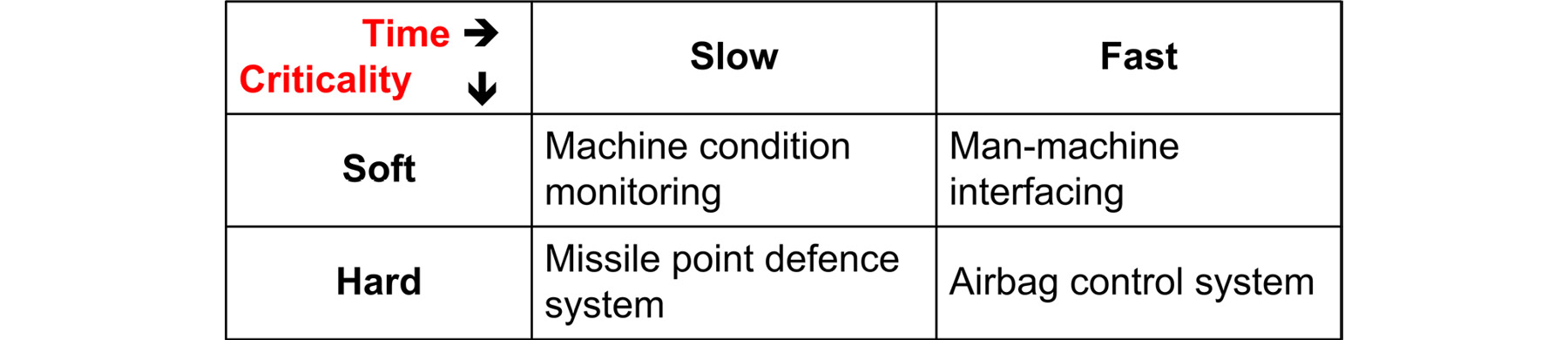

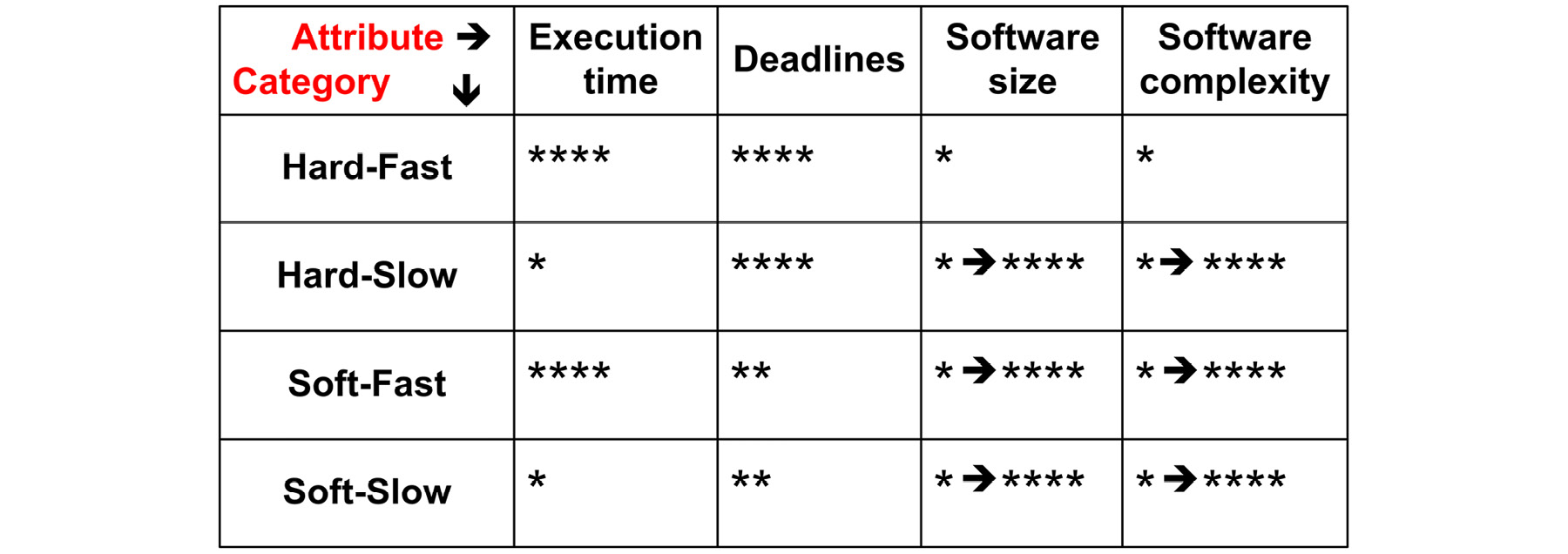

From what's been said so far, one factor distinguishes real-time systems from batch and online applications: timeliness. Unfortunately, this is a rather limited definition; a more precise one is needed. Many ways of categorizing real-time systems have been proposed and are in use. One particular pragmatic scheme, based on time and criticality, is shown in Figure 1.4. An arbitrary boundary between slow and fast is 1 second (chosen because problems shift from individual computing issues to overall system aspects at around this point). The related attributes are given in Figure 1.5.

Hard, fast embedded systems tend, in computing terms, to be small (or maybe a small, localized part of a larger system). Computation times are short (typically, in the tens of milliseconds or faster), and deadlines are critical. Software complexity is usually low, especially in safety-critical work. A good example is the airbag deployment system in motor vehicles. Late deployment defeats the whole purpose of airbag protection:

Figure 1.4: Real-time system categorization

Figure 1.5: Attributes of real-time systems

Hard, slow systems do not fall into any particular size category (though many, as with process controllers, are small). An illustrative example of such an application is an anti-aircraft missile-based point-defense system for fast patrol boats. Here, the total reaction time is on the order of 10 seconds. However, the consequences of failing to respond in this time frame are self-evident.

Larger systems usually include comprehensive, and sometimes complex, human-machine interfaces (HMIs). Such interfaces may form an integral part of the total system operation as, for instance, in integrated weapon fire-control systems. Fast operator responses may be required, but deadlines are not as critical as in the previous cases. Significant tolerance can be permitted (in fact, this is generally true when humans form part of the system operation). HMI software tends to be large and complex.

The final category, soft/slow, is typified by condition monitoring, trend analysis, and statistical analysis in, for example, factory automation. Frequently, such software is large and complex. Applications like these may be classified as information processing (IP) systems.
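The two axes of the categorization scheme (criticality of the deadline, and speed relative to the 1-second boundary) can be written down as a small classifier. This is only an illustrative sketch: the type and function names are hypothetical, and treating a response time of exactly 1 second as "slow" is an assumption, since the text describes the boundary as arbitrary.

```c
#include <stdbool.h>

/* Hypothetical names for the four categories described in the text. */
typedef enum { HARD_FAST, HARD_SLOW, SOFT_FAST, SOFT_SLOW } rt_category_t;

/* The arbitrary fast/slow boundary quoted in the text: 1 second. */
#define FAST_SLOW_BOUNDARY_MS 1000u

/* Classify a system by deadline criticality and required response time.
 * Assumption: exactly 1000 ms counts as "slow". */
rt_category_t rt_classify(bool deadline_is_critical,
                          unsigned long response_time_ms)
{
    bool fast = response_time_ms < FAST_SLOW_BOUNDARY_MS;

    if (deadline_is_critical) {
        return fast ? HARD_FAST : HARD_SLOW;
    }
    return fast ? SOFT_FAST : SOFT_SLOW;
}
```

Under these assumptions, an airbag controller with a critical deadline of a few tens of milliseconds classifies as `HARD_FAST`, while the point-defense example with its 10-second reaction time classifies as `HARD_SLOW`.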

1.2.2 Real-Time System Structures

It is clear that the fundamental difference between real-time and other (such as batch and interactive) systems is timeliness. However, this in itself tells us little about the structure of such computer systems. So, before looking at modern real-time systems, it's worth digressing to consider the setup of IT-type mainframe installations. While most modern mainframe systems are large and complex (and may be used for a whole variety of jobs), they have many features in common. First, the essential architectures are broadly similar; the real differences lie in the applications themselves and the application software. Second, the physical environments are usually benign ones, often including air conditioning. Peripheral devices include terminals, PCs, printers, plotters, disks, tapes, communication links, and little else. Common to many mainframe installations is the use of terabytes of disk and tape storage. The installation itself is staffed and maintained by professional data processing (DP) personnel. It requires maintenance in the broadest sense, including that for upgrading and modifying programs. In such a setting, it's not surprising that the computer is the focus of attention and concern.

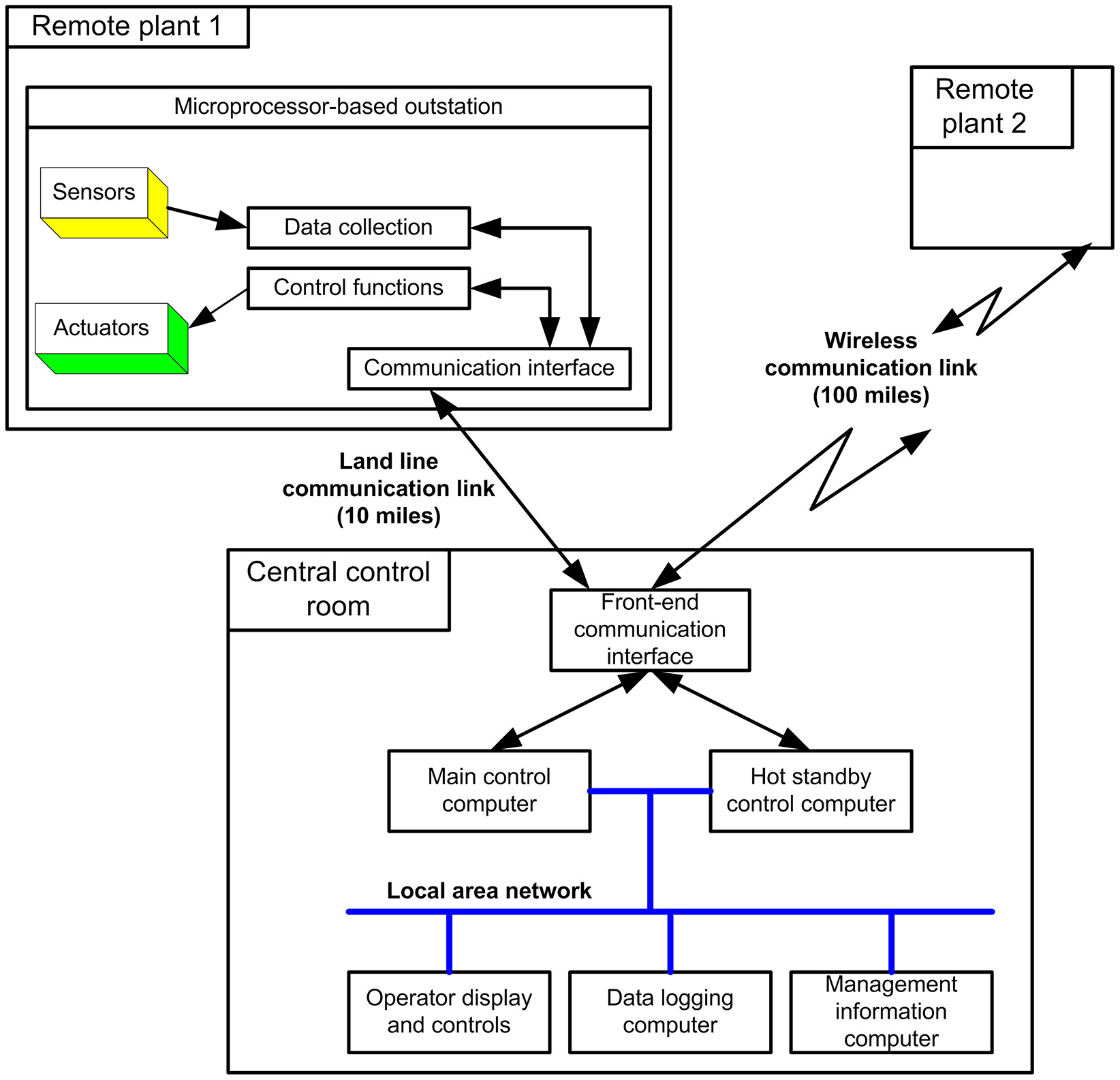

By contrast, real-time systems come in many types and sizes. The largest, in geographical terms, are telemetry control systems (Figure 1.6):

Figure 1.6: Telemetry control system

Such systems are widely used in the gas, oil, water, and electricity industries. They provide centralized control and monitoring of remote sites from a single control room.

Smaller in size, but probably more complex in nature, are missile control systems (Figure 1.7):

Figure 1.7: Sea Viper missile system

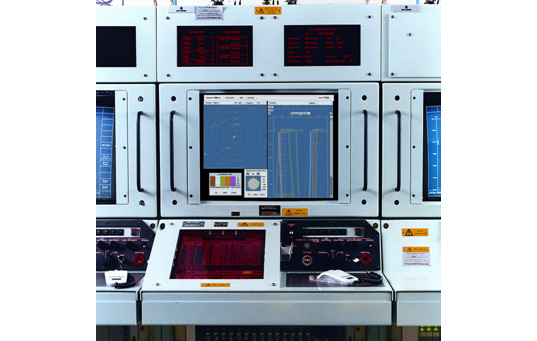

Many larger embedded applications involve a considerable degree of complex man-machine interaction. Typical of these are the command and control systems of modern naval vessels (Figure 1.8):

Figure 1.8: Submarine control system console (reproduced with permission from BAE Systems)

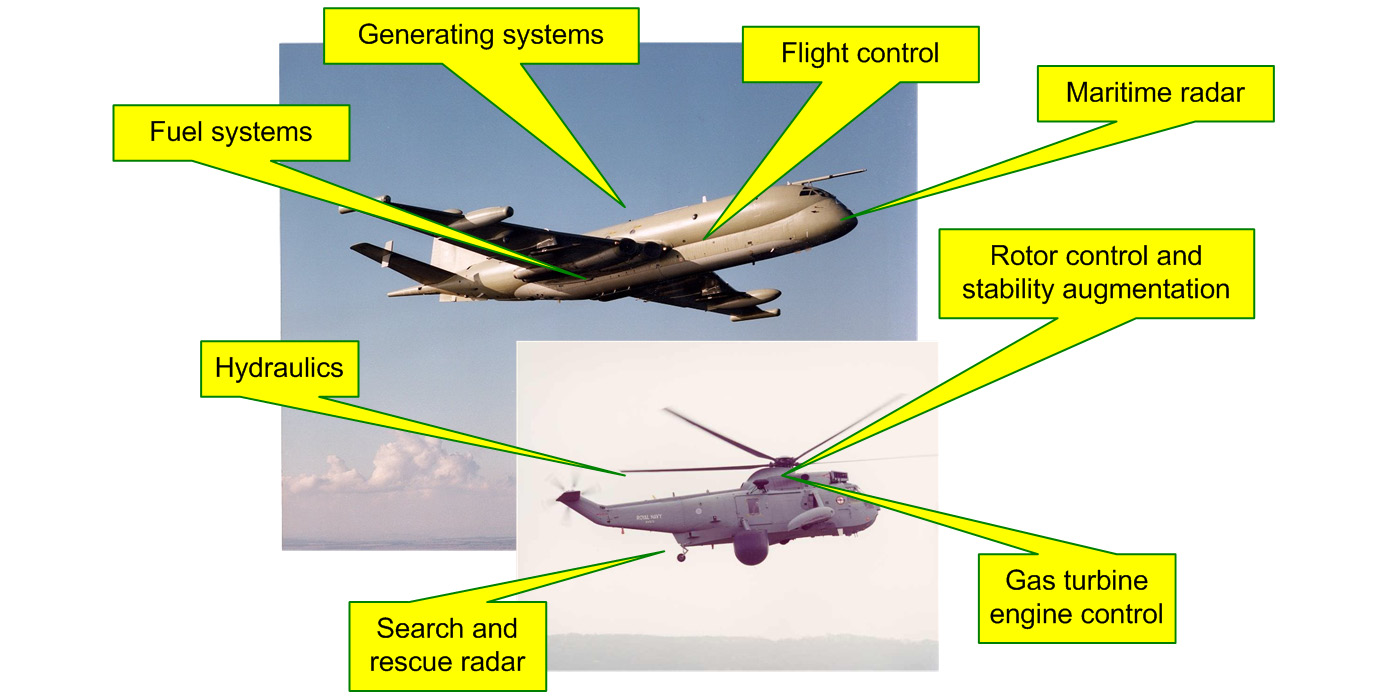

And, of course, one of the major application areas of real-time systems is that of avionics (Figure 1.9):

Figure 1.9: Typical avionic platforms (reproduced with permission from Thales Ltd.)

These, in particular, involve numerous hard, fast, and safety-critical systems.

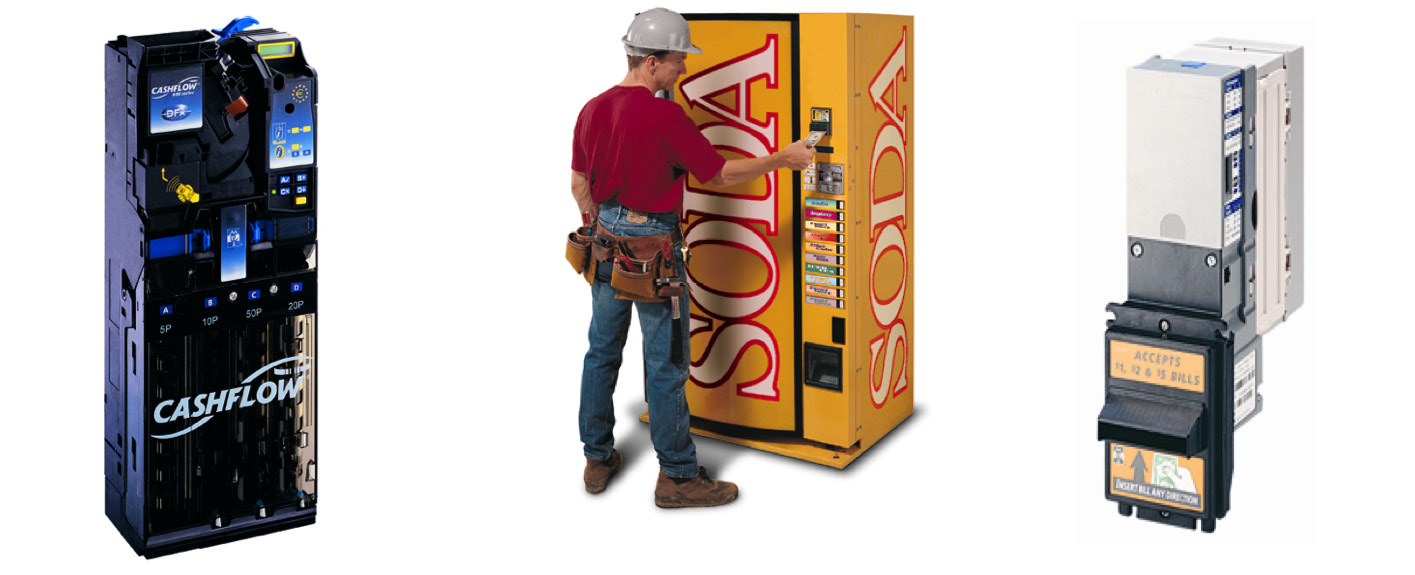

On the industrial scene, there are many installations that use computer-based standalone controllers (often for quite dedicated functions). Applications include vending machines (Figure 1.10), printer controllers, anti-lock braking, and burglar alarms; the list is endless.

Figure 1.10: Microprocessor-based vending machine units

These examples differ in many detailed ways from DP installations, and such factors are discussed next. There are, though, two fundamental points. First, as stated previously, the computer is seen to be merely one component of a larger system. Second, the user does not normally have the requirements – or facilities – to modify programs on a day-to-day basis. In practice, most users won't have the knowledge or skills to reprogram the machine.

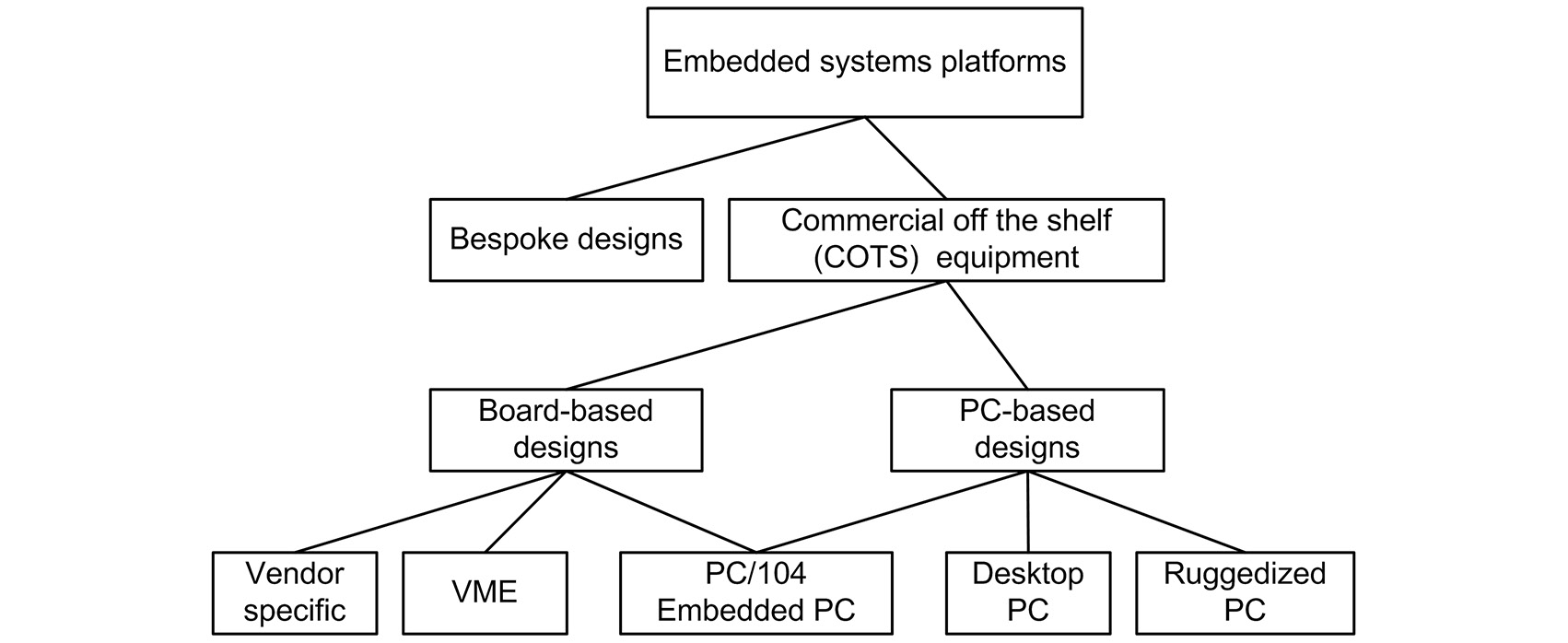

Embedded systems use a variety of hardware architectures (or platforms), as shown in Figure 1.11:

Figure 1.11: Embedded systems platforms

Many are based on special-to-purpose (that is, bespoke) designs, especially where there are significant constraints such as:

- Environmental aspects (temperature, shock, vibration, humidity, and so on)

- Size and weight (aerospace, auto, telecoms, and so on)

- Cost (auto, consumer goods, and so on)

The advantage of bespoke systems is that products are optimized for the applications. Unfortunately, design and development is a costly and time-consuming process. A much cheaper and faster approach is to use ready-made items, a commercial off-the-shelf (COTS) buying policy. Broadly speaking, there are two alternative approaches:

- Base the hardware design on the use of sets of circuit boards

- Implement the design using some form of PC

In reality, these aren't mutually exclusive.

(a) COTS Board-Based Designs

Many vendors offer single-board computer systems, based on particular processors and having a wide range of peripheral boards. In some cases, these may be compatible with standard PC buses such as PCI (peripheral component interconnect). For embedded applications, it is questionable whether boards from different suppliers can be mixed and matched with confidence. However, where boards are designed to comply with well-defined standards, this can be done (generally) without worry. One great advantage of this is that it doesn't tie a company to one specific supplier. Two standards are particularly important in the embedded world: VME and PC/104.

VME was originally introduced by a number of vendors in 1981 and was later standardized as IEEE standard 1014-1987. It is especially important to developers of military and similar systems, as robust, wide-temperature range boards are available. A second significant standard for embedded applications is PC/104, which is a cheaper alternative to VME. It is essentially a PC but with a different physical construction, being based on stackable circuit boards (it gets its name from its PC roots and the number of pins used to connect the boards together, that is, 104). At present, it is estimated that more than 150 vendors manufacture PC/104 compatible products.

(b) COTS PC-Based Designs

Clearly, PC/104 designs are PC-based. However, an alternative to the board solution is to use ready-made personal computers. These may be tailored to particular applications by using specialized plug-in boards (for example, stepper motor drives, data acquisition units, and so on). If the machine is to be located in, say, an office environment, then a standard desktop computer may be satisfactory. However, these are not designed to cope with conditions met on the factory floor, such as dust, moisture, and so on. In such situations, ruggedized, industrial-standard PCs can be used. Where reliability, durability, and serviceability are concerned, these are immensely superior to the desktop machines.

1.2.3 Characteristics of Embedded Systems

Embedded computers are defined to be those where the computer is used as a component within a system, not as a computing engine in its own right. This definition is the one that, at heart, separates embedded from non-embedded designs (note that, from now on, "embedded" implicitly means "real-time embedded").

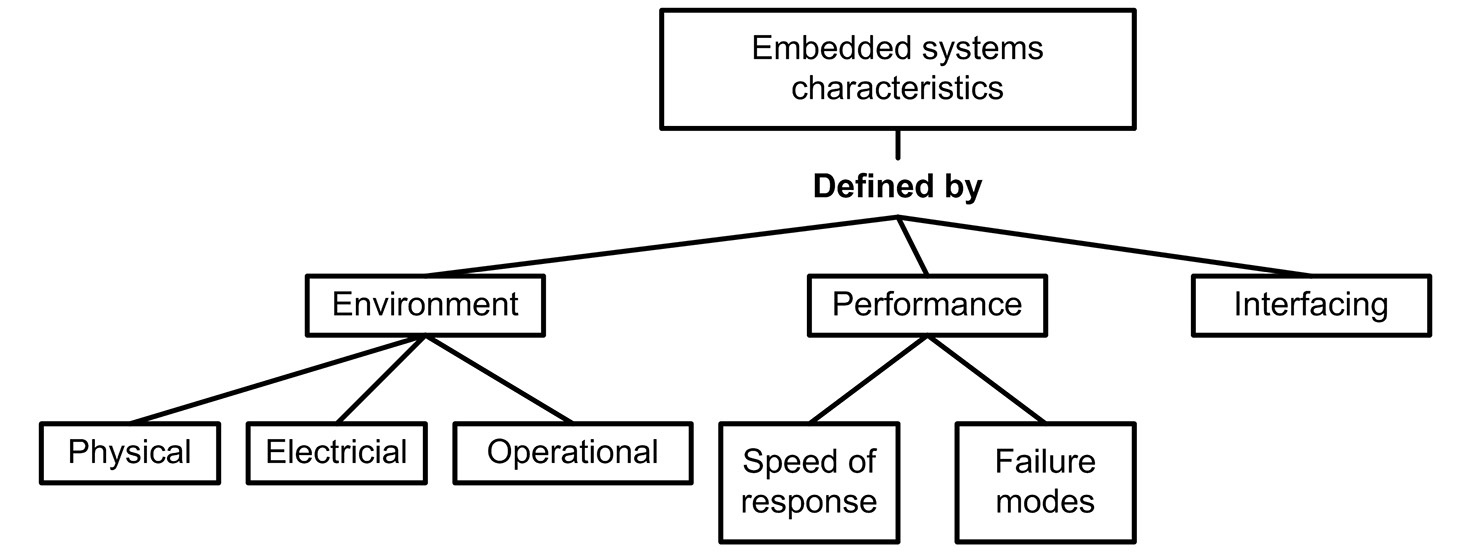

Embedded systems are characterized (Figure 1.12) by the following:

- The environments they work in

- The performance expected of them

- The interfaces to the outside world

Figure 1.12: Embedded systems characteristics

(a) Environmental Aspects

Environmental factors may, at first glance, seem to have little bearing on software. Primarily, they affect the following:

- Hardware design and construction

- Operator interaction with the system

But these, to a large extent, determine how the complete system works – and that defines the overall software requirements. Consider the following physical effects:

- Temperature

- Shock and vibration

- Humidity

- Size limits

- Weight limits

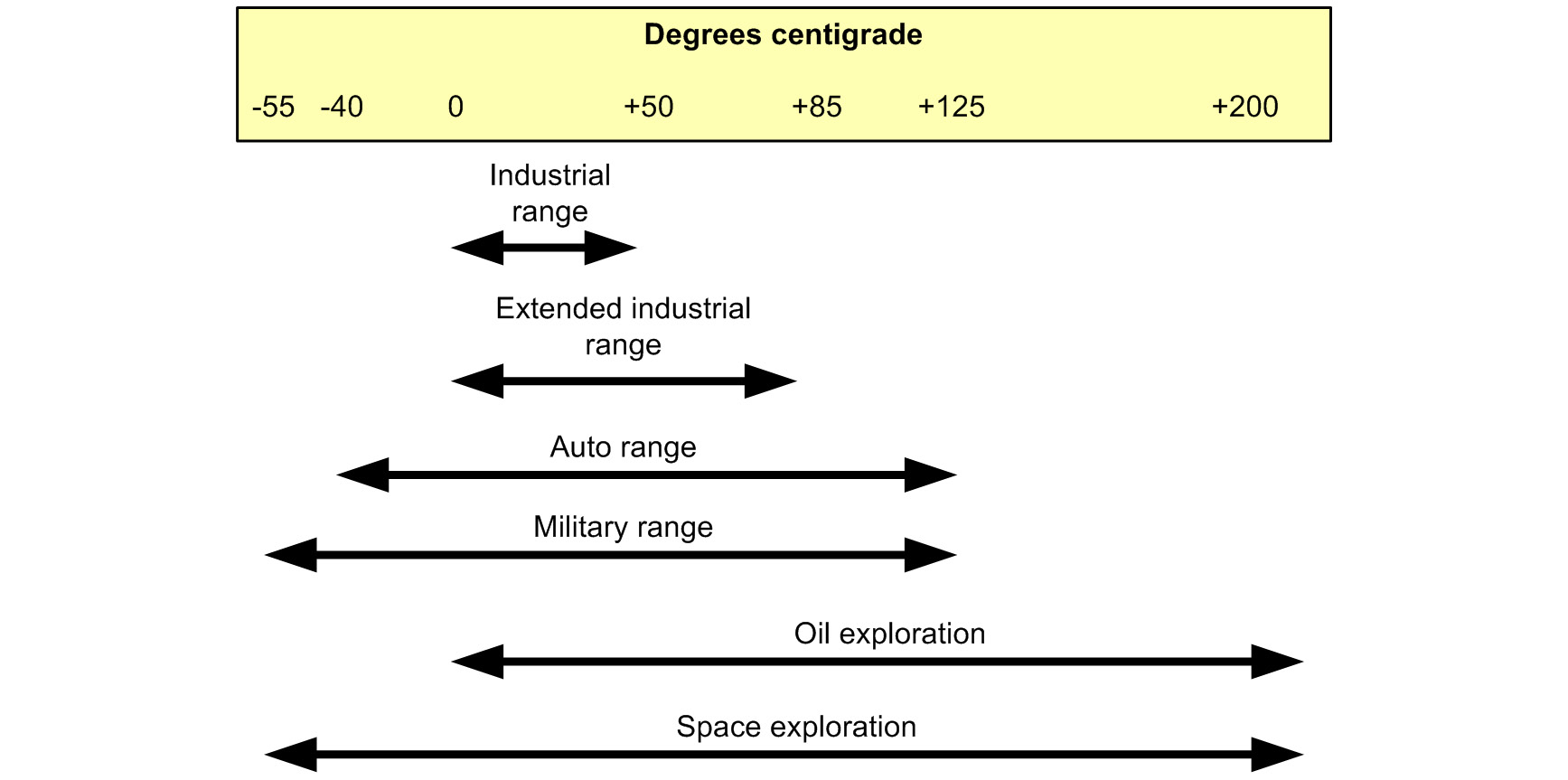

The temperature ranges commonly met in embedded applications are shown in Figure 1.13:

Figure 1.13: Typical temperature specifications for real-time applications

Many components used in commercial computers are designed to operate in the band 0-30°C. Electronic components aren't usually a problem; the weaknesses are items such as terminals, display units, and hard disks. As a result, the embedded designer must either do without them or else provide them with a protected environment, which can be a costly solution. When the requirements to withstand shock, vibration, and water penetration are added, the options narrow. For instance, the ideal way to reprogram a system might be to update it using a flash card. But if we can't use this technology because of environmental factors, then what?

Size and weight are two factors at the forefront in the minds of many embedded systems designers. For vehicle systems, such as automobiles, aircraft, armored fighting vehicles, and submarines, they may be the crucial factors. Not much to do with software, you may think. However, suppose a design requirement can only be met by using a single-chip micro (see Section 1.3.4, Single-Chip Microcomputers). Additionally, suppose that this device has only 256 bytes of random-access memory (RAM). So, how does that affect our choice of programming language?
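One way such a RAM limit shows up in code is that dynamic allocation is avoided and every buffer is sized at compile time. The following is a minimal sketch of that style, not a recommendation for any particular device: the buffer length, the 32-byte budget, and all names are hypothetical.

```c
#include <stdint.h>
#include <stdbool.h>

#define SAMPLE_BUF_LEN 16u  /* hypothetical size, chosen for illustration */

/* Fixed-size ring buffer: no heap, memory cost known at compile time. */
typedef struct {
    uint8_t data[SAMPLE_BUF_LEN];
    uint8_t head;   /* index of the next write */
    uint8_t count;  /* number of samples currently stored */
} sample_buf_t;

/* Compile-time check that the buffer stays within an assumed RAM budget. */
_Static_assert(sizeof(sample_buf_t) <= 32, "sample buffer exceeds RAM budget");

/* Statically allocated instance; zero-initialized at startup. */
sample_buf_t g_samples;

/* Store one sample; returns false (and stores nothing) if the buffer is full. */
bool sample_put(sample_buf_t *b, uint8_t v)
{
    if (b->count == SAMPLE_BUF_LEN) {
        return false;
    }
    b->data[b->head] = v;
    b->head = (uint8_t)((b->head + 1u) % SAMPLE_BUF_LEN);
    b->count++;
    return true;
}

/* Remove the oldest sample; returns false if the buffer is empty. */
bool sample_get(sample_buf_t *b, uint8_t *out)
{
    if (b->count == 0u) {
        return false;
    }
    uint8_t tail = (uint8_t)((b->head + SAMPLE_BUF_LEN - b->count) % SAMPLE_BUF_LEN);
    *out = b->data[tail];
    b->count--;
    return true;
}
```

The point of the sketch is that the worst-case memory use is visible before the program ever runs, which is hard to guarantee in languages or libraries that allocate storage behind the scenes.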

The electrical environments of industrial and military systems are not easy to work in. Yet most systems are expected to cope with extensive power supply variations in a predictable manner. To handle problems like this, we may have to resort to defensive programming techniques (Chapter 2, The Search for Dependable Software). Program malfunction can result from electrical interference; again, defensive programming is needed to handle this. A further complicating factor in some systems is that the available power may be limited. This won't cause difficulties in small systems. But if your software needs 10 gigabytes of dynamic RAM to run in, the power system designers are going to face problems.
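As one illustration of what defensive programming against interference can look like, a critical variable can be stored together with its bitwise complement and checked on every read. This is a commonly used technique offered here as a sketch; it is not necessarily the approach described in Chapter 2, and the type and function names are hypothetical.

```c
#include <stdint.h>
#include <stdbool.h>

/* A critical value stored alongside its bitwise complement. */
typedef struct {
    uint16_t value;
    uint16_t inverse;  /* always written as ~value */
} guarded_u16_t;

/* Write both copies together. */
void guarded_write(guarded_u16_t *g, uint16_t v)
{
    g->value = v;
    g->inverse = (uint16_t)~v;
}

/* Read the value only if the two copies still agree.
 * Returns false if corruption is detected; *out is then left untouched. */
bool guarded_read(const guarded_u16_t *g, uint16_t *out)
{
    if ((uint16_t)~g->inverse != g->value) {
        return false;
    }
    *out = g->value;
    return true;
}
```

A failed read detects the corruption but does not say what to do next; that decision (retry, fall back to a default, or enter a safe state) belongs to the system's exception-handling strategy.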

Let's now turn to the operational environmental aspects of embedded systems. Normally, we expect that when the power is turned on, the system starts up safely and correctly. It should do this every time and without any operator intervention. Conversely, when the power is turned off, the system should also behave safely. What we design for are "fit and forget" functions.

In many instances, embedded systems have long operational lives, perhaps between 10 and 30 years. Often, it is necessary to upgrade the equipment a number of times in its lifetime. So, the software itself will also need upgrading. This aspect of software, its maintenance, may well affect how we design it in the first place.

(b) Performance

Two particular factors are important here:

- How fast does a system respond?

- When it fails, what happens?

(i) The Speed of Response

All required responses are time-critical (although these may vary from microseconds to days). Therefore, the designer should predict the delivered performance of the embedded system. Unfortunately, even with the best will in the world, it may not be possible to give 100% guarantees. The situation is complicated because there are two distinct sides to this issue – both relating to the way tasks are processed by the computer.

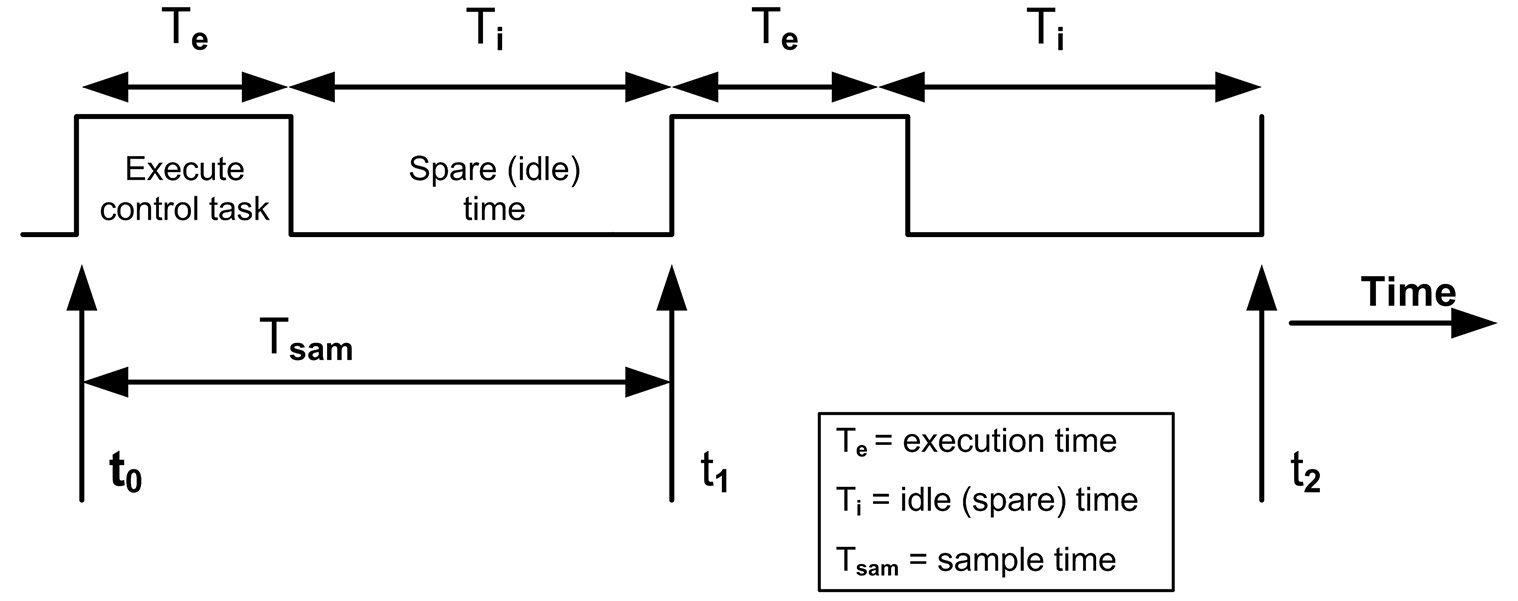

Case one concerns the demands to run jobs at regular, predefined intervals. A typical application is that of closed-loop digital controllers having fixed, preset sampling rates. This we'll define to be a "synchronous" or "periodic" task event (synchronous with a real-time clock – Figure 1.14):

Figure 1.14: Computer loading – a single synchronous (periodic) task
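A periodic task of this kind is often released from a tick counter driven by the real-time clock. The sketch below assumes a free-running 32-bit tick count supplied by the caller; the structure and function names are hypothetical. The next release time is advanced by exactly one period each time, so a late check does not accumulate drift.

```c
#include <stdint.h>
#include <stdbool.h>

/* Release bookkeeping for one periodic task (times in clock ticks). */
typedef struct {
    uint32_t period_ticks;  /* fixed sampling interval */
    uint32_t next_release;  /* tick at which the task is next due */
} periodic_task_t;

/* Returns true when the task is due at time now_ticks, and schedules
 * the following release one period later.
 * The signed difference keeps the comparison correct across counter
 * wraparound (assumes two's complement conversion, as on common targets). */
bool periodic_due(periodic_task_t *t, uint32_t now_ticks)
{
    if ((int32_t)(now_ticks - t->next_release) >= 0) {
        t->next_release += t->period_ticks;
        return true;
    }
    return false;
}
```

In use, the main loop or a timer interrupt would call `periodic_due()` with the current tick count and run the control calculation whenever it returns true.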

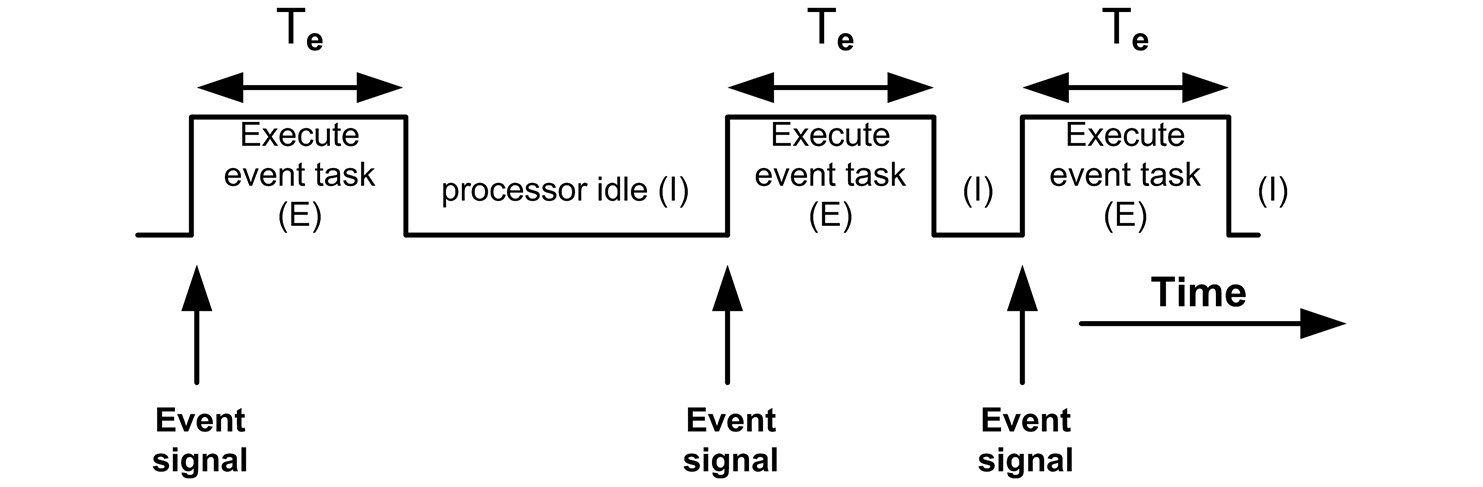

Case two occurs when the computer must respond to (generally) external events that occur at random ("asynchronous" or "aperiodic"), and each event must be serviced within a specific maximum time period. Where the computer handles only periodic events, response times can be determined reasonably well. This is also true where only one aperiodic event drives the system (a rare situation), such as in Figure 1.15:

Figure 1.15: Computer loading – a single asynchronous (aperiodic) task

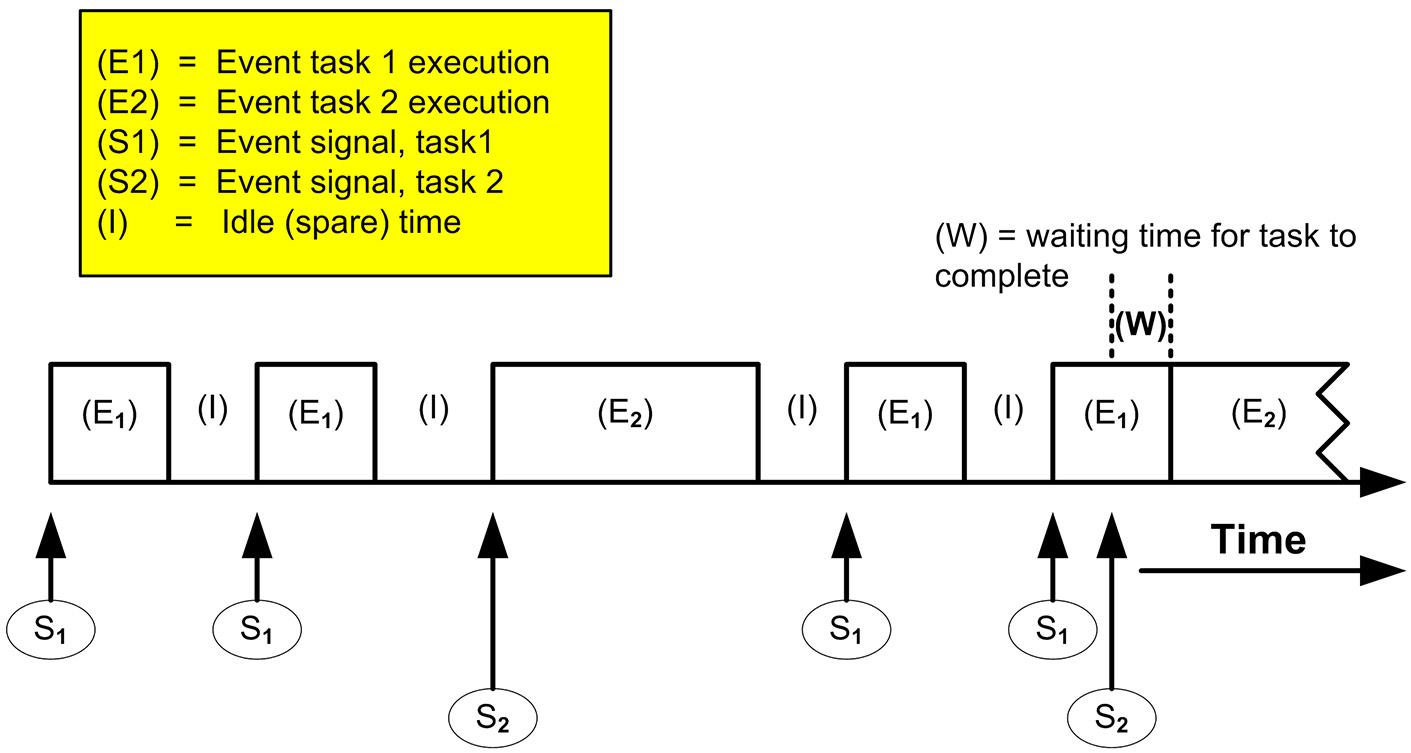

When the system has to cope with a number of asynchronous events, estimates are difficult to arrive at. However, by setting task priorities, good estimates of worst-case performance can be deduced (Figure 1.16). As shown here, task 1 has higher priority than task 2:

Figure 1.16: Computer loading – multiple asynchronous (aperiodic) tasks
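One standard way to turn task priorities into a worst-case estimate is fixed-priority response-time analysis. The sketch below goes beyond what the text states: it assumes each task has a known worst-case execution time and a known minimum interval between successive events, that higher-priority tasks preempt lower-priority ones, and that blocking and switching overheads are negligible. All names are hypothetical.

```c
#include <stdint.h>
#include <stddef.h>

/* Per-task timing assumptions (microseconds). */
typedef struct {
    uint32_t wcet_us;              /* worst-case execution time */
    uint32_t min_interarrival_us;  /* shortest gap between events, must be > 0 */
} task_t;

/* Worst-case response time of tasks[index], where the array is ordered
 * from highest priority (index 0) to lowest.
 * Iterates R = C_i + sum over higher-priority j of ceil(R / T_j) * C_j
 * until R stops changing. Returns 0 if the estimate exceeds limit_us. */
uint32_t worst_case_response_us(const task_t *tasks, size_t index,
                                uint32_t limit_us)
{
    uint32_t r = tasks[index].wcet_us;

    for (;;) {
        uint32_t next = tasks[index].wcet_us;

        for (size_t j = 0; j < index; j++) {
            /* Number of times task j can be released within r (rounded up). */
            uint32_t releases = (r + tasks[j].min_interarrival_us - 1u)
                                / tasks[j].min_interarrival_us;
            next += releases * tasks[j].wcet_us;
        }
        if (next > limit_us) {
            return 0u;  /* no bound within the allowed limit */
        }
        if (next == r) {
            return r;   /* converged */
        }
        r = next;
    }
}
```

For example, with task 1 needing 2 ms at most once every 10 ms and task 2 needing 5 ms at most once every 50 ms, the estimate for task 2 converges to 7 ms: its own 5 ms plus one preemption by task 1.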

Where we get into trouble is in situations that involve a mixture of periodic and aperiodic events, which is the usual case in real-time designs. Much thought and skill are needed to deal with the response requirements of periodic and aperiodic tasks (especially when using just one processor).

(ii) Failures and Their Effects

All systems go wrong at some time in their lives. It may be a transient condition or a hard failure; the cause may be hardware or software or a combination of both. It really doesn't matter; accept that it will happen. What we have to concern ourselves with are:

- The consequences of such faults and failures

- Why the problem(s) arose in the first place

The fact that a system can tolerate faults without sustaining damage doesn't mean that such performance is acceptable. Nuisance tripping out of a large piece of plant, for instance, is not going to win many friends. All real-time software must, therefore, be designed in a professional manner to handle all foreseen problems, that is, "exception" handling. An exception is defined here to be an error or fault that produces program malfunction (see Chapter 2, The Search for Dependable Software); it may originate within the program itself or be due to external factors. If, on the other hand, software packages are bought in, their quality must be assessed. Regularly, claims are made concerning the benefits of using Windows operating systems in real-time applications. Yet users of such systems often experience unpredictable behavior, including total system hang-up. Could this really be trusted for plant control and similar applications?

In other situations, we may not be able to cope with unrectified system faults. Three options are open to us. In the first, where no recovery action is possible, the system is put into a fail-safe condition. In the second, the system keeps on working, but with reduced service. This may be achieved, say, by accepting slower response times or by servicing only the "good" elements of the system. Such systems are said to offer "graceful" degradation in their response characteristics. Finally, for fault-tolerant operations, full and safe performance is maintained in the presence of faults.

(c) Interfacing

The range of devices that interface to embedded computers is extensive. It includes sensors, actuators, motors, switches, display panels, serial communication links, parallel communication methods, analog-to-digital converters, digital-to-analog converters, voltage-to-frequency converters, pulse-width modulated controllers, and more. Signals may be analog (DC or AC) or digital; voltage, current, or frequency encoding methods may be used. In anything but the smallest systems, hardware size is dominated by the interfacing electronics. This has a profound effect on system design strategies concerning processor replication and exception handling.

When the processor itself is the major item in a system, fitting a backup to cope with failures is feasible and sensible. Using this same approach in an input-output (I/O) dominated system makes less sense (and introduces much complexity).

Conventional exception handling schemes are usually concerned with detecting internal (program) problems. These include stack overflow, array bound violations, and arithmetic overflow. However, for most real-time systems, a new range of problems has to be considered. These relate to factors such as sensor failure, illegal operator actions, program malfunction induced by external interference, and more. Detecting such faults is one thing; deciding what to do subsequently can be an even more difficult problem. Exception-handling strategies need careful design to prevent faults causing system or environmental damage (or worse – injury or death).
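Detecting a failed sensor, as opposed to an internal program error, usually means checking each reading for plausibility. The following sketch shows one simple form of this: a range check plus a check on how far the value has moved since the previous sample. The limits, names, and status codes are hypothetical, and a real design would derive them from the physical behavior of the plant.

```c
#include <stdint.h>

typedef enum {
    SENSOR_OK,
    SENSOR_OUT_OF_RANGE,  /* reading outside physically possible values */
    SENSOR_RATE_FAULT     /* reading changed faster than is credible */
} sensor_status_t;

/* Plausibility limits for one sensor, in the sensor's own units. */
typedef struct {
    int32_t min_valid;
    int32_t max_valid;
    int32_t max_step;  /* largest credible change between samples */
} sensor_limits_t;

/* Check the current reading against the limits and the previous reading. */
sensor_status_t sensor_check(const sensor_limits_t *lim,
                             int32_t previous, int32_t current)
{
    if (current < lim->min_valid || current > lim->max_valid) {
        return SENSOR_OUT_OF_RANGE;
    }

    /* Use a wider type so the difference cannot overflow. */
    int64_t step = (int64_t)current - (int64_t)previous;
    if (step < 0) {
        step = -step;
    }
    if (step > lim->max_step) {
        return SENSOR_RATE_FAULT;
    }
    return SENSOR_OK;
}
```

The function only reports the fault. Deciding whether to hold the last good value, switch to a backup sensor, or move to a fail-safe state is the harder design question raised above.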