Examining text datasets with fastai

In the previous section, we looked at how a curated tabular dataset could be ingested. In this section, we are going to dig into a text dataset from the curated list.

Getting ready

Ensure you have followed the steps in Chapter 1, Getting Started with fastai, to get a fastai environment set up. Confirm that you can open the examining_text_datasets.ipynb notebook in the ch2 directory of your repository.

I am grateful for the opportunity to use the WIKITEXT_TINY dataset (https://blog.einstein.ai/the-wikitext-long-term-dependency-language-modeling-dataset/) featured in this section.

Dataset citation

Stephen Merity, Caiming Xiong, James Bradbury, Richard Socher. (2016). Pointer Sentinel Mixture Models (https://arxiv.org/pdf/1609.07843.pdf).

How to do it…

In this section, you will be running through the examining_text_datasets.ipynb notebook to examine the WIKITEXT_TINY dataset. As its name suggests, this is a small set of text that's been gleaned from good and featured Wikipedia articles.

Once you have the notebook open in your fastai environment, complete the following steps:

- Run the first two cells to import the necessary libraries and set up the notebook for fastai.

- Run the following cell to copy the dataset into your filesystem (if it's not already there) and to define the path for the dataset:

path = untar_data(URLs.WIKITEXT_TINY)

- Run the following cell to get the output of path.ls() so that you can examine the directory structure of the dataset:

Figure 2.11 – Output of path.ls()
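The path returned by untar_data() behaves like a standard pathlib.Path, with fastai adding the ls() convenience method on top. As a rough illustration of the same ideas in plain Python, the sketch below lists a directory with iterdir() and joins a filename with the / operator. The temporary directory and its two files are hypothetical stand-ins for the real dataset directory.

```python
import tempfile
from pathlib import Path

# Hypothetical stand-in for the dataset directory; the real one comes from untar_data().
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp)
    for name in ("train.csv", "test.csv"):
        (path / name).write_text("some text\n")

    # Plain-pathlib analogue of listing the directory contents.
    listing = sorted(p.name for p in path.iterdir())
    print(listing)

    # The / operator joins path segments, as in path/'train.csv'.
    train_file = path / "train.csv"
    print(train_file.name, train_file.exists())
```

This is why expressions such as path/'train.csv' work in the steps that follow: the / operator builds a new path rather than performing division.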

- There are two CSV files that make up this dataset. Let's ingest each of them into a pandas DataFrame, starting with train.csv:

df_train = pd.read_csv(path/'train.csv')

- When you use head() to check the DataFrame, you'll notice that something's wrong: the CSV file has no header row with column names, but by default read_csv assumes the first row is the header, so the first data row is misinterpreted as column names. As shown in the following screenshot, the first row of output is in bold, which indicates that it is being treated as a header even though it is a regular data row:

Figure 2.12 – First record in df_train

- To fix this problem, rerun the read_csv function, but this time with the header=None parameter, to specify that the CSV file doesn't have a header:

df_train = pd.read_csv(path/'train.csv',header=None)

- Check head() again to confirm that the problem has been resolved:

Figure 2.13 – Revising the first record in df_train
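To see the effect of header=None without the dataset, here is a minimal sketch that reads the same header-less text both ways. The two-row CSV string is invented for illustration and is not taken from WIKITEXT_TINY.

```python
import io
import pandas as pd

# Hypothetical two-row, header-less CSV standing in for train.csv.
raw = "first article text\nsecond article text\n"

# Default behaviour: the first row is consumed as column names.
df_default = pd.read_csv(io.StringIO(raw))

# header=None: every row is data and columns get integer labels.
df_fixed = pd.read_csv(io.StringIO(raw), header=None)

print(df_default.shape, list(df_default.columns))
print(df_fixed.shape, list(df_fixed.columns))
```

With the default settings, one row of data silently disappears into the column names. With header=None, both rows survive and the single column is labeled 0, which is why later steps refer to the column by position.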

- Ingest test.csv into a DataFrame using the header=None parameter:

df_test = pd.read_csv(path/'test.csv',header=None)

- We want to tokenize the dataset and transform it into a list of words. Since we want a common set of tokens for the entire dataset, we will begin by combining the test and train DataFrames:

df_combined = pd.concat([df_train,df_test])
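The following sketch shows what pd.concat does to row counts and row labels, using two tiny made-up DataFrames in place of the real train and test data. The ignore_index=True variant is an optional alternative shown for comparison; the notebook itself does not use it.

```python
import pandas as pd

# Hypothetical miniature train/test frames with a single unlabeled text column.
df_train = pd.DataFrame({0: ["a b c", "d e f", "g h i"]})
df_test = pd.DataFrame({0: ["j k l", "m n o"]})

df_combined = pd.concat([df_train, df_test])

# Row counts add up; the column count is unchanged.
assert df_combined.shape[0] == df_train.shape[0] + df_test.shape[0]

# concat keeps each frame's original row labels, so labels repeat.
print(list(df_combined.index))

# ignore_index=True renumbers the rows from 0 upward instead.
df_renumbered = pd.concat([df_train, df_test], ignore_index=True)
print(list(df_renumbered.index))
```

Repeated row labels are harmless for counting rows, but they are worth keeping in mind if you later select rows by label from the combined DataFrame.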

- Confirm the shape of the train, test, and combined DataFrames – the number of rows in the combined DataFrame should be the sum of the number of rows in the train and test DataFrames:

print("df_train: ",df_train.shape)
print("df_test: ",df_test.shape)
print("df_combined: ",df_combined.shape)

- Now, we're ready to tokenize the DataFrame. The tokenize_df() function takes the list of columns containing the text we want to tokenize as a parameter. Since the columns of the DataFrame are not labeled, we need to refer to the column we want to tokenize using its position rather than its name:

df_tok, count = tokenize_df(df_combined,[df_combined.columns[0]])

- Check the contents of the first few records of df_tok, which is the new DataFrame containing the tokenized contents of the combined DataFrame:

Figure 2.14 – The first few records of df_tok

- Check the count for a few sample words to ensure they are roughly what you expected. Pick a very common word, a moderately common word, and a rare word:

print("very common word (count['the']):", count['the'])
print("moderately common word (count['prepared']):", count['prepared'])
print("rare word (count['gaga']):", count['gaga'])
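To build some intuition for the count lookups in the last step, here is a simplified sketch that assumes count behaves like a collections.Counter keyed by token. The three-sentence corpus is made up, and the whitespace split is only a stand-in: fastai's tokenizer is considerably more sophisticated, so real counts will not match this approach.

```python
from collections import Counter

# Hypothetical three-row corpus; the real input is the combined DataFrame's text column.
texts = [
    "the cat sat on the mat",
    "the dog prepared the meal",
    "a cat prepared tea",
]

# Naive whitespace tokenization, a stand-in for fastai's tokenizer.
tokenized = [t.lower().split() for t in texts]

# One Counter across all rows gives corpus-wide token frequencies.
count = Counter(tok for row in tokenized for tok in row)

print(count["the"], count["prepared"], count["gaga"])
```

One convenient property of a Counter is that looking up a token that never occurred returns 0 rather than raising an error, so checking a rare word is always safe.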

Congratulations! You have successfully ingested, explored, and tokenized a curated text dataset.

How it works…

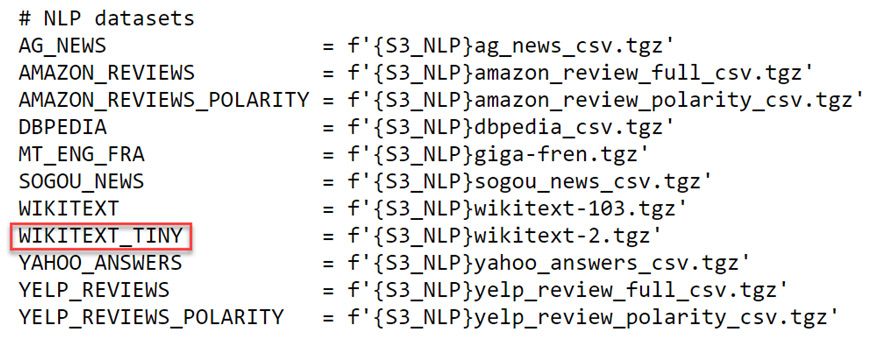

The dataset that you explored in this section, WIKITEXT_TINY, is one of the datasets you would have seen in the source for URLs in the Getting the complete set of oven-ready fastai datasets section. Here, you can see that WIKITEXT_TINY is in the NLP datasets section of the source for URLs:

Figure 2.15 – WIKITEXT_TINY in the NLP datasets list in the source for URLs