About the Book

You already know you want to learn data science, and a smarter way to learn data science is to learn by doing. The Data Science Workshop focuses on building up your practical skills so that you can understand how to develop simple machine learning models in Python or even build an advanced model for detecting potential bank fraud with effective modern data science. You'll learn from real examples that lead to real results.

Throughout The Data Science Workshop, you'll take an engaging step-by-step approach to understanding data science. You won't have to sit through any unnecessary theory. If you're short on time you can jump into a single exercise each day or spend an entire weekend training a model using scikit-learn. It's your choice. Learning on your terms, you'll build up and reinforce key skills in a way that feels rewarding.

Every physical print copy of The Data Science Workshop unlocks access to the interactive edition. With videos detailing all exercises and activities, you'll always have a guided solution. You can also benchmark yourself against assessments, track progress, and receive content updates. You'll even earn a secure credential that you can share and verify online upon completion. It's a premium learning experience that's included with your printed copy. To redeem, follow the instructions located at the start of your data science book.

Fast-paced and direct, The Data Science Workshop is the ideal companion for data science beginners. You'll learn about machine learning algorithms the way a data scientist does, by applying them as you go. This process means that your new skills stick, embedded as best practice and forming a solid foundation for the years ahead.

About the Chapters

Chapter 1, Introduction to Data Science in Python, will introduce you to the field of data science and walk you through an overview of Python's core concepts and their application in the world of data science.

Chapter 2, Regression, will acquaint you with linear regression analysis and its application to practical problem solving in data science.

Chapter 3, Binary Classification, will teach you a supervised learning technique called classification to generate business outcomes.

Chapter 4, Multiclass Classification with RandomForest, will show you how to train a multiclass classifier using the Random Forest algorithm.

Chapter 5, Performing Your First Cluster Analysis, will introduce you to unsupervised learning tasks, where algorithms have to automatically learn patterns from data by themselves as no target variables are defined beforehand.

Chapter 6, How to Assess Performance, will teach you to evaluate a model and assess its performance before you decide to put it into production.

Chapter 7, The Generalization of Machine Learning Models, will teach you how to make best use of your data to train better models, by either splitting the data or making use of cross-validation.

Chapter 8, Hyperparameter Tuning, will guide you to find further predictive performance improvements via the systematic evaluation of estimators with different hyperparameters.

Chapter 9, Interpreting a Machine Learning Model, will show you how to interpret a machine learning model's results and get deeper insights into the patterns it found.

Chapter 10, Analyzing a Dataset, will introduce you to the art of performing exploratory data analysis and visualizing the data in order to identify quality issues, potential data transformations, and interesting patterns.

Chapter 11, Data Preparation, will present the main techniques you can use to handle data issues in order to ensure your data is of a high enough quality for successful modeling.

Chapter 12, Feature Engineering, will teach you some of the key techniques for creating new variables on an existing dataset.

Chapter 13, Imbalanced Datasets, will equip you to identify use cases where datasets are likely to be imbalanced, and formulate strategies for dealing with imbalanced datasets.

Chapter 14, Dimensionality Reduction, will show how to analyze datasets with high dimensions and deal with the challenges posed by these datasets.

Chapter 15, Ensemble Learning, will teach you to apply different ensemble learning techniques to your dataset.

Chapter 16, Machine Learning Pipelines, will show how to perform preprocessing, dimensionality reduction, and modeling using the pipeline utility.

Chapter 17, Automated Feature Engineering, will show you how to use automated feature engineering techniques.

Note

You can find the bonus chapter on Model as a Service with Flask and the solution set to the activities at https://packt.live/2sSKX3D.

Conventions

Code words in text, database table names, folder names, filenames, file extensions, path names, dummy URLs, user input, and Twitter handles are shown as follows:

"sklearn has a function called train_test_split, which provides the functionality for splitting data."

Words that you see on the screen, for example, in menus or dialog boxes, also appear in the same format.

A block of code is set as follows:

# import libraries
import pandas as pd
from sklearn.model_selection import train_test_split

New terms and important words are shown like this:

"A dictionary contains multiple elements, like a list, but each element is organized as a key-value pair."

Before You Begin

Each great journey begins with a humble step. Our upcoming adventure with Data Science is no exception. Before we can do awesome things with Data Science, we need to be prepared with a productive environment. In this small note, we shall see how to do that.

How to Set Up Google Colab

There are many integrated development environments (IDEs) for Python. The most popular one for running Data Science projects is Jupyter Notebook from Anaconda, but this is not the one we are recommending for this book. As you are starting your journey into Data Science, rather than asking you to set up a Python environment from scratch, we think it is better for you to use a plug-and-play solution so that you can fully focus on learning the concepts we are presenting in this book. We want to remove most of the blockers so that you can make this first step into Data Science as seamlessly and as quickly as possible.

Luckily, such a tool does exist, and it is called Google Colab. It is a free tool provided by Google that runs in the cloud, so you don't need to buy a new laptop or computer or upgrade its specs. The other benefit of using Colab is that most of the Python packages we are using in this book are already installed, so you can use them straight away. The only thing you need is a Google account. If you don't have one, you can create one here: https://packt.live/37mea5X.

Then, you will need to subscribe to the Colab service:

- First, log into Google Drive: https://packt.live/2TM1v8w

- Then, go to the following URL: https://packt.live/2NKaAuP

You should see the following screen:

Figure 0.1: Google Colab Introduction page

- Then, you can click on

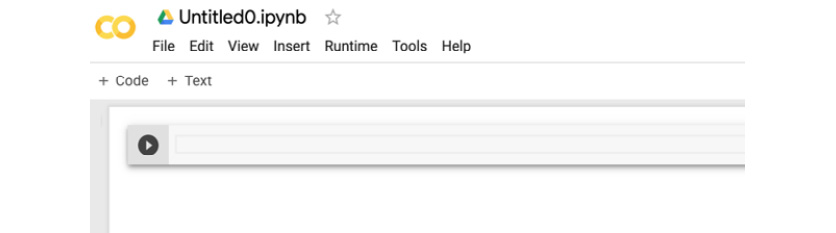

NEW PYTHON 3 NOTEBOOKand you should see a new Colab notebook

Figure 0.2: New Colab notebook

You just added Google Colab to your Google account, and now you are ready to write and run your own Python code.

How to Use Google Colab

Now that you have added Google Colab to your account, let's see how to use it. Google Colab is very similar to Jupyter Notebook. It is actually based on Jupyter, but runs on Google's servers with additional integrations with Google services such as Google Drive.

To open a new Colab notebook, you need to log in to your Google Drive account and then click on the + New icon:

Figure 0.3: Option to open new notebook

On the menu displayed, select More and then Google Colaboratory:

Figure 0.4: Option to open Colab notebook from Google Drive

A new Colab notebook will be created.

Figure 0.5: New Colab notebook

A Colab notebook is an interactive IDE where you can run Python code or add text using cells. A cell is a container where you will add your lines of code or any text information related to your project. In each cell, you can put as many lines of code or text as you want. A cell can display the output of your code after running it, so it is a very powerful way of testing and checking the results of your work. It is good practice not to overload each cell with too much code. Try to split your code across multiple cells so that you can run them independently and check, step by step, whether your code is working.
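As a rough illustration of that practice, the sketch below splits a small task into three cells. The `# Cell n` comments only mark where each cell would start in a notebook, and the variable names and sample values are made up for this example.

```python
# Cell 1: define the data (hypothetical sample values)
daily_sales = [120, 95, 143, 110]

# Cell 2: compute results from the data defined in the previous cell
total_sales = sum(daily_sales)
average_sales = total_sales / len(daily_sales)

# Cell 3: display the results; in a notebook the output appears below this cell
print("Total:", total_sales)
print("Average:", average_sales)
```

Because each step lives in its own cell, you could rerun only the last cell to check the output without redefining the data.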

Let us now see how we can write some Python code in a cell and run it. A cell is composed of 4 main parts:

- The text box where you will write your code

- The Run button for running your code

- The options menu that will provide additional functionalities

- The output display

Figure 0.6: Parts of the Colab notebook cell

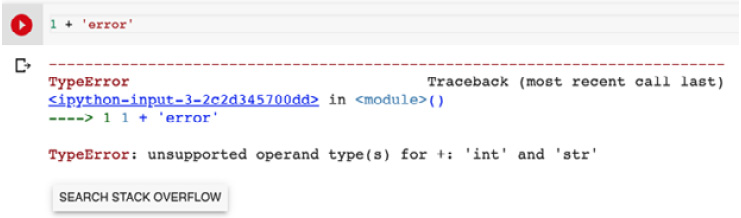

In the preceding example, we just wrote a simple line of code that adds 2 to 3. To run the code, we need to either click on the Run button or use the shortcut Ctrl + Enter. The result will then be displayed below the cell. If your code breaks (when there is an error), the error message will be displayed below the cell:

Figure 0.7: Error message on Google Colab

As you can see, we tried to add an integer to a string, which is not possible because their data types are not compatible, and this is exactly what the error message is telling us.

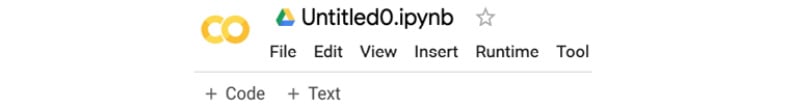
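The two situations described above can be reproduced with the short sketch below. The variable names are hypothetical and the exact expression shown in the figure may differ. The `try`/`except` block is added here only so that the cell finishes and prints the error instead of stopping.

```python
# Adding two integers works and gives a number
result = 2 + 3
print(result)

# Adding an integer to a string raises a TypeError,
# because Python does not convert between the two types automatically
try:
    broken = 2 + "3"
except TypeError as error:
    error_message = str(error)
    print("TypeError:", error_message)

# Converting the string to an integer first makes the types compatible
fixed = 2 + int("3")
print(fixed)
```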

To add a new cell, you just need to click on either + Code or + Text on the options bar at the top:

Figure 0.8: New cell button

If you add a new Text cell, you have access to specific options for editing your text, such as bold, italic, and hypertext links:

Figure 0.9: Different options on cell

This type of cell is actually Markdown compatible, so you can easily create titles, subtitles, bullet points, and so on. Here is a link for learning more about the Markdown options: https://packt.live/2NVgVDT.

With the cell options menu, you can delete a cell or move it up or down in the notebook:

Figure 0.10: Cell options

If you need to install a specific Python package that is not available in Google Colab, you just need to run a cell with the following syntax:

!pip install <package_name>

Note

The '!' prefix tells the notebook to run the rest of the line as a shell command rather than as Python code.

Figure 0.11: Using "!" command
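Before installing anything, it can be worth checking whether a package is already present. The sketch below is one possible way to do that with Python's standard library. `is_installed` is a helper name invented for this example, and `json` is used only because it ships with Python.

```python
import importlib.util


def is_installed(package_name):
    """Return True if Python can locate a top-level module with this name."""
    return importlib.util.find_spec(package_name) is not None


# 'json' is part of the standard library, so it should always be found
print(is_installed("json"))

# A made-up name that is not expected to exist in any environment
print(is_installed("package_that_does_not_exist_12345"))
```

If the check returns False, running the `!pip install` line in a cell installs the package. Keep in mind that the name used in an `import` statement can differ from the name used by pip; for example, the scikit-learn package is imported as `sklearn`.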

You have just learned the main functionalities provided by Google Colab for running Python code. There are many more functionalities available, but you now know enough to go through the lessons and contents of this book.

Installing the Code Bundle

Download the code files from GitHub at https://packt.live/2ucwsId. Refer to these code files for the complete code bundle.

If you have any issues or questions regarding installation, please email us at workshops@packt.com.

The high-quality color images used in this book can be found at https://packt.live/30O91Bd.