Deploying one-click solutions and models with Amazon SageMaker JumpStart

If you're new to machine learning, you may find it difficult to get started with real-life projects. You've run all the toy examples, and you've read several blog posts on state-of-the-art models for computer vision or natural language processing. Now what? How can you start using these complex models on your own data to solve your own business problems?

Even if you're an experienced practitioner, building end-to-end machine learning solutions is not an easy task. Training and deploying models is just part of the equation: what about data preparation, automation, and so on?

Amazon SageMaker JumpStart was specifically built to help everyone get started more quickly with their machine learning projects. In literally one click, you can deploy the following:

- 16 end-to-end solutions for real-life business problems such as fraud detection in financial transactions, explaining credit decisions, predictive maintenance, and more

- Over 180 TensorFlow and PyTorch models pre-trained on a variety of computer vision and natural language processing tasks

- Additional learning resources, such as sample notebooks, blog posts, and video tutorials

Time to deploy a solution.

Deploying a solution

Let's begin:

- Starting from the icon bar on the left, we open JumpStart. The following screenshot shows the opening screen:

Figure 1.13 – Viewing solutions in JumpStart

- Select Fraud Detection in Financial Transactions. As can be seen in the following screenshot, this is a fascinating example that uses graph data and graph neural networks to predict fraudulent activities based on interactions:

Figure 1.14 – Viewing solution details

- Once we've read the solution details, all we have to do is click on the Launch button. This will run an AWS CloudFormation template in charge of building all the AWS resources required by the solution.

CloudFormation

If you're curious about CloudFormation, you may find this introduction useful: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/Welcome.html.

- A few minutes later, the solution is ready, as can be seen in the following screenshot. We click on Open Notebook to open the first notebook.

Figure 1.15 – Opening a solution

- As you can see in the following screenshot, we can browse solution files in the left-hand pane: notebooks, training code, and so on:

Figure 1.16 – Viewing solution files

- From then on, you can start running and tweaking the notebook. If you're not familiar with the SageMaker SDK yet, don't worry about the details.

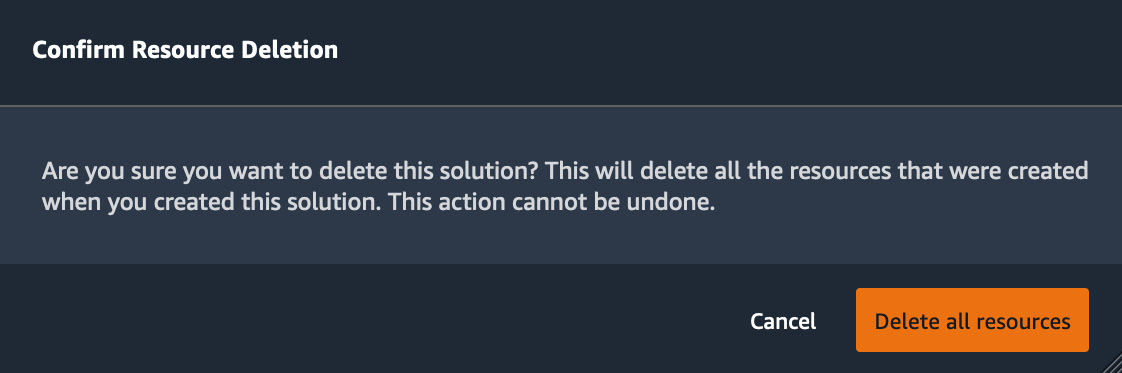

- Once you're done, please go back to the solution page and click on Delete all resources to clean up and avoid unnecessary costs, as shown in the following screenshot:

Figure 1.17 – Deleting a solution
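The Launch button relies on a CloudFormation template, which is a JSON or YAML document declaring the AWS resources to create. The template behind this solution isn't shown here, so the following is a hypothetical, minimal template written as a Python dict and serialized to JSON. It declares a single S3 bucket (the logical ID `SolutionBucket` is made up for illustration) and outputs the generated bucket name. Declaring everything in one template lets CloudFormation manage the resources together as a single stack.

```python
import json

# Hypothetical, minimal CloudFormation template expressed as a Python dict.
# Real JumpStart solution templates are much larger; this only shows the shape.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Hypothetical minimal template: one S3 bucket for solution artifacts",
    "Resources": {
        # Logical ID -> resource definition; CloudFormation creates the bucket
        "SolutionBucket": {"Type": "AWS::S3::Bucket"},
    },
    "Outputs": {
        # For an S3 bucket, Ref returns the bucket name once the stack exists
        "BucketName": {"Value": {"Ref": "SolutionBucket"}},
    },
}

# Serialize to the JSON text that CloudFormation would receive as the template body
template_body = json.dumps(template, indent=2)
print(template_body)
```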

As you can see, JumpStart solutions are a great way to explore how to solve business problems with machine learning and to start thinking about how you could do the same in your own business environment.

Now, let's see how we can deploy pre-trained models.

Deploying a model

JumpStart includes over 180 TensorFlow and PyTorch models pre-trained on a variety of computer vision and natural language processing tasks. Let's take a look at computer vision models:

- Starting from the JumpStart main screen, we open Vision models, as can be seen in the following screenshot:

Figure 1.18 – Viewing computer vision models

- Let's say that we're interested in trying out object detection models based on the Single Shot Detector (SSD) architecture. We click on the SSD model from the PyTorch Hub (the fourth one from the left).

- This opens the model details page, telling us where the model comes from, what dataset it has been trained on, and which labels it can predict. We can also select which instance type to deploy the model on. Sticking to the default, we click on Deploy to deploy the model on a real-time endpoint, as shown in the following screenshot:

Figure 1.19 – Deploying a JumpStart model

- A few minutes later, the model has been deployed. As shown in the following screenshot, the endpoint status is displayed in the left-hand panel, and we simply click on Open Notebook to test it.

Figure 1.20 – Opening a JumpStart notebook

- Clicking through the notebook cells, we download a test image and we predict which objects it contains. Bounding boxes, classes, and probabilities are visible in the following screenshot:

Figure 1.21 – Detecting objects in a picture

- When you're done, please make sure to delete the endpoint to avoid unnecessary charges: simply click on Delete in the endpoint details screen visible in Figure 1.20.
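Cleanup can also be done from code. The following is a minimal sketch, assuming a boto3 SageMaker client such as `boto3.client('sagemaker')`; the helper name `delete_endpoint_and_resources` is hypothetical. It looks up the endpoint configuration and the models it references, then deletes the endpoint (the part that keeps instances running), followed by its configuration and models so that nothing is left behind.

```python
def delete_endpoint_and_resources(sm_client, endpoint_name):
    """Delete an endpoint, its endpoint configuration, and the models it serves.

    sm_client is assumed to be a boto3 SageMaker client (boto3.client('sagemaker')).
    Returns the names of the deleted configuration and models.
    """
    # Look up the configuration and model names before the endpoint disappears
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [variant["ModelName"] for variant in config["ProductionVariants"]]

    # Delete the endpoint first, then the configuration and models it used
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sm_client.delete_model(ModelName=model_name)
    return config_name, model_names
```

Passing the client in as a parameter keeps the helper easy to test with a stand-in object, without touching AWS.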

Not only does JumpStart make it extremely easy to experiment with state-of-the-art models, but it also provides you with code that you can readily use in your own projects: loading an image for prediction, predicting with an endpoint, plotting results, and so on.
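As an example of reusing that code, the prediction step boils down to reading an image as bytes and calling `invoke_endpoint`. The following sketch wraps it in a small function; the name `predict_image` and the optional `accept` parameter are illustrative assumptions, and whether a particular JumpStart endpoint expects a specific `Accept` value is something to check in its own notebook. The content type `application/x-image` is the one used later in this chapter.

```python
import json


def predict_image(runtime_client, endpoint_name, image_path, accept=None):
    """Send a local image to a SageMaker endpoint and return the decoded JSON reply.

    runtime_client is assumed to be boto3.client('runtime.sagemaker').
    """
    with open(image_path, "rb") as f:
        payload = f.read()
    request = {
        "EndpointName": endpoint_name,
        "ContentType": "application/x-image",  # raw image bytes
        "Body": payload,
    }
    if accept is not None:
        # Only request a specific response format when the caller asks for one
        request["Accept"] = accept
    response = runtime_client.invoke_endpoint(**request)
    # response["Body"] is a stream: read it, then decode the JSON document
    return json.loads(response["Body"].read())
```

A call would look like `predict_image(boto3.client('runtime.sagemaker'), endpoint_name, 'image.jpg')`, where the endpoint name and image file are whatever you deployed and downloaded.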

As useful as pre-trained models are, we often need to fine-tune them on our own datasets. Let's see how we can do that with JumpStart.

Fine-tuning a model

Let's use an image classification model this time:

Note

A word of warning about fine-tuning text models: complex models such as BERT can take a very long time to fine-tune, sometimes several hours per epoch on a single GPU. In addition to the long waiting time, the cost won't be negligible, so I'd recommend avoiding these examples unless you have a real-life business project to work on.
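To put "not negligible" into numbers, a training job costs roughly its instance hours multiplied by the hourly instance price. The figures below (3 hours per epoch, 4 epochs, $4.00 per hour) are made up for illustration; look up the actual price of your instance type in your Region. The estimate ignores storage and other charges.

```python
def estimate_training_cost(hours_per_epoch, epochs, price_per_hour):
    """Rough compute cost of a training job: total instance hours x hourly price."""
    return hours_per_epoch * epochs * price_per_hour


# Hypothetical figures: 3 hours per epoch, 4 epochs, $4.00 per instance-hour
cost = estimate_training_cost(hours_per_epoch=3, epochs=4, price_per_hour=4.00)
print(f"Estimated cost: ${cost:.2f}")  # prints "Estimated cost: $48.00"
```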

- We select the Resnet 18 model (the second from the left in Figure 1.18).

- On the model details page, we see that this model can be fine-tuned either on a default dataset available for testing (a TensorFlow dataset with five flower classes) or on our own dataset stored in S3. Scrolling down, we learn about the format that our dataset should have.

- As shown in the following screenshot, we stick to the default dataset. We also leave the deployment configuration and training parameters unchanged. Then, we click on Train to launch the fine-tuning job.

Figure 1.22 – Fine-tuning a model

- After just a few minutes, fine-tuning is complete (which is why I picked this example!). We can see the output path in S3 where the fine-tuned model has been stored. Let's write down that path; we're going to need it in a minute.

Figure 1.23 – Viewing fine-tuning results

- Then, we click on Deploy just like in the previous example. Once the model has been deployed, we open the sample notebook showing us how to predict with the initial pre-trained model.

- This notebook uses images from the original dataset that the model was pre-trained on. No problem, let's adapt it! Even if we're not yet familiar with the SageMaker SDK, the notebook is simple enough that we can understand what's going on, and add a few cells to predict a flower image with our fine-tuned model.

- First, we add a cell to copy the fine-tuned model artifact from S3, and we extract the list of classes and class indexes that JumpStart added:

```
%%sh
aws s3 cp s3://sagemaker-REGION_NAME-123456789012/smjs-d-pt-ic-resnet18-20210511-142657/output/model.tar.gz .
tar xfz model.tar.gz
cat class_label_to_prediction_index.json
```

```
{"daisy": 0, "dandelion": 1, "roses": 2, "sunflowers": 3, "tulips": 4}
```

- As expected, the fine-tuned model can predict five classes. Let's add a cell to download a sunflower image from Wikipedia:

```
%%sh
wget https://upload.wikimedia.org/wikipedia/commons/a/a9/A_sunflower.jpg
```

- Now, we load the image and invoke the endpoint:

```python
import boto3

endpoint_name = 'jumpstart-ftd-pt-ic-resnet18'
# SageMaker runtime client, used to send prediction requests to endpoints
client = boto3.client('runtime.sagemaker')

# Read the image as raw bytes
with open('A_sunflower.jpg', 'rb') as file:
    image = file.read()

# Send the image to the endpoint for prediction
response = client.invoke_endpoint(
    EndpointName=endpoint_name,
    ContentType='application/x-image',
    Body=image)
```

- Finally, we print out the predictions. The highest probability is class #3 (sunflowers) at 60.68%, confirming that our image contains a sunflower!

```python
import json

# The response body is a JSON list with one probability per class
model_predictions = json.loads(response['Body'].read())
print(model_predictions)
```

```
[0.30362239480018616, 0.06462913751602173, 0.007234351709485054, 0.6067869663238525, 0.017727158963680267]
```

- When you're done testing, please make sure to delete the endpoint to avoid unnecessary charges.
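Reading a raw list of probabilities gets error-prone as the number of classes grows. The following sketch inverts the mapping from `class_label_to_prediction_index.json` and ranks the predictions by probability. The JSON and probability values are copied from the outputs above; in a notebook you would load the mapping from the extracted file with `json.load` instead. The helper name `top_k_labels` is hypothetical.

```python
import json

# Mapping copied from class_label_to_prediction_index.json (extracted from model.tar.gz)
class_to_index = json.loads(
    '{"daisy": 0, "dandelion": 1, "roses": 2, "sunflowers": 3, "tulips": 4}'
)
# Probabilities copied from the endpoint response printed above
model_predictions = [0.30362239480018616, 0.06462913751602173, 0.007234351709485054,
                     0.6067869663238525, 0.017727158963680267]


def top_k_labels(probabilities, class_to_index, k=3):
    """Return the k most likely (label, probability) pairs, highest first."""
    index_to_class = {index: label for label, index in class_to_index.items()}
    ranked = sorted(range(len(probabilities)), key=lambda i: probabilities[i], reverse=True)
    return [(index_to_class[i], probabilities[i]) for i in ranked[:k]]


for label, p in top_k_labels(model_predictions, class_to_index):
    print(f"{label}: {p:.2%}")
```

With these values, it prints sunflowers first (60.68%), followed by daisy (30.36%) and dandelion (6.46%).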

This example illustrates how easy it is to fine-tune pre-trained models on your own datasets with SageMaker JumpStart and to use them to predict your own data. This is a great way to experiment with different models and find out which one could work best on the particular problem you're trying to solve.
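Before fine-tuning on your own images, it helps to check the dataset locally. The following sketch assumes the one-sub-folder-per-class layout commonly used for image classification (for example, `flowers/daisy/img001.jpg`); verify this against the format described on the model details page before relying on it. The helper name `build_class_index` is hypothetical. It checks that every class folder contains at least one `.jpg` image and returns a class-to-index mapping in alphabetical order, which is consistent with the index order seen in the flower example above.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg"}


def build_class_index(dataset_dir):
    """Check a one-folder-per-class image dataset and return {class_name: index}.

    Assumed layout (verify against the model details page):
        dataset_dir/daisy/img001.jpg
        dataset_dir/roses/img042.jpg
    """
    root = Path(dataset_dir)
    class_dirs = sorted(p for p in root.iterdir() if p.is_dir())
    if not class_dirs:
        raise ValueError(f"No class sub-folders found in {root}")
    for class_dir in class_dirs:
        images = [f for f in class_dir.iterdir()
                  if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES]
        if not images:
            raise ValueError(f"Class folder {class_dir.name} contains no .jpg images")
    # Alphabetical order of class folder names
    return {p.name: i for i, p in enumerate(class_dirs)}
```

Once the check passes, the folder could be uploaded to S3 (for example with `aws s3 cp --recursive`) and that S3 location selected as the training dataset instead of the default one.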

This is the end of the first chapter, and it was already quite action-packed, wasn't it? It's now time to review what we've learned.