Language has played a critical role in the evolution of our species and was arguably the key competitive advantage for our hunter-gatherer ancestors over other species. Naturally evolved languages, also called natural languages, allowed our ancestors to communicate more efficiently with their flock. The development of language scripts further accelerated their growth, as important information could now be documented and reproduced, obviating the need for memorizing. Needless to say, we humans have a deep affinity toward our languages, and we cherish the ability to communicate with fellow humans.

A new class of languages called programming languages surfaced around the mid-20th century, with the objective of communicating with machines to get the desired output. With the explosive growth of computers, gaining familiarity with programming languages assumed great significance in order to harness the computational power of these machines. You will come across various profiles on LinkedIn in which people refer to themselves as polyglots, implying that they are proficient in multiple programming languages. While there are similarities between natural languages and programming languages, in that they are used to communicate and have rules and syntax, there are some major differences. The most important difference is that natural languages are ambiguous, and therefore cannot be comprehended by machines. For example, refer to the following statement: Pick an integer and divide it by two; if the remainder is zero, then it is an even number.

For those who are presumably proficient in Math and English, the preceding statement may make complete sense. However, for someone who is new to deciphering human languages, it may refer to either the integer, two, or the remainder. Likewise, natural languages encompass many other elements, such as sarcasm, double negation, rhetorical expressions, and so on, which increases complexity and requires a monumental effort to code every inherent rule of the language for the machine to understand. These factors make natural languages unfit to be used as programming languages.

How, then, do we communicate with computers humanly?

Understanding NLP

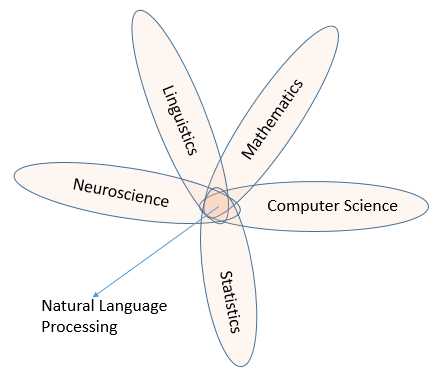

Scientists have been working on this precise question since the turn of the last century and, as of today, we have attained reasonable success in this area. The research on how to make computers understand and manipulate natural languages draws from several fields, including computer science, math, linguistics, and neuroscience, and the resulting interdisciplinary area of research is called NLP. Take a look at the following diagram, which illustrates this:

NLP is categorized as a subfield of the broader Artificial Intelligence (AI) discipline, which delves into simulating human intelligence in machines. English scientist Alan Turing, who is considered one of the pioneers of AI, developed a set of criteria (called the Turing test), which tested whether a machine could display intelligent behavior indistinguishable from that of a human. The machine's ability to understand and process natural languages is a prominent criterion of the Turing test.

Most early research in the field of NLP relied on fixed complex rules and mapping-based systems. These systems, although moderately successful, were difficult to scale. Another issue with the rule-based approach is that it does not mimic human learning of language very well. For example, if you are from Asia and are traveling to the USA, you will come across people who greet you by saying, How's it going? or How are you doing? A fixed rule-based language processing system would signal that the person cares about you and is genuinely interested to know about your wellbeing. However, before you prepare to give your long-winded response of how you are actually doing, you will see that the person has already walked by. When you see this pattern reoccurring and observe how other people respond to the same question, your brain overwrites the pre-existing rule and replaces it with a new contextual understanding, which was derived by some form of data analysis.

This data-driven approach is the cornerstone of most modern-day NLP research. With the advent of ML algorithms and the data deluge propelled by the internet and significantly increased computational capacity, NLP solutions have become way more scalable and reliable. The most exciting thing about this NLP revolution is that most of this is driven by open source technology, meaning these solutions are freely available to anyone who wants to consume or contribute to these projects.

We have covered many of these algorithms and tools in this book, including the following:

- ML algorithms (Naive Bayes; Support Vector Machine (SVM))

- DL algorithms (Convolutional Neural Network (CNN); Recurrent Neural Network (RNN))

- Similarity/dissimilarity measures

- Long Short-Term Memory (LSTM) network; Gated Recurrent Unit (GRU)

- BERT

- Building chatbots; sentiment analyzer

- Predictive analytics on text data

- Machine translation system

We hope that by the end of this book, you will be able to build reasonably sophisticated NLP applications on your desktop PC.