Installing software and setting up

As the book title says, Python is the language we will use to implement all machine learning algorithms and techniques throughout the entire book. We will also exploit many popular Python packages and tools such as NumPy, SciPy, TensorFlow, and scikit-learn. By the end of this kick-off chapter, make sure you set up the tools and working environment properly, even if you are already an expert in Python or might be familiar with some of those tools.

Setting up Python and environments

We will be using Python 3 in this book. Python 2 reached its end of life on January 1, 2020 and is no longer supported, so starting with or switching to Python 3 is strongly recommended. Trust me, the transition is pretty smooth. But if you're stuck with Python 2, you should still be able to modify the code to work for you. The Anaconda Python 3 distribution is one of the best options for data science and machine learning practitioners.

Anaconda is a free Python distribution for data analysis and scientific computing. It has its own package manager, conda. The distribution includes more than 600 Python packages (as of 2020), which makes it very convenient; the exact list, at https://docs.anaconda.com/anaconda/packages/pkg-docs/, depends on your OS and Python version (3.7, 3.6, or 2.7). For casual users, the Miniconda (https://conda.io/miniconda.html) distribution may be the better choice. Miniconda contains the conda package manager and Python, so it takes much less disk space than Anaconda.

The procedures to install Anaconda and Miniconda are similar. You can follow the instructions from https://docs.conda.io/projects/conda/en/latest/user-guide/install/. First, you have to download the appropriate installer for your OS and Python version, as follows:

Figure 1.13: Installation entry based on your OS

Follow the steps listed for your OS. You can choose between a GUI and a CLI. I personally find the latter easier.

I was able to use the Python 3 installer, although the Python version on my system was 2.7 at the time I installed it. This is possible since Anaconda comes with its own Python. On my machine, the Anaconda installer created an anaconda directory in my home directory and required about 900 MB. Similarly, the Miniconda installer creates a miniconda directory in your home directory.

Feel free to play around with it after you set it up. One way to verify that you have set up Anaconda properly is by entering the following command line in your terminal on Linux/Mac or Command Prompt on Windows (from now on, we will just mention terminal):

python

The preceding command line will display your Python running environment, as shown in the following screenshot:

Figure 1.14: Screenshot after running "python" in the terminal

If this isn't what you're seeing, please check the system path or the path Python is running from.
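If it is unclear which interpreter the terminal is picking up, a short script can report it. The following is a minimal sketch using only the standard library; the helper name describe_interpreter is made up for this illustration.

```python
import sys


def describe_interpreter():
    """Return the path and version of the Python interpreter running this code."""
    return {
        # Full path of the running interpreter; for an Anaconda setup this
        # would normally sit inside the anaconda (or miniconda) directory.
        "executable": sys.executable,
        # Version string such as "3.7.6".
        "version": sys.version.split()[0],
        # True when running under Python 3, which this book assumes.
        "is_python3": sys.version_info.major == 3,
    }


info = describe_interpreter()
for key, value in info.items():
    print(f"{key}: {value}")
```

If the reported executable is not the one you expected, the system path is the first thing to check.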

At the end of this section, I want to emphasize the reasons why Python is the most popular language for machine learning and data science. First of all, Python is famous for its high readability and simplicity, which makes it easy to build machine learning models. We spend less time worrying about syntax and compilation and, as a result, have more time to find the right machine learning solution. Second, we have an extensive selection of Python libraries and frameworks for machine learning:

| Category | Libraries |
| --- | --- |
| Data analysis | NumPy, SciPy, pandas |
| Data visualization | Matplotlib, Seaborn |
| Modeling | scikit-learn, TensorFlow, Keras |

Table 1.5: Popular Python libraries for machine learning

The next step involves setting up some of these packages that we will use throughout this book.

Installing the main Python packages

For most projects in this book, we will be using NumPy (http://www.numpy.org/), scikit-learn (http://scikit-learn.org/stable/), and TensorFlow (https://www.tensorflow.org/). In the sections that follow, we will cover the installation of several Python packages that we will be mainly using in this book.

NumPy

NumPy is the fundamental package for machine learning with Python. It offers powerful tools including the following:

- The N-dimensional array ndarray class and several subclasses representing matrices and arrays

- Various sophisticated array functions

- Useful linear algebra capabilities

Installation instructions for NumPy can be found at http://docs.scipy.org/doc/numpy/user/install.html. Alternatively, an easier method involves installing it with pip in the command line as follows:

pip install numpy

Anaconda users can install it with conda instead by running the following command line:

conda install numpy

A quick way to verify your installation is to import it into the shell as follows:

>>> import numpy

It has installed correctly if no error message is visible.
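Beyond a bare import, the three capabilities listed earlier (the ndarray class, array functions, and linear algebra) can be exercised in a few lines. This is only a sketch: the numbers are made up for illustration and are not taken from any dataset in the book.

```python
import numpy as np

# The N-dimensional array: a 2 x 3 matrix, e.g. two samples with three features.
X = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
print(X.shape)  # number of rows and columns
print(X.ndim)   # number of dimensions

# Array functions work on whole arrays without explicit Python loops.
col_means = X.mean(axis=0)   # mean of each column
X_centered = X - col_means   # broadcasting subtracts the means row by row

# Linear algebra: solve the 2 x 2 system A w = b for w.
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])
w = np.linalg.solve(A, b)
print(w)
```

If the script runs without errors and prints the shape, the dimension count, and the solution vector, NumPy is working as expected.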

SciPy

In machine learning, we mainly use NumPy arrays to store data vectors or matrices composed of feature vectors. SciPy (https://www.scipy.org/scipylib/index.html) builds on NumPy arrays and offers a variety of scientific and mathematical functions. SciPy is installed from the terminal in the same way:

pip install scipy

Pandas

We also use the pandas library (https://pandas.pydata.org/) for data wrangling later in this book. The best way to get pandas is via pip or conda:

conda install pandas

Scikit-learn

The scikit-learn library is a Python machine learning package optimized for performance: a lot of its code runs almost as fast as equivalent C code. The same is true for NumPy and SciPy. Scikit-learn requires both NumPy and SciPy to be installed. As the installation guide at http://scikit-learn.org/stable/install.html states, the easiest way to install scikit-learn is to use pip or conda as follows:

pip install -U scikit-learn

TensorFlow

TensorFlow is a Python-friendly open source library developed by the Google Brain team for high-performance numerical computation. It makes machine learning faster and deep learning easier by combining a convenient Python frontend API with high-performance C++ backend execution. It also allows easy deployment of computation across CPUs and GPUs, which makes computationally expensive, large-scale machine learning practical. In this book, we will focus on the CPU as our computation platform. Hence, according to https://www.tensorflow.org/install/, installing TensorFlow 2 is done via the following command line:

pip install tensorflow
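Once the packages in this section are installed, it can be handy to confirm all of them in one pass rather than importing each by hand. The following is a minimal sketch that relies on importlib.metadata from the standard library (available from Python 3.8 onward); the PACKAGES list and the installed_versions helper are names chosen for this illustration, and a package installed under a different distribution name would be reported as missing.

```python
from importlib import metadata

# Distribution names as pip knows them; this list is an assumption and can be
# extended as more packages are introduced.
PACKAGES = ["numpy", "scipy", "pandas", "scikit-learn", "tensorflow"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


report = installed_versions(PACKAGES)
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

Because the script only reads package metadata, it never imports the libraries themselves and so runs quickly even for a heavy package such as TensorFlow.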

There are many other packages we will be using intensively, for example, Matplotlib for plotting and visualization, Seaborn for visualization, NLTK for natural language processing, PySpark for large-scale machine learning, and PyTorch for reinforcement learning. We will provide installation details for any package when we first encounter it in this book.

Introducing TensorFlow 2

TensorFlow provides us with an end-to-end scalable platform for implementing and deploying machine learning algorithms. TensorFlow 2 was largely redesigned from its first mature version 1.0 and was released at the end of 2019.

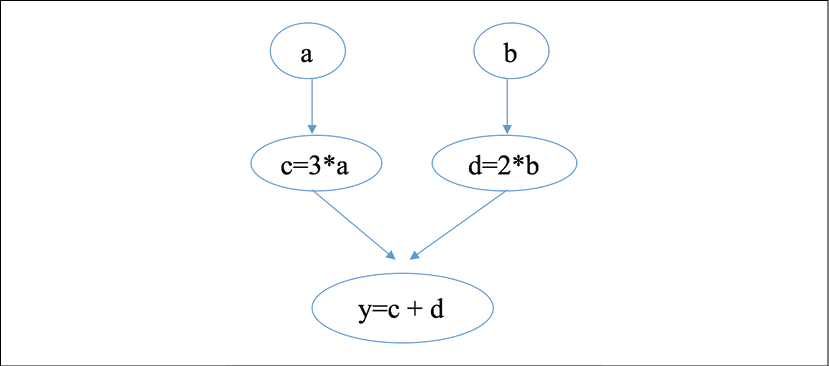

TensorFlow is widely known for its deep learning modules. However, its most powerful feature is the computation graph, on which its algorithms are built. A computation graph conveys the relationships between inputs and outputs via tensors. For instance, if we want to evaluate a linear relationship, y = 3 * a + 2 * b, we can represent it in the following computation graph:

Figure 1.15: Computation graph for a y = 3 * a + 2 * b machine

Here, a and b are the input tensors, c and d are the intermediate tensors, and y is the output.
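To make the roles of these tensors concrete, here is a plain-Python sketch of the same graph. It is not TensorFlow code, and it assumes that the intermediate values are c = 3 * a and d = 2 * b, which is the natural reading of the figure but is not spelled out in the text. The function name linear_graph is made up for this illustration.

```python
def linear_graph(a, b):
    """Evaluate y = 3 * a + 2 * b step by step, keeping the intermediate values."""
    c = 3 * a   # assumed first intermediate value
    d = 2 * b   # assumed second intermediate value
    y = c + d   # output
    return {"c": c, "d": d, "y": y}


result = linear_graph(a=1.0, b=2.0)
print(result)  # {'c': 3.0, 'd': 4.0, 'y': 7.0}
```

Keeping c and d as named values, rather than collapsing everything into one expression, mirrors how a computation graph records each step so that it can be revisited later.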

You can think of a computation graph as a network of nodes connected by edges. In this picture, each node is a tensor and each edge is an operation or function that takes its input node and returns a value to its output node. (TensorFlow's own documentation draws the same graph with the roles swapped: operations are the nodes, and tensors flow along the edges.) To train a machine learning model, TensorFlow builds the computation graph and computes the gradients accordingly (a gradient is a vector that points in the direction of steepest increase of a function, so stepping against the gradient of the loss moves the model toward an optimal solution). In the upcoming chapters, you will see some examples of training machine learning models using TensorFlow.
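The way gradients fall out of a graph can be sketched by hand for y = 3 * a + 2 * b. The following plain-Python example is an illustration only, not how TensorFlow is implemented: it walks the graph backward with the chain rule, assuming the intermediate values c = 3 * a and d = 2 * b, and then cross-checks the result with a finite-difference approximation. All function names are hypothetical. In TensorFlow 2 itself, automatic differentiation is exposed through tf.GradientTape.

```python
def forward(a, b):
    """Forward pass through the graph: y = c + d with c = 3 * a and d = 2 * b."""
    c = 3 * a
    d = 2 * b
    return c + d


def analytic_gradients():
    """Backward pass: apply the chain rule from the output back to the inputs."""
    dy_dc = 1.0          # y = c + d, so dy/dc = 1
    dy_dd = 1.0          # and dy/dd = 1
    dy_da = dy_dc * 3.0  # c = 3 * a, so dc/da = 3
    dy_db = dy_dd * 2.0  # d = 2 * b, so dd/db = 2
    return dy_da, dy_db


def numeric_gradients(a, b, eps=1e-6):
    """Central finite differences, used only as a sanity check."""
    dy_da = (forward(a + eps, b) - forward(a - eps, b)) / (2 * eps)
    dy_db = (forward(a, b + eps) - forward(a, b - eps)) / (2 * eps)
    return dy_da, dy_db


print(analytic_gradients())         # (3.0, 2.0)
print(numeric_gradients(1.0, 2.0))  # approximately the same values
```

Because the relationship is linear, the gradient is the same at every point; for a real model the backward pass would depend on the values computed in the forward pass.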

Finally, we highly recommend going through https://www.tensorflow.org/guide/data if you are interested in exploring TensorFlow and computation graphs further.