Sticking with the postal mail analogy, there is an intuitive way to determine which model best fits a particular household: stand by the mailbox every day and record what the postal carrier delivers. It should also be clear that the more observations you collect, the more confident you can be that your model is accurate. In other words, spending only 3 days by the mailbox would yield less complete information, and less confidence, than spending 30 days, or 300 for that matter.
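To make the 3, 30, and 300 day comparison concrete, here is a minimal sketch that assumes daily deliveries follow a Poisson distribution and uses the normal approximation for the uncertainty of the estimated rate. The function name `rate_interval` and the delivery counts are made up for illustration; this is not the procedure ML itself uses.

```python
import math


def rate_interval(daily_counts, z=1.96):
    """Return (rate, half_width) for the mean number of deliveries per day.

    Assumes Poisson-distributed counts and a normal approximation: the
    standard error of the estimated rate is sqrt(rate / n), so the
    interval narrows as the number of observed days n grows.
    """
    n = len(daily_counts)
    rate = sum(daily_counts) / n
    half_width = z * math.sqrt(rate / n)
    return rate, half_width


# Hypothetical deliveries per day, averaging 5, repeated for longer watches.
pattern = [4, 6, 5]
for days in (3, 30, 300):
    counts = pattern * (days // 3)
    rate, half_width = rate_interval(counts)
    print(f"{days:>3} days: rate = {rate:.2f} +/- {half_width:.2f}")
```

The estimated rate is the same in all three cases, but the interval around it shrinks with the square root of the number of days observed, which is the sense in which more time at the mailbox buys more confidence.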

Algorithmically, a similar process can be designed to self-select the appropriate model based on observations. This self-selection must scrutinize both the choice of model type (that is, Poisson, Gaussian, log-normal, and so on) and the specific coefficients of that model type (as in the preceding example of λ). To do this, the appropriateness of the model is evaluated constantly. Bayesian techniques are also employed to assess the model's likely parameter values given the dataset as a whole, while tempering those decisions according to how much information had been seen up to a particular point in time. The ML algorithms accomplish this automatically.
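As a rough illustration of choosing between model types, the sketch below fits two candidates by maximum likelihood and prefers the one with the lower Akaike information criterion (AIC). AIC is a stand-in chosen here for simplicity, not the selection method ML actually uses, and the function names and sample data are hypothetical. Poisson is left out because comparing discrete and continuous likelihoods on the same data needs extra care.

```python
import math


def gaussian_loglik(xs):
    """Maximized Gaussian log-likelihood (mean and variance fitted by MLE).

    Requires data with non-zero variance.
    """
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n
    return -0.5 * n * (math.log(2 * math.pi * var) + 1)


def lognormal_loglik(xs):
    """Maximized log-normal log-likelihood; requires strictly positive data."""
    logs = [math.log(x) for x in xs]
    # Gaussian fit on log(x), plus the change-of-variable term -sum(log(x)).
    return gaussian_loglik(logs) - sum(logs)


def select_model(xs):
    """Return (best_name, {name: aic}); the lowest AIC wins."""
    k = 2  # both candidates have two fitted parameters
    aic = {
        "gaussian": 2 * k - 2 * gaussian_loglik(xs),
        "log-normal": 2 * k - 2 * lognormal_loglik(xs),
    }
    return min(aic, key=aic.get), aic


symmetric = [2, 6, 10, 10, 14, 18]                    # evenly spread around 10
skewed = [math.exp(z) for z in (-2, -1, 0, 0, 1, 2)]  # long right tail

print(select_model(symmetric)[0])
print(select_model(skewed)[0])
```

With only six points the scores are close, which echoes the point about confidence: a selection made on little data should be held loosely and re-evaluated as more observations arrive.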

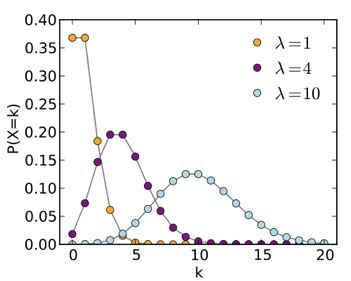

Most importantly, the modeling is continuous: new information is considered along with the old, with exponential weighting that favors fresher information. Such a model, after 60 observations, could resemble the following:

Sample model after 60 observations

It could then look quite different after 400 observations, once the data includes a slew of new observations with values between 5 and 10:

Sample model after 400 observations
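The exponential weighting toward fresher information can be sketched with a running estimate in which every older observation is discounted by a constant factor each time a new one arrives. The class name `DecayingRate`, the decay value of 0.95, and the data are assumptions for illustration only; the weighting scheme ML really uses is not described here.

```python
class DecayingRate:
    """Exponentially weighted mean: newer observations count for more."""

    def __init__(self, decay=0.95):
        self.decay = decay          # 1.0 would weight all observations equally
        self.weighted_sum = 0.0
        self.weight_total = 0.0

    def update(self, value):
        # Discount everything seen so far, then add the new observation
        # with full weight.
        self.weighted_sum = self.decay * self.weighted_sum + value
        self.weight_total = self.decay * self.weight_total + 1.0

    @property
    def estimate(self):
        return self.weighted_sum / self.weight_total


# Hypothetical stream: 60 low values followed by 40 higher ones.
stream = [2.0] * 60 + [8.0] * 40

model = DecayingRate(decay=0.95)
for value in stream:
    model.update(value)

plain_mean = sum(stream) / len(stream)
print(f"plain mean: {plain_mean:.2f}, decayed estimate: {model.estimate:.2f}")
```

The plain mean still sits between the two regimes, while the decayed estimate has moved most of the way to the recent values, which is the behavior that lets a continuously updated model follow a change in the data.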

Also notice that the model can have multiple modes, that is, areas or clusters of higher probability. How closely the learned model (shown as the blue curve) fits the theoretically ideal model (in black) matters greatly. The more accurate the model, the better it represents the state of normal for that dataset and, ultimately, the more accurate the prediction of how well future values conform to this model.
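One simple way to picture a model with multiple modes is a weighted mixture of two bell curves. The sketch below is an assumption-laden illustration rather than the learned model from the figures: the component means, widths, and weights are invented, and `mixture_density` is a hypothetical helper. It uses `NormalDist` from Python's standard `statistics` module (Python 3.8 or later).

```python
from statistics import NormalDist

# Hypothetical two-mode model: (weight, component) pairs, weights sum to 1.
components = [
    (0.5, NormalDist(mu=2.0, sigma=1.0)),
    (0.5, NormalDist(mu=8.0, sigma=1.0)),
]


def mixture_density(x, components):
    """Probability density of x under a weighted mixture of normal curves."""
    return sum(weight * dist.pdf(x) for weight, dist in components)


for x in (2.0, 5.0, 8.0):
    print(f"x = {x}: density = {mixture_density(x, components):.4f}")
```

Values near either mode score a high density, while a value in the valley between them scores a low one. A single bell curve centered at 5 would get this exactly backwards, which is why the fidelity of the fit matters when judging whether a future value is normal.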

The continuous nature of the modeling also drives the requirement that the model can be serialized to long-term storage, so that if model creation or analysis is paused, it can be reinstated and resumed later. As we will see, operationalizing this process of model creation, storage, and utilization is a complex orchestration, which is fortunately handled automatically by ML.
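The idea of pausing and resuming can be sketched with a tiny incremental model whose entire state is a few numbers that can be written out and read back. Everything here is hypothetical: the class `RunningModel`, its JSON layout, and the use of an in-memory string in place of real long-term storage. The actual serialized form of an ML model is far richer than this.

```python
import json


class RunningModel:
    """Incremental mean and variance (Welford's method) with save and restore."""

    def __init__(self, count=0, mean=0.0, m2=0.0):
        self.count = count
        self.mean = mean
        self.m2 = m2  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def variance(self):
        return self.m2 / self.count if self.count else 0.0

    def to_json(self):
        """Serialize the full model state to a JSON string."""
        return json.dumps({"count": self.count, "mean": self.mean, "m2": self.m2})

    @classmethod
    def from_json(cls, text):
        """Rebuild a model from a string produced by to_json."""
        return cls(**json.loads(text))


observations = [4.0, 6.0, 5.0, 7.0, 3.0, 5.0, 6.0, 4.0]

# Uninterrupted run over all observations.
full = RunningModel()
for value in observations:
    full.update(value)

# Paused run: learn from the first half, save, restore, then continue.
first_half = RunningModel()
for value in observations[:4]:
    first_half.update(value)
saved = first_half.to_json()

resumed = RunningModel.from_json(saved)
for value in observations[4:]:
    resumed.update(value)

print(full.mean, resumed.mean)
```

Because the saved state captures everything the update step depends on, the resumed model ends up where the uninterrupted one does, without having to replay the earlier observations.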