Deploying a model for batch inferencing using the Studio

In Chapter 3, Training Machine Learning Models in AMLS, we trained a model and registered it in an Azure Machine Learning workspace. We are going to deploy that model to a managed batch endpoint for batch scoring:

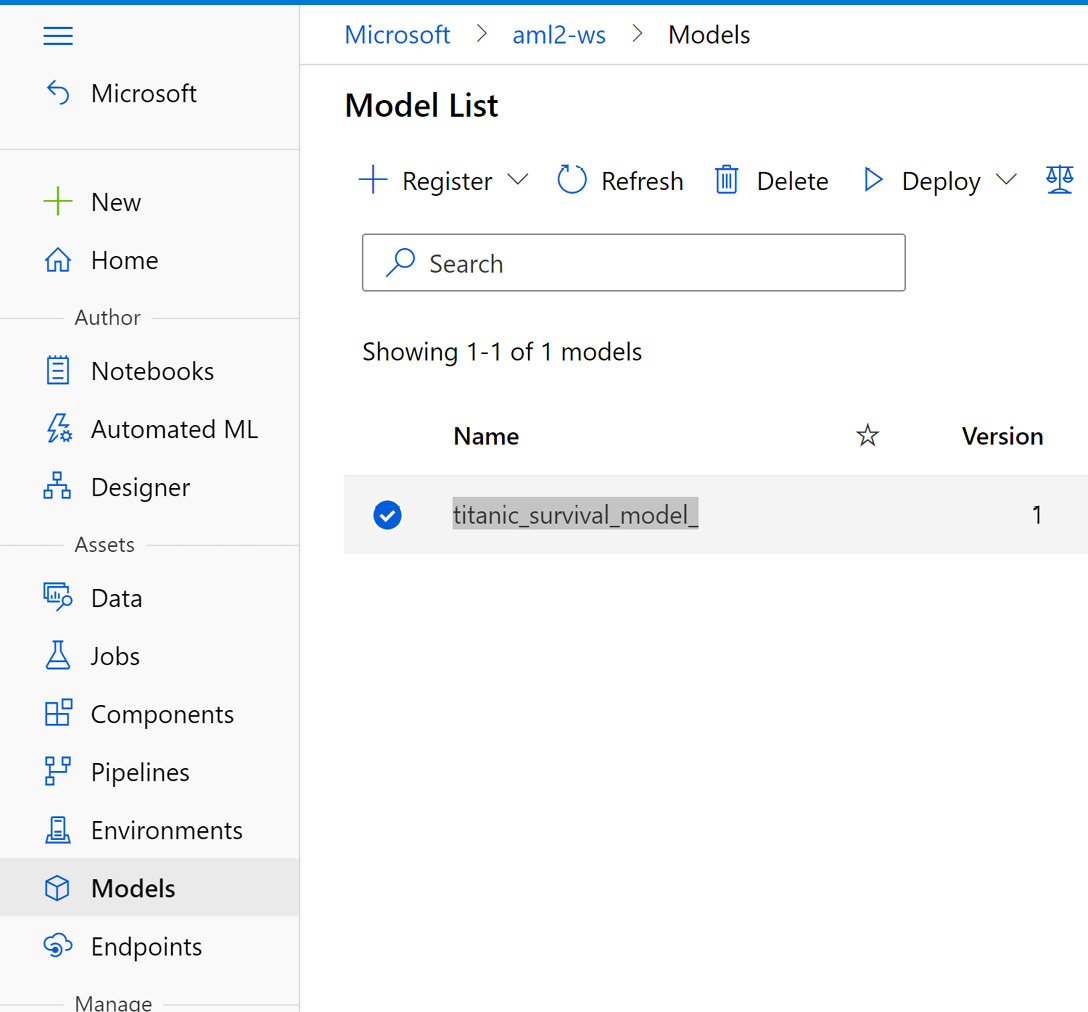

- Navigate to your Azure Machine Learning workspace, select Models from the left menu bar to see the models registered in your workspace, and select titanic_servival_model_, as shown in Figure 7.4:

Figure 7.4 – List of models registered in the workspace

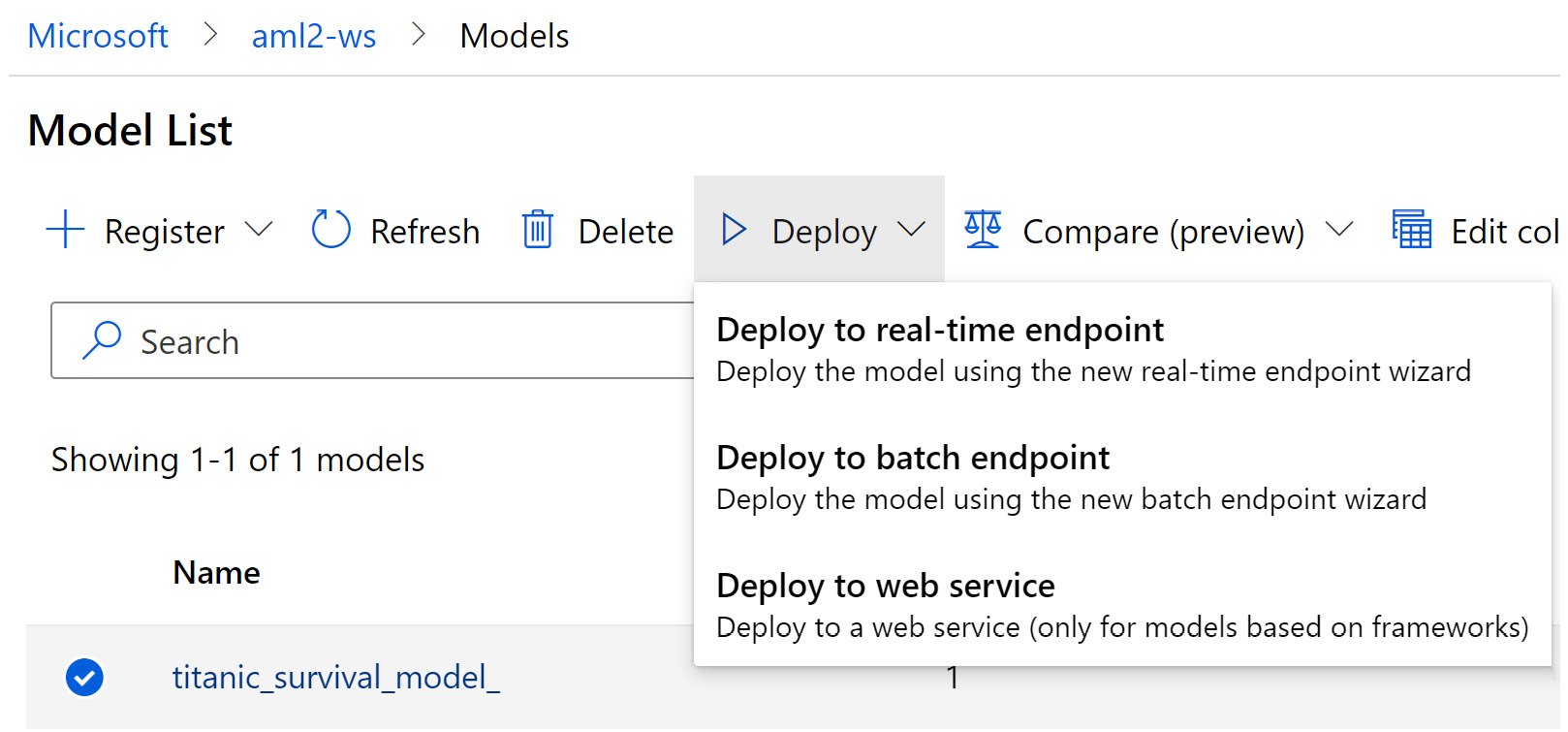

- Click on Deploy and select Deploy to batch endpoint, as shown in Figure 7.5:

Figure 7.5 – Deploy the selected model to a batch endpoint

This opens the deployment wizard. Use the following values for the required fields:

- Endpoint name: titanic-survival-batch-endpoint

- Model: Retain the default of titanic_survival_model_

- Deployment name: titanic-deployment

- Environment...
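For comparison with the wizard, the endpoint part of these values can also be expressed in code. The following is a minimal sketch, not the Studio workflow described here. It assumes the Azure Machine Learning Python SDK v2 (the `azure-ai-ml` and `azure-identity` packages) is installed. The function names `endpoint_settings` and `create_batch_endpoint`, the description text, and the subscription, resource group, and workspace arguments are hypothetical placeholders. The deployment itself is left out because its remaining fields are not listed above.

```python
# Values copied from the wizard fields listed above.
ENDPOINT_NAME = "titanic-survival-batch-endpoint"
MODEL_NAME = "titanic_survival_model_"


def endpoint_settings():
    """Return the keyword arguments used to build the BatchEndpoint entity."""
    return {
        "name": ENDPOINT_NAME,
        # Hypothetical description; not one of the wizard values shown above.
        "description": "Batch scoring endpoint for the Titanic survival model",
    }


def create_batch_endpoint(subscription_id, resource_group, workspace_name):
    """Create (or update) the batch endpoint and return it with the registered model."""
    # Imported here so the settings above can be inspected without the SDK installed.
    from azure.ai.ml import MLClient
    from azure.ai.ml.entities import BatchEndpoint
    from azure.identity import DefaultAzureCredential

    ml_client = MLClient(
        DefaultAzureCredential(), subscription_id, resource_group, workspace_name
    )
    # Look up the latest registered version of the model selected in the Studio.
    model = ml_client.models.get(name=MODEL_NAME, label="latest")
    # begin_create_or_update returns a poller; result() blocks until it finishes.
    endpoint = ml_client.batch_endpoints.begin_create_or_update(
        BatchEndpoint(**endpoint_settings())
    ).result()
    return endpoint, model
```

Calling `create_batch_endpoint(...)` with your own subscription ID, resource group, and workspace name would create only the endpoint. A deployment still has to be added to it before it can score data.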