Computer and hardware architecture play a significant role in SDCs. As we know, a large part of an SDC's capability lies in the hardware itself and the software that runs on it. Tesla unveiled a new, purpose-built computer: a chip specifically optimized for running neural networks. It has been designed to be retrofitted in existing vehicles, and it is of a similar size and draws similar power to the existing self-driving computers. It can process 2,300 frames per second, 2,190 frames per second more than the previous iteration, which is roughly a 21-fold (2,100%) jump in throughput. This is a massive performance increase, and that processing power will be needed to analyze footage from Tesla's suite of sensors.
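The throughput figures quoted above can be checked with a few lines of arithmetic. The following is only a quick sketch that uses the two numbers from the text; the variable names are illustrative:

```python
# Figures quoted in the text above.
new_fps = 2300    # frames per second processed by the new computer
fps_gain = 2190   # extra frames per second compared with the previous iteration

old_fps = new_fps - fps_gain   # implied throughput of the previous computer
speedup = new_fps / old_fps    # how many times faster the new computer is

print(f"previous computer: {old_fps} fps")
print(f"speed-up: {speedup:.1f}x")
```

The previous computer therefore handled about 110 frames per second, and the new one is roughly 21 times faster, which is where the 2,100% figure comes from.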

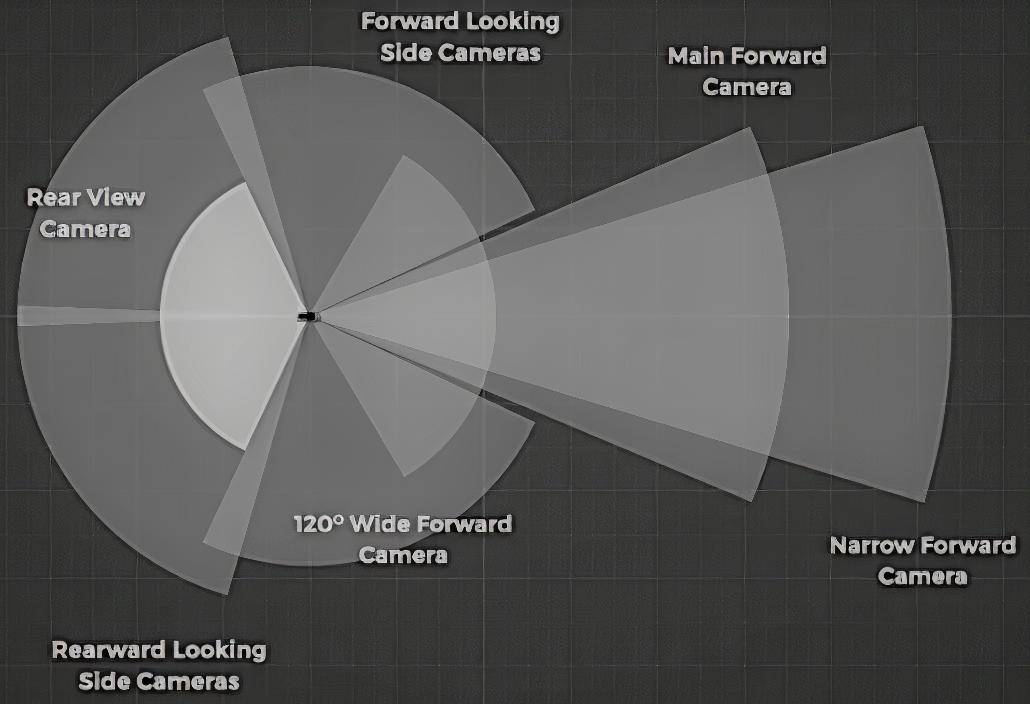

The Tesla Autopilot hardware currently includes three forward-facing cameras, all mounted behind the windshield. One is a 120-degree wide-angle fish-eye lens, which gives situational awareness by capturing traffic lights and objects moving into the path of travel. The second camera is a narrow-angle lens that provides the longer-range information needed for high-speed driving. The third is the main camera, which sits between these two. There are four additional cameras on the sides of the vehicle that check for vehicles unexpectedly entering any lane, and provide the information needed to safely enter intersections and change lanes. The eighth and final camera is located at the rear; it doubles as a parking camera and is also used to avoid crashes from rear hazards.

The vehicle does not completely rely on visual cameras. It also makes use of 12 ultrasonic sensors, which provide a 360-degree picture of the immediate area around the vehicle, and one forward-facing RADAR:

Fig 1.3: Camera views

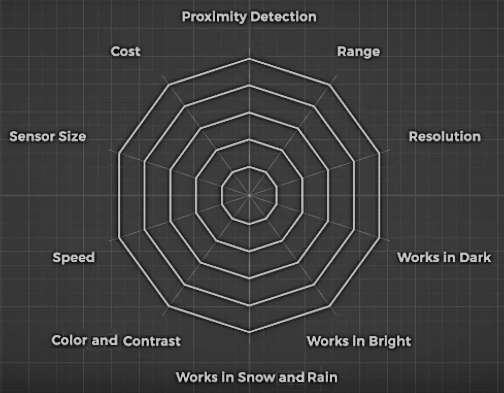

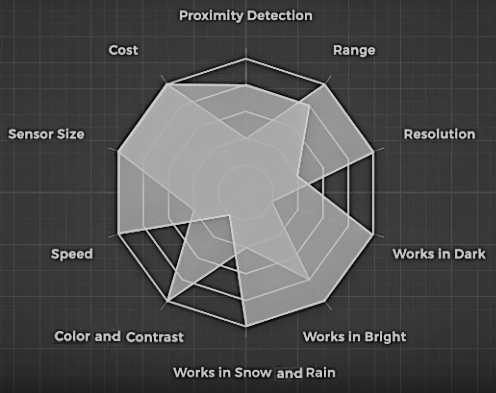

Finding the right combination of sensors to fuse has been a subject of debate among competing SDC companies. Elon Musk recently stated that anyone relying on LIDAR sensors (which work similarly to RADAR but use light instead of radio waves) is doomed. To understand why he said this, we will plot the strengths of each sensor on a radar chart (also known as a spider chart, and unrelated to the RADAR sensor), as follows:

Fig 1.4: RADAR chart

LIDAR has great resolution; it provides highly detailed information about what it is detecting. It works in low- and high-light situations and is also capable of measuring speed. It has a good range and works moderately well in poor weather conditions. Its biggest weakness is that these sensors are expensive and bulky. This is where the second challenge of building an SDC comes into play: building an affordable system that the average person can buy.

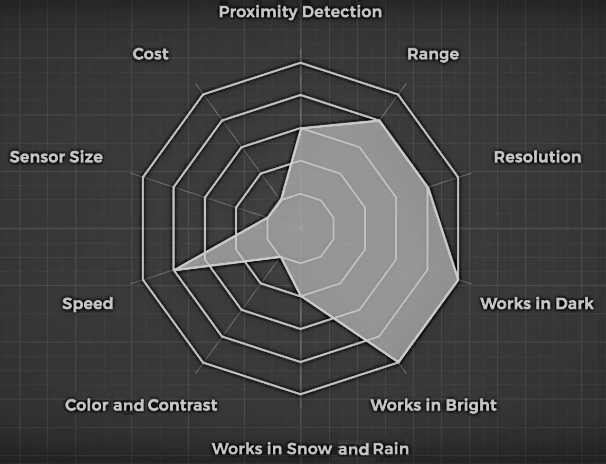

Let's look at the following RADAR chart:

Fig 1.5: RADAR chart – strength

LIDAR sensors are the big sensors we see on vehicles from Waymo, Uber, and most competing SDC companies. Elon Musk became more aware of LIDAR's potential after SpaceX used it in its DragonEye navigation sensor. For now, the size and cost of LIDAR remain a drawback for Tesla, which has focused on building not just a cost-effective vehicle, but a good-looking one. Fortunately, LIDAR technology is gradually becoming smaller and cheaper.

Waymo, a subsidiary of Google's parent company Alphabet, sells its LIDAR sensors to any company that does not intend to compete with its plans for a self-driving taxi service. When Waymo started in 2009, the per-unit cost of a LIDAR sensor was around $75,000, but it had managed to reduce this to $7,500 as of 2019 by manufacturing the units itself. Waymo vehicles use four LIDAR sensors, one on each side of the vehicle, placing the total cost of these sensors for a third party at $30,000. This sort of pricing does not line up with Tesla's mission, which is to accelerate the world's move toward sustainable transport. This issue has pushed Tesla toward a cheaper sensor fusion setup.
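The cost figures above follow from simple arithmetic. Here is a small sketch using only the prices quoted in the text, and assuming four sensors per vehicle in total, which is what the $30,000 figure implies; the variable names are illustrative:

```python
# Prices quoted in the text, in US dollars per LIDAR unit.
unit_cost_2009 = 75_000
unit_cost_2019 = 7_500
sensors_per_vehicle = 4   # assumption: four sensors in total, one per side

total_per_vehicle = sensors_per_vehicle * unit_cost_2019
reduction = 1 - unit_cost_2019 / unit_cost_2009   # fractional per-unit price drop

print(f"LIDAR cost per vehicle: ${total_per_vehicle:,}")
print(f"Per-unit price reduction since 2009: {reduction:.0%}")
```

Even after a 90% drop in the per-unit price, the sensors alone add $30,000 to each vehicle, which is the affordability problem described above.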

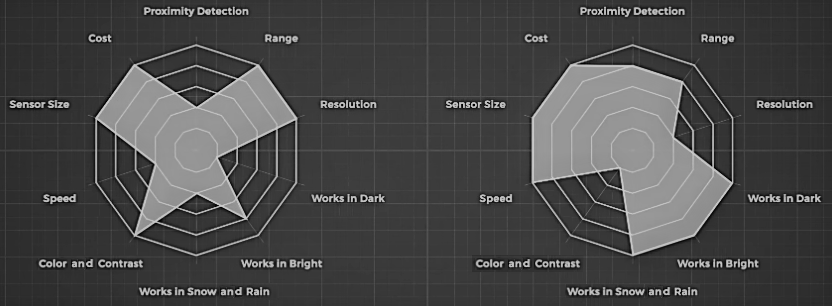

Let's look at the strengths and weaknesses of the three other sensor types (RADAR, cameras, and ultrasonic sensors) to see how Tesla is moving forward without LIDAR.

First, let's look at RADAR. It works well in all conditions. RADAR sensors are small and cheap, capable of detecting speed, and their range is good for both short- and long-distance detection. Where they fall short is in the low-resolution data they provide, but this weakness can easily be offset by combining RADAR with cameras. The plots for RADAR and cameras can be seen in the following image:

Fig 1.6: RADAR and camera plot

When we combine the two, this yields the following plot:

Fig 1.7: Added RADAR and camera plot
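One simple way to read the combined plot is as an axis-by-axis maximum of the two individual plots. The sketch below illustrates that idea only. The 0 to 5 scores are placeholder values chosen to mirror the qualitative description in the text, not measurements, and the `combine` helper is hypothetical; it is a simplification for reading the chart, not an actual sensor fusion algorithm:

```python
# Illustrative 0-5 scores only: placeholders that mirror the qualitative
# description in the text, NOT measured values.
radar = {
    "range": 5, "resolution": 1, "all_conditions": 5, "speed": 5,
    "color_contrast": 0, "size": 5, "cost": 5, "proximity": 2,
}
camera = {
    "range": 4, "resolution": 5, "all_conditions": 2, "speed": 1,
    "color_contrast": 5, "size": 5, "cost": 5, "proximity": 2,
}


def combine(*sensors):
    """Take the best score on every axis across the given sensors."""
    return {axis: max(s[axis] for s in sensors) for axis in sensors[0]}


combined = combine(radar, camera)
weakest_axis = min(combined, key=combined.get)

print(combined)
print("weakest axis after combining:", weakest_axis)
```

With these placeholder scores, every axis except proximity reaches the top score once the two sensors are combined, which matches the gap that ultrasonic sensors are meant to fill.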

This combination has excellent range and resolution, provides color and contrast information for reading street signs, and is extremely small and cheap. Combining RADAR and cameras allows each to cover the weaknesses of the other. The combination is still weak in terms of proximity detection, but using two cameras in stereo allows the system to estimate distance much as our eyes do. When fine-tuned distance measurements are needed, we can use the ultrasonic sensor. An example of an ultrasonic sensor can be seen in the following photo:

Fig 1.8: Ultrasonic sensor

These are circular sensors dotted around the car. In Tesla cars, eight surround cameras provide 360 degrees of coverage around the car at a range of up to 250 meters. This vision is complemented by 12 upgraded ultrasonic sensors, which allow the detection of both hard and soft objects at nearly twice the distance of the prior system. A forward-facing RADAR with improved processing offers additional data about the world on a redundant wavelength and can see through heavy rain, fog, dust, and even the car ahead. This is a cost-effective solution. According to Tesla, their hardware is already capable of allowing their vehicles to self-drive. Now, they just need to continue improving the software algorithms. Tesla is in a fantastic position to make it work.
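The two distance-estimation ideas mentioned above, stereo cameras and ultrasonic ranging, both reduce to short formulas. The following is a minimal sketch under stated assumptions: an idealized, rectified stereo pair where depth is `Z = f * B / d`, and an ultrasonic echo that travels at roughly 343 m/s in air. The function names and sample numbers are hypothetical and are not taken from Tesla's system:

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth from an idealized rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def ultrasonic_distance_m(echo_time_s, speed_of_sound=SPEED_OF_SOUND_M_S):
    """Distance from a round-trip echo time; the pulse travels out and back."""
    return speed_of_sound * echo_time_s / 2.0


# Hypothetical numbers, for illustration only.
depth = stereo_depth_m(focal_length_px=1000.0, baseline_m=0.3, disparity_px=10.0)
gap = ultrasonic_distance_m(echo_time_s=0.01)

print(f"stereo depth: {depth:.1f} m")
print(f"ultrasonic distance: {gap:.2f} m")
```

Because stereo depth is inversely proportional to disparity, small disparity errors translate into large depth errors for distant objects, which is one reason a separate short-range sensor is useful for fine-grained proximity measurements.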

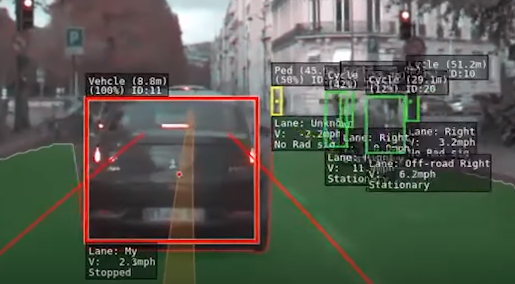

In the following screenshot, we can see object detection being performed on the camera feed of a Tesla car:

Fig 1.9: Object detection using a camera sensor

When training a neural network, data is key. Waymo has logged millions of kilometers of driving in order to gather data, while Tesla has logged over a billion. 33% of all driving in Tesla cars is done with Autopilot engaged, but data collection extends beyond Autopilot engagement: Tesla cars also collect data while being driven manually in areas where Autopilot is not allowed, such as city streets. Accounting for all the unpredictability of driving requires an immense amount of training for a machine learning algorithm, and this is where Tesla's data gives them an advantage. We will read about neural networks in later chapters. One key point to note is that the more data you have to train a neural network, the better it is going to be. Tesla's machine vision does a decent job, but there are still plenty of gaps there.

The Tesla software places bounding boxes around the objects it detects while categorizing them as cars, trucks, bicycles, or pedestrians. It labels each with its velocity relative to the vehicle and the lane it occupies. It highlights drivable areas, marks the lane dividers, and sets a projected path between them. The system frequently struggles in complicated scenarios, and Tesla is working on improving the accuracy of the models by adding new functionalities. Their latest SDC computer is going to radically increase the available processing power, which will allow Tesla to continue adding functionality without needing to refresh information constantly. However, even if they manage to develop the perfect computer vision application, programming the vehicle to handle every scenario is another hurdle. This is a vital part of building not only a safe vehicle but a practical self-driving vehicle.
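The per-object output described above (a bounding box, a category, a relative velocity, and a lane) could be represented with a small data structure. This is only a sketch: the `Detection` class, its field names, the lane numbering, and the sign convention for relative velocity are assumptions made for illustration and do not describe Tesla's software:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    label: str                                # "car", "truck", "bicycle", or "pedestrian"
    box: Tuple[float, float, float, float]    # (x_min, y_min, x_max, y_max) in pixels
    relative_velocity_mps: float              # assumed convention: negative = gap is shrinking
    lane: int                                 # assumed convention: 0 = our lane, -1 left, +1 right


def closing_in_our_lane(detections: List[Detection]) -> List[Detection]:
    """Return the objects in our own lane whose distance to us is shrinking."""
    return [d for d in detections if d.lane == 0 and d.relative_velocity_mps < 0]


# Hypothetical frame with three detected objects.
frame = [
    Detection("car", (410.0, 220.0, 560.0, 330.0), -3.5, 0),
    Detection("truck", (120.0, 200.0, 300.0, 340.0), 1.2, -1),
    Detection("pedestrian", (700.0, 250.0, 730.0, 330.0), -0.4, 1),
]

for d in closing_in_our_lane(frame):
    print(d.label, d.relative_velocity_mps)
```

Keeping the lane and relative velocity alongside each box lets later planning code filter for the objects that matter, such as a slower car directly ahead, without going back to the raw image.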
