Using jobs

Many of the PowerShell scripts we create execute in a serial fashion; that is, A completes before B starts, and B completes before C starts. This method of processing is simple to understand, easy to create, and easy to troubleshoot. However, some processes make serial execution difficult or undesirable. In that situation, we can use jobs to start a task and move it to the background so that we can begin the next task.

A common situation I run into is needing to gather information from, or execute a command on, multiple computers. If it is only a handful of systems, standard scripting works fine. However, if there are dozens or hundreds of systems, a single slow system can slow down the entire process.

Additionally, if one of the systems fails to respond, it can break my entire script, leaving me to scramble through the logs to see where it failed and where to pick it back up. Another benefit of using jobs is that each job executes independently of the others. This way, one job can fail without breaking the rest.

How to do it...

In this recipe, we will create a long-running process and compare the timing for serial versus parallel processing. To do this, carry out the following steps:

Create a long-running process in serial:

    # Function that simulates a long-running process
    $foo = 1..5
    Function LongWrite {
        Param($a)
        Start-Sleep 10
        $a
    }
    $foo | ForEach-Object { LongWrite $_ }

Create a long-running process using jobs:

    # Long-running process using jobs
    ForEach ($foo in 1..5) {
        Start-Job -ScriptBlock {
            Param($a)    # receives the value passed through -ArgumentList
            Start-Sleep 10
            $a
        } -ArgumentList $foo -Name $foo
    }
    Wait-Job *
    Receive-Job *
    Remove-Job *

How it works...

In the first step, we create an example long-running process that simply sleeps for 10 seconds and returns the number passed to it. The first script uses a loop to execute our LongWrite function five times in a serial fashion. As expected, this script takes just over 50 seconds to complete.

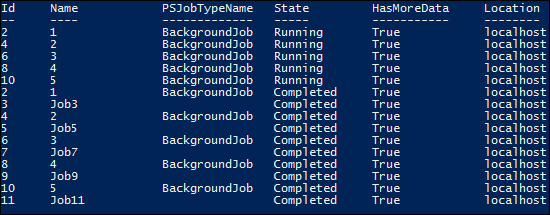

The second step executes the same process five times, but this time using jobs. Instead of calling a function, we use Start-Job, which creates a background job, starts it, and immediately returns control to the script so that the loop can start the next one. Once all the jobs have been started, we use Wait-Job * to wait for all running jobs to complete. Receive-Job retrieves the output from the jobs, and Remove-Job removes the jobs from the session.
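The life cycle of a single job can be sketched as follows. This is a minimal illustration rather than part of the recipe: the job name `demo`, the 2-second delay, and the value 42 are assumptions chosen to keep the example short.

```powershell
# Start a single background job; Start-Job returns a job object immediately.
# The name 'demo', the 2-second sleep, and the value 42 are illustrative.
$job = Start-Job -Name 'demo' -ScriptBlock {
    param($n)                  # value supplied through -ArgumentList
    Start-Sleep -Seconds 2
    $n
} -ArgumentList 42

# The console is free while the job runs; State is typically 'Running' here.
$job.State

# Block until the job finishes, then collect its output.
$null = Wait-Job -Job $job
$result = Receive-Job -Job $job

# Delete the finished job from the current session.
Remove-Job -Job $job

$result
```

Holding the job object in a variable makes it possible to wait on, receive from, and remove that one job without touching any other jobs in the session.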

Because of the setup and teardown required to create and manage jobs, the process runs for more than the expected 10 seconds. In a test run, it took approximately 18 seconds in total to create the jobs, run them, wait for them to complete, retrieve their output, and remove them from the session.
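One way to reproduce this comparison is to wrap each approach in Measure-Command. The sketch below assumes a shortened 2-second delay (the recipe uses 10) so that it finishes quickly; the variable names are hypothetical, and the actual timings will vary from system to system.

```powershell
# Hypothetical, shortened workload so the comparison runs quickly.
$delay = 2        # seconds per task (the recipe uses 10)
$items = 1..5

# Serial: each task waits for the previous one to finish.
$serial = Measure-Command {
    foreach ($i in $items) {
        Start-Sleep -Seconds $delay
        $i
    }
}

# Parallel: every task becomes a background job.
$parallel = Measure-Command {
    $jobs = foreach ($i in $items) {
        Start-Job -ScriptBlock {
            param($n, $seconds)
            Start-Sleep -Seconds $seconds
            $n
        } -ArgumentList $i, $delay
    }
    $jobs | Wait-Job | Receive-Job
    $jobs | Remove-Job
}

'Serial:   {0:N1} s' -f $serial.TotalSeconds
'Parallel: {0:N1} s' -f $parallel.TotalSeconds
```

The gap between the parallel time and the per-task delay is the job setup and teardown overhead described above.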

There's more...

Scaling up: While moving from 50 seconds to 18 seconds is impressive in itself (decreasing it to 36 percent of the original run time), larger batches can give even better results. By extending the command to run 50 times (instead of the original 5), the run time can decrease to 18 percent of the serial method.

Working with remote resources: Jobs can be used both locally and remotely. A common need for a server admin is to perform a task across multiple servers. Sometimes, the servers respond quickly, sometimes they are slow to respond, and sometimes they do not respond and the task times out. These slow or unresponsive systems greatly increase the amount of time needed to complete your tasks. Parallel processing allows these slow systems to respond when they are available without impacting the overall performance.

By using jobs, the task can be launched on multiple servers simultaneously. This way, the slower systems won't prevent other systems from processing. And, as shown in the example, a success or failure report can be returned to the administrator.
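The independence of jobs, and the kind of report it makes possible, can be sketched locally. Everything here is an assumption for illustration: the server names are hypothetical, and `server-b` merely simulates an unresponsive system by throwing an error. Against real servers, a cmdlet such as Invoke-Command with its -ComputerName and -AsJob parameters might take the place of Start-Job.

```powershell
# Hypothetical server names; 'server-b' simulates an unresponsive system.
$servers = 'server-a', 'server-b', 'server-c'

$jobs = foreach ($server in $servers) {
    Start-Job -Name $server -ScriptBlock {
        param($name)
        if ($name -eq 'server-b') { throw "No response from $name" }
        "Checked $name"
    } -ArgumentList $server
}

$null = $jobs | Wait-Job

# Build one report row per job; a failed job does not affect the others.
$report = foreach ($job in $jobs) {
    [pscustomobject]@{
        Server = $job.Name
        State  = $job.State      # 'Completed' or 'Failed'
        Output = if ($job.State -eq 'Completed') { Receive-Job -Job $job } else { $null }
    }
}

$jobs | Remove-Job
$report
```

Reading each job's State before calling Receive-Job keeps the error from the failed job out of the report while still recording which system failed.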

See also

More information on using jobs in PowerShell can be found at: