This section gives a quick overview of the evolution of head-mounted displays (HMDs), including how they displayed their images and which head-tracking technologies they used.

Interactivity and True HMDs

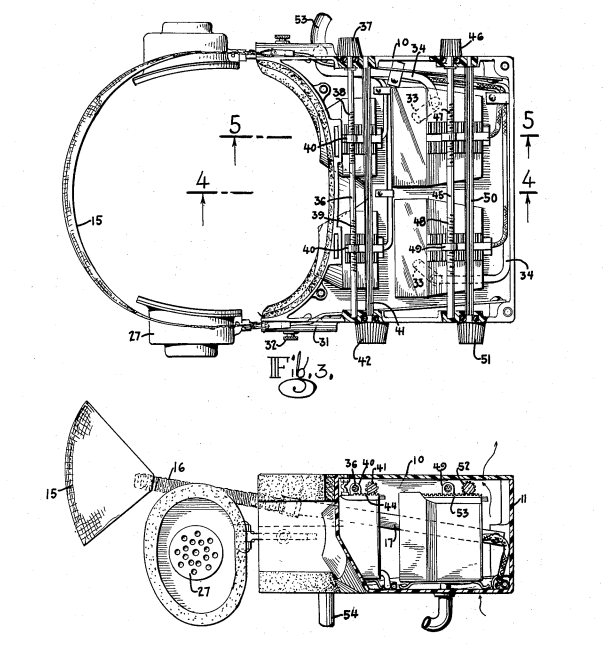

1960 – Telesphere Mask

Morton Heilig patented one of the first functioning HMDs in 1960. Although it had no head tracking and was designed exclusively for his stereoscopic 3D movies, the drawings in the patent look remarkably similar to HMD designs from 50 years later.

1961 – Headsight

Headsight was a remote-viewing HMD designed for safely inspecting dangerous situations. It was also the first interactive HMD: by rotating their head, the user could change the direction of the cameras and see the live video feed update in real time. This was one step closer to immersive environments and telepresence.

1963 – Teleyeglasses

Hugo Gernsback bridged the gap between the sections on Science Fiction and HMD Design. He was a prolific publisher who put out over 50 hobbyist magazines based around science, technology, and science fiction. The Hugo Awards for science fiction are named after him. While readers loved him, his reputation among contemporary writers was less than flattering.

Gernsback not only published science fiction, he also wrote about futurism himself, from color television, to cities on the moon, to farming in space. In 1963, he debuted a wireless HMD called the Teleyeglasses, constructed from twin cathode-ray tubes (CRTs) with a rabbit-ear antenna.

1965 – The Ultimate Display

Ivan Sutherland wrote about the Ultimate Display, a computer system that could simulate reality to the point where one could not differentiate between reality and simulation. The concept included haptic inputs and an HMD, making it the first complete description of what Star Trek would later call the Holodeck. We still do not have high-fidelity haptic feedback, though prototypes do exist.

1968 – Sword of Damocles

In 1968, Sutherland demonstrated an HMD with interactive computer graphics: the first true HMD. It was too large and heavy to be comfortably worn, so it was suspended from the ceiling. This gave it its name (Damocles had a sword suspended over his head by a single hair, to show him the perilous nature of those in power).

The computer-generated images were interactive: as the user turned their head, the images would update accordingly. Given the computer processing power of the time, however, the images were simple white vector-line drawings against a black background. The rotation of the head was tracked electromechanically through gears (unlike today's HMDs, which use gyroscopes and light sensors), which no doubt added to the weight. Sutherland would go on to co-found Evans & Sutherland, a leading computer image processing company in the 1970s and 1980s.

1968 – The Mother of All Demos

While this demo did not have an HMD, it did demonstrate virtually every system used in computers today: the mouse, the light pen, networking with audio and video, collaborative word processing, hypertext, and more. Fortunately, the videos of the event are online and well worth a look.

1969 – Virtual Cockpit/Helmet Mounted Sight

Dr. Tom Furness began working on HMDs for the US Air Force in 1967, moving from Head-Up Displays, to Helmet Mounted Displays, to Head Mounted Displays in 1969. At first, the idea was simply to take some of the mental load off the pilot and allow them to focus on the most important instruments. Later, this evolved into linking the pilot's head to the gun turret, allowing them to fire where they looked. The current F-35 Glass Cockpit HMD can be traced directly to his work. In a Glass Cockpit or even a Glass Tank, the pilot or driver is able to see through their plane or tank via an HMD, giving them a complete, unobstructed view of the battlefield through sensors and cameras mounted in the hull. His Retinal Display Systems, which do away with pixels by writing directly onto the eye with lasers, are possibly similar to Magic Leap's solution.

1969 – Artificial Reality

Myron Krueger was a virtual reality computer artist who is credited with coining the term Artificial Reality to describe several of his interactive, computer-powered art installations: GLOWFLOW, METAPLAY, PSYCHIC SPACE, and VIDEOPLACE. If you visited a hands-on science museum from the 1970s through the 1990s, you no doubt experienced variations of his video/computer interactions. Several web and phone camera apps now have similar features built in.

1992 – CAVE

Cave Automatic Virtual Environment (CAVE) is a self-referential acronym. It was the first collaborative space where multiple users could interact in virtual space. The systems used at least three, and sometimes more, stereoscopic 3D projectors covering at least three walls of a room. The projectors created life-sized 3D computer images that the user could walk through. While stereoscopic 3D projectors are inexpensive today, at the time their extremely high cost, coupled with the cost of computers able to create realistic images in real time, meant CAVE systems were relegated to biomedical and automotive research facilities.

1987 – Virtual reality and VPL

Jaron Lanier coined (or popularized, depending on your sources) the term virtual reality. He also designed and built the first complete, commercially available virtual reality system. It included the Dataglove and the EyePhone head-mounted display. The Dataglove would evolve into the Nintendo Powerglove. The Dataglove was able to track hand gestures through a unique trait of fiber optic cable. If you scratch a fiber optic cable and shine light through it while it is straight, very little of the light will escape. But if you bend the scratched cable, light will escape, and the more you bend it, the more light escapes. The glove measured this light loss and used it to calculate finger movements.
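The light-loss idea described above can be illustrated with a small calculation. The following is only a minimal sketch, not VPL's actual method: it assumes a hypothetical sensor that reports received light as a raw count, a two-point calibration (hand flat, finger fully bent), and a linear relationship between lost light and bend angle. The function name, parameters, and the 90-degree maximum are all illustrative assumptions.

```python
def estimate_bend_degrees(reading, straight_reading, bent_reading, max_angle_deg=90.0):
    """Map a light-sensor reading to an approximate finger bend angle.

    All names and the linear model are assumptions for illustration:
    straight_reading is the light received with the finger straight,
    bent_reading is the light received with the finger fully bent.
    """
    if straight_reading <= bent_reading:
        raise ValueError("straight_reading must be greater than bent_reading")

    # Fraction of the calibrated range that has been lost to bending.
    lost_fraction = (straight_reading - reading) / (straight_reading - bent_reading)

    # Clamp so sensor noise outside the calibrated range stays within 0..max angle.
    lost_fraction = min(1.0, max(0.0, lost_fraction))

    return lost_fraction * max_angle_deg


# Example: a reading halfway between the two calibration points.
angle = estimate_bend_degrees(600, straight_reading=1000, bent_reading=200)
print(angle)
```

A real glove would need a per-finger calibration and probably a non-linear curve, since the amount of escaping light is unlikely to grow exactly in proportion to the bend.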

While the first generation of VR tried to use natural, gesture-based input (specifically the hands), today's latest iteration of VR, for the most part, skips hand-based input (with the exception of Leap and, to a very limited extent, HoloLens). My theory is that the new generation of VR developers grew up with a controller in their hands and were very comfortable with that input device, whereas the original VR designers had very little experience with a controller, which is why they felt the need to use natural input.

1989 – Nintendo Powerglove

The Nintendo Powerglove was an accessory designed for the Nintendo Entertainment System (NES). It used a set of three ultrasonic sensors mounted to a TV to track the location of the player's hand. In theory, the player could grab objects by making a fist with their hand in the glove. Tightening and relaxing the hand would change how much electrical resistance was measured, allowing the NES to register a fist or an open hand. Only two games were released for the system, though its cultural impact was far greater.
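The fist detection described above amounts to comparing resistance readings against a threshold. The following is a minimal sketch of that idea, not the Powerglove's actual firmware: it assumes one hypothetical resistance reading per finger, assumes resistance rises as a finger bends, and uses an arbitrary illustrative threshold.

```python
def classify_hand(resistances_ohms, fist_threshold_ohms=30_000.0):
    """Classify a hand pose as "fist" or "open" from per-finger resistance.

    Assumptions for illustration only: resistance increases as a finger
    bends, and a fist means every finger reads at or above the threshold.
    """
    if not resistances_ohms:
        raise ValueError("need at least one finger reading")

    all_bent = all(r >= fist_threshold_ohms for r in resistances_ohms)
    return "fist" if all_bent else "open"


# Example: four fingers, all tightly bent, then one finger relaxed.
print(classify_hand([35_000, 40_000, 32_000, 31_000]))
print(classify_hand([35_000, 12_000, 32_000, 31_000]))
```

A single threshold like this would flicker when a reading hovers near the cutoff, so a practical version would likely add separate "close" and "open" thresholds.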