We'll continue along a similar line to the previous section, in discussing classical statistical methods, but now in a new context. This section focuses on the mean of data that is quantitative, and not necessarily binary. We will demonstrate how to construct confidence intervals for the population mean, as well as several statistical tests that we can perform. Bear in mind throughout this section that we want to infer from a sample mean properties about a theoretical, unseen, yet fixed, population mean. We also want to compare the means of multiple populations, so as to determine whether they are the same or not.

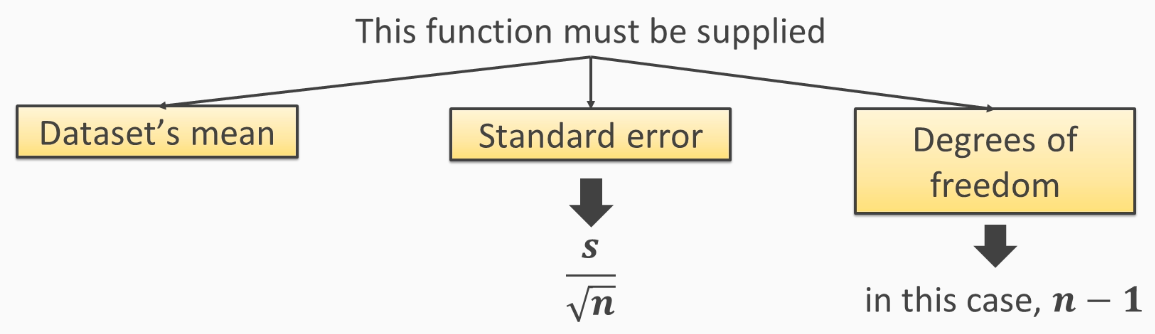

When we assume that the population is a normal distribution, otherwise known as a classic bell curve, then we may use confidence intervals constructed using the t-distribution. These confidence intervals assume a normal distribution but tend to work well for large sample sizes even if the data is not normally distributed. In other words, these intervals tend to be robust. Unfortunately, statsmodels does not have a stable function with an easy user interface for competing these confidence intervals; however, there is a function, called _tconfint_generic(), that can compute them. You need to supply a lot of what this function needs to compute the confidence interval yourself. This means supplying the sample mean, the standard error of the mean, and the degrees of freedom, as shown in the following diagram:

As this looks like an unstable function, this procedure could change in future versions of statsmodels.