Deploying the application on Kubernetes

Like Compose, Kubernetes (often abbreviated K8s) manages multiple containers, with or without dependencies on each other. It can use volumes for data persistence and provides CLI commands to manage the life cycle of the containers. The main difference is that Kubernetes can run containers in a distributed setup, across a cluster of nodes, and groups its containers into Pods.
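To make the idea of a Pod more concrete, the following is a minimal sketch, not the chapter's actual configuration, that builds a single-container Pod manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The function `build_pod_manifest`, the Pod name `flask-app`, the image `flask-app:latest`, and port `5000` are all hypothetical placeholders chosen for illustration.

```python
import json


def build_pod_manifest(name: str, image: str, port: int) -> dict:
    """Build a minimal single-container Pod manifest (illustrative only)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # A Pod wraps one or more containers; this sketch uses just one.
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical name, image, and port; replace them with your own values.
    manifest = build_pod_manifest("flask-app", "flask-app:latest", 5000)
    print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file such as `pod.json` and running `kubectl apply -f pod.json` would ask the cluster to create the Pod, assuming the image is available to the cluster.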

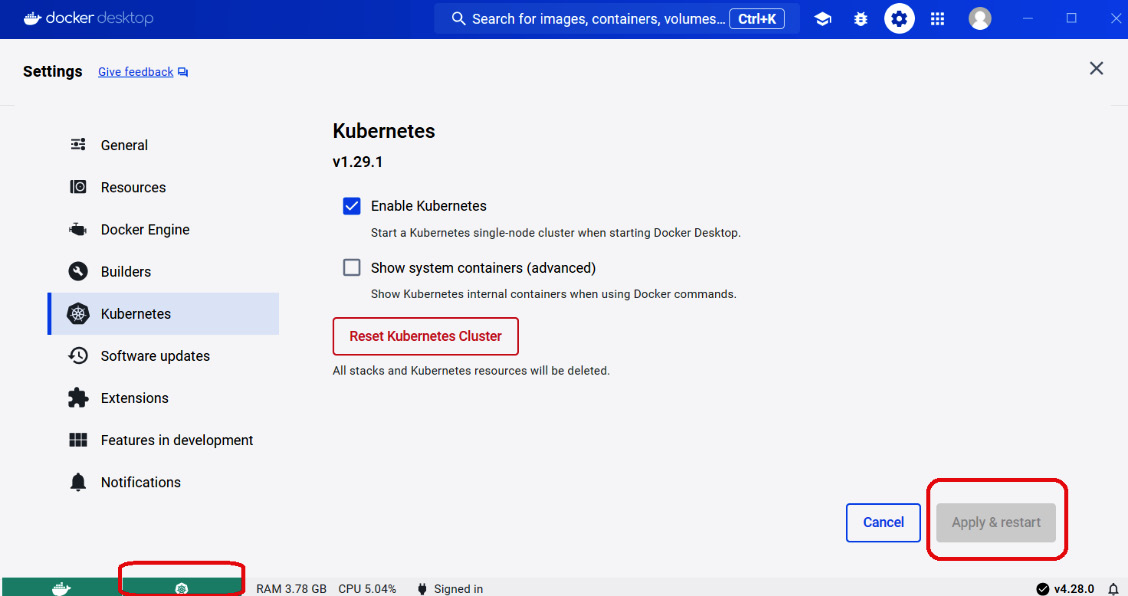

Among the many ways to install Kubernetes, this chapter utilizes the Kubernetes feature in Docker Desktop’s Settings, as shown in Figure 11.16:

Figure 11.16 – Kubernetes in Docker Desktop

Check the Enable Kubernetes checkbox in the Settings area and click the Apply & restart button in the lower-right portion of the dashboard. Depending on the number of containers running on Docker Engine, it may take a while for the Kubernetes indicator in the lower-left corner of the dashboard to turn green, which shows that Kubernetes is running.
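Besides watching the dashboard indicator, the cluster's state can also be checked from a script. The sketch below is one possible approach, assuming that `kubectl` is on the PATH and that the cluster is reachable through a kubectl context named `docker-desktop`; adjust the context name if your setup differs. The helper names `ready_nodes` and `cluster_is_ready` are hypothetical and introduced only for this example.

```python
import subprocess


def ready_nodes(kubectl_output: str) -> list[str]:
    """Return names of nodes whose STATUS column is exactly 'Ready'.

    Expects the text printed by `kubectl get nodes --no-headers`, where the
    first column is the node name and the second is its status. Statuses such
    as 'NotReady' or 'Ready,SchedulingDisabled' are deliberately excluded.
    """
    ready = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[1] == "Ready":
            ready.append(fields[0])
    return ready


def cluster_is_ready(context: str = "docker-desktop") -> bool:
    """Return True if kubectl reports at least one Ready node.

    The default context name is an assumption about the local setup.
    """
    try:
        result = subprocess.run(
            ["kubectl", "--context", context, "get", "nodes", "--no-headers"],
            capture_output=True,
            text=True,
            check=False,
        )
    except FileNotFoundError:
        # kubectl is not installed or not on the PATH.
        return False
    return result.returncode == 0 and bool(ready_nodes(result.stdout))


if __name__ == "__main__":
    print("Kubernetes ready:", cluster_is_ready())
```

Running the script while Kubernetes is still starting should report `False`; it can simply be run again after the indicator in the dashboard changes.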

If the Kubernetes engine fails, click the Reset Kubernetes...