Exploring interactive applications – chatbots and voice assistants

We can broadly divide NLP applications into two categories: interactive applications, where the fundamental unit of analysis is typically a conversation, and non-interactive applications, where the unit of analysis is a document or set of documents.

Interactive applications include those where a user and a system are talking or texting to each other in real time. Familiar interactive applications include chatbots and voice assistants, such as smart speakers and customer service applications. Because the user is present and waiting, these applications require very fast, almost immediate, responses from the system. Users will typically not tolerate more than a couple of seconds’ delay, since they’re used to near-immediate replies when talking with other people. Another characteristic of these applications is that the user inputs are normally quite short: only a few words, or a few seconds long in the case of spoken interaction. This means that analysis techniques that depend on having a large amount of text available will not work well for these applications.

An implementation of an interactive application will most likely need one or more of the other components from the preceding system diagram, in addition to NLP itself. Clearly, applications with spoken input will need speech recognition, and applications that respond to users with speech or text will require natural language generation, plus text-to-speech if the system’s responses are spoken. Any application that does more than answer single questions will also need some form of dialog management so that it can keep track of what the user has said in previous utterances and take that information into account when interpreting later utterances.

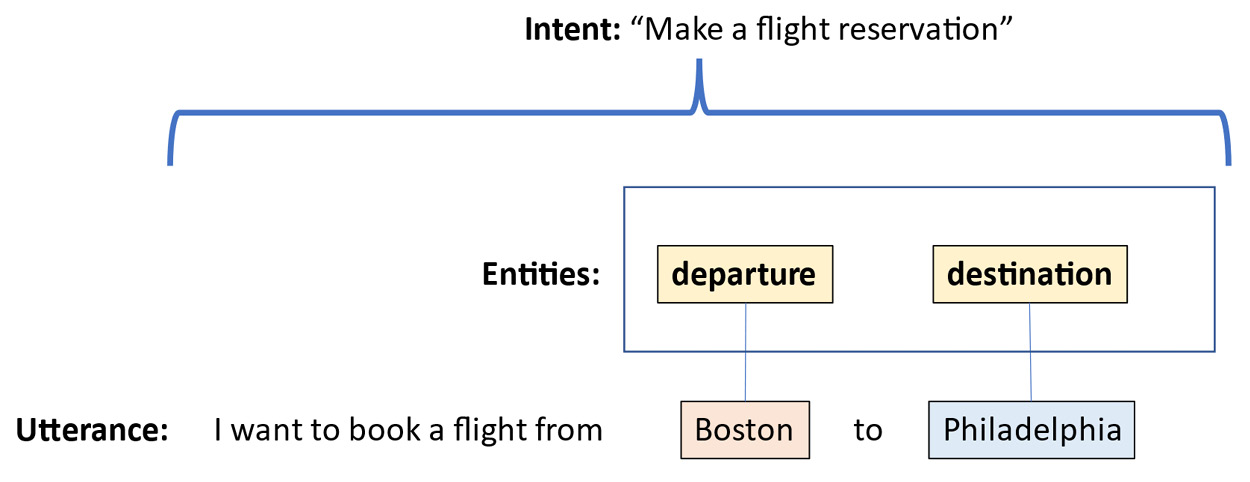

Intent recognition is an important aspect of interactive natural language applications, which we will be discussing in detail in Chapter 9 and Chapter 14. An intent is essentially a user’s goal or purpose in making an utterance. Clearly, knowing what the user intended is central to providing the user with correct information. In addition to the intent, interactive applications normally have a requirement to also identify entities in user inputs, where entities are pieces of additional information that the system needs in order to address the user’s intent. For example, if a user says, “I want to book a flight from Boston to Philadelphia,” the intent would be make a flight reservation, and the relevant entities are the departure and destination cities. Since the travel dates are also required in order to book a flight, these are also entities. Because the user didn’t mention the travel dates in this utterance, the system should then ask the user about the dates, in a process called slot filling, which will be discussed in Chapter 8. The relationships between entities, intents, and utterances can be seen graphically in Figure 1.3:

Figure 1.3 – The intent and entities for a travel planning utterance

Note that the intent applies to the overall meaning of the utterance, but the entities represent the meanings of only specific pieces of the utterance. This distinction is important because it affects the choice of machine learning techniques used to process these kinds of utterances. Chapter 9 will go into this topic in more detail.
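To make the distinction concrete, the following is a minimal Python sketch of how an interpreted utterance and the slot-filling check might be represented. The intent name, the slot names, and the helper functions are all hypothetical and chosen only for illustration; they are not taken from any particular toolkit, and a real system would obtain the intent and entities from a trained model rather than hardcoding them.

```python
from dataclasses import dataclass, field

# Hypothetical slots that a flight-booking intent needs before it can act.
REQUIRED_SLOTS = {
    "make_flight_reservation": [
        "departure_city",
        "destination_city",
        "travel_date",
    ],
}


@dataclass
class Interpretation:
    utterance: str
    intent: str  # describes the utterance as a whole
    entities: dict = field(default_factory=dict)  # describe pieces of it


def missing_slots(interp):
    """Return the required slots the user has not supplied yet, in order."""
    required = REQUIRED_SLOTS.get(interp.intent, [])
    return [slot for slot in required if slot not in interp.entities]


def next_prompt(interp):
    """Ask for the first missing slot, or return None if nothing is missing."""
    missing = missing_slots(interp)
    if not missing:
        return None
    return f"What is your {missing[0].replace('_', ' ')}?"


# The example utterance from the text, with the analysis filled in by hand.
interp = Interpretation(
    utterance="I want to book a flight from Boston to Philadelphia",
    intent="make_flight_reservation",
    entities={"departure_city": "Boston", "destination_city": "Philadelphia"},
)

print(missing_slots(interp))
print(next_prompt(interp))
```

In this sketch, the intent is a single label for the whole utterance, while each entity is tied to one piece of it. The travel date is the only required slot that is still empty, so it is the one the system asks about next.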

Generic voice assistants

The generic voice assistants that are accessed through smart speakers or mobile phones, such as Amazon Alexa, Apple Siri, and Google Assistant, are familiar to most people. Generic assistants are able to provide users with general information, including sports scores, news, weather, and information about prominent public figures. They can also play music and control the home environment. Corresponding to these functions, generic assistants recognize intents such as get weather forecast for <location>, where <location> represents an entity that helps fill out the get weather forecast intent. Similarly, “What was the score for <team name> game?” has the intent get game score, with the particular team’s name as the entity. These applications have broad but generally shallow knowledge. Their interactions with users are usually based on one or, at most, a couple of related inputs, so for the most part they aren’t capable of carrying on an extended conversation.
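The idea of an intent with an entity placeholder can be illustrated with a small Python sketch. It uses hand-written regular expressions as stand-ins for the templates above. This is an assumption made purely for illustration: commercial assistants rely on trained models rather than patterns like these, and the intent names, entity names, and the `interpret` function are hypothetical.

```python
import re

# Hypothetical templates: each named group plays the role of an entity
# placeholder such as <location> or <team name>.
TEMPLATES = {
    "get_weather_forecast": re.compile(
        r"what(?:'s| is) the weather (?:forecast )?(?:in|for) "
        r"(?P<location>.+?)\??$",
        re.IGNORECASE,
    ),
    "get_game_score": re.compile(
        r"what was the score (?:for|of) (?:the )?(?P<team_name>.+?) game\??$",
        re.IGNORECASE,
    ),
}


def interpret(utterance):
    """Return (intent, entities) for the first matching template.

    Returns (None, {}) when no template matches the utterance.
    """
    text = utterance.strip()
    for intent, pattern in TEMPLATES.items():
        match = pattern.match(text)
        if match:
            return intent, match.groupdict()
    return None, {}


print(interpret("What is the weather forecast for Boston?"))
print(interpret("What was the score for the Red Sox game?"))
print(interpret("Tell me a joke"))
```

Patterns like these break down quickly as users vary their wording, which is one reason the machine learning techniques covered later in the book are used instead.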

Generic voice assistants are mainly closed and proprietary. This means that there is very little scope for developers to add general capabilities to the assistant, such as adding a new language. However, an open source assistant called Mycroft is also available. It allows developers to add capabilities to the underlying system, rather than only use the tools that the platforms provide.

Enterprise assistants

In contrast to the generic voice assistants, some interactive applications have deep information about a specific company or other organization. These are enterprise assistants. They’re designed to perform tasks specific to a company, such as customer service, or to provide information about a government or educational organization. They can do things such as check the status of an order, give bank customers account information, or let utility customers find out about outages. They are often connected to extensive databases of customer or product information, so they can provide deep but mainly narrow information about their areas of expertise. For example, they can tell you whether a particular company’s products are in stock, but they don’t know the outcome of your favorite sports team’s latest game, which is the kind of question that generic assistants handle well.

Enterprise voice assistants are typically developed with commercial toolkits such as the Alexa Skills Kit, Microsoft LUIS, Google Dialogflow, or Nuance Mix, although open source toolkits such as Rasa (https://rasa.com/) are also available. These toolkits are powerful and easy to use. Developers only need to supply examples of the intents and entities that the application will have to find in users’ utterances in order to understand what the users want to do.
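As a rough picture of what such examples look like, here is a toolkit-neutral Python sketch. The data layout, the intent and entity names, and the `annotate` and `examples_per_intent` helpers are hypothetical; each real toolkit defines its own format, so this only shows the kind of information a developer supplies: an utterance, its intent, and the location of each entity in the text.

```python
from collections import Counter


def annotate(text, intent, **entity_values):
    """Build one training example, locating each entity value in the text."""
    entities = []
    for entity_type, value in entity_values.items():
        start = text.index(value)  # raises ValueError if the value is absent
        entities.append(
            {"type": entity_type, "start": start, "end": start + len(value)}
        )
    return {"text": text, "intent": intent, "entities": entities}


# Hypothetical examples for an enterprise assistant.
TRAINING_EXAMPLES = [
    annotate("where is my order 12345", "check_order_status",
             order_number="12345"),
    annotate("has order 98765 shipped yet", "check_order_status",
             order_number="98765"),
    annotate("is there a power outage in Springfield", "check_outage",
             location="Springfield"),
]


def examples_per_intent(examples):
    """Count how many examples were supplied for each intent."""
    return dict(Counter(example["intent"] for example in examples))


print(examples_per_intent(TRAINING_EXAMPLES))
```

Storing entities as character offsets, rather than as bare strings, keeps each annotation tied to a specific piece of the utterance, which matches the way entities were described earlier in this section.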

Similarly, text-based chatbots can perform the same kinds of tasks that voice assistants perform, but they get their information from users in the form of text rather than voice. Chatbots are becoming increasingly common on websites. They can supply much of the information available on the website, but because the user can simply state what they’re interested in, they save the user from having to search through a possibly very complex website. The same toolkits that are used for voice assistants can also be used in many cases to develop text-based chatbots.

In this book, we will not spend much time on the commercial toolkits because there is very little coding needed to create usable applications with them. Instead, we’ll focus on the technologies that underlie the commercial toolkits, which will enable developers to implement applications without relying on commercial systems.

Translation

The third major category of interactive application is translation. Unlike the assistants described in the previous sections, translation applications help users communicate with other people. That is, the user isn’t having a conversation with the assistant but with another person. In effect, the application performs the role of an interpreter, translating between two different human languages so that two people who don’t speak a common language can talk with each other. These applications can be based on either spoken or typed input. Spoken input is faster and more natural, but speech recognition errors, which are common, can significantly interfere with the smoothness of communication between people.

Interactive translation applications are most practical when the conversation is about simple topics such as tourist information. Conversations about more complex topics, such as business negotiations, are less likely to be successful because their complexity leads to more speech recognition and translation errors.

Education

Finally, education is an important application of interactive NLP. Language learning is probably the most natural educational application. For example, there are applications that help students converse in a new language that they’re learning. These applications have advantages over the alternative of practicing conversations with other people because applications don’t get bored, they’re consistent, and users won’t be as embarrassed if they make mistakes. Other educational applications include assisting students with learning to read, learning grammar, or tutoring in any subject.

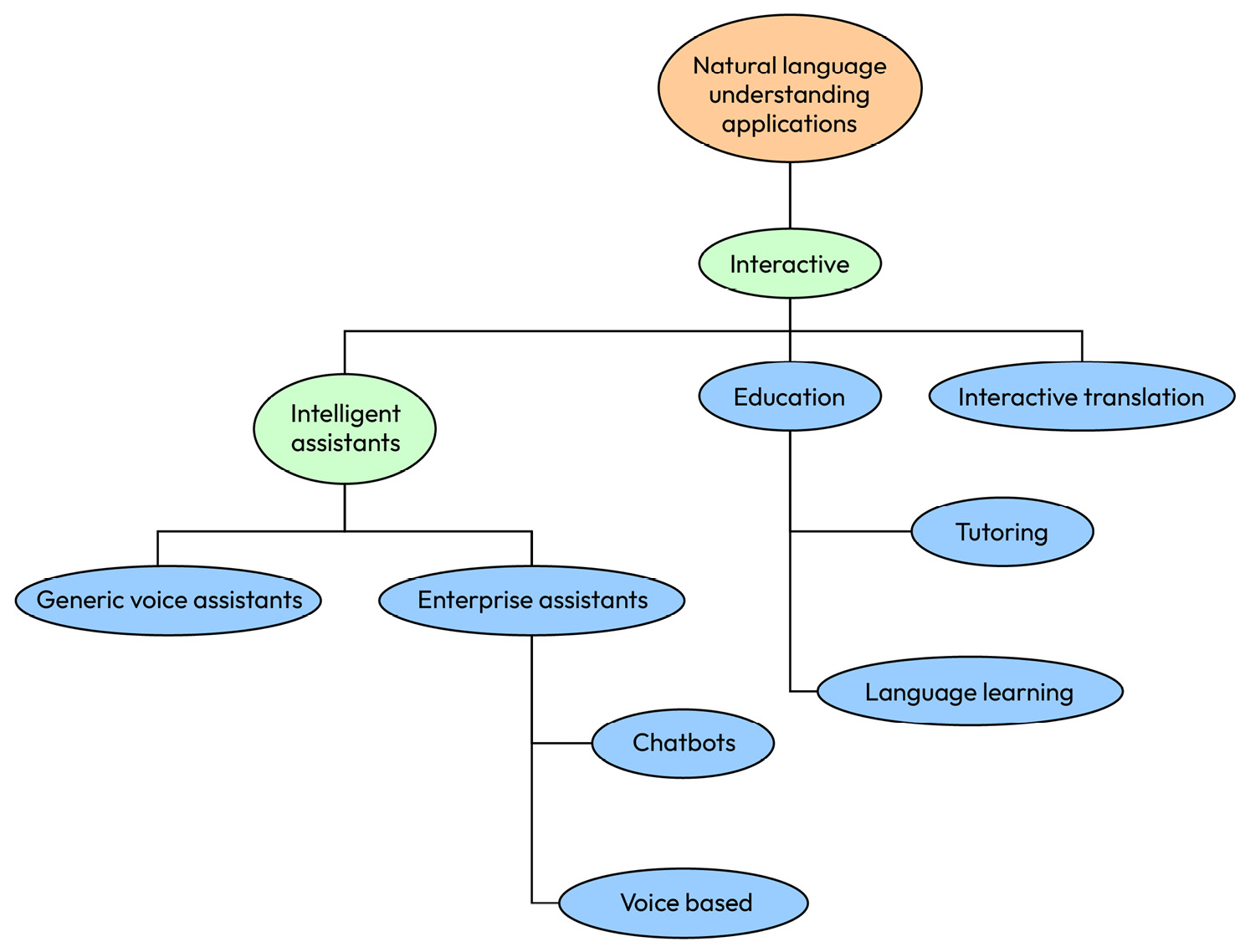

Figure 1.4 is a graphical summary of the different kinds of interactive applications and their relationships:

Figure 1.4 – A hierarchy of interactive applications

So far, we’ve covered interactive applications, where an end user is directly speaking to an NLP system, or typing into it, in real time. These applications are characterized by short user inputs that need quick responses. Now, we will turn to non-interactive applications, where speech or text is analyzed when there is no user present. The material to be analyzed can be arbitrarily long, but the results do not have to be available immediately.