Introducing Word2Vec

The first step to comprehending the DeepWalk algorithm is to understand its major component: Word2Vec.

Word2Vec has been one of the most influential deep learning techniques in NLP. Published in 2013 by Tomas Mikolov et al. (Google) in two different papers, it proposed a new technique for translating words into vectors (also known as embeddings) using large text datasets. These representations can then be used in downstream tasks, such as sentiment classification. It is also one of the rare examples of a patented and popular ML architecture.
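
To make the idea of a word embedding concrete, here is a minimal sketch that assumes a tiny, hand-written vocabulary. Each word maps to a fixed-length vector, and a sentence is turned into a single feature vector by averaging its word vectors, a simple baseline input for a downstream model such as a sentiment classifier. The vectors are made-up placeholders, not the output of a trained Word2Vec model, and `EMBEDDINGS` and `embed_sentence` are hypothetical names used only for illustration.

```python
# Hypothetical toy embeddings: a real Word2Vec model would learn these vectors
# from a large text corpus. These numbers are illustrative placeholders only.
EMBEDDINGS: dict[str, list[float]] = {
    "good": [0.5, 1.0, 0.0],
    "great": [0.75, 1.0, 0.25],
    "bad": [-0.5, -1.0, 0.0],
    "movie": [0.0, 0.25, 1.0],
}


def embed_sentence(sentence: str) -> list[float]:
    """Average the vectors of the known words in a sentence (hypothetical helper).

    Out-of-vocabulary words are skipped; if no word is known,
    an all-zero vector of the embedding dimension is returned.
    """
    dim = len(next(iter(EMBEDDINGS.values())))
    vectors = [EMBEDDINGS[w] for w in sentence.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return [0.0] * dim
    # zip(*vectors) groups the i-th component of every vector together.
    return [sum(component) / len(vectors) for component in zip(*vectors)]


print(embed_sentence("Great movie"))  # [0.375, 0.625, 0.625]
```

Averaging discards word order, so it is only a baseline; the point is that once words are vectors, standard numeric models can consume them directly.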

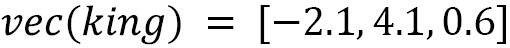

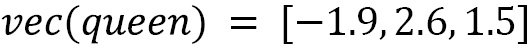

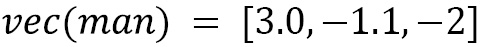

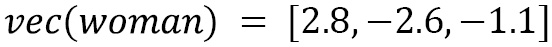

Here are a few examples of how Word2Vec can transform words into vectors:

We can see in this example that, in terms of Euclidean distance, the word vectors for king and queen are closer than those for king and woman (4.37 versus 8.47). In general, other metrics, such as the popular cosine similarity, are used to measure...
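
The two metrics mentioned above can be computed in a few lines. The following is a minimal sketch using only the Python standard library; the 2-D vectors are hypothetical values chosen so the arithmetic is easy to check by hand, not the word vectors from the example. Euclidean distance is the square root of the summed squared component differences, while cosine similarity is the dot product divided by the product of the vector norms, so it depends only on the angle between the vectors, not on their lengths.

```python
import math


def euclidean_distance(a: list[float], b: list[float]) -> float:
    # math.dist (Python 3.8+) computes sqrt(sum((a_i - b_i) ** 2)).
    return math.dist(a, b)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Dot product divided by the product of the norms:
    # 1.0 = same direction, 0.0 = orthogonal, -1.0 = opposite.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical vectors (not from the example above).
u = [3.0, 4.0]
v = [6.0, 8.0]  # same direction as u, twice as long
w = [4.0, 3.0]  # close to u in space, but pointing in a slightly different direction

print(euclidean_distance(u, v))            # 5.0
print(round(euclidean_distance(u, w), 3))  # 1.414
print(cosine_similarity(u, v))             # 1.0
print(cosine_similarity(u, w))             # 0.96
```

The two metrics can disagree: `w` is closer to `u` in Euclidean terms, yet `v` is more similar by cosine because it points in exactly the same direction. Ignoring vector length in this way is one reason cosine similarity is a common choice for comparing embeddings.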