The rise of Docker

Not very often does a technology come along that is adopted so widely across an entire industry. Since its first public release in March 2013, Docker has gained the support of not only end users, like you and me, but also industry leaders such as Amazon, Microsoft, and Google.

Docker is currently using the following sentence on their website to describe why you would want to use it:

"Docker provides an integrated technology suite that enables development and IT operations teams to build, ship, and run distributed applications anywhere."

There is a meme, based on the disaster girl photo, which sums up why such a seemingly simple explanation is actually quite important:

So, as simple as Docker's description sounds, it has actually been a utopia for most developers and IT operations teams for a number of years to have a tool that can ensure that an application works consistently across the following three main stages of its life cycle:

- Development

- Staging and Preproduction

- Production

To illustrate why this used to be a problem before Docker arrived on the scene, let's look at how services were traditionally configured and deployed. People typically used a mixture of dedicated machines and virtual machines, so let's look at these in more detail.

While it is possible to use configuration management tools, such as Puppet, or orchestration tools, such as Ansible, to maintain consistency between server environments, it is difficult to enforce that consistency across both servers and a developer's workstation.

Dedicated machines

Traditionally, a dedicated machine is a single piece of hardware that has been configured to run your applications. While the applications have direct access to the hardware, you are constrained by the binaries and libraries you can install on a dedicated machine, as they have to be shared across the entire machine.

To illustrate one potential problem Docker has fixed, let's say you had a single dedicated server that was running your PHP applications. When you initially deployed the dedicated machine, all three of the applications that make up your e-commerce website worked with PHP 5.6, so there was no problem with compatibility.

Your development team has been slowly working through the three PHP applications you have deployed on your host to make them work with PHP 7, as this will give them a good boost in performance. However, there is a single bug that they have not been able to resolve with App2, which means that it will not run under PHP 7 without crashing when a user adds an item to their shopping cart.

If you have a single host running your three applications, you will not be able to upgrade from PHP 5.6 to PHP 7 until your development team has resolved the bug with App2, unless you do one of the following:

- Deploy a new host running PHP 7 and migrate App1 and App3 to it; this could be both time consuming and expensive

- Deploy a new host running PHP 5.6 and migrate App2 to it; again this could be both time consuming and expensive

- Wait until the bug has been fixed; the performance improvements that the upgrade from PHP 5.6 to PHP 7 brings to the applications could increase sales, and there is no ETA for the fix

If you go for either of the first two options, you also need to ensure that the new dedicated machine either matches the developer's PHP 7 environment or is configured in exactly the same way as your existing environment; after all, you don't want to introduce further problems by having a poorly configured machine.
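The constraint in this scenario can be sketched in a few lines of Python. This is only an illustration: the compatibility table is an assumption derived from the scenario (App1 and App3 already work with PHP 7, App2 does not), and the function names are hypothetical.

```python
# Assumed compatibility data based on the scenario described above.
SUPPORTED_PHP = {
    "App1": {"5.6", "7.0"},
    "App2": {"5.6"},  # crashes under PHP 7 until the shopping cart bug is fixed
    "App3": {"5.6", "7.0"},
}


def versions_for_shared_host(apps):
    """Return the PHP versions that every listed app can run on.

    On a dedicated machine the PHP binaries are shared, so only a
    version supported by all of the apps can be installed.
    """
    return set.intersection(*(SUPPORTED_PHP[app] for app in apps))


def blockers(target_version, apps):
    """Return the apps that prevent the host from moving to target_version."""
    return sorted(app for app in apps if target_version not in SUPPORTED_PHP[app])


if __name__ == "__main__":
    all_apps = ["App1", "App2", "App3"]
    print(sorted(versions_for_shared_host(all_apps)))  # ['5.6']
    print(blockers("7.0", all_apps))                   # ['App2']
```

With all three applications on one host, the only common version is PHP 5.6, and App2 is the single blocker for the upgrade. Moving App2 elsewhere, as in the second option, leaves a host that could run either version.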

Virtual machines

One solution to the scenario detailed earlier would be to slice up your dedicated machine's resources and make them available to the application by installing a hypervisor such as the following:

- KVM: http://www.linux-kvm.org/

- XenSource: http://www.xenproject.org/

- VMware vSphere: http://www.vmware.com/uk/products/vsphere-hypervisor/

Once installed, you can then install your binaries and libraries on each of the different virtual hosts and also install your applications on each one.

Going back to the scenario given in the dedicated machine section, you will be able to upgrade to PHP 7 on the virtual machines with App1 and App3 installed, while leaving App2 untouched and functional while the development team works on the fix.

Great, so what is the catch? From the developer's view, there is none, as they have their applications running with the PHP versions that work best for them; however, from an IT operations point of view:

- More CPU, RAM, and disk space: Each of the virtual machines will require additional resources, as the overhead of running three guest operating systems, as well as the three applications, has to be taken into account

- More management: IT operations now need to patch, monitor, and maintain four machines, the dedicated host machine along with three virtual machines, whereas before they only had a single dedicated host

As earlier, you also need to ensure that the configuration of the three virtual machines that are hosting your applications matches the configuration that the developers have been using during the development process; again, you do not want to introduce additional problems due to configuration and process drift between departments.
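To make the operational cost concrete, here is a rough back-of-the-envelope sketch in Python. All of the memory figures are invented for illustration and are not measurements; only the machine counts (one dedicated host versus one host plus three virtual machines) come from the scenario.

```python
from dataclasses import dataclass

# Assumed, purely illustrative figures (not measurements).
HOST_OS_RAM_MB = 512
GUEST_OS_RAM_MB = 512
APP_RAM_MB = 256


@dataclass
class Footprint:
    ram_mb: int
    machines_to_manage: int


def dedicated(n_apps):
    """One host operating system shared by every application."""
    return Footprint(HOST_OS_RAM_MB + n_apps * APP_RAM_MB, 1)


def virtualised(n_apps):
    """One host plus a full guest operating system per application."""
    ram = HOST_OS_RAM_MB + n_apps * (GUEST_OS_RAM_MB + APP_RAM_MB)
    return Footprint(ram, 1 + n_apps)


if __name__ == "__main__":
    print(dedicated(3))
    print(virtualised(3))
```

Under these assumed numbers, the three-application setup needs 1,280 MB and one machine to maintain when dedicated, against 2,816 MB and four machines when virtualised. The absolute values do not matter; the point is that each guest operating system adds both resource and management overhead.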

Dedicated versus virtual machines

The following diagram shows the how a typical dedicated and virtual machine host would be configured:

As you can see, the biggest differences between the two are quite clear. You are making a trade-off between resource utilization and being able to run your applications using different binaries/libraries.

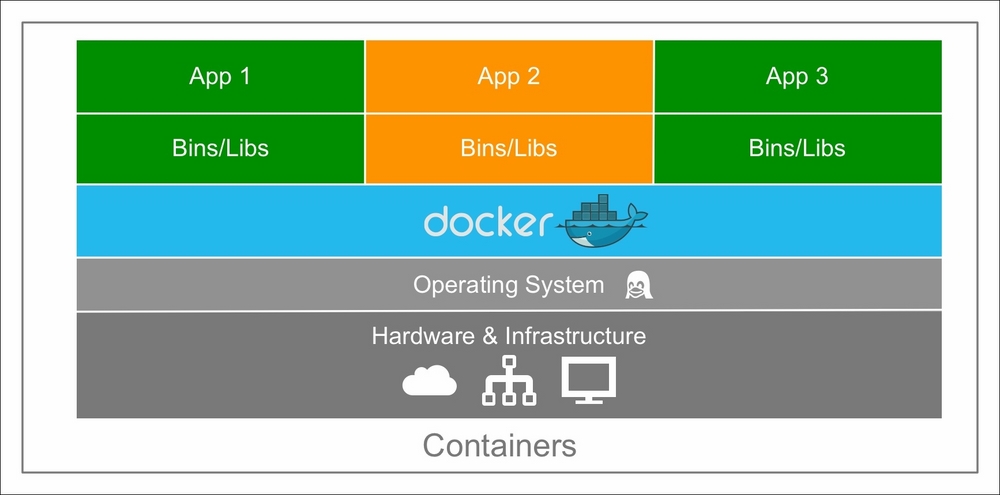

Containers

Now that we have covered the way in which our applications have traditionally been deployed, let's look at what Docker adds to the mix.

Let's go back to our scenario of the three applications running on a single host machine. Installing Docker on the host and then deploying each of the applications as a container on this host gives you the benefits of the virtual machine while vastly reducing the footprint; that is, it removes the need for the hypervisor and guest operating system completely and replaces them with a slimline interface directly into the host machine's kernel.

The advantages this gives both the IT operations and development teams are as follows:

- Low overhead: As mentioned already, the resource and management overhead for the IT operations team is lower

- Development provides the containers: Rather than relying on the IT operations team to configure each of the three application environments to match the development environment, the developers can simply pass their containers to be put into production

As you can see from the following diagram, the layers between the application and host operating system have been reduced:

All of this means that the need to use the disaster girl meme at the beginning of this chapter should now be redundant, as the development team is shipping the application to the operations team in a container with all the configuration, binaries, and libraries intact, which means that if it works in development, it will work in production.

This may seem too good to be true, and to be honest, there is a "but". For most web applications or applications that are pre-compiled static binaries, you shouldn't have a problem.

However, as Docker shares resources with the underlying host machine, such as the kernel version, if your application needs to be compiled or has a reliance on certain libraries that are only compatible with the shared resources, then you will have to deploy your containers on a like-for-like operating system, and in some cases, hardware.
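Because a container uses the host's kernel rather than one of its own, an application with kernel-specific requirements could check what it is actually running on at startup. The following Python sketch shows one way this might look; the minimum version of 3.10 and the helper names are assumptions chosen for illustration, and the parsing expects a Linux-style release string.

```python
import platform
import re


def parse_kernel(release):
    """Extract (major, minor) from a release string such as '4.4.0-21-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_at_least(release, minimum):
    """Return True if the kernel release meets the (major, minor) minimum."""
    return parse_kernel(release) >= minimum


if __name__ == "__main__":
    # Inside a container this reports the host's kernel, not a separate guest kernel.
    release = platform.release()
    required = (3, 10)  # hypothetical requirement for this example
    print(release, kernel_at_least(release, required))
```

The same image can therefore pass this check on one host and fail it on another, which is why like-for-like hosts matter for this class of application.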

Docker has tried to address this issue with the acquisition of a company called Unikernel Systems in January 2016. At the time of writing this book, not a lot is known about how Docker is planning to integrate this technology into their core product, if at all. You can find out more about this technology at https://blog.docker.com/2016/01/unikernel/.