Understanding containers and Docker

Following this comparison between monolithic and microservice architectures, you should now see that the microservice architecture is the one that best fits agility and DevOps. It is the architecture we will discuss throughout the book, because it is the one that Kubernetes manages well.

Now, we will move on to discuss why Docker, a container engine for Linux, is a good option for running microservices. If you already know Docker well, you can skip this section. Otherwise, I suggest that you read through it carefully.

Understanding why Docker is good for microservices

Recall the two important aspects of the microservice architecture:

- Each microservice can have its own technical environment and dependencies.

- At the same time, it must be decoupled from the operating system it's running on.

Let's put the latter point aside for the moment and discuss the first one: two microservices of the same app can be developed in two different languages, or in the same language but against two different versions of it. Now, let's say that you want to deploy these two microservices on the same Linux machine. That would be a nightmare.

The reason for this is that you'll have to install multiple versions of the different runtimes, as well as their dependencies, and the two microservices might require different or overlapping versions of the same packages. Additionally, all of this will live on the same host operating system. Now, let's imagine you want to remove one of these two microservices from the machine to deploy it on another server, and then clean the former machine of all the dependencies used by that microservice. Of course, if you are a talented Linux engineer, you'll succeed in doing this. However, for most people, the risk of conflict between the dependencies is huge, and in the end, you might just make your app unavailable while running such a nightmarish infrastructure.
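To make the conflict concrete, here is a minimal Python sketch that models two microservices sharing one host. The service names and version pins are hypothetical and only serve the illustration; the function simply reports every dependency that the services require in more than one version.

```python
from collections import defaultdict

# Hypothetical services and version pins, for illustration only.
SERVICES = {
    "orders": {"python": "3.8", "openssl": "1.1"},
    "billing": {"python": "3.11", "openssl": "1.1"},
}


def find_conflicts(services):
    """Return the dependencies that are required in more than one version."""
    versions = defaultdict(set)
    for deps in services.values():
        for name, version in deps.items():
            versions[name].add(version)
    # A dependency conflicts when the services disagree on its version.
    return {name: sorted(found) for name, found in versions.items() if len(found) > 1}


if __name__ == "__main__":
    for name, found in find_conflicts(SERVICES).items():
        print(f"{name}: conflicting versions {found}")
```

With only two services, the shared host already needs two Python runtimes side by side. Every additional microservice makes this bookkeeping harder.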

There is a solution to this: you could build a machine image for each microservice and then put each microservice on a dedicated virtual machine. In other words, you refrain from deploying multiple microservices on the same machine. However, with this approach, you will need as many machines as you have microservices. Of course, with the help of AWS or GCP, it's going to be easy to bootstrap tons of servers, each of them tasked with running one and only one microservice, but it would be a huge waste of money not to share the computing power offered by each host among several microservices.

That's why the second requirement exists: microservices should be decoupled from the operating system they are running on. To achieve this, we use Docker containers.

Understanding the benefit of Docker container isolation

Docker allows you to manage containers, which are, in fact, processes isolated by Linux namespaces. Docker's job is to expose a user-friendly API to manage these containers, which behave like small virtual machines but run directly on top of the Linux kernel rather than on a hypervisor. By installing Docker on your Linux system, you therefore add a layer of virtualization on top of your host machine. Your microservices are going to be launched on top of this layer, not directly on the host system, whose sole role will be to run Docker.

Since containers are isolated, you can run as many containers as you want and have them run applications written in different languages without any conflict. Microservice relocation becomes as easy as stopping a running container and launching another one from the same image on another machine.
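As a rough sketch of the relocation described above, the following Python function builds the commands needed to move a container from one host to another. The host names, container name, and image name are hypothetical, and running the commands over `ssh` is an assumption of this sketch. The commands are only built and printed here, not executed.

```python
import shlex


def relocation_commands(name, image, old_host, new_host):
    """Build the commands that move a container from old_host to new_host.

    The commands are returned, not executed. Reaching the hosts over ssh
    is an assumption; any remote execution mechanism would do.
    """
    stop = ["ssh", old_host, "docker", "stop", name]
    remove = ["ssh", old_host, "docker", "rm", name]
    # Start a fresh container from the same image on the new host.
    start = ["ssh", new_host, "docker", "run", "-d", "--name", name, image]
    return [stop, remove, start]


if __name__ == "__main__":
    # All names below are placeholders for illustration.
    for cmd in relocation_commands("orders", "example/orders:1.0", "host-a", "host-b"):
        print(shlex.join(cmd))
```

Notice that nothing in these commands mentions runtimes or libraries: everything the microservice needs travels inside the image.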

The usage of Docker with microservices offers three main benefits:

- It reduces the footprint on the host system.

- It lets different microservices share the host system without conflicts between them.

- It removes coupling between the microservice and the host system.

Once a microservice has been containerized, its coupling with the host operating system is eliminated. The microservice will only depend on the container in which it operates. Since a container is much lighter than a real full-featured Linux operating system, it will be easy to share and deploy on many different machines. Therefore, the container and your microservice will work on any machine that is running Docker.

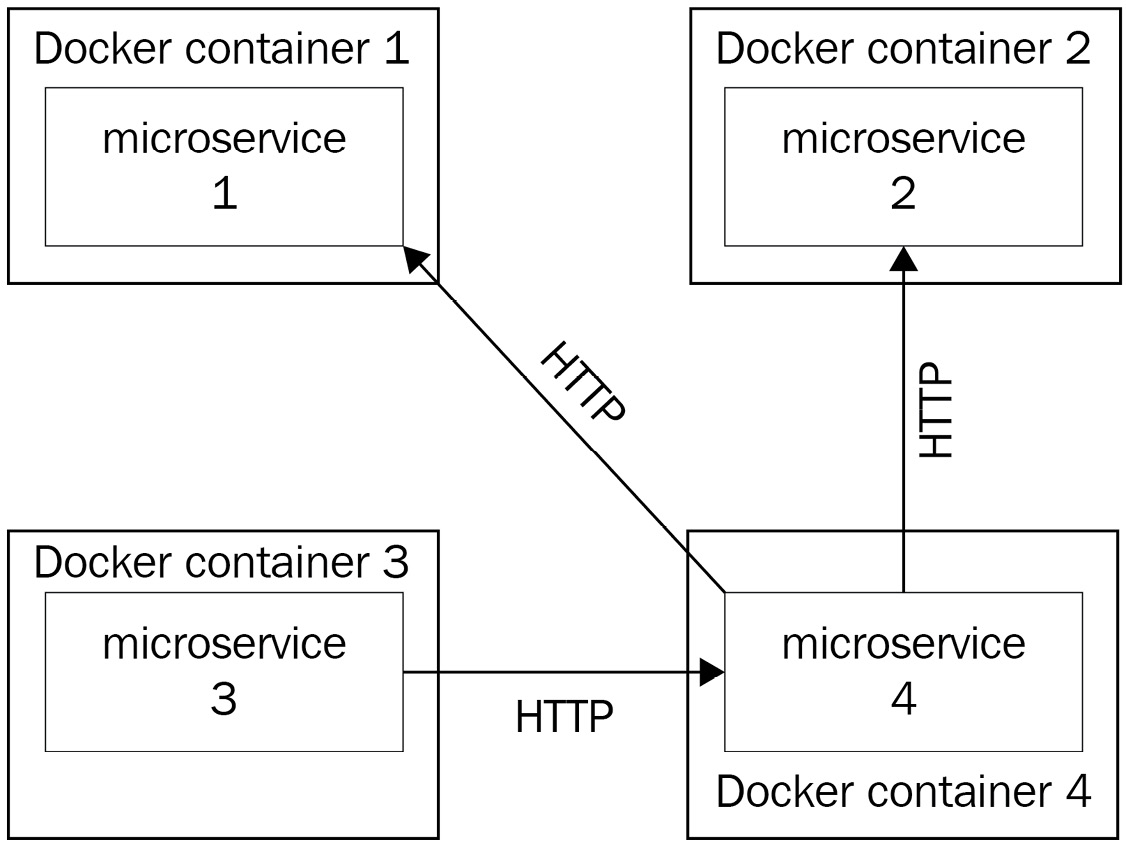

The following diagram shows a microservice architecture where each microservice is actually wrapped by a Docker container:

Figure 1.4 – A microservice application where all microservices are wrapped by a Docker container; the life cycle of the app becomes tied to the container, and it is easy to deploy it on any machine that is running Docker

Docker fits well with the DevOps methodology, too. By developing locally in a Docker container, which will later be built and deployed in production, you ensure that you develop in the same environment as the one that will eventually run the application.

Docker is not only capable of managing the life cycle of a container; it is actually an entire ecosystem around containers. It can manage networks and the intercommunication between different containers, and all of these features respond particularly well to the properties of the microservice architecture that we mentioned earlier.

By using the cloud and Docker together, you can build a very strong infrastructure to host your microservices. The cloud will give you as many machines as you want. You simply need to install Docker on each of them, and you'll be able to deploy multiple containerized microservices on each of these machines.

Docker is a very nice tool on its own. However, you'll discover that it's hard to run it in production alone, just as it is. The reason is that Docker was built to be an ecosystem around Linux containers, not a production platform. Production is a special case, because it is the concrete environment where everything happens for real. It deserves special treatment, and deploying plain Docker on it is risky, because Docker alone cannot address the particular needs of production.

There are a number of questions, such as how to automatically relaunch a container that has failed and how to autoscale a container based on its CPU utilization, that Docker alone cannot answer. This is the reason why some people were afraid to run Docker-based workloads in production a few years ago.

To answer these questions, we will need a container orchestrator, such as the one discussed in this book: Kubernetes.
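The two questions raised above can be reduced to two small decisions, sketched here in Python. This is a simplified illustration under stated assumptions, not how any particular orchestrator is implemented: the container states are a hypothetical dictionary, and the scaling rule is a plain proportional rule that compares observed CPU utilization with a target.

```python
import math


def containers_to_relaunch(states):
    """Return the names of the containers whose state is 'exited'.

    `states` maps a container name to a state string; this shape is an
    assumption made for the sketch.
    """
    return [name for name, state in states.items() if state == "exited"]


def desired_replicas(current_replicas, current_cpu, target_cpu):
    """Scale the replica count in proportion to CPU usage versus a target.

    Both CPU values are percentages. At least one replica is always kept.
    """
    return max(1, math.ceil(current_replicas * current_cpu / target_cpu))


if __name__ == "__main__":
    # Hypothetical snapshot of a host running two containers.
    print(containers_to_relaunch({"orders": "running", "billing": "exited"}))
    # Two replicas at 90% CPU with a 60% target.
    print(desired_replicas(2, 90, 60))
```

Someone still has to run these checks continuously, on every machine, and act on the result. That ongoing control loop is the job an orchestrator takes over.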